This article, Deploying My First PowerFlex Cluster, walks through all the steps needed to create a nested PowerFlex cluster.

We have written an article explaining some fundamental PowerFlex concepts. We recommend reading it before deploying the PowerFlex cluster!

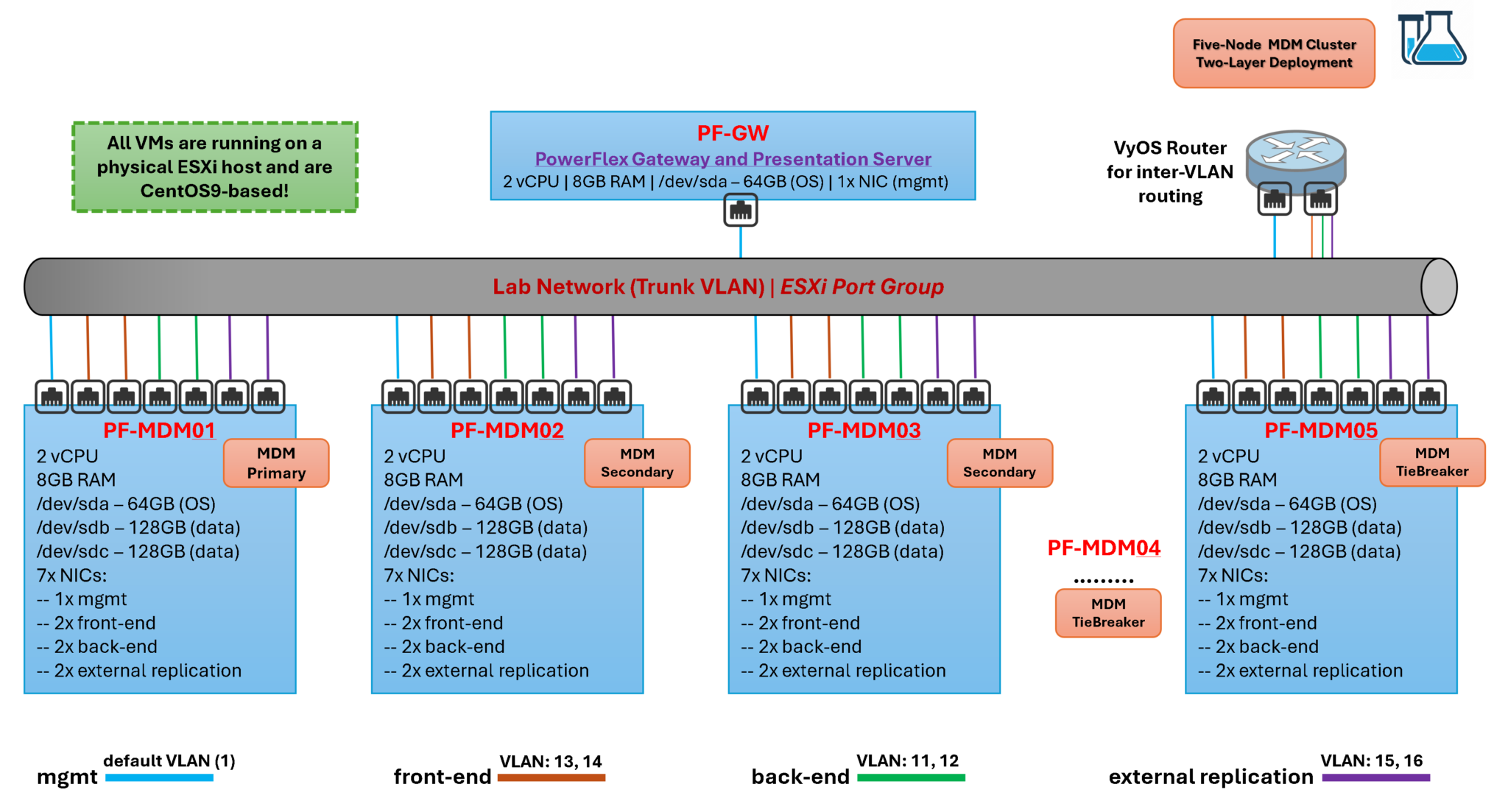

PowerFlex Lab Topology

We use PowerFlex version 3.6.4 to deploy the PowerFlex cluster in this lab.

The cluster is built entirely from virtual machines (VMs).

Note that this nested setup is intended only for labs and for learning how PowerFlex works.

Our physical server is ESXi-based, and all virtual machines use the same Port Group under a vSphere Standard Switch. All virtual machines used in the PowerFlex cluster are CentOS-based.

We are deploying a five-node MDM cluster. Each VM has seven network adapters to segment the traffic types: one for management and two each for front-end, back-end, and external replication traffic. In addition to being part of the MDM cluster, each node runs the SDS, contributing its local devices to the Storage Pool. The VMs' virtual hardware details are shown in the lab topology diagram.

The deployment method is two-layer. Remember, a two-layer deployment separates compute resources from storage resources, allowing compute or storage to be expanded independently. It consists of PowerFlex compute-only nodes (running the SDC) and PowerFlex storage-only nodes (running the SDS). Compute-only nodes host end-user applications, while storage-only nodes contribute storage to the system pool. The PowerFlex Metadata Manager (MDM) runs on the storage-only nodes.

The PowerFlex Gateway and the Presentation Server manage the PowerFlex cluster and provide a graphical user interface for managing and configuring several aspects of it. Running both “roles” on the same virtual machine is not recommended; we run them together here only for demonstration purposes.

To provide router capabilities for our virtual network, we use an instance of the VyOS router. We have written some articles about how to download and configure VyOS 😉

Step-by-Step Configuration of the PowerFlex Nodes

1- (Optional)

By default, the network adapters use the predictable naming scheme, for example:

ens192

ens224

ens161

and so on.

If you prefer, rename each network interface to the traditional “ethX” pattern.

Under the /etc/udev/rules.d directory, create a file “70-persistent-net.rules” and add the following entries:

# Accessing the directory

cd /etc/udev/rules.d/

# Creating the file

vim 70-persistent-net.rules

# Creating the rules to rename each network adapter. Each line is renaming one network adapter:

SUBSYSTEM=="net", ACTION=="add", DRIVERS=="?*", ATTR{address}=="00:50:56:81:ee:a6", ATTR{type}=="1", KERNEL=="ens161", NAME="eth2"

SUBSYSTEM=="net", ACTION=="add", DRIVERS=="?*", ATTR{address}=="00:50:56:81:67:2a", ATTR{type}=="1", KERNEL=="ens162", NAME="eth6"

SUBSYSTEM=="net", ACTION=="add", DRIVERS=="?*", ATTR{address}=="00:50:56:81:6e:6d", ATTR{type}=="1", KERNEL=="ens192", NAME="eth0"

SUBSYSTEM=="net", ACTION=="add", DRIVERS=="?*", ATTR{address}=="00:50:56:81:11:96", ATTR{type}=="1", KERNEL=="ens193", NAME="eth3"

SUBSYSTEM=="net", ACTION=="add", DRIVERS=="?*", ATTR{address}=="00:50:56:81:29:9b", ATTR{type}=="1", KERNEL=="ens225", NAME="eth4"

SUBSYSTEM=="net", ACTION=="add", DRIVERS=="?*", ATTR{address}=="00:50:56:81:01:ed", ATTR{type}=="1", KERNEL=="ens256", NAME="eth1"

SUBSYSTEM=="net", ACTION=="add", DRIVERS=="?*", ATTR{address}=="00:50:56:81:5e:78", ATTR{type}=="1", KERNEL=="ens257", NAME="eth5"

Let’s look at the first line:

SUBSYSTEM=="net", ACTION=="add", DRIVERS=="?*", ATTR{address}=="00:50:56:81:ee:a6", ATTR{type}=="1", KERNEL=="ens161", NAME="eth2"

The network interface recognized as ens161 (MAC address 00:50:56:81:ee:a6) is renamed to eth2. Each rule must specify the MAC address of the corresponding network interface, so adjust the addresses to match your VMs.
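Writing these rules by hand is error-prone, so here is a minimal POSIX shell sketch that prints one rule per adapter in the same format as above. The helper names (udev_rule, udev_rules_from_table) are hypothetical, and the MAC addresses in the example come from our first node; replace them with your VMs' values (for example, as reported by ip link).

```shell
# Hypothetical helper: print one udev rename rule in the format used above.
# $1 = MAC address, $2 = current kernel name, $3 = new name
udev_rule() {
    printf 'SUBSYSTEM=="net", ACTION=="add", DRIVERS=="?*", ATTR{address}=="%s", ATTR{type}=="1", KERNEL=="%s", NAME="%s"\n' \
        "$1" "$2" "$3"
}

# Read "MAC kernel-name new-name" triples from stdin, one per line,
# and print one rule for each non-blank line.
udev_rules_from_table() {
    while read -r mac kname newname; do
        [ -n "$mac" ] || continue   # skip blank lines
        udev_rule "$mac" "$kname" "$newname"
    done
}

# Example (first node, two adapters): prints the rules to stdout.
# Once checked, redirect the output to /etc/udev/rules.d/70-persistent-net.rules.
udev_rules_from_table <<'EOF'
00:50:56:81:6e:6d ens192 eth0
00:50:56:81:01:ed ens256 eth1
EOF
```

Printing to stdout first lets you compare the generated rules against ip link output before writing the rules file.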

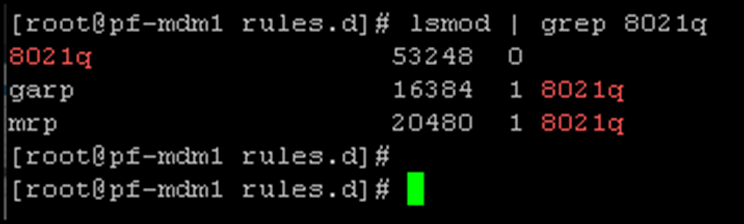

2- Since we will use VLAN interfaces, we must check whether the “8021q” kernel module is loaded.

If it is not loaded, use the following command to load the module:

modprobe 8021q
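The check and the load can be combined so that modprobe runs only when needed. This is a minimal sketch with hypothetical helper names; it reads /proc/modules and assumes, as is typical for distribution kernels, that 8021q is a loadable module rather than built into the kernel.

```shell
# Succeed if module $1 is listed in a /proc/modules-style file
# ($2, defaulting to /proc/modules). Each line starts with "<name> <size> ...".
module_loaded() {
    grep -q "^$1 " "${2:-/proc/modules}"
}

# Load 8021q only if it is not loaded yet (modprobe requires root).
ensure_8021q() {
    if module_loaded 8021q; then
        echo "8021q is already loaded"
    else
        modprobe 8021q && echo "8021q loaded"
    fi
}
```

Run ensure_8021q as root on each node. To have systemd load the module at every boot, you can also list it in a file under /etc/modules-load.d/, for example with echo 8021q > /etc/modules-load.d/8021q.conf.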

3- Setting up the hostname, management IP address, default gateway, DNS, and NTP server:

# Configuring the hostname:

echo "pf-mdm1" > /etc/hostname

# Configuring the management network interface, setting up the IP, network mask, network gateway, and the DNS server:

nmcli connection modify eth0 ipv4.method manual ipv4.addresses 192.168.255.101/24

nmcli connection modify eth0 ipv4.gateway 192.168.255.1

nmcli connection modify eth0 ipv4.dns 192.168.255.3

# Configuring the NTP server (replace 192.168.255.3 with your NTP server IP). The -i option makes sed edit the file in place:

sed -i 's/pool 2.centos.pool.ntp.org iburst/pool 192.168.255.3 iburst/' /etc/chrony.conf

systemctl enable chronyd

# Activating the eth0 network interface and rebooting the system:

nmcli connection up eth0

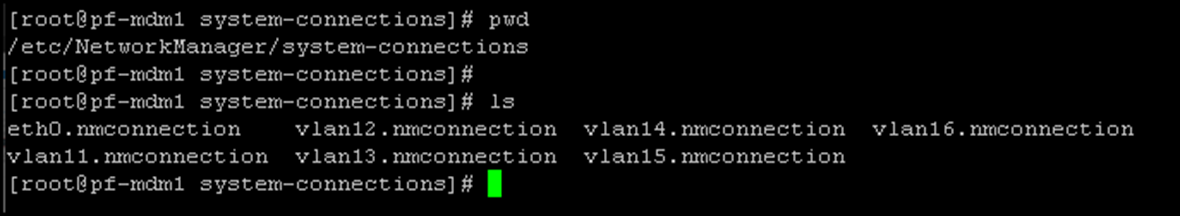

reboot

Note: The “nmcli” network utility automatically saves the network configuration under the /etc/NetworkManager/system-connections/ directory, as shown in the screenshot.

This block of configuration is for the first node. Repeat it on the other nodes, changing the node-specific values (hostname and IP addresses) for each one!

Configuring the “back-end” Interfaces

4- Configuring the back-end interfaces.

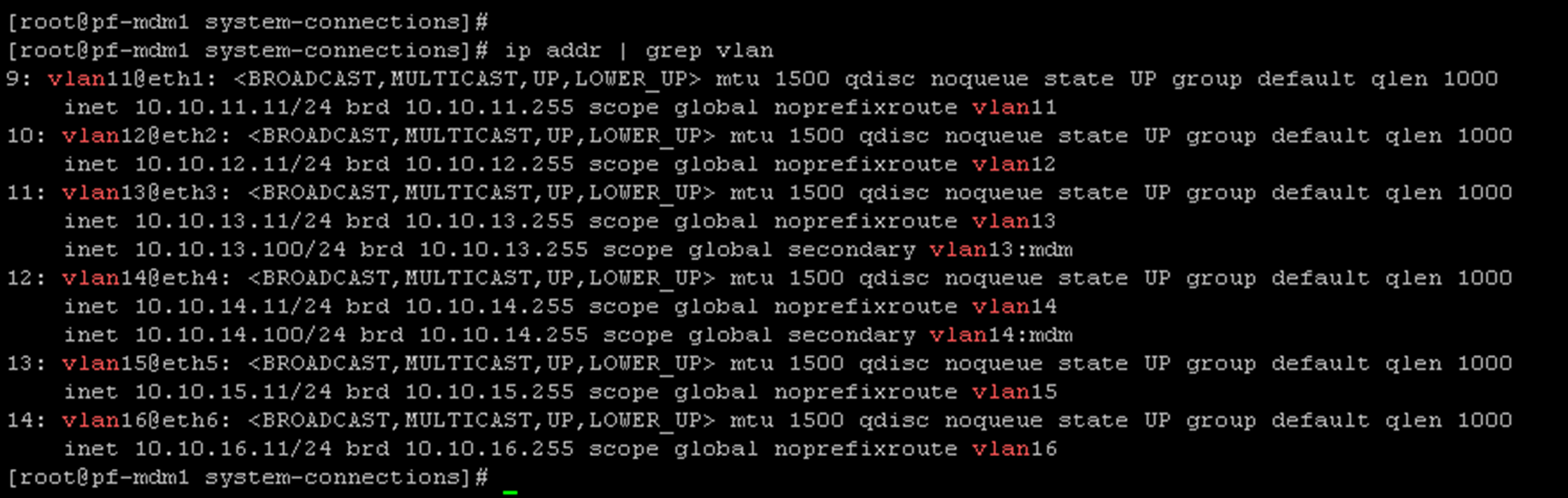

In our case, the physical network interfaces eth1 and eth2 will be used for the back-end traffic. Under each network interface, we will use VLAN 11 and 12, respectively:

eth1 –> vlan11 –> 10.10.11.0/24

eth2 –> vlan12 –> 10.10.12.0/24

# Configuring the vlan11:

nmcli connection add type vlan ifname vlan11 con-name vlan11 dev eth1 id 11

nmcli connection modify vlan11 ipv4.method manual ipv4.addresses 10.10.11.11/24

nmcli connection up vlan11

# Configuring the vlan12:

nmcli connection add type vlan ifname vlan12 con-name vlan12 dev eth2 id 12

nmcli connection modify vlan12 ipv4.method manual ipv4.addresses 10.10.12.11/24

nmcli connection up vlan12

Configuring the “front-end” Interfaces

5- Configuring the front-end interfaces.

In our case, the physical network interfaces eth3 and eth4 will be used for the front-end traffic. Under each network interface, we will use VLAN 13 and 14, respectively:

eth3 –> vlan13 –> 10.10.13.0/24

eth4 –> vlan14 –> 10.10.14.0/24

# Configuring the vlan13:

nmcli connection add type vlan ifname vlan13 con-name vlan13 dev eth3 id 13

nmcli connection modify vlan13 ipv4.method manual ipv4.addresses 10.10.13.11/24

nmcli connection up vlan13

# Configuring the vlan14:

nmcli connection add type vlan ifname vlan14 con-name vlan14 dev eth4 id 14

nmcli connection modify vlan14 ipv4.method manual ipv4.addresses 10.10.14.11/24

nmcli connection up vlan14

Note: The cluster virtual IPs (10.10.13.100 and 10.10.14.100) belong to these front-end networks.
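Because the virtual IPs must live on the front-end networks, a quick check that each VIP falls inside its front-end subnet can catch typos before deployment. This is a minimal POSIX shell sketch; ip_to_int and ip_in_cidr are hypothetical helper names, and only IPv4 is handled.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1          # split "a.b.c.d" into $1..$4
    IFS=$old_ifs
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Succeed if IPv4 address $1 lies inside CIDR block $2 (e.g. 10.10.13.0/24).
ip_in_cidr() {
    net=${2%/*}
    bits=${2#*/}
    # Build the netmask; the final & keeps it to 32 bits on 64-bit shells.
    mask=$(( bits == 0 ? 0 : (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    [ $(( $(ip_to_int "$1") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}
```

For example, ip_in_cidr 10.10.13.100 10.10.13.0/24 && echo "VIP OK" confirms the first VIP, and the same call with 10.10.14.100 and 10.10.14.0/24 confirms the second.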

6- (Optional)

Configuring the external replication (SDR) interfaces.

In our case, the physical network interfaces eth5 and eth6 will be used for the external replication traffic. Under each network interface, we will use VLAN 15 and 16, respectively:

eth5 –> vlan15 –> 10.10.15.0/24

eth6 –> vlan16 –> 10.10.16.0/24

# Configuring the vlan15:

nmcli connection add type vlan ifname vlan15 con-name vlan15 dev eth5 id 15

nmcli connection modify vlan15 ipv4.method manual ipv4.addresses 10.10.15.11/24

nmcli connection up vlan15

# Configuring the vlan16:

nmcli connection add type vlan ifname vlan16 con-name vlan16 dev eth6 id 16

nmcli connection modify vlan16 ipv4.method manual ipv4.addresses 10.10.16.11/24

nmcli connection up vlan16

7- After setting up all network interfaces, run the following command to list them:

ip addr | grep vlan

Note: The management interface (eth0) does not appear because it is not a VLAN interface!
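Instead of scanning the grep output by eye, the check can report which VLAN interfaces are missing an IPv4 address. This is a minimal sketch; missing_vlans is a hypothetical helper that parses the one-line format of ip -o -4 addr show, in which the second field is the interface name and the fourth is the address.

```shell
# Read `ip -o -4 addr show` output on stdin and print every expected
# interface ($@) that has no IPv4 address. Returns 1 if any is missing.
missing_vlans() {
    # Collect interface names from lines whose third field is "inet";
    # strip any "@parent" suffix just in case it is present.
    present=$(awk '$3 == "inet" { sub(/@.*/, "", $2); print $2 }')
    rc=0
    for want in "$@"; do
        if ! printf '%s\n' "$present" | grep -qx "$want"; then
            echo "missing: $want"
            rc=1
        fi
    done
    return $rc
}
```

On a node that includes the optional replication VLANs, run ip -o -4 addr show | missing_vlans vlan11 vlan12 vlan13 vlan14 vlan15 vlan16; no output and a zero exit status mean every VLAN has an address.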

8- In our lab, VLANs 13 and 14 carry the front-end traffic. This means the Storage Data Client (SDC) consumes the volumes from the Storage Data Server (SDS) through these IPs. Since the default gateway is already configured on the management interface, we configure policy-based routing (PBR) for both front-end VLANs. This ensures that traffic sourced from each front-end VLAN leaves through that VLAN's own gateway instead of the management default gateway. We need this only because our SDCs are not on the same front-end networks; in a real-world implementation, this step is not necessary:

echo "13 vlan13" >> /etc/iproute2/rt_tables

echo "14 vlan14" >> /etc/iproute2/rt_tables

Edit the “/etc/rc.d/rc.local” file and add the following content:

ip rule add from 10.10.13.0/24 table vlan13

ip route add default via 10.10.13.1 dev vlan13 table vlan13

ip rule add from 10.10.14.0/24 table vlan14

ip route add default via 10.10.14.1 dev vlan14 table vlan14

Add the execute permission to the file and reboot the system:

chmod +x /etc/rc.d/rc.local

reboot

Remember, VLANs 13 and 14 are the VLANs used by the front-end traffic in our lab.

Each VLAN is mapped to 10.10.13.0/24 and 10.10.14.0/24, respectively!

The “/etc/rc.d/rc.local” script is executed on each boot, adding the policy rules and the default routes to the custom routing table of each VLAN!
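The PBR entries follow a fixed pattern per front-end VLAN, so they can be generated instead of typed. This is a minimal sketch with hypothetical helper names, assuming this lab's convention: VLAN id uses 10.10.id.0/24, its gateway is 10.10.id.1, and its routing table is named vlanid with number id.

```shell
# Print the /etc/iproute2/rt_tables entry for VLAN $1 (table number = VLAN ID).
rt_table_entry() {
    echo "$1 vlan$1"
}

# Print the policy rule and default route that rc.local runs for VLAN $1.
pbr_lines() {
    id=$1
    # Packets whose source address is in the VLAN subnet use table vlan<id> ...
    echo "ip rule add from 10.10.$id.0/24 table vlan$id"
    # ... whose only route is the default via that VLAN's own gateway.
    echo "ip route add default via 10.10.$id.1 dev vlan$id table vlan$id"
}
```

Running for id in 13 14; do rt_table_entry "$id"; done and for id in 13 14; do pbr_lines "$id"; done prints the same lines used in step 8. Traffic from any other source address keeps using the main table and the management gateway. Recent NetworkManager versions can also store such rules in the connection profile (the ipv4.routing-rules property); this article keeps the rc.local approach.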

Do the same steps on all five nodes!
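Repeating steps 3 to 6 on five nodes means changing only the hostname and the last octet of each IP. The sketch below prints those commands for a given node number so they can be reviewed before running. node_cmds is a hypothetical helper, and it assumes this lab's convention: hostname pf-mdmN, management IP 192.168.255.(100+N), and host address 10+N on every VLAN subnet.

```shell
# Hypothetical helper: print the network commands of steps 3 to 6 for node N (1-5).
# It only prints commands; nothing is executed.
node_cmds() {
    n=$1
    case "$n" in
        [1-5]) ;;
        *) echo "node number must be between 1 and 5" >&2; return 1 ;;
    esac

    # Step 3: hostname and management interface (lab gateway and DNS values)
    echo "echo \"pf-mdm$n\" > /etc/hostname"
    echo "nmcli connection modify eth0 ipv4.method manual ipv4.addresses 192.168.255.$((100 + n))/24"
    echo "nmcli connection modify eth0 ipv4.gateway 192.168.255.1"
    echo "nmcli connection modify eth0 ipv4.dns 192.168.255.3"

    # Steps 4 to 6: back-end (11, 12), front-end (13, 14), replication (15, 16)
    for pair in eth1:11 eth2:12 eth3:13 eth4:14 eth5:15 eth6:16; do
        parent=${pair%%:*}
        id=${pair#*:}
        echo "nmcli connection add type vlan ifname vlan$id con-name vlan$id dev $parent id $id"
        echo "nmcli connection modify vlan$id ipv4.method manual ipv4.addresses 10.10.$id.$((10 + n))/24"
        echo "nmcli connection up vlan$id"
    done
}
```

For example, on the second node, node_cmds 2 > /tmp/node2.sh writes the commands to a file; review it, then run sh /tmp/node2.sh. Drop the eth5:15 and eth6:16 entries if you skip the optional replication VLANs. The NTP, PBR, and reboot steps are not included.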

Install and Configure the PowerFlex Gateway

The PowerFlex Gateway includes the PowerFlex Installer, the PowerFlex REST API gateway, and the SNMP trap sender. It can be installed on Linux or Windows, and a Gateway installed on Windows can deploy PowerFlex system components on both Linux- and Windows-based servers:

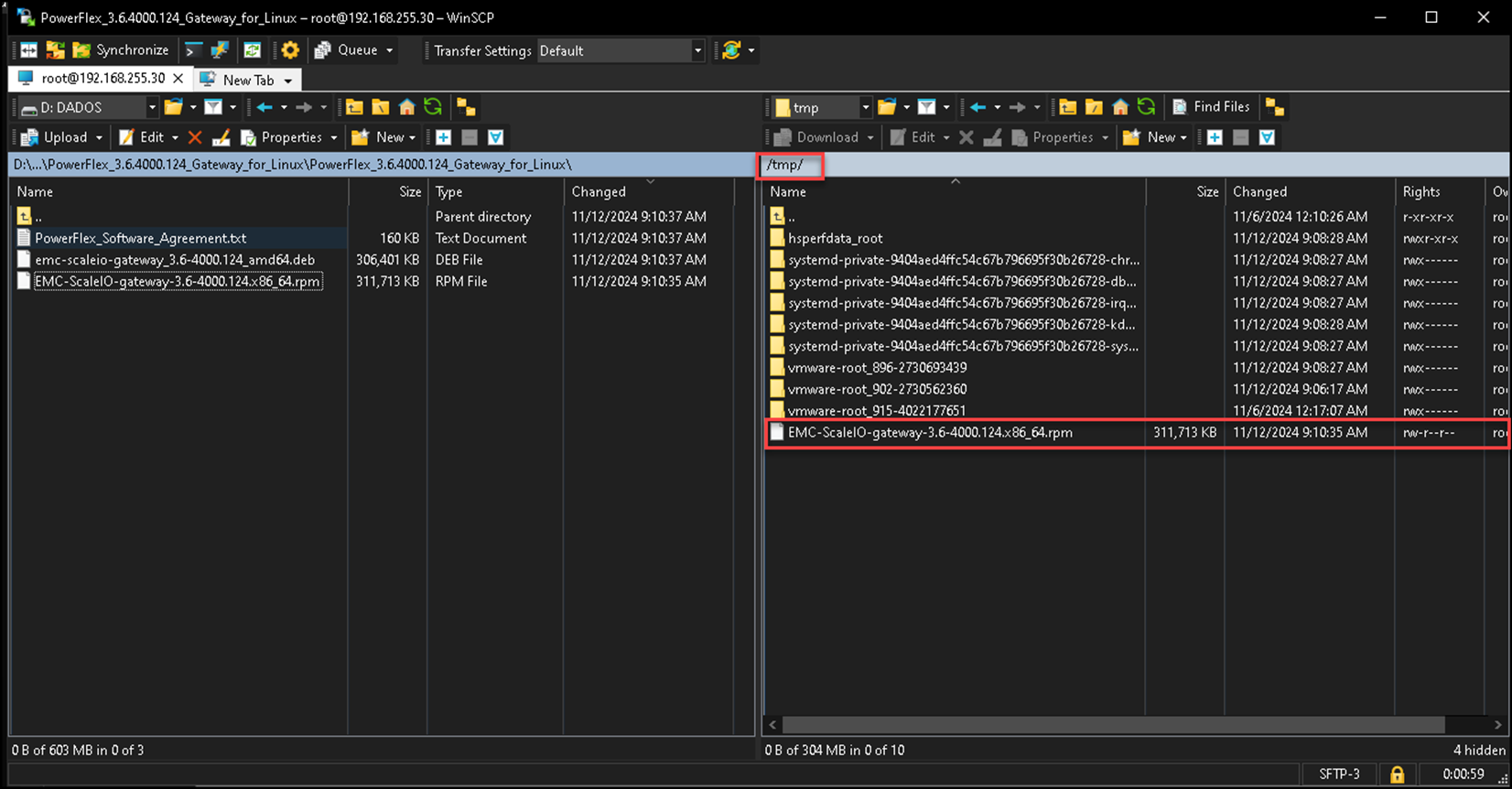

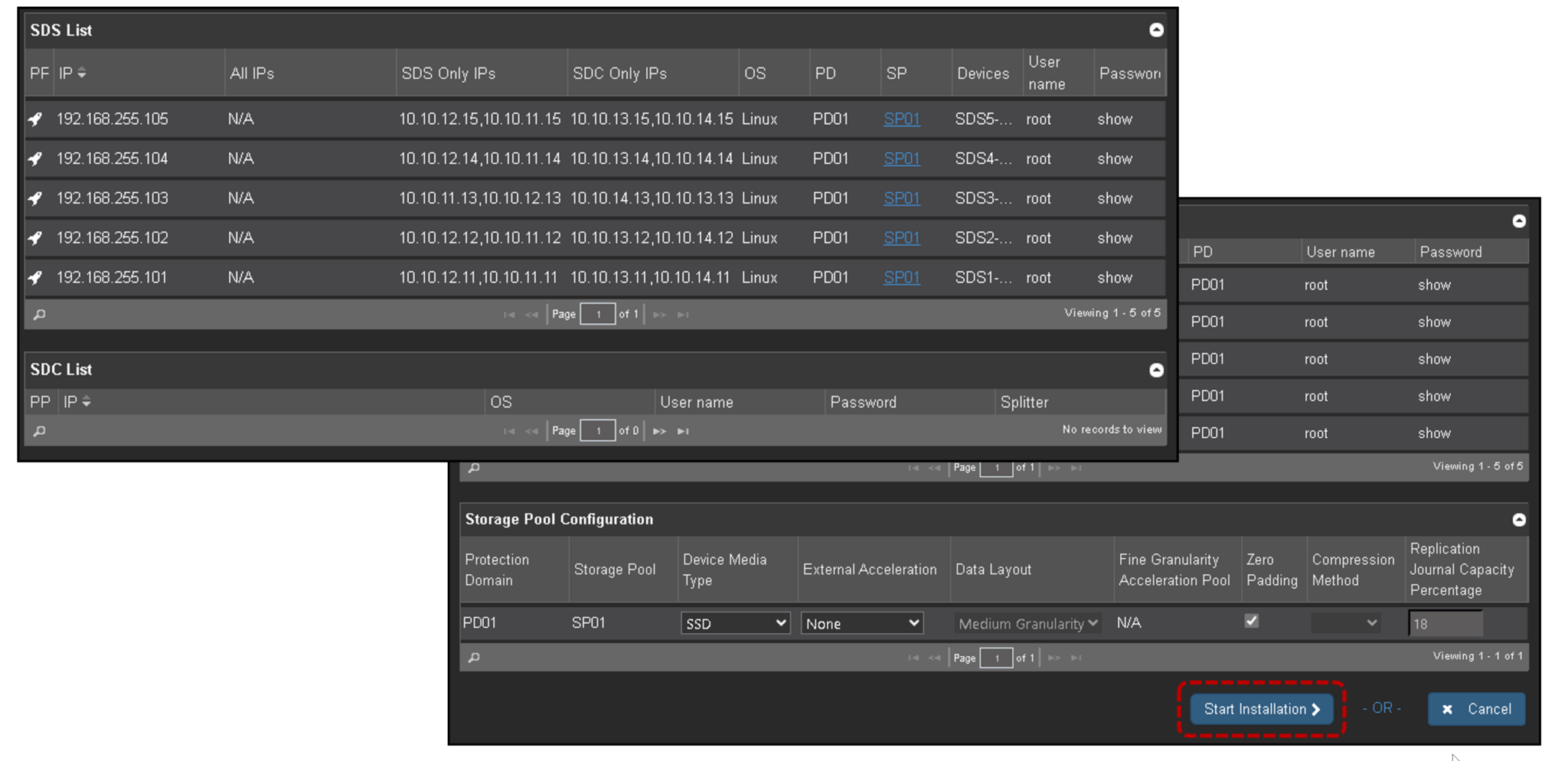

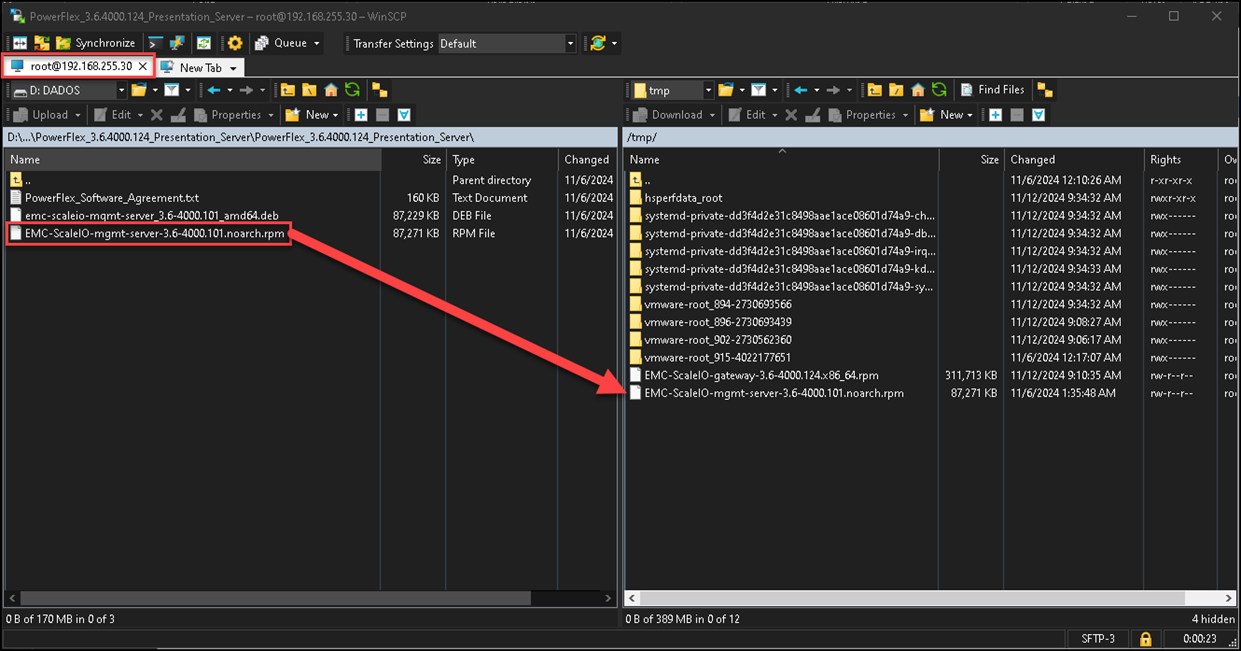

1- Copy the PowerFlex Gateway package from the extracted download to the server on which you will install it. The recommended location on the server is the /tmp/ directory.

2- Install the prerequisites and the PowerFlex Gateway on the Linux server by running the following commands (the rpm command must be entered on one line). In this example, we use CentOS Stream 9:

# Installing some necessary packages:

dnf update -y

dnf install epel-release -y

dnf install java-1.8.0-openjdk java-1.8.0-openjdk-devel -y

java -version

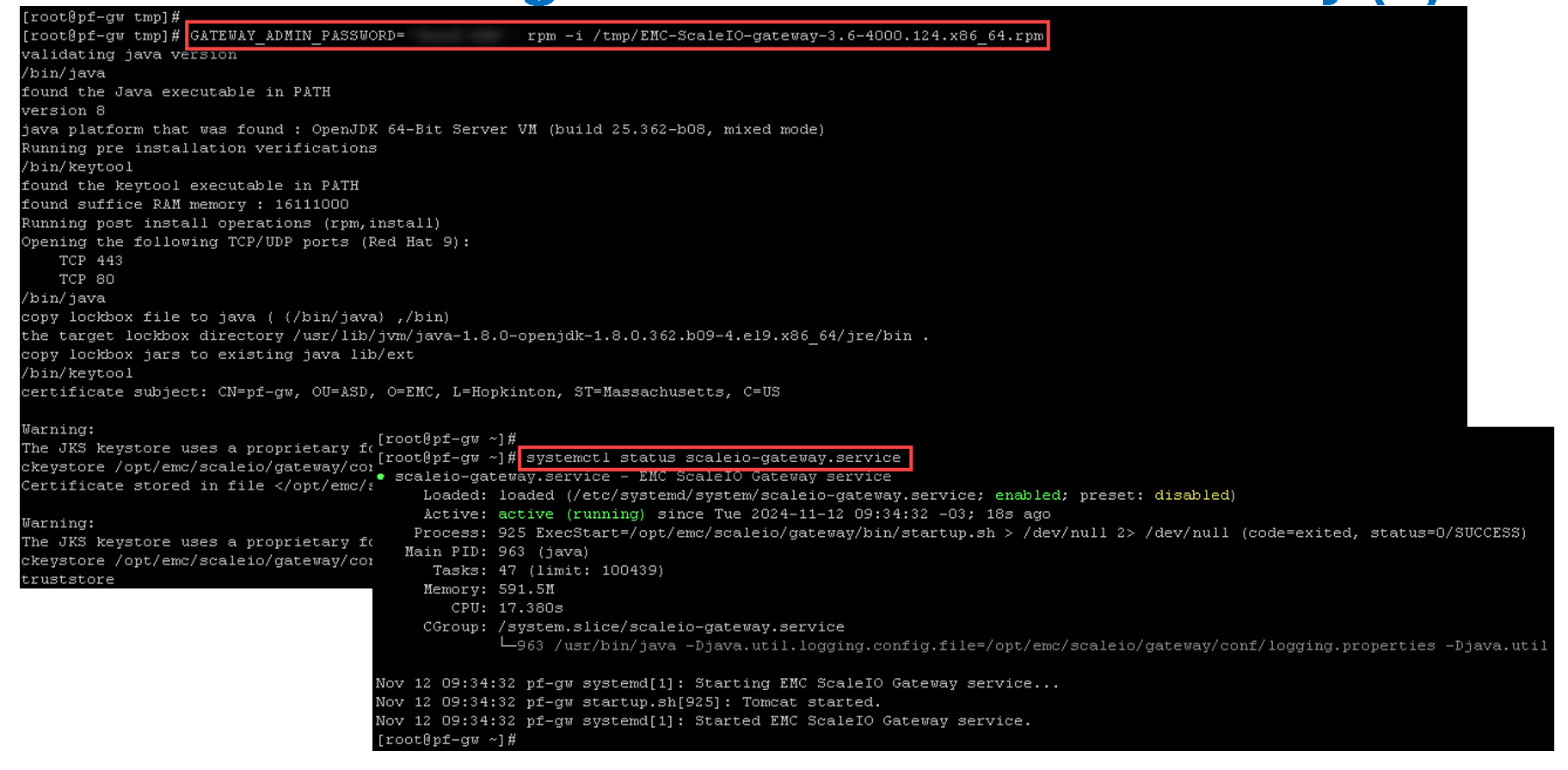
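To check the Java version in a script rather than by reading the output, the sketch below extracts the major version from the text printed by java -version. java_major is a hypothetical helper. java -version writes to standard error, hence the 2>&1 in the usage line. We assume the Gateway relies on the Java 8 runtime installed above.

```shell
# Read `java -version` output on stdin and print the Java major version:
# "1.8.0_412" -> 8 (legacy numbering), "11.0.22" -> 11, "17.0.10" -> 17
java_major() {
    v=$(sed -n 's/.*version "\([^"]*\)".*/\1/p' | head -n 1)
    case "$v" in
        1.*) v=${v#1.}; echo "${v%%.*}" ;;   # drop the legacy "1." prefix
        *)   echo "${v%%[._-]*}" ;;
    esac
}
```

After the dnf commands above, java -version 2>&1 | java_major should print 8.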

# Installing the Gateway package. Replace "<new_GW_admin_password>" with the desired admin password:

GATEWAY_ADMIN_PASSWORD=<new_GW_admin_password> rpm -i /tmp/EMC-ScaleIO-gateway-3.6-4000.124.x86_64.rpm

Copying the rpm package from the local machine to the PowerFlex Gateway virtual machine:

Installing the rpm package and checking the gateway service status (scaleio-gateway.service):

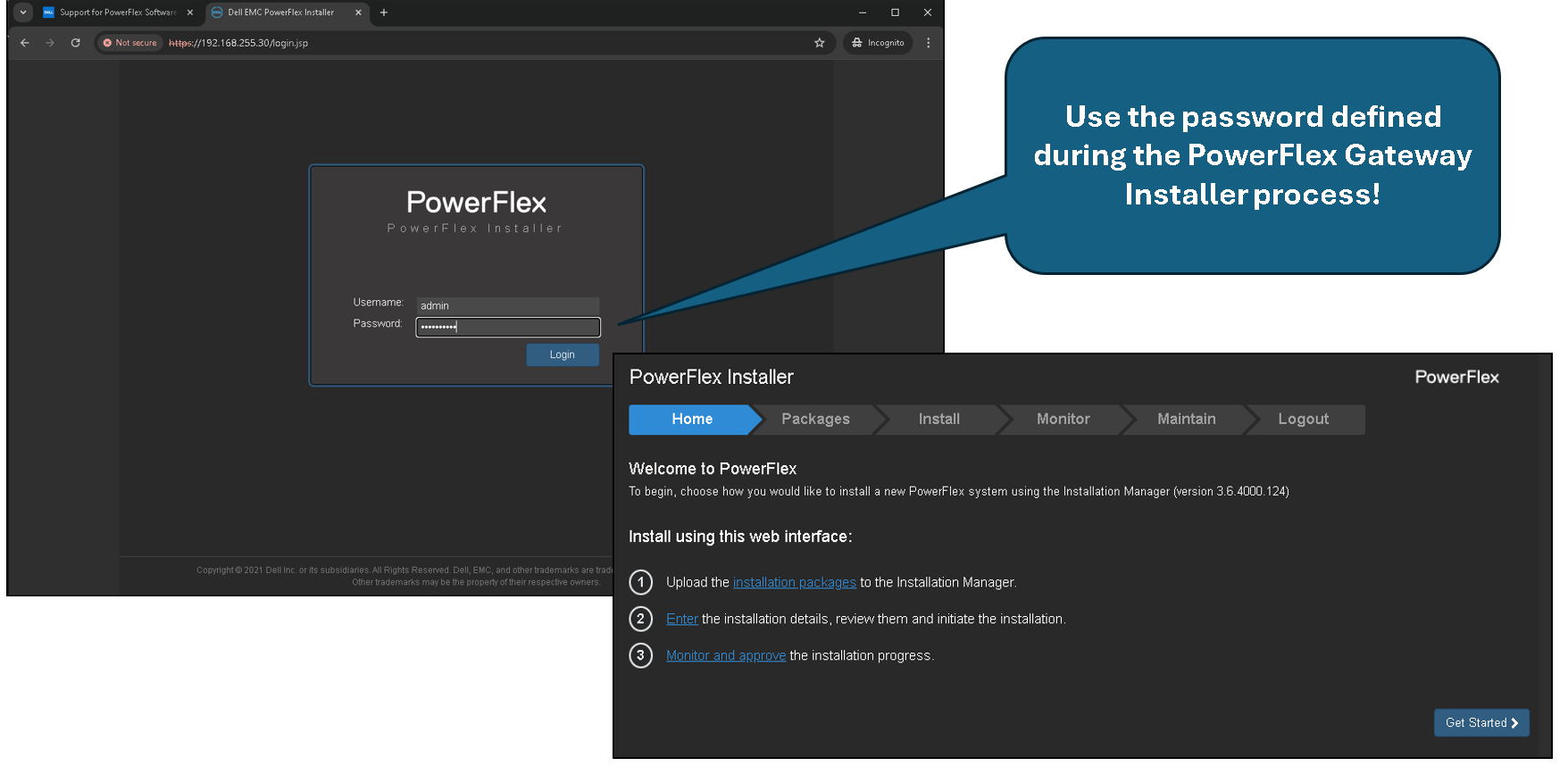

Afterward, we can access the PowerFlex Gateway:

https://IP_POWERFLEX_GW

At this point, the PowerFlex Gateway has been deployed successfully!

Deploy the PowerFlex Cluster

As mentioned earlier, we are using PowerFlex 3.6.4 in this lab.

The first step is to download all packages from support.dell.com.

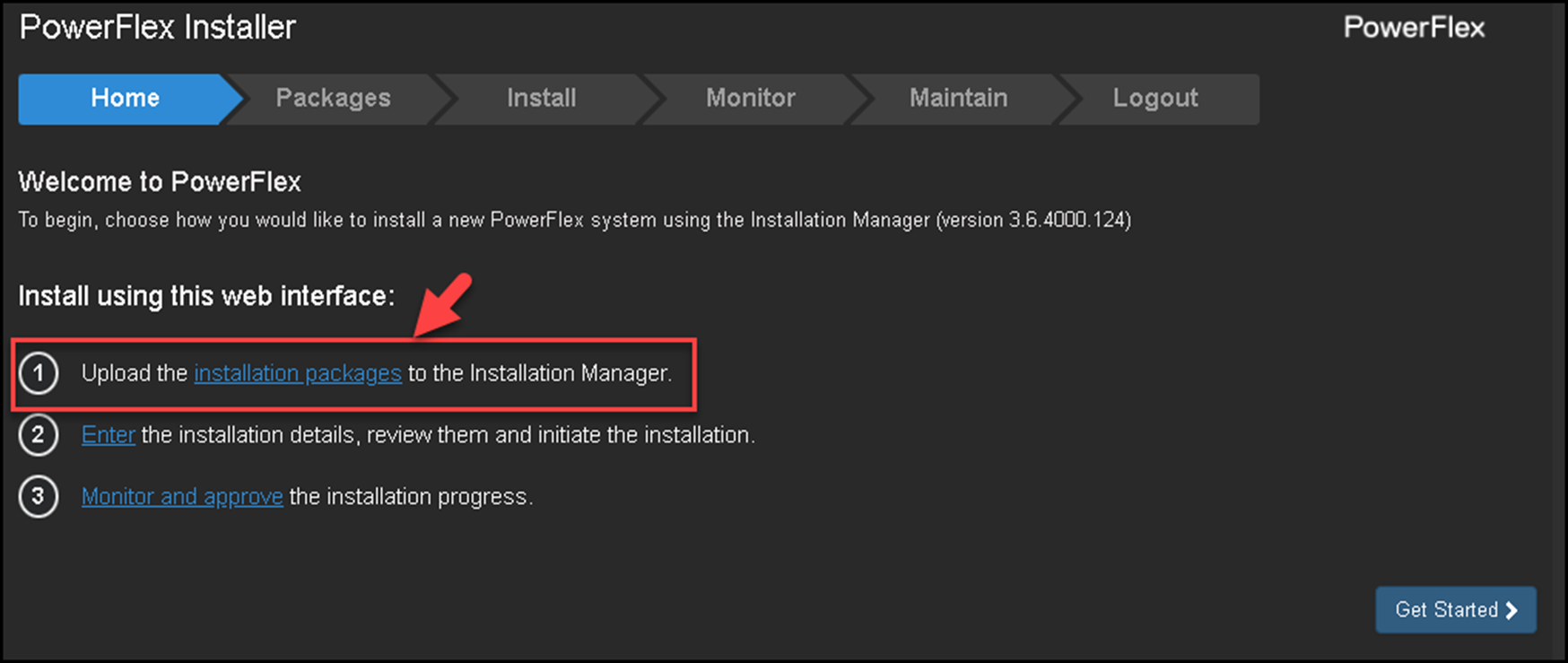

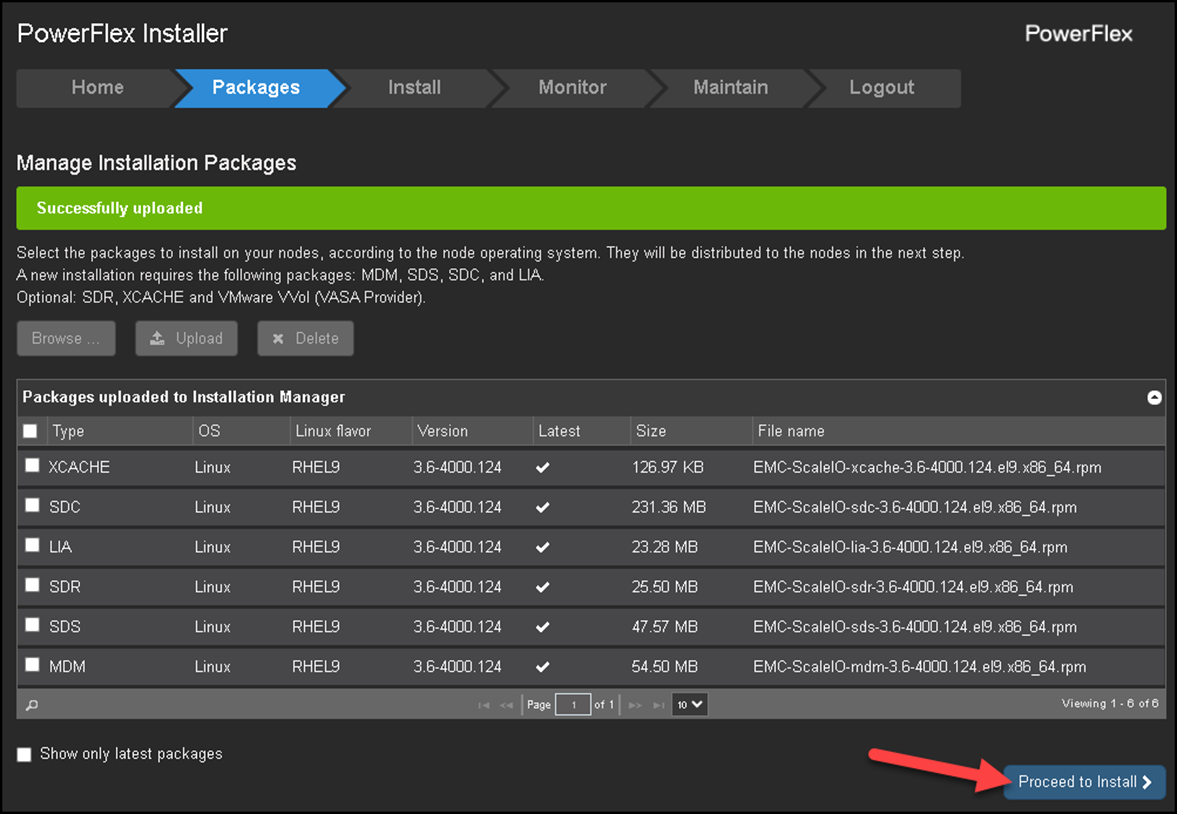

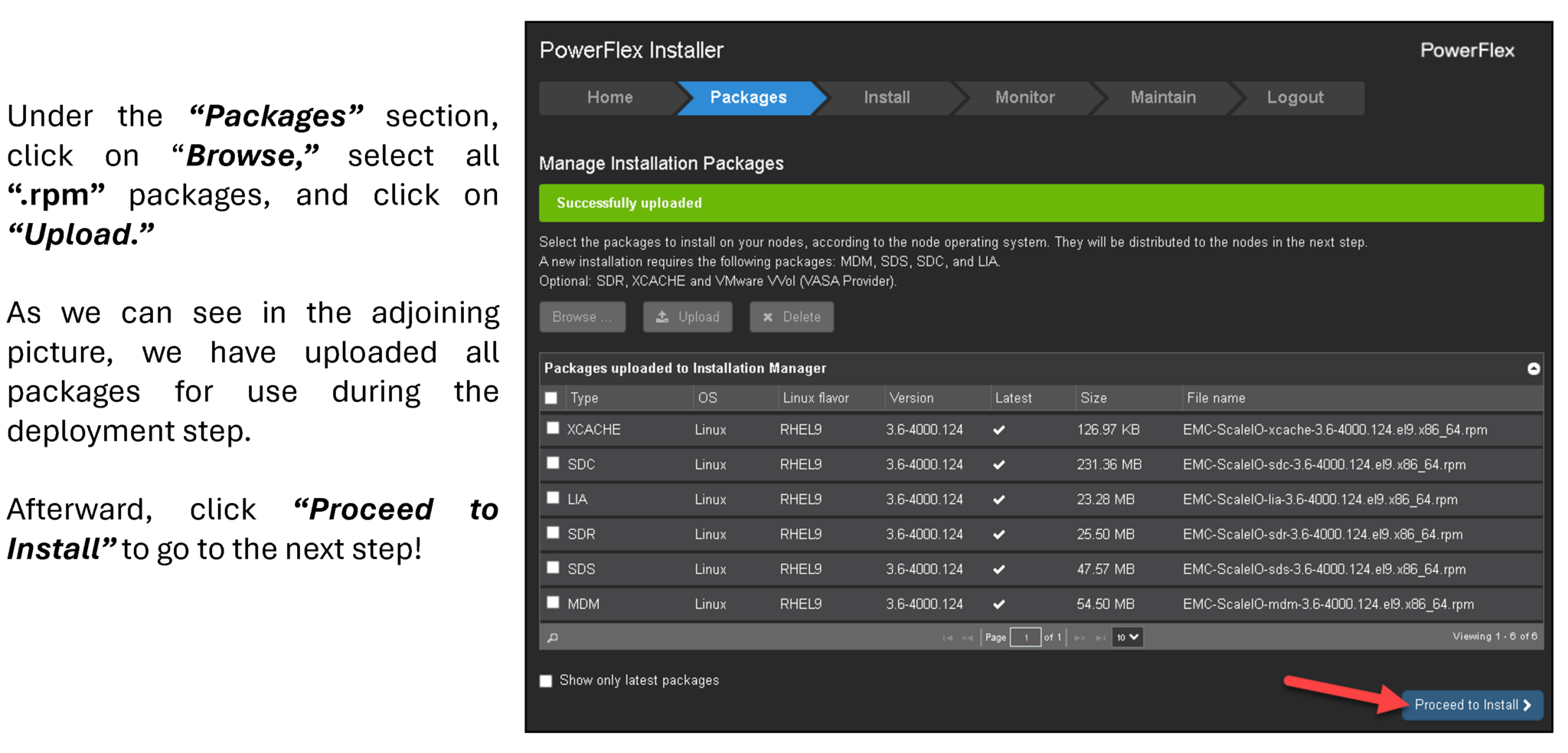

The second step is to access the PowerFlex Gateway interface and upload all installation packages, as we can see in the following figure:

Under the “Packages” section, click on “Browse,” select all “.rpm” packages, and click on “Upload.”

As we can see in the adjoining picture, we have uploaded all packages for use during the deployment step.

Afterward, click “Proceed to Install” to go to the next step:

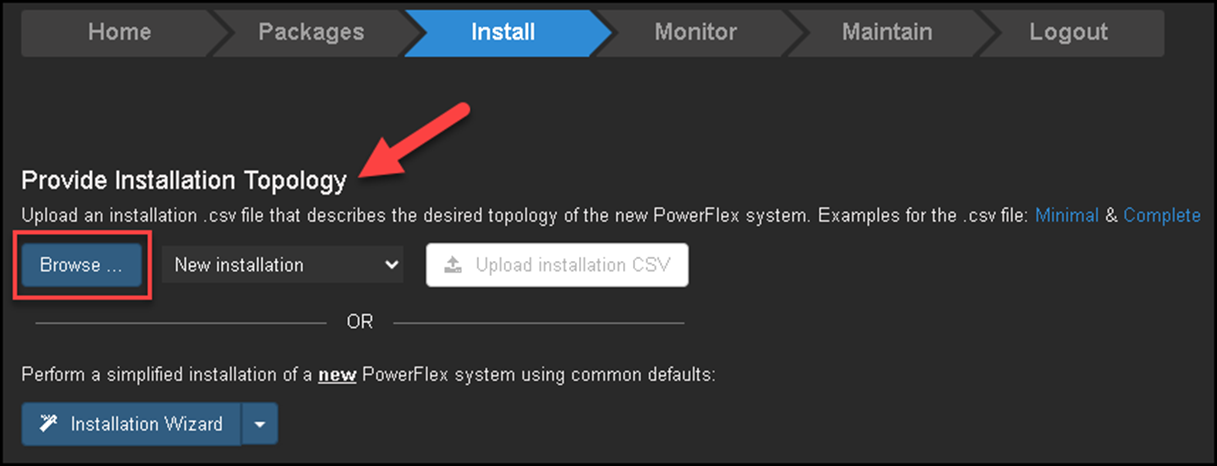

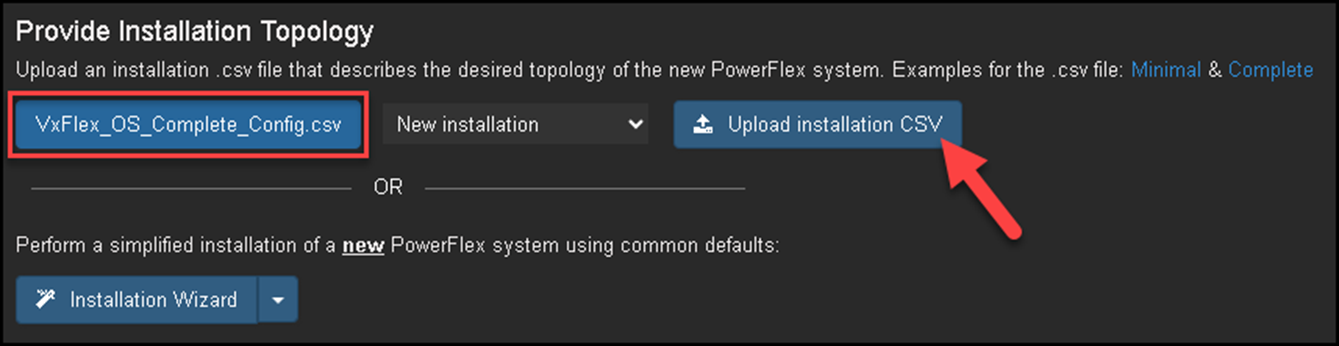

The “Install” section offers two ways to deploy PowerFlex:

— The first way is to provide an installation topology (CSV) file. This is a custom method to deploy;

— The second way is to use the installation wizard.

We will provide the installation topology file for this deployment.

Access the PowerFlex Gateway:

Under Install, click on Browse and select the CSV file:

Note: I have shared a copy of the CSV file that I used for this deployment:

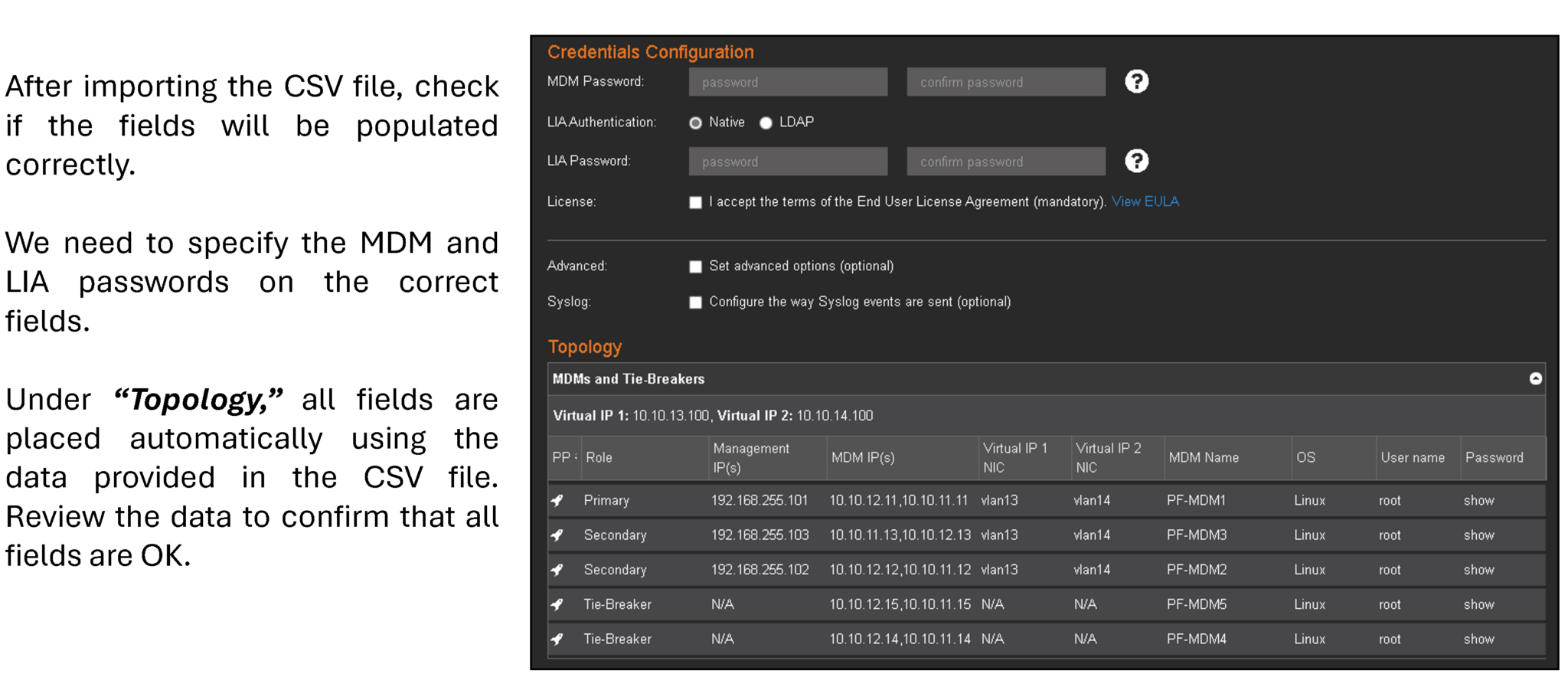

Review all populated details and click on “Start Installation”:

The installation process is straightforward. Follow the next steps and wait for the installation to complete!
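Once the installation completes, the first line of the cluster summary returned by scli --query_cluster (see the example output that follows) reports the cluster State and how many members are Active. This is a minimal sketch, assuming that line keeps the format shown in the example. cluster_healthy is a hypothetical helper, and it treats any State other than Normal as unhealthy.

```shell
# Read `scli --query_cluster` output on stdin. Succeed only if the cluster
# line reports "State: Normal" and all members are active (e.g. "Active: 5/5").
cluster_healthy() {
    awk '
        /Name: .*Mode: .*State: / && !seen {
            seen = 1
            if ($0 !~ /State: Normal,/) bad = 1
            if (match($0, /Active: [0-9]+\/[0-9]+/)) {
                # Skip the 8-character "Active: " prefix, then compare "x/y".
                split(substr($0, RSTART + 8, RLENGTH - 8), n, "/")
                if (n[1] != n[2]) bad = 1
            } else {
                bad = 1
            }
        }
        END { exit !(seen && !bad) }
    '
}
```

After scli --login, run scli --query_cluster | cluster_healthy && echo "cluster is healthy"; with the example output, the check passes (Normal, 5/5).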

Afterward, access the primary MDM node and get the cluster details:

scli --login --username admin

scli --query_cluster

Example:

[root@pf-mdm2 ~]# scli --query_cluster

Cluster:

Name: PF5NodeCluster, Mode: 5_node, State: Normal, Active: 5/5, Replicas: 3/3

SDC refresh with MDM IP addresses: disabled

Virtual IP Addresses: 10.10.13.100, 10.10.14.100

Master MDM:

Name: PF-MDM2, ID: 0x0cbf1ddc1448a902

IP Addresses: 10.10.12.12, 10.10.11.12, Management IP Addresses: 192.168.255.102, Port: 9011, Virtual IP interfaces: vlan13, vlan14

Status: Normal, Version: 3.6.4000

Slave MDMs:

Name: PF-MDM1, ID: 0x05313fc85a6b1400

IP Addresses: 10.10.12.11, 10.10.11.11, Management IP Addresses: 192.168.255.101, Port: 9011, Virtual IP interfaces: vlan13, vlan14

Status: Normal, Version: 3.6.4000

Name: PF-MDM3, ID: 0x554d3f32355b8901

IP Addresses: 10.10.11.13, 10.10.12.13, Management IP Addresses: 192.168.255.103, Port: 9011, Virtual IP interfaces: vlan13, vlan14

Status: Normal, Version: 3.6.4000

Tie-Breakers:

Name: PF-MDM4, ID: 0x2bd3d2110b863b04

IP Addresses: 10.10.12.14, 10.10.11.14, Port: 9011

Status: Normal, Version: 3.6.4000

Name: PF-MDM5, ID: 0x4ea9ca512f887103

IP Addresses: 10.10.12.15, 10.10.11.15, Port: 9011

Status: Normal, Version: 3.6.4000

Install and Configure the PowerFlex Presentation Server

The Presentation Server is an essential component that serves as the User Interface (UI) and API layer, facilitating management and monitoring of the PowerFlex environment.

It hosts the PowerFlex UI and APIs, allowing administrators to manage resources, configure settings, and view performance metrics.

As a bridge between users and backend services, it ensures a streamlined and centralized point for control and data visualization across storage, compute, and network components in a PowerFlex deployment.

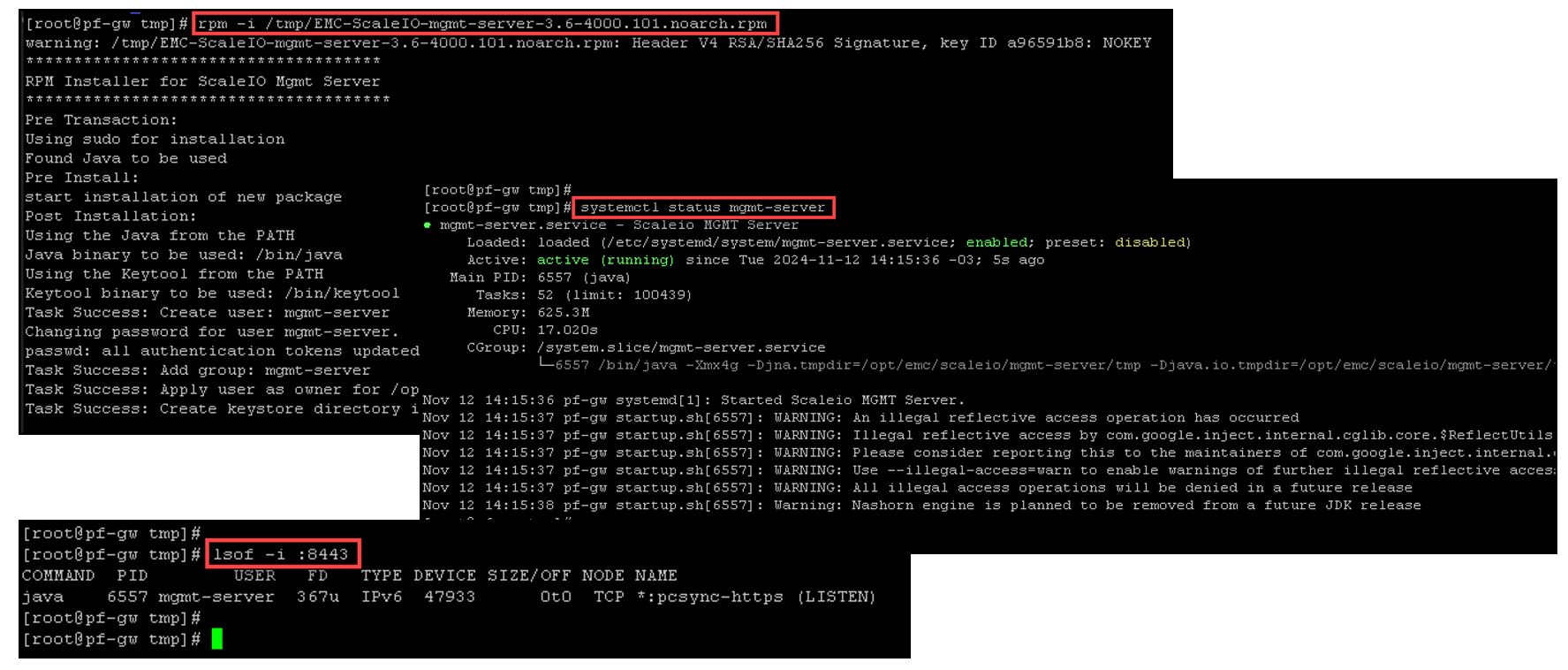

1- Copying the rpm package (mgmt-server) from the local machine to the server:

2- Installing the Java package:

yum install -y java-11-openjdk

3- Installing the rpm package and checking the service status:

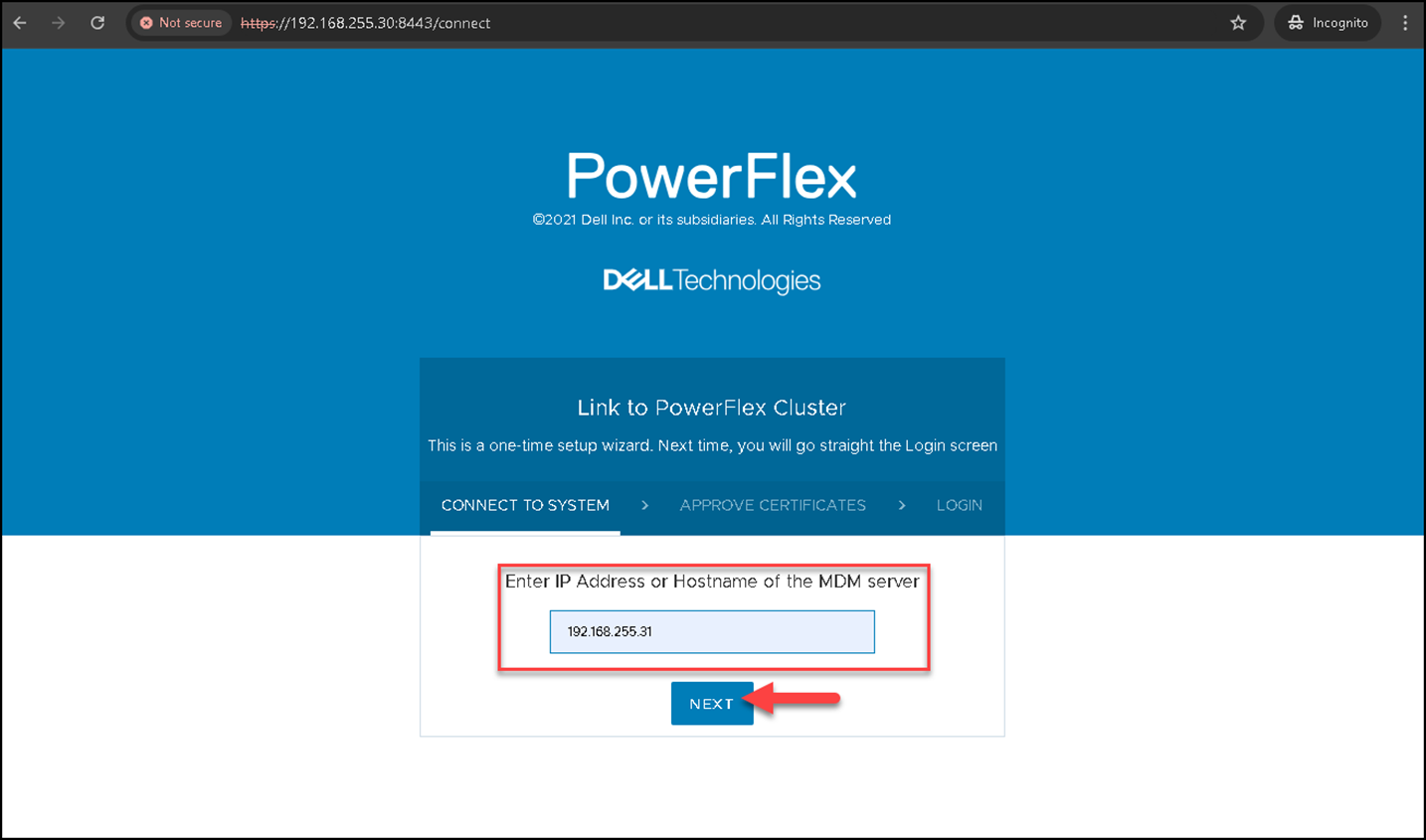

4- To access the PowerFlex Presentation Server, use the following address:

https://IP_PRESENTATION_SERVER:8443

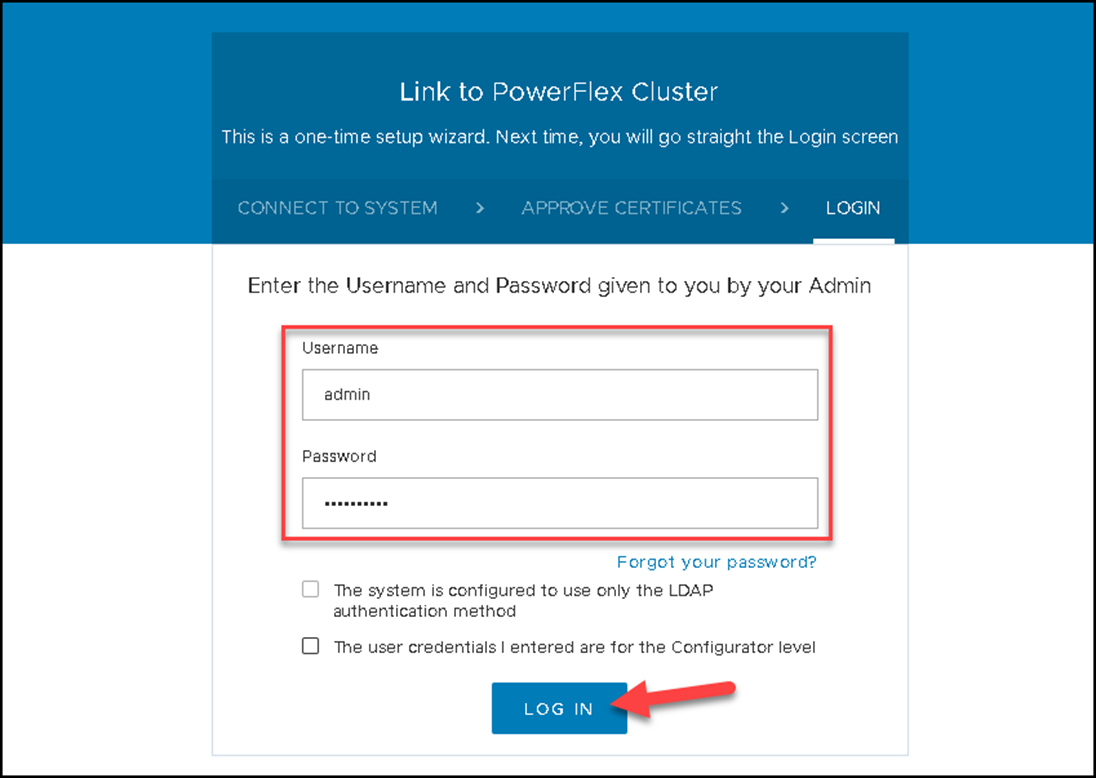

To manage an MDM cluster through the Presentation Server, we must specify the MDM Primary IP and click on NEXT, as we can see in the following example:

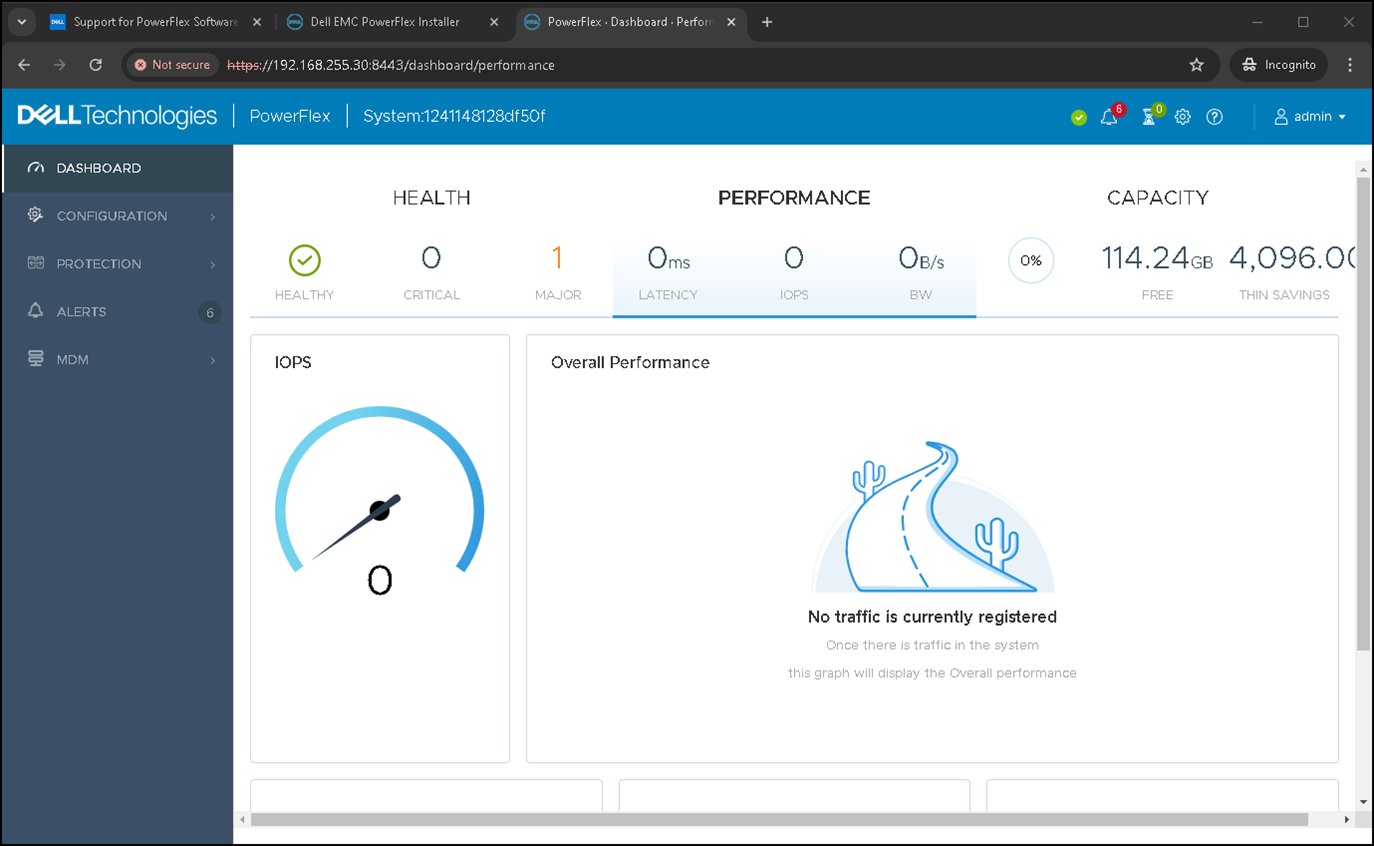

Here we are 🙂

This is the Presentation Server dashboard. We will explore it in more detail later.

Deploy a Windows-Based Storage Data Client (SDC)

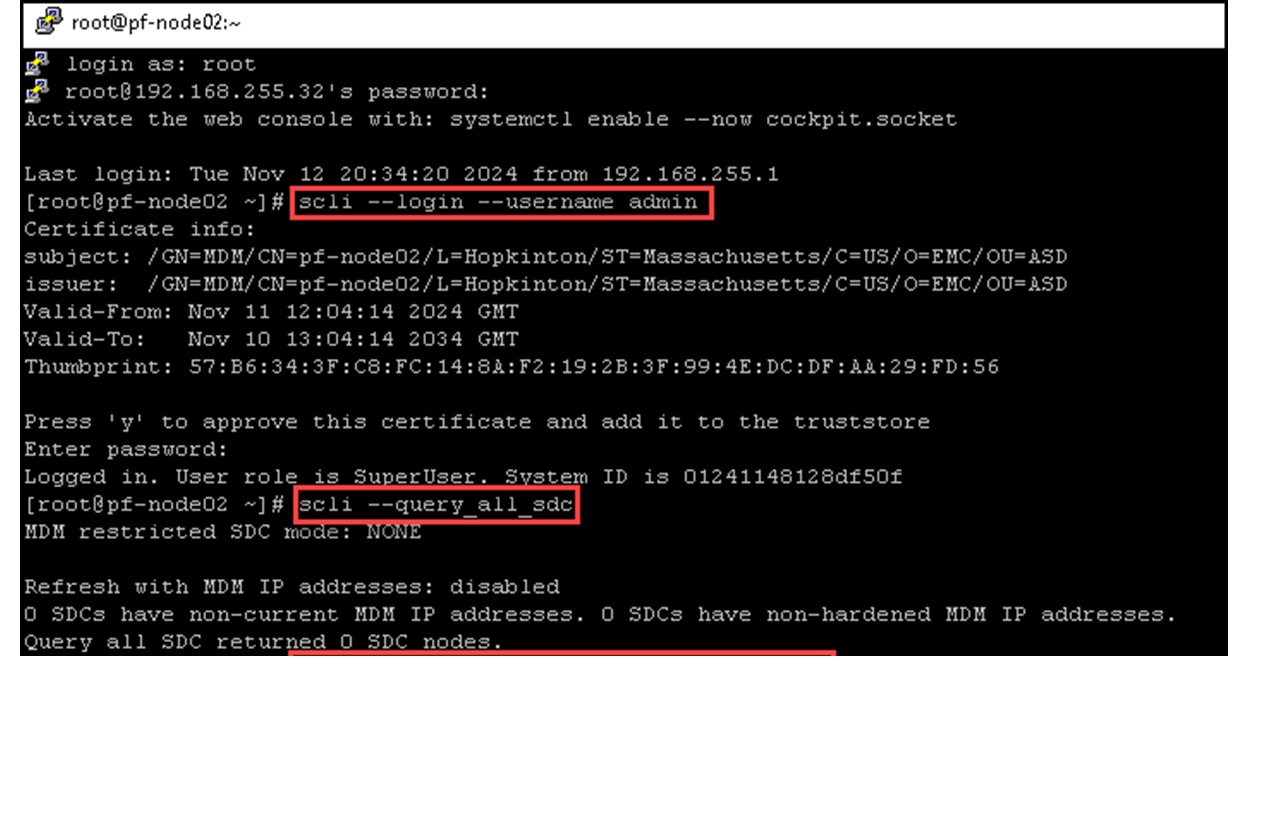

As we can see, we do not have any SDC connected to our PowerFlex cluster:

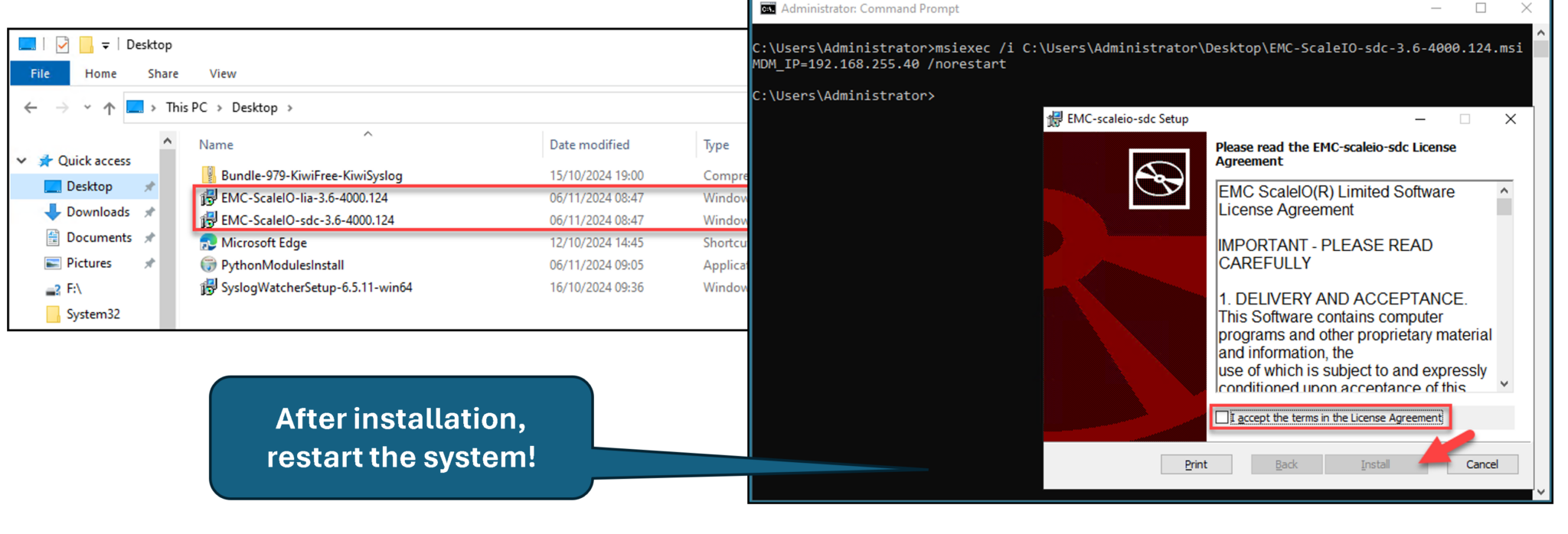

We will install the SDC and LIA packages on a Windows Server system. Copy both packages to the Windows system, open a command prompt (cmd), and run the following commands to install each package:

# Installing the SDC package - change the MDM IP 192.168.255.40 to your MDM IP or Virtual IP

msiexec /i C:\Users\Administrator\Desktop\EMC-ScaleIO-sdc-3.6-4000.124.msi MDM_IP=192.168.255.40 /norestart

# Installing the LIA package - set the LIA password in the "TOKEN" property:

msiexec /i C:\Users\Administrator\Desktop\EMC-ScaleIO-lia-3.6-4000.124.msi TOKEN="lia_password"

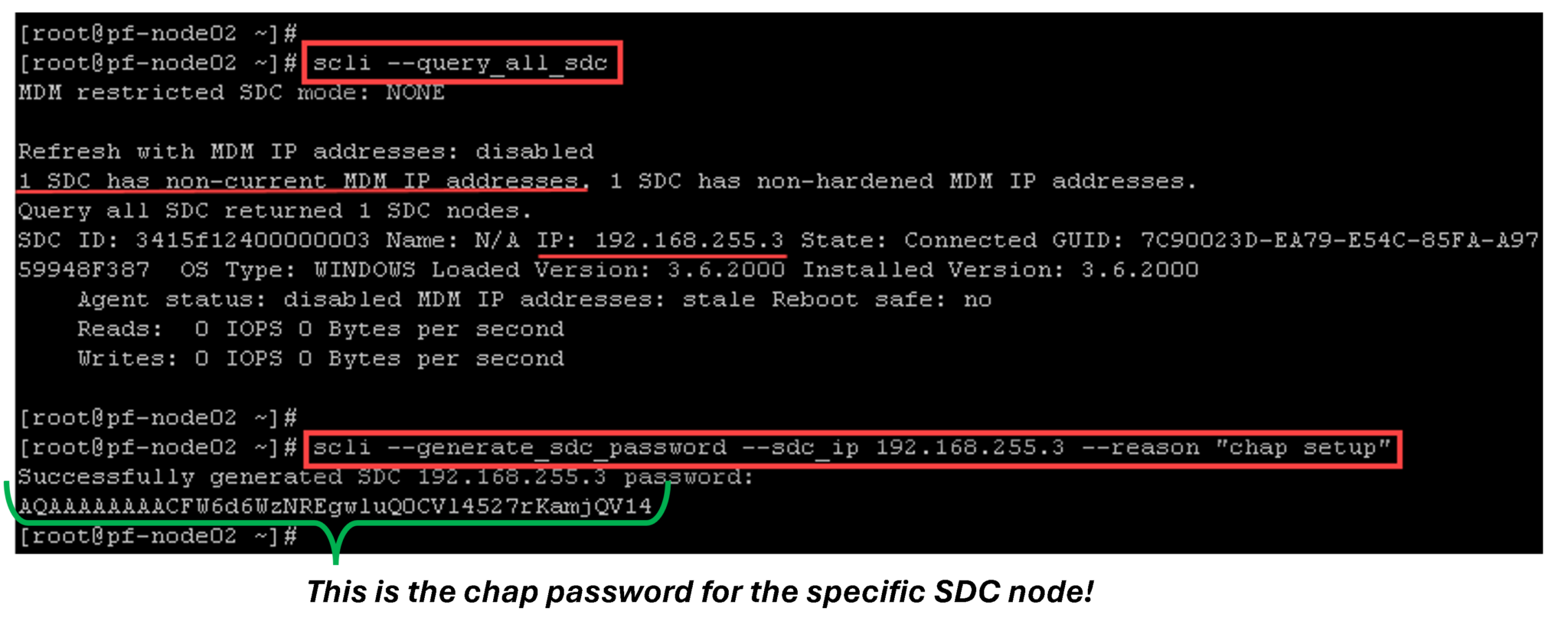

Generating a CHAP password for SDC to MDM authentication:

scli --generate_sdc_password --sdc_ip 192.168.255.3 --reason "chap setup"

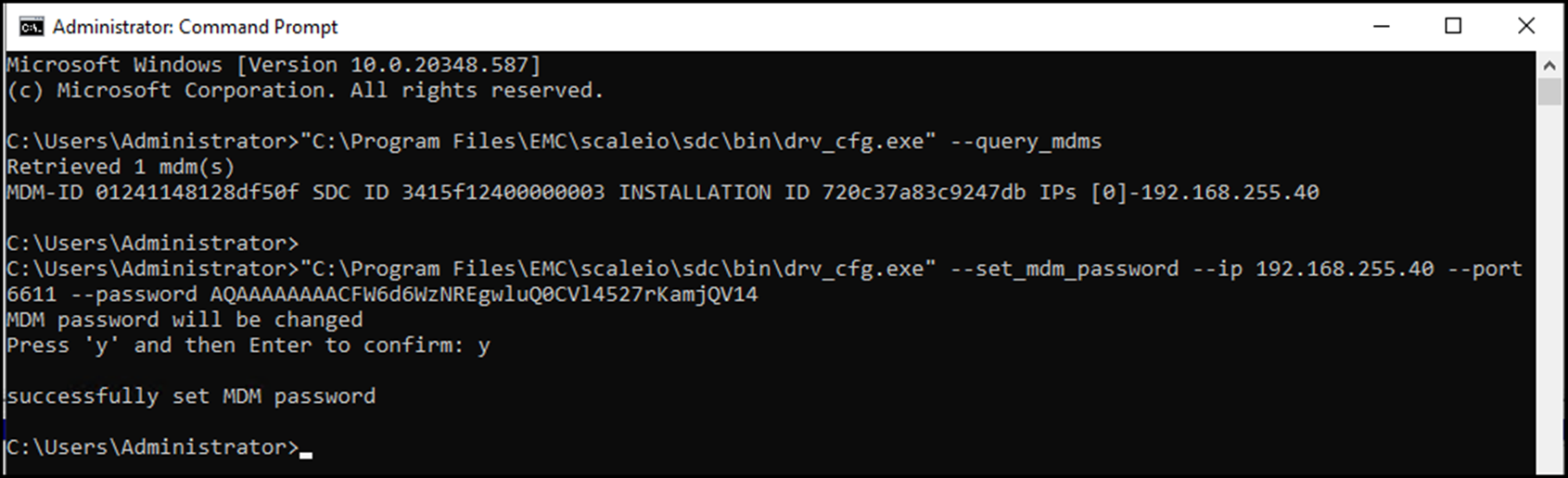

Setting the SDC password on the Windows SDC. Open a command prompt with administrator rights and run the following commands:

"C:\Program Files\EMC\scaleio\sdc\bin\drv_cfg.exe" --query_mdms

"C:\Program Files\EMC\scaleio\sdc\bin\drv_cfg.exe" --set_mdm_password --ip 192.168.255.40 --port 6611 --password AQAAAAAAAACFW6d6WzNREgwluQ0CVl4527rKamjQV14

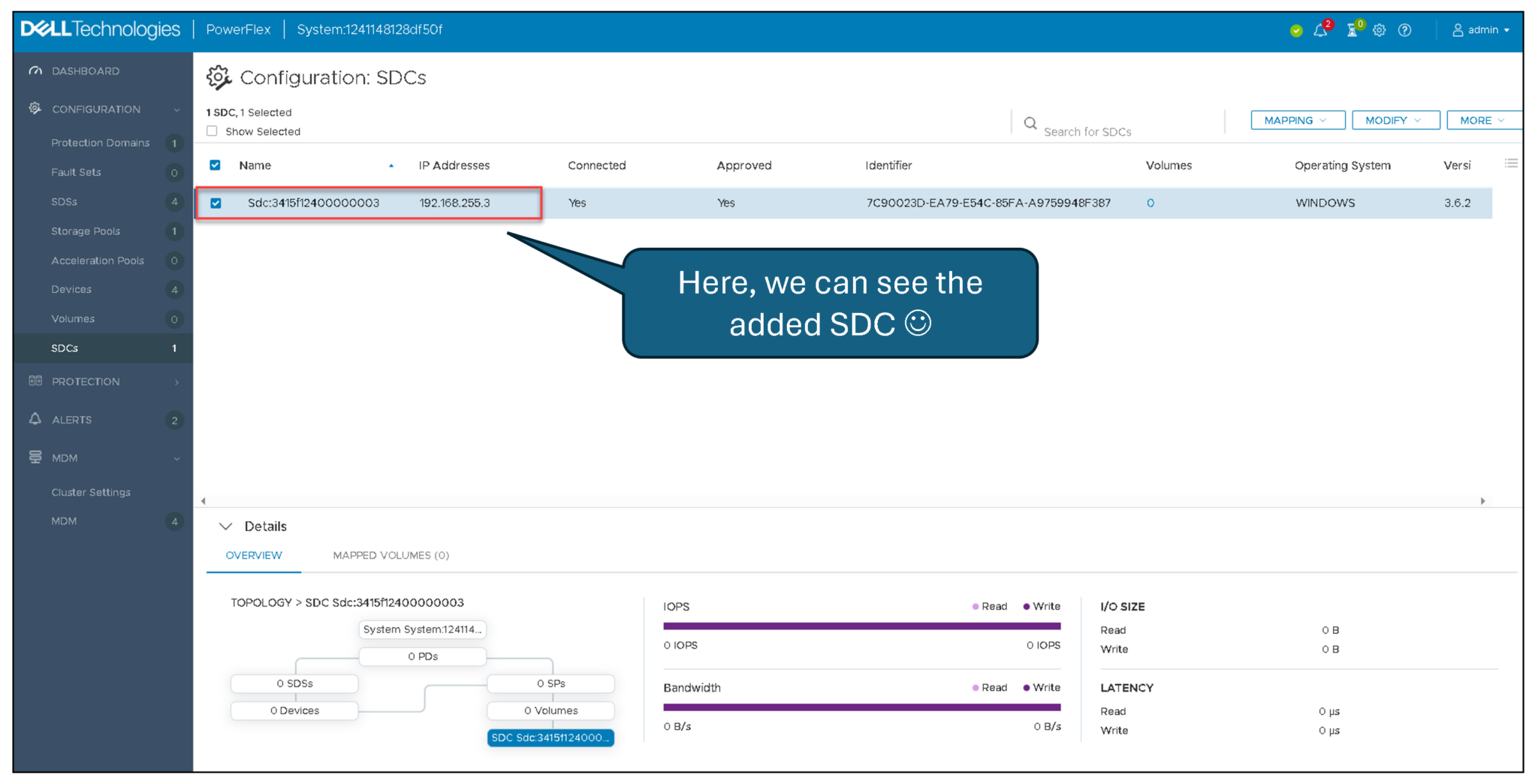

On the Presentation Server, under SDCs, we can see the Windows SDC:

Creating a Volume and Mapping it to a Windows-Based SDC

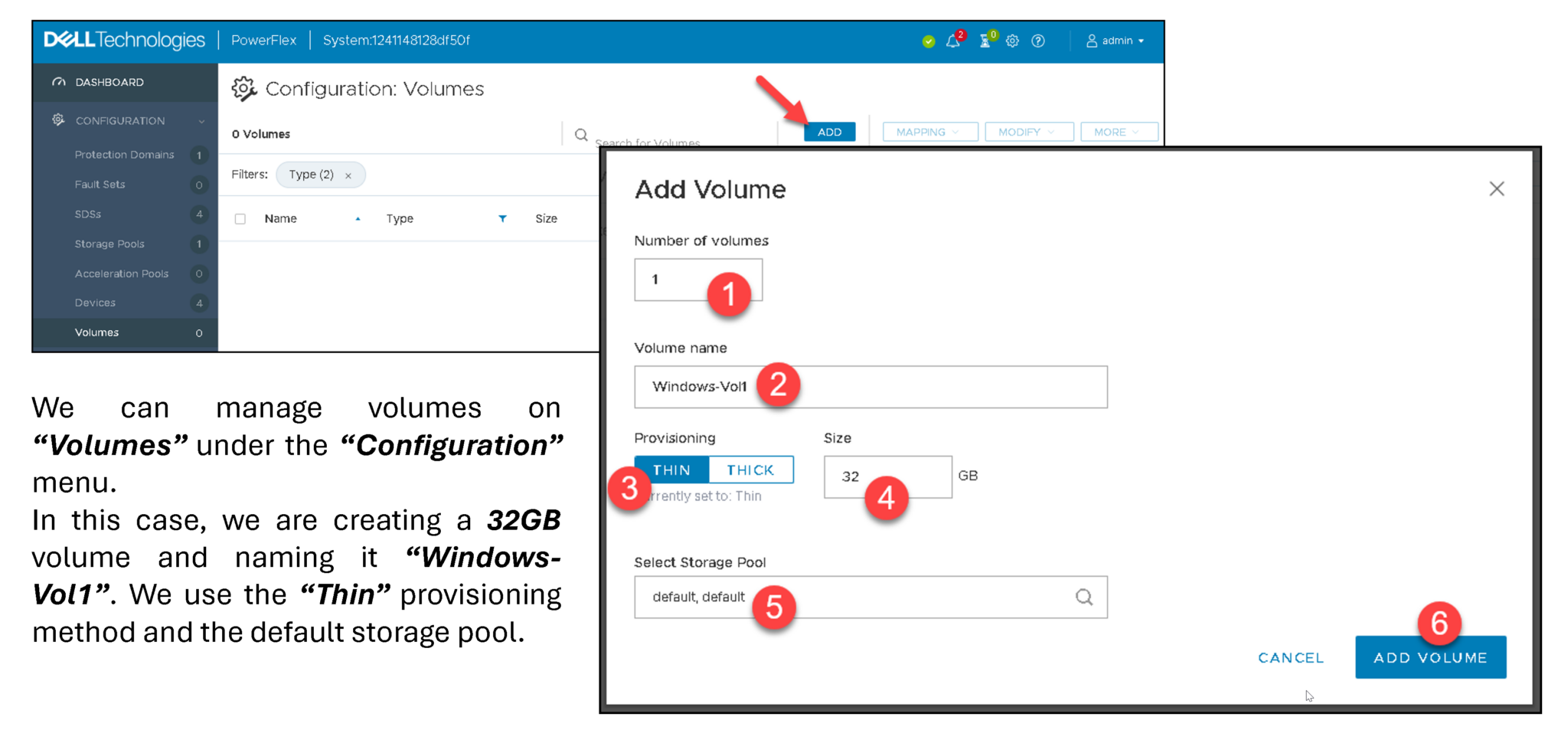

On the Presentation Server, access the Volumes menu under Configuration and follow the steps below:

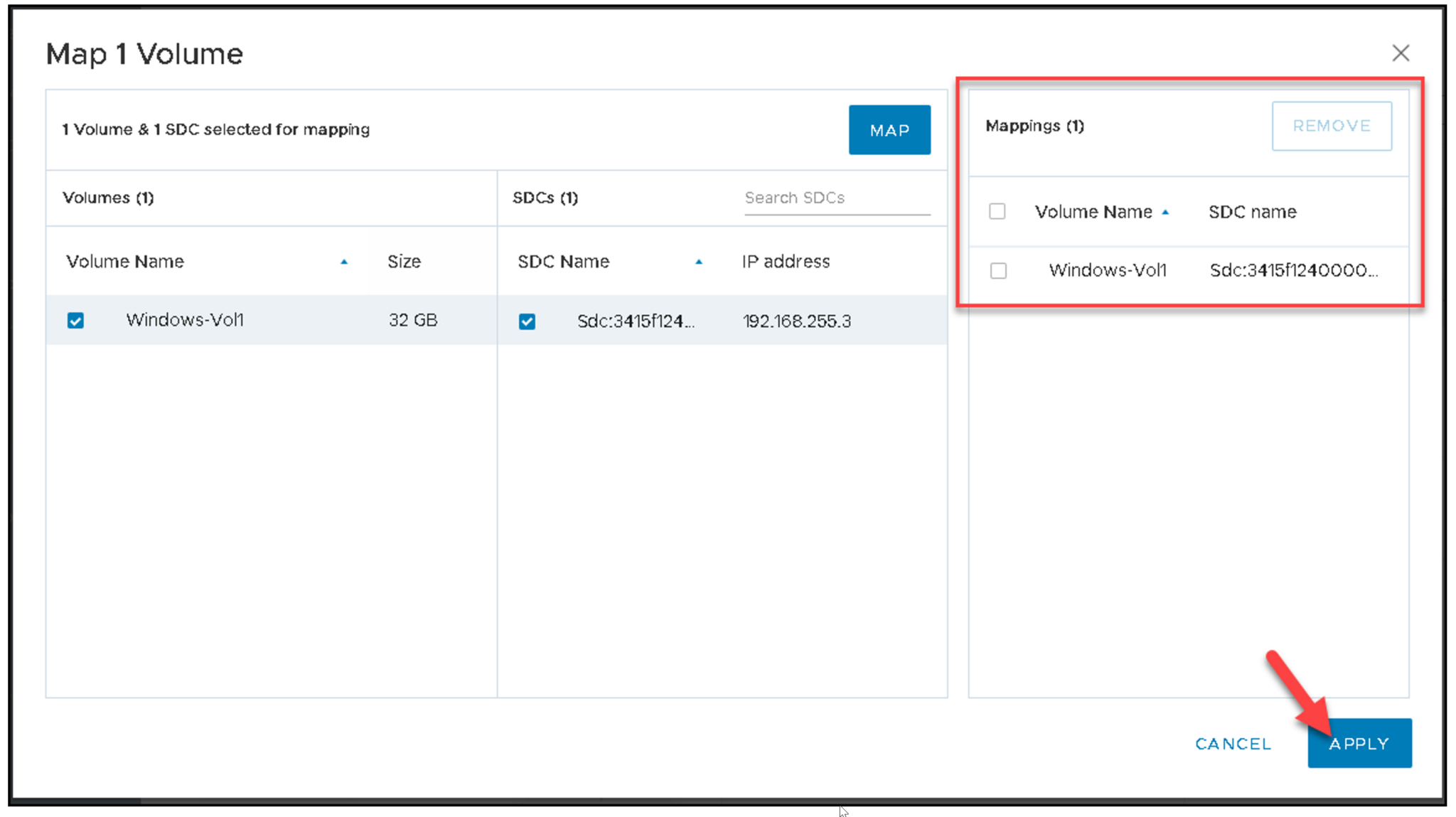

After adding the volume, we must map it to the SDC:

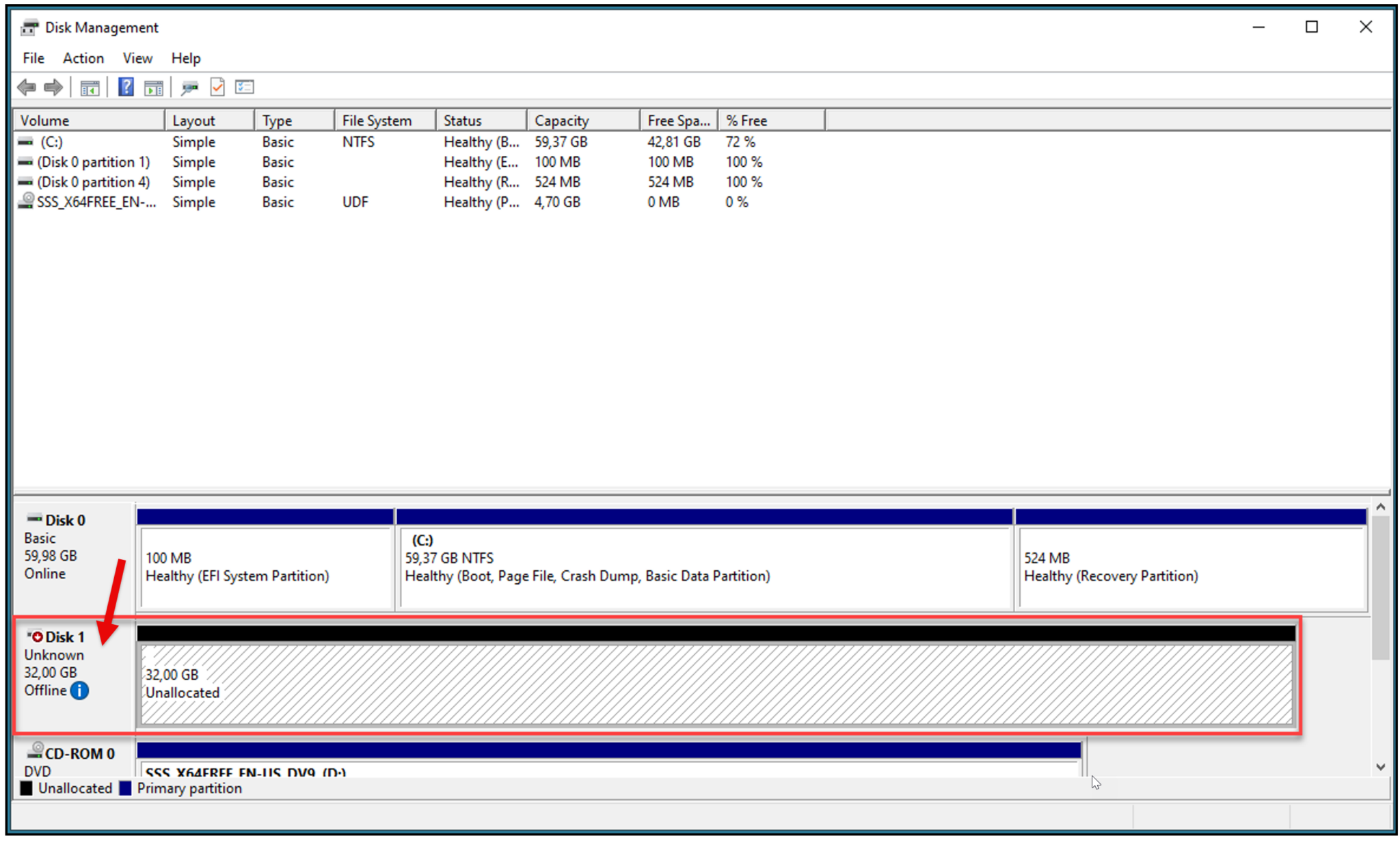

Go to the Windows server and open Disk Management. A new disk should appear:

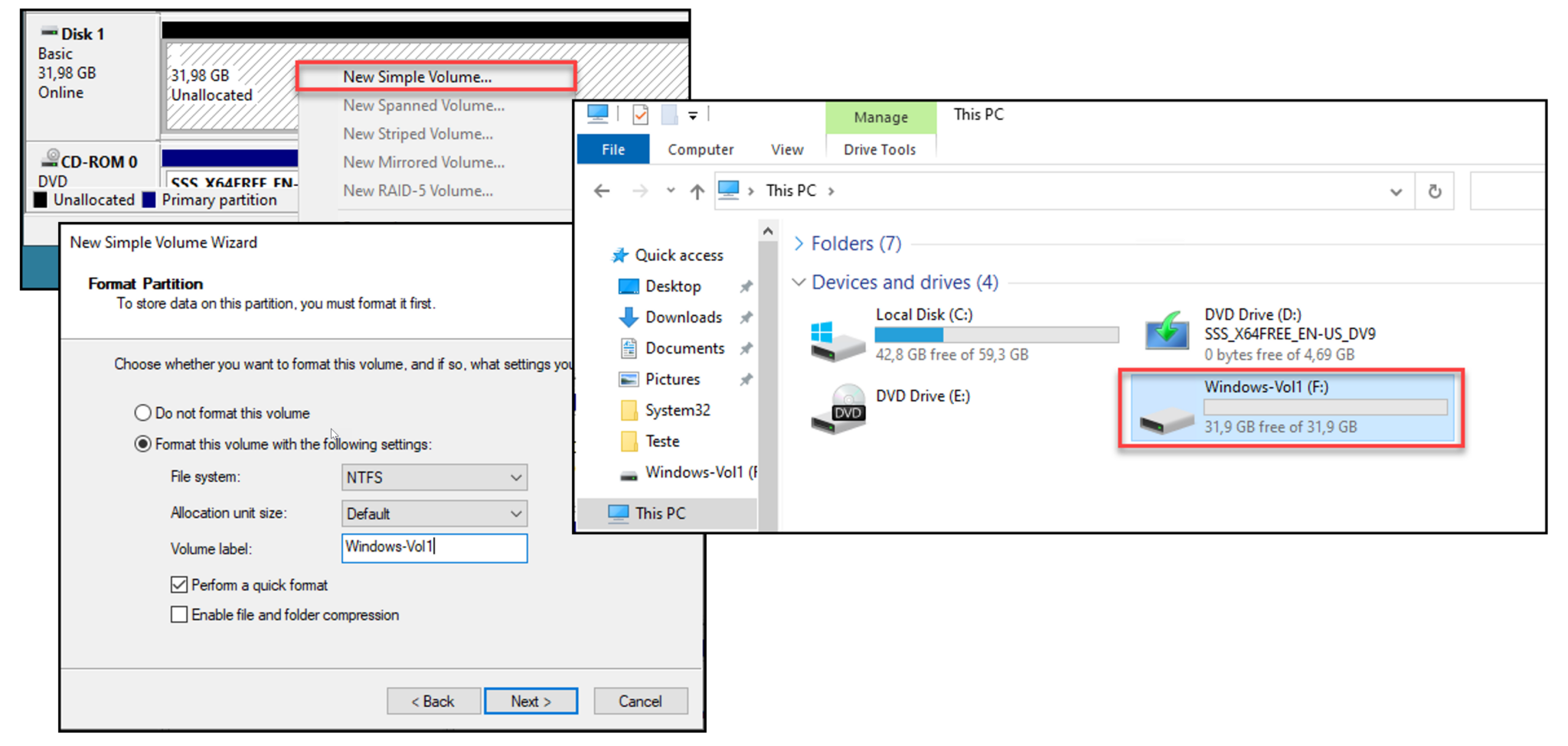

Initialize the new disk and create a simple volume (Next –> Next –> Finish):

That’s it 🙂

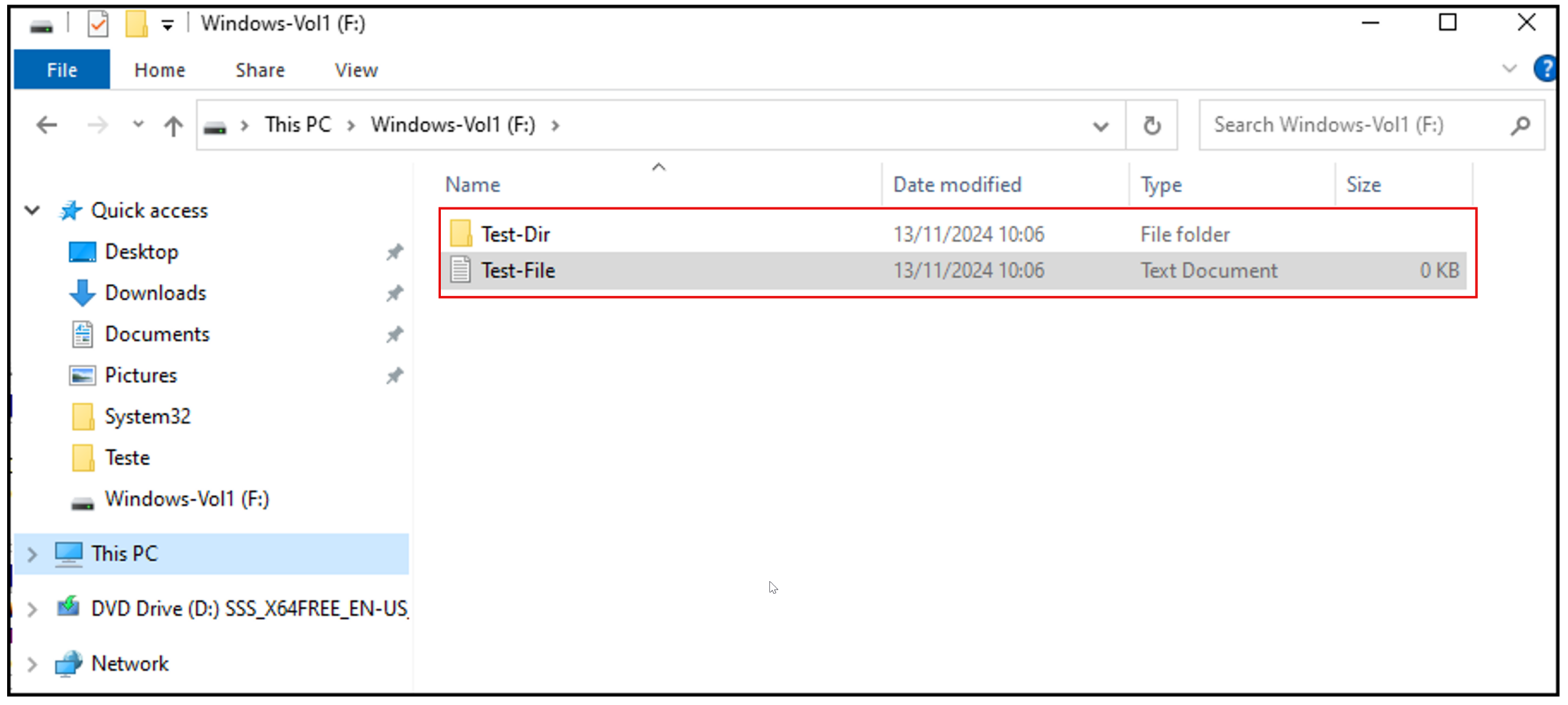

As we can see, we can create files and directories under the new volume:

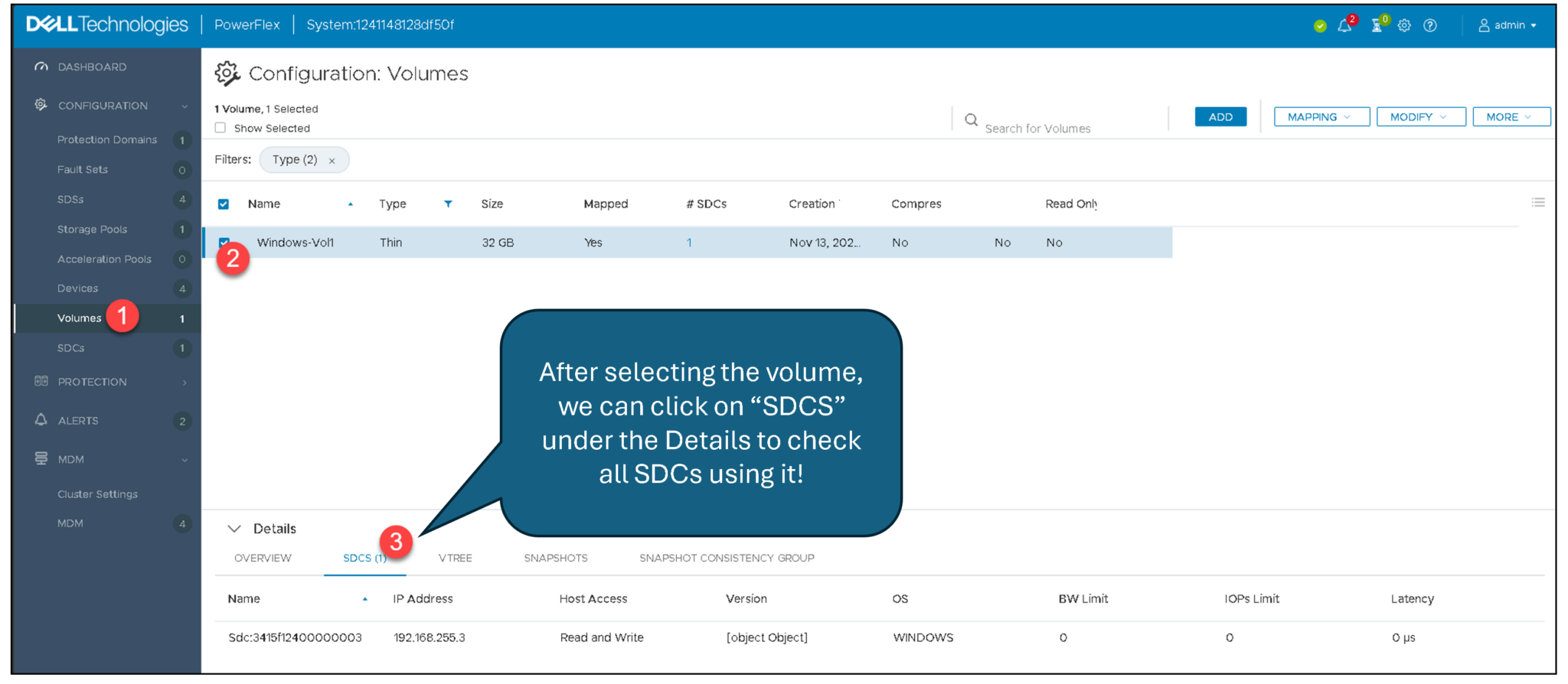

Select the volume on the Presentation Server and click the “SDCs” tab to see all SDCs that are accessing the volume:

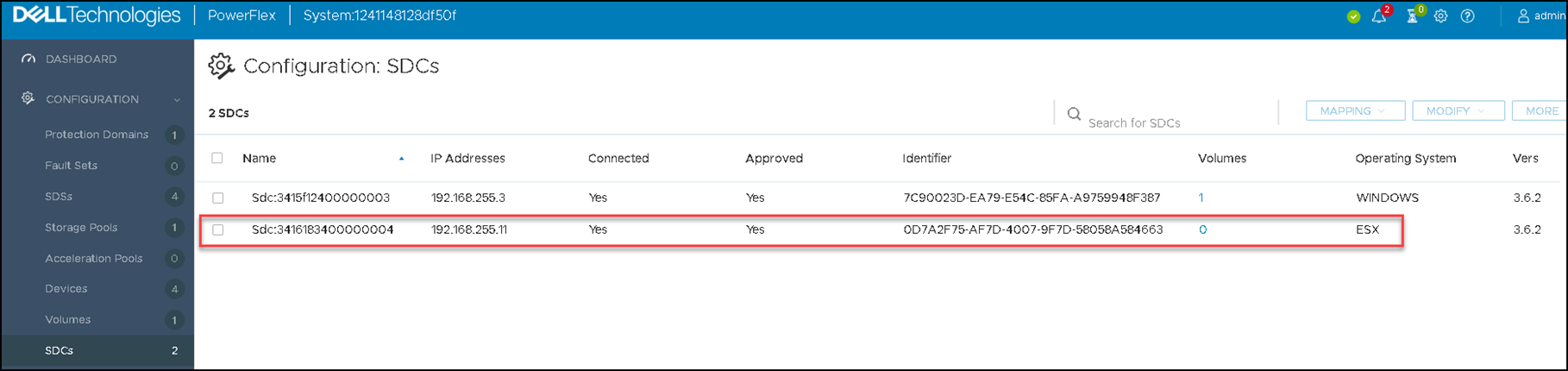

Deploy an ESXi-Based Storage Data Client (SDC)

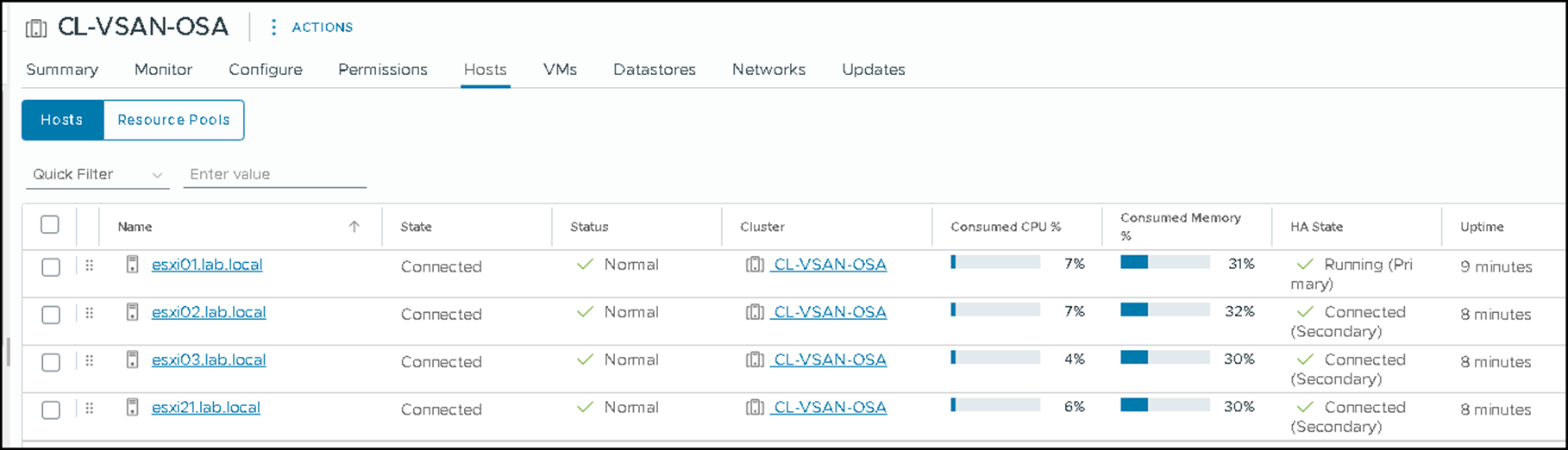

In this case, we have a vSAN cluster with four ESXi hosts. We will manually install the SDC software (VIB) on each ESXi host. Afterward, we will configure the SDC software to “connect” to the MDM cluster:

The first step is to copy the SDC installation package to each ESXi host. For instance, we are copying the SDC package “sdc-3.6.2000.112-esx7.x.zip” to the /tmp directory on each ESXi host. We are using the package for vSphere 7 (choose the package based on your vSphere environment version).

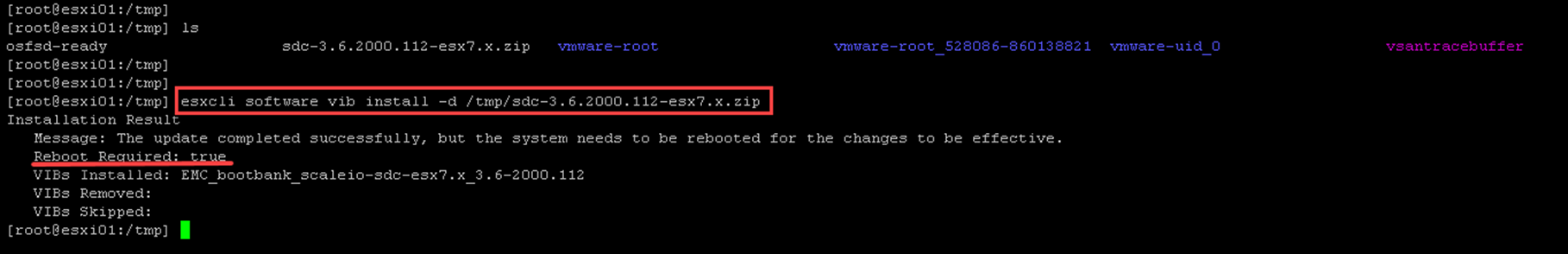

Installing the SDC package on the ESXi host:

esxcli software vib install -d /tmp/sdc-3.6.2000.112-esx7.x.zip

After installing the SDC package (VIB), we must reboot the ESXi host (put it in maintenance mode and reboot it!). This step is required to finish the VIB installation!

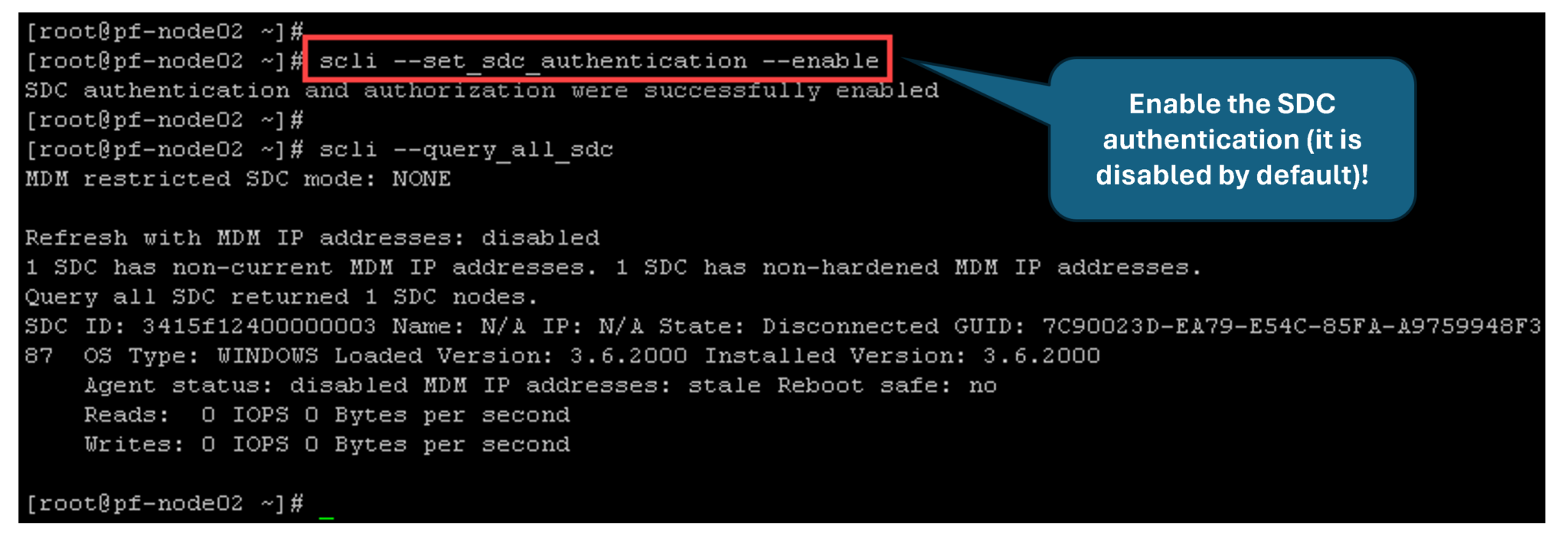

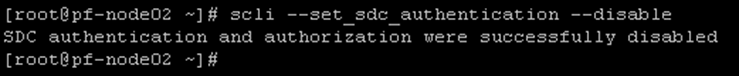

Access the MDM cluster, temporarily disable SDC authentication, and generate the password that the ESXi SDC will use to authenticate:

scli --set_sdc_authentication --disable

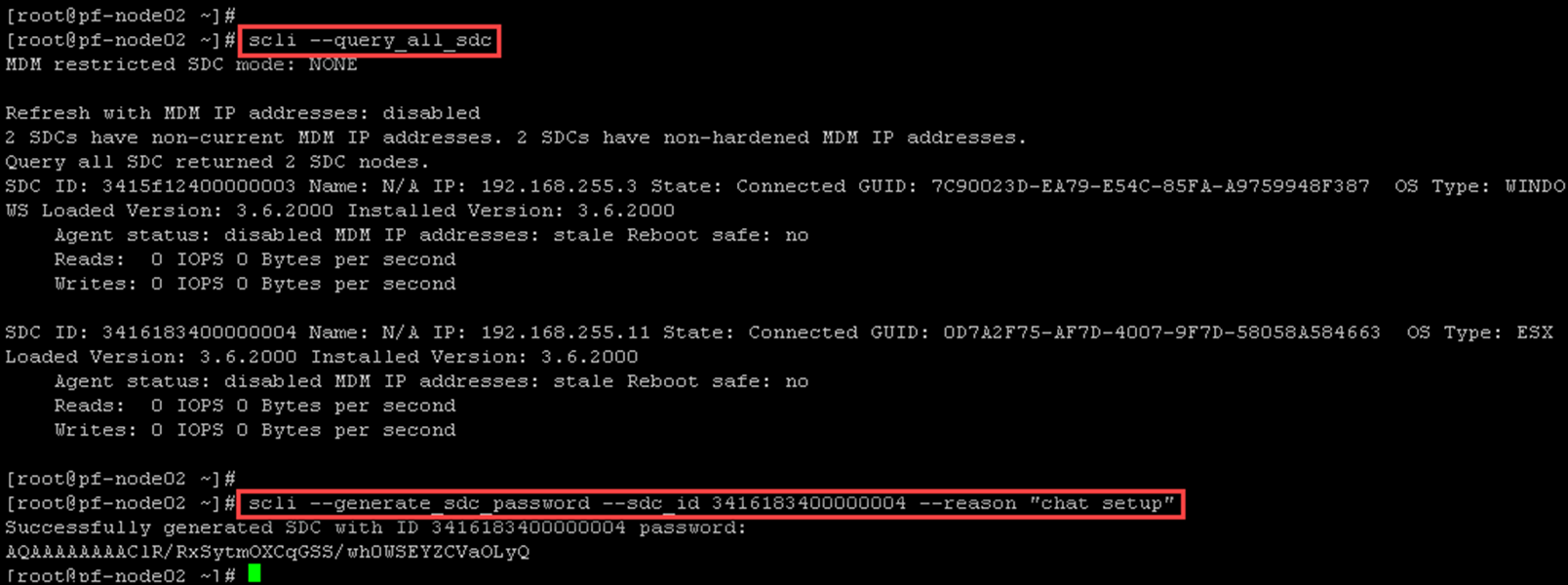

Query all SDCs and note the ESXi SDC ID; we will use it to generate the SDC password:

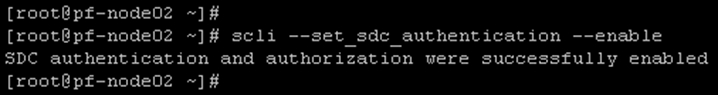

Enable SDC authentication:

scli --set_sdc_authentication --enable

On the ESXi host (accessed over SSH), configure the SDC to connect to the MDM cluster.

To get the IoctlIniGuidStr:

esxcli system module parameters list -m scini | grep Ioctl

Connect the ESXi host to the MDM cluster:

esxcli system module parameters set -m scini -p "IoctlIniGuidStr=0d7a2f75-af7d-4007-9f7d-58058a584663 IoctlMdmIPStr=192.168.255.40 IoctlMdmPasswordStr=192.168.255.40-AQAAAAAAAAClR/RxSytmOXCqGSS/wh0WSEYZCVaOLyQ"

Afterward, we must reboot the ESXi host to apply the configuration!
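In the command above, the password value is prefixed with the MDM IP, which makes the parameter string easy to mistype. This is a minimal sketch, assuming a single MDM IP as in this lab. scini_params is a hypothetical helper, and the GUID and password in the usage comment are the example values from above. The helper sticks to POSIX sh so that it should also work in the ESXi shell.

```shell
# Hypothetical helper: build the scini module parameter string shown above.
# $1 = SDC GUID (IoctlIniGuidStr), $2 = MDM IP, $3 = SDC CHAP password
# The password value is written as "<MDM IP>-<password>", as in the example.
scini_params() {
    guid=$1
    mdm_ip=$2
    password=$3
    printf 'IoctlIniGuidStr=%s IoctlMdmIPStr=%s IoctlMdmPasswordStr=%s-%s\n' \
        "$guid" "$mdm_ip" "$mdm_ip" "$password"
}

# Usage on the ESXi host (example values from this article):
# esxcli system module parameters set -m scini -p "$(scini_params \
#     0d7a2f75-af7d-4007-9f7d-58058a584663 192.168.255.40 \
#     AQAAAAAAAAClR/RxSytmOXCqGSS/wh0WSEYZCVaOLyQ)"
```

Generating the string keeps the MDM IP consistent between the IoctlMdmIPStr value and the password prefix.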

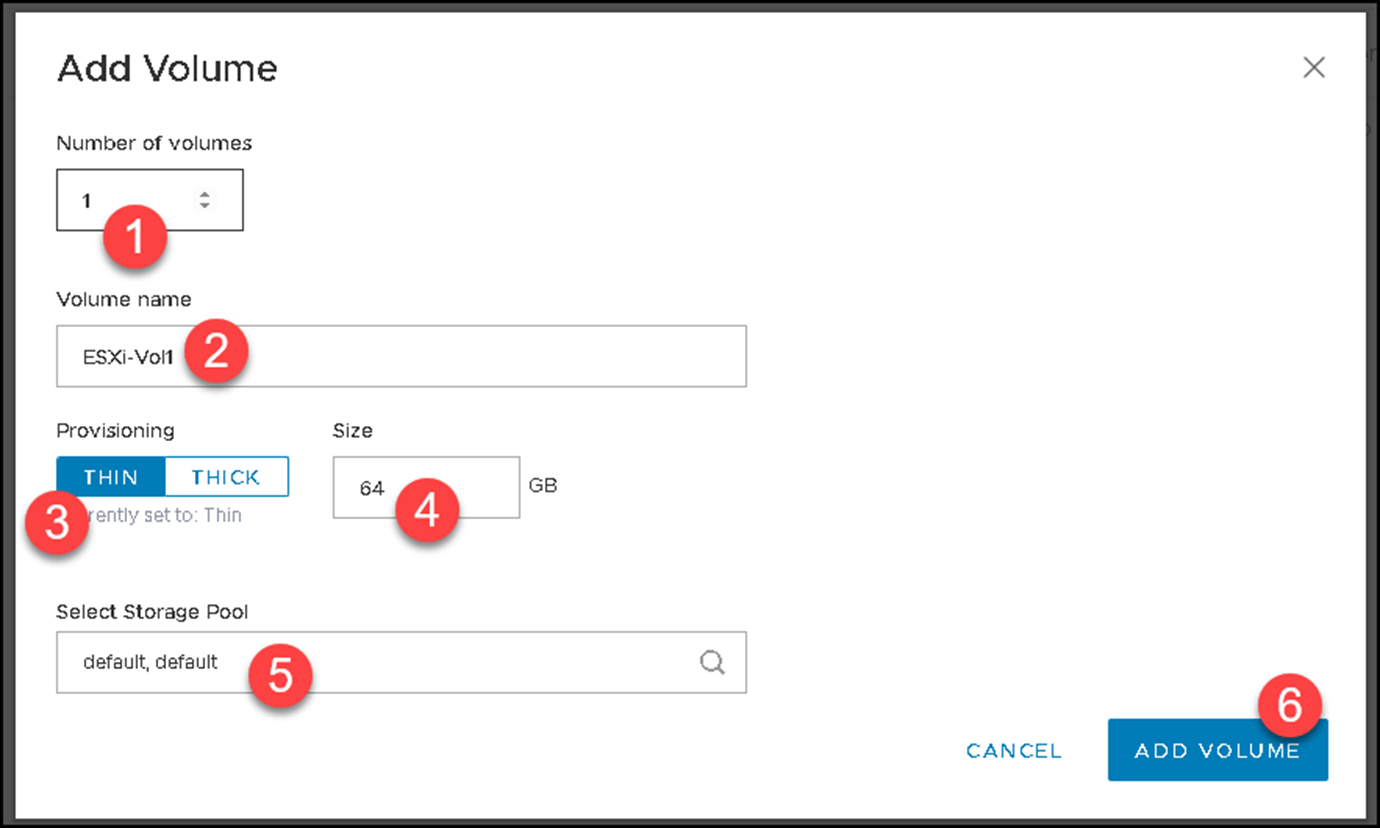

Create a Volume and Map it to an ESXi-Based SDC

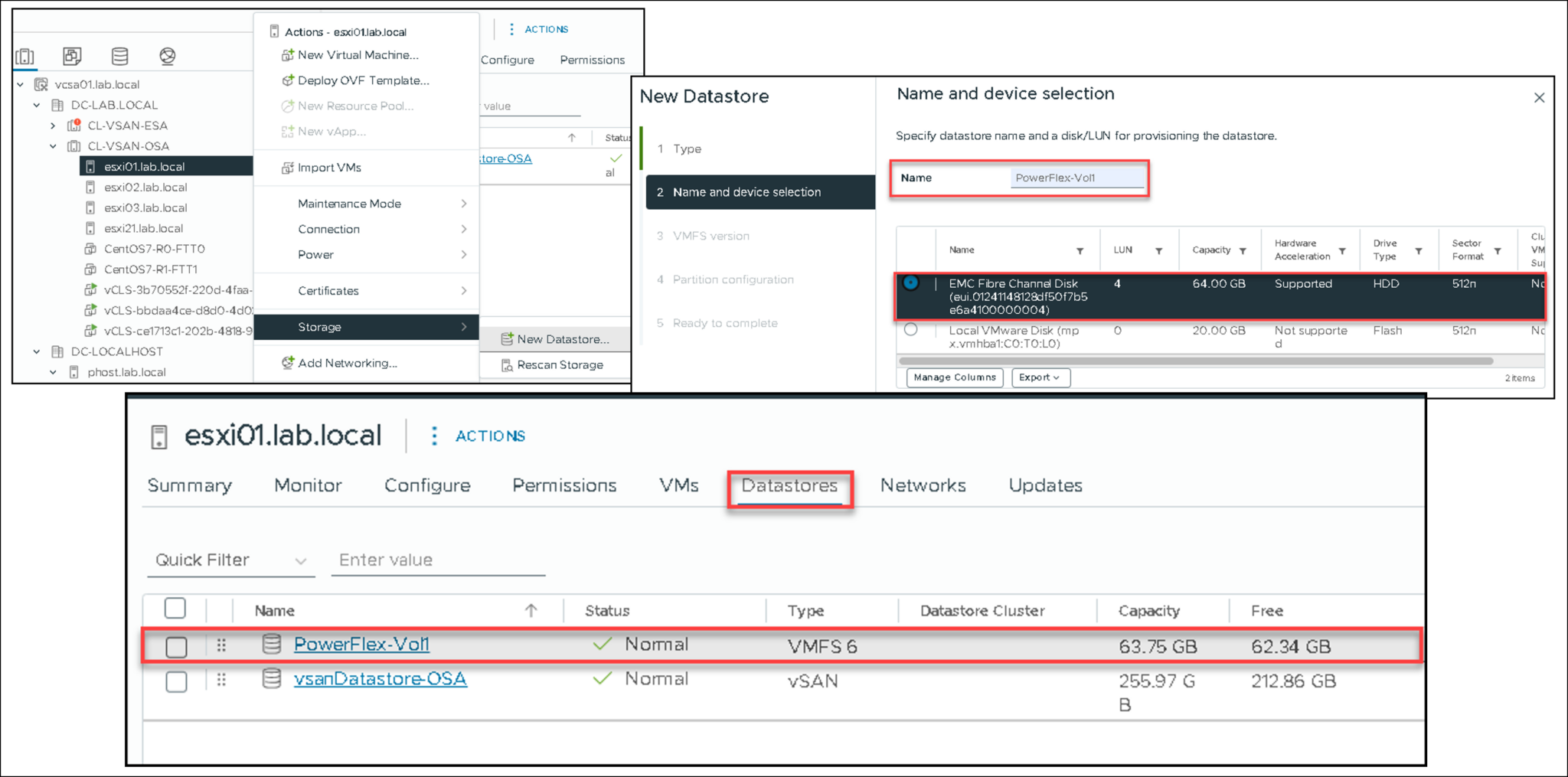

Create a volume named “ESXi-Vol1” with 64 GB:

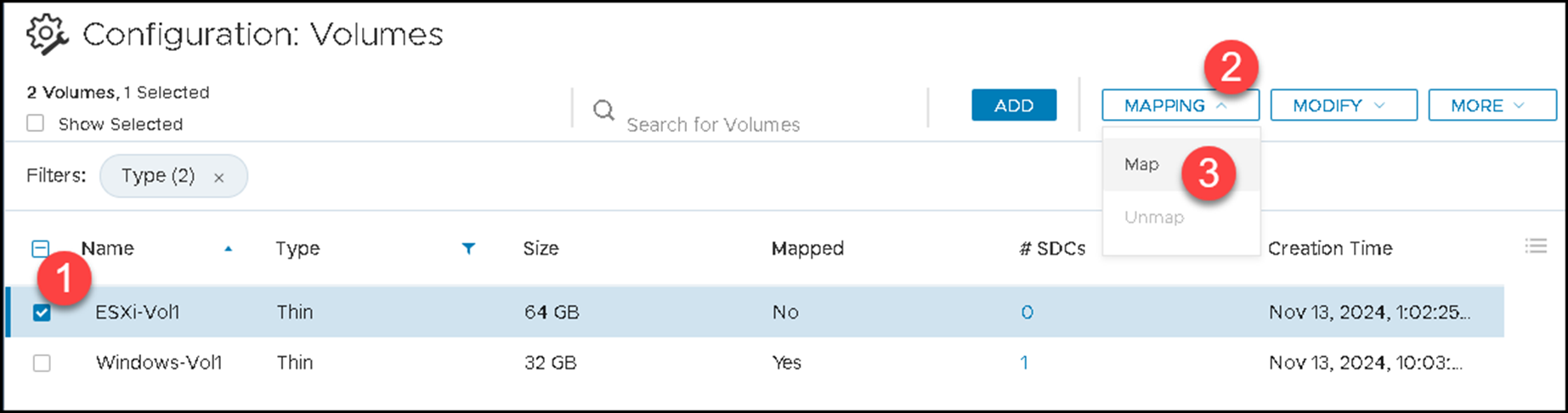

Map the volume to the ESXi host:

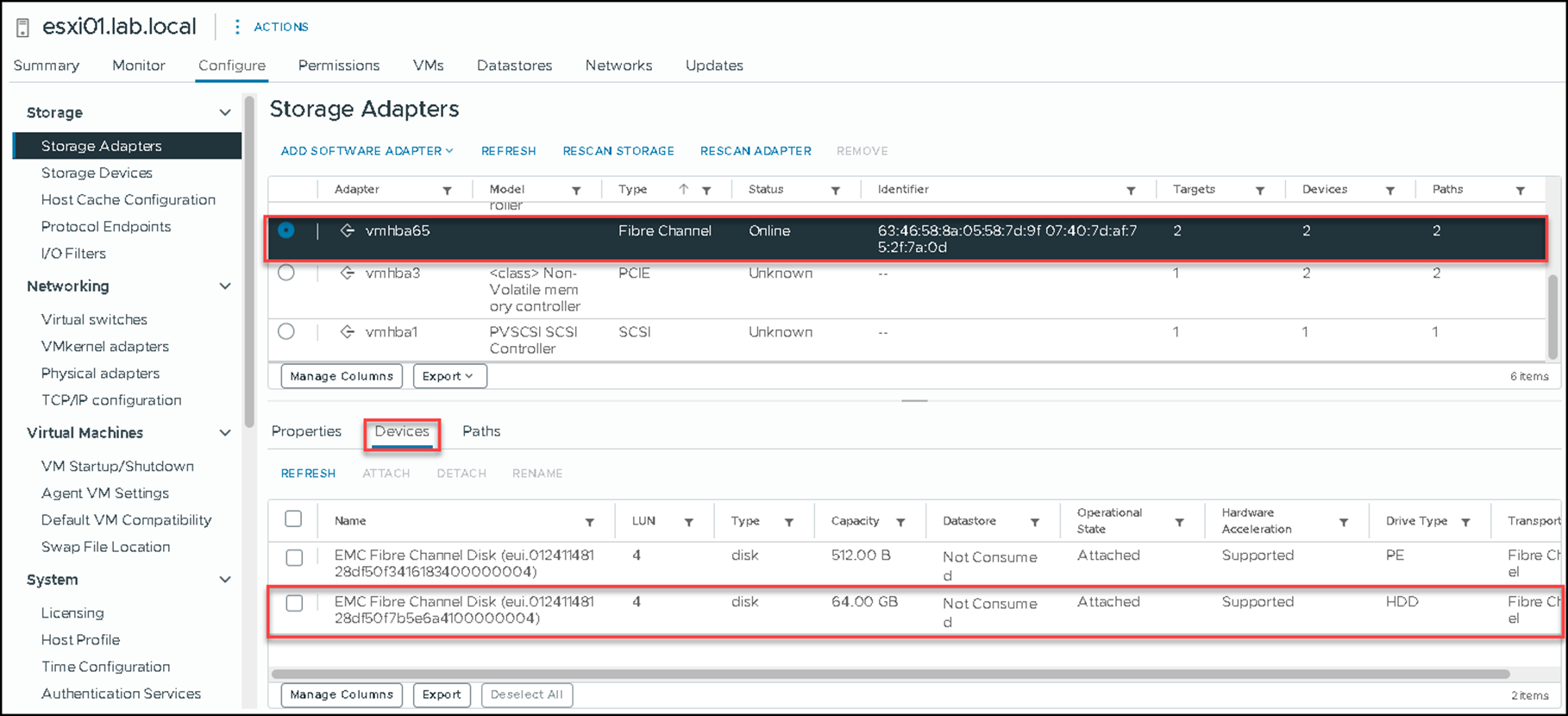

Under Storage Adapters on the ESXi host, click on “RESCAN ADAPTER.”

The “vmhba65” adapter is used to map the PowerFlex volumes:

Since the volume has been mapped to the ESXi host, we can create a Datastore:

That’s it 🙂