This article explains how to enable and configure the High Availability (HA) feature in a vSphere 7 cluster. HA is an important feature for improving the availability of the virtual machines running on the vSphere cluster.

How vSphere HA works

vSphere HA provides high availability for virtual machines by pooling the virtual machines and the hosts they reside on into a cluster. The hosts in the cluster are monitored and, in the event of a failure, the virtual machines on the failed host are restarted on alternate hosts.

Note: It is important to be precise here to avoid confusion. When vSphere HA detects a host failure, for example, the virtual machines that were running on that host are restarted on other hosts in the vSphere cluster. They are restarted, not migrated live, so a short outage is expected.

When you create a vSphere HA cluster, a single host is elected as the primary host. The primary host communicates with vCenter Server and monitors the state of all protected virtual machines and of the secondary hosts. Different types of host failures are possible, and the primary host must detect each failure and deal with it appropriately.

The primary host must distinguish between a failed host and one that is in a network partition or that has become network isolated. The primary host uses network and datastore heartbeating to determine the type of failure.

There are three failure types that vSphere HA handles in different ways:

- Failed host: the host goes down

The primary host receives no network heartbeats from the secondary host, sees no datastore heartbeats from it, and gets no reply to pings sent to its management IP address.

- Network partition: the host is not isolated on the network, but it cannot communicate over the network with the vSphere HA primary host

Datastore heartbeats are still alive, but the primary host cannot see the secondary host over the network.

- Network isolated: the host becomes isolated from the network

The host is still running, but it sees no traffic from the vSphere HA agents on the management network. It then tries to ping the cluster isolation addresses. If these pings also fail, the host declares itself isolated from the network.
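The three cases above can be sketched as a small decision function. This is only a simplified model of the description in this article, not the actual logic of the HA agent; the names `HostState`, `classify_from_primary`, and `host_is_isolated` are hypothetical.

```python
from enum import Enum
from typing import Iterable


class HostState(Enum):
    HEALTHY = "healthy"
    FAILED = "failed host"
    PARTITIONED = "network partition"


def classify_from_primary(network_heartbeat: bool,
                          datastore_heartbeat: bool,
                          ping_reply: bool) -> HostState:
    """How the primary host might classify a secondary host (simplified)."""
    if network_heartbeat:
        return HostState.HEALTHY
    if not datastore_heartbeat and not ping_reply:
        # No sign of life on any channel: treat the host as failed.
        return HostState.FAILED
    # The host still shows signs of life, but the primary cannot reach it
    # over the HA network. This sketch treats that as a partition.
    return HostState.PARTITIONED


def host_is_isolated(sees_ha_traffic: bool,
                     isolation_ping_replies: Iterable[bool]) -> bool:
    """A host declares itself isolated when it sees no HA agent traffic
    and none of the isolation addresses answers its pings."""
    return not sees_ha_traffic and not any(isolation_ping_replies)


print(classify_from_primary(False, False, False).value)
print(classify_from_primary(False, True, False).value)
print(host_is_isolated(False, [False, False]))
```

Note that isolation is decided by the affected host itself, while failure and partition are judged from the primary host's point of view, which is why the sketch uses two separate functions.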

To get more details about vSphere HA, you can see the links below:

Enabling vSphere HA

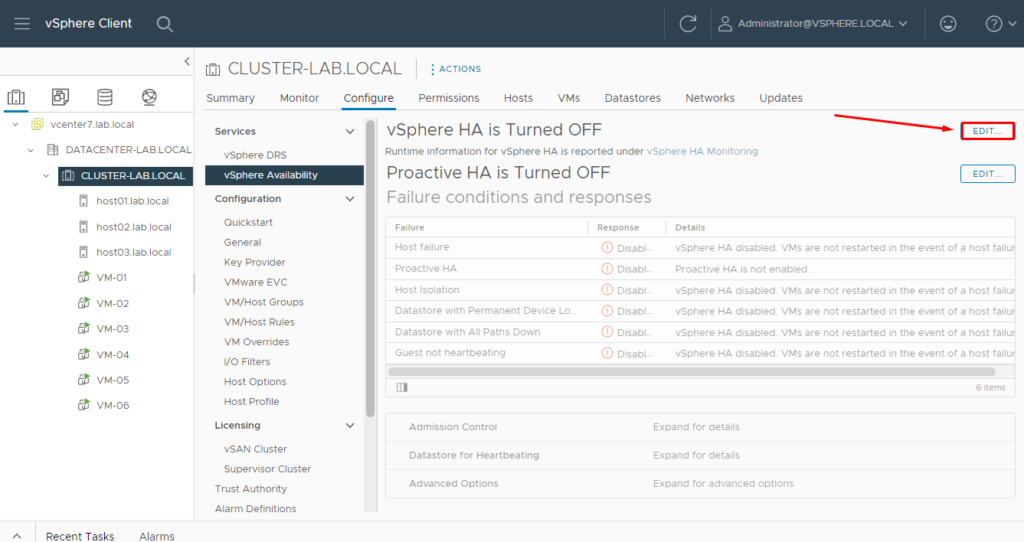

To enable the HA feature on the vSphere cluster, select the cluster, click Configure > Services > vSphere Availability, and then click “EDIT”:

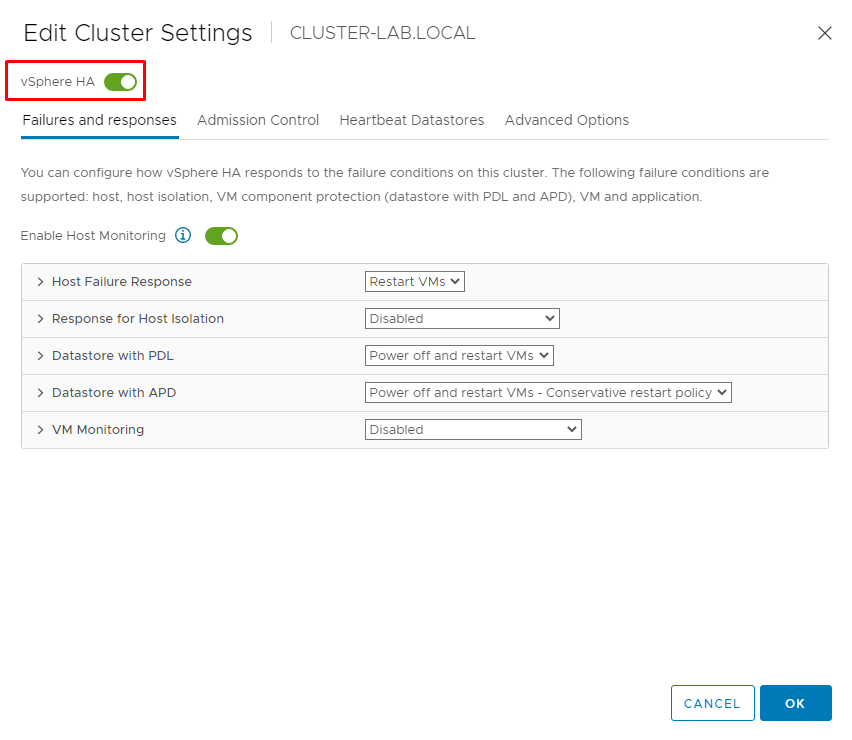

Turn on the vSphere HA toggle and then click OK:

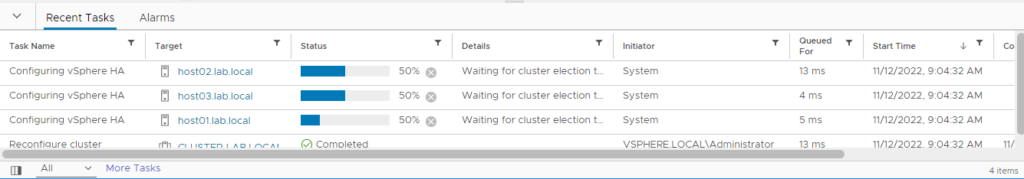

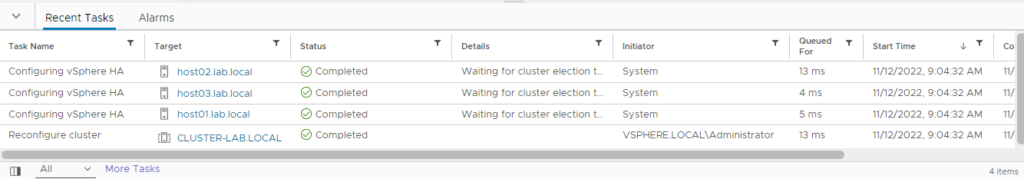

The HA service then starts on all ESXi hosts in the cluster.

A cluster election runs to choose which ESXi host becomes the primary host for HA. Keep calm and wait a few seconds:

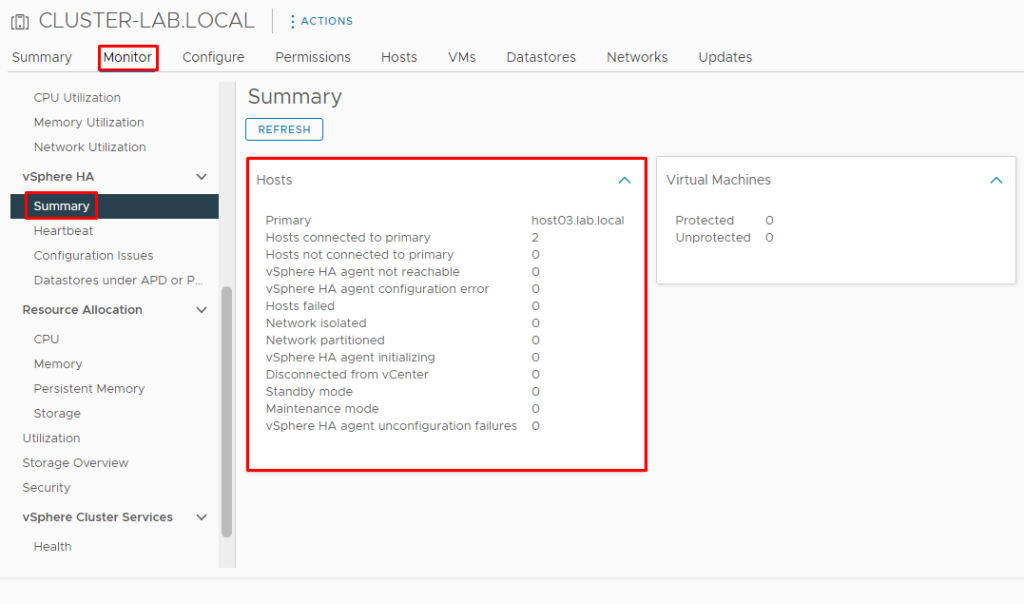

On the Monitor tab, select Summary under the vSphere HA menu to see details about the HA process. In this lab, the ESXi host03.lab.local is the primary host:

HA Failures and Responses

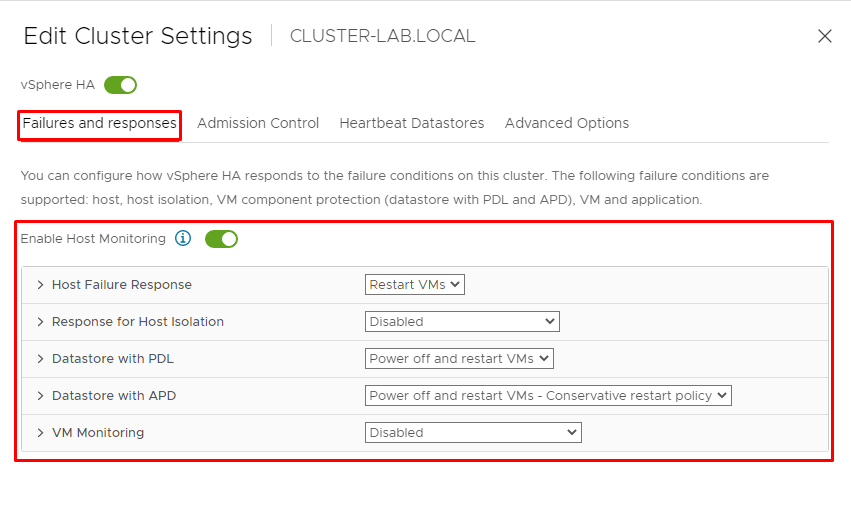

Here, it is possible to configure how vSphere HA responds to failure conditions on this cluster. There are several failure conditions: host failure, host isolation, VM Component Protection (datastore with PDL and APD), VM, and application.

For each failure condition there is a failure response:

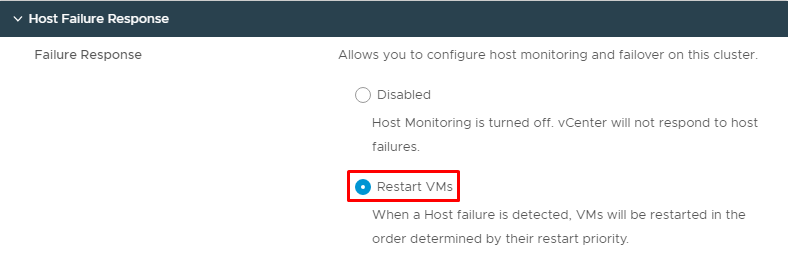

- Host Failure Response: The default value is “Restart VMs”. In this case, we keep this option. When a host failure is detected, VMs are restarted in the order determined by their restart priority:

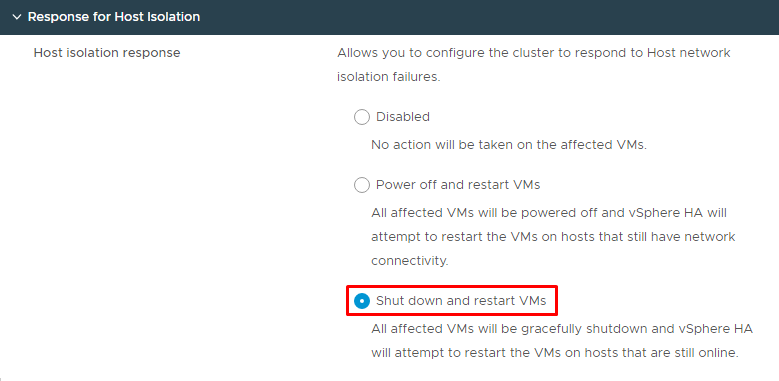

- Response for Host Isolation: The default value is “Disabled”. In this case, we change it to “Shut down and restart VMs”. All affected VMs are shut down gracefully and vSphere HA attempts to restart them on hosts that are still online in the vSphere cluster:

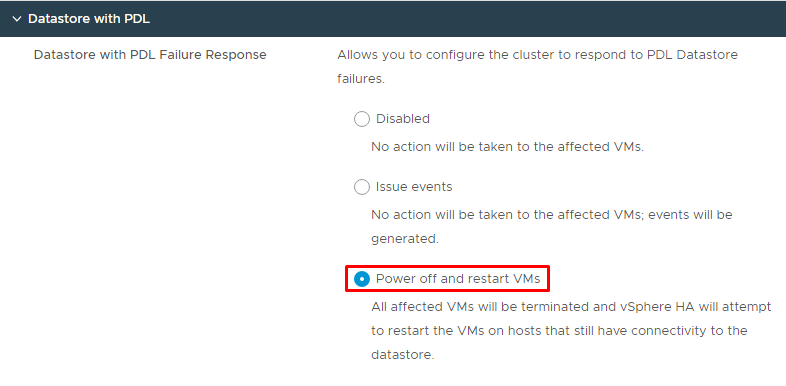

- Datastore with PDL: The default value is “Power off and restart VMs” and we keep this option. All affected VMs are terminated and vSphere HA attempts to restart them on hosts that still have connectivity to the datastore. In other words, this failure condition covers the case where the host has lost its connection to the datastore. PDL is the acronym for “Permanent Device Loss”:

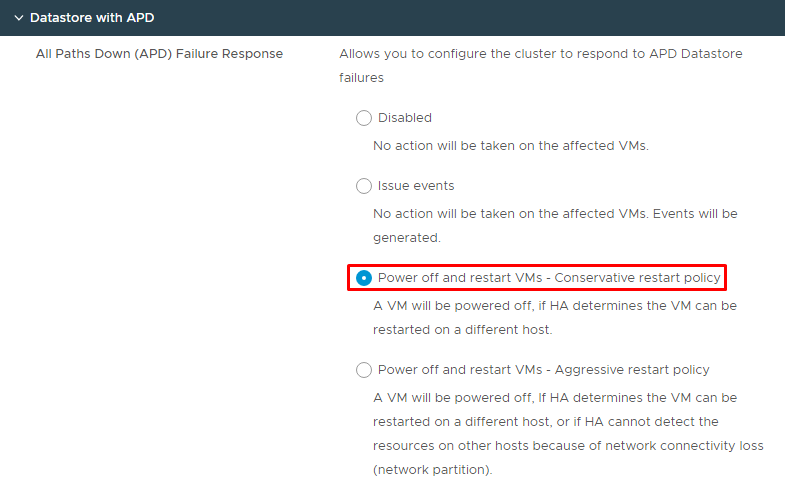

- Datastore with APD: The default value is “Power off and restart VMs – Conservative restart policy”. A VM will be powered off if HA determines the VM can be restarted on a different host in the cluster. APD is the acronym for “All Paths Down”:

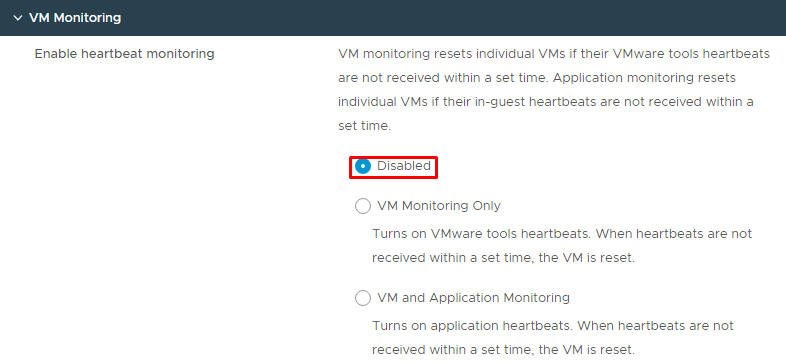

- VM Monitoring: The default value is “Disabled”. This option is not used often and, in my opinion, you should be careful about enabling it in your vSphere cluster. It is a “sensitive” feature because it monitors the VMware Tools heartbeats of each virtual machine and takes action if it stops receiving them:

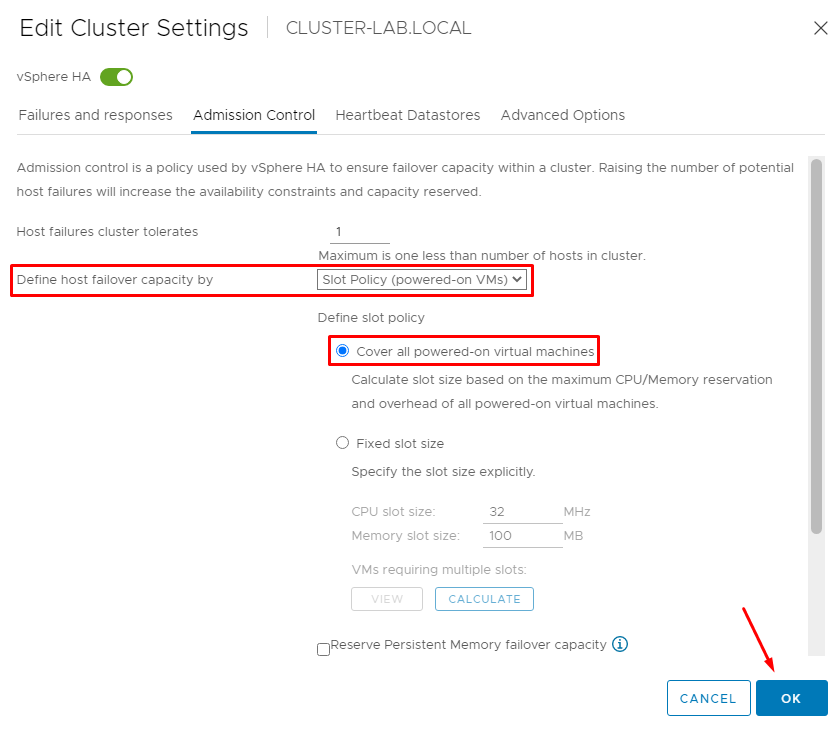
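To make the Host Failure Response above more concrete, the restart ordering can be sketched as a simple sort by restart priority. This is only an illustration: the `ProtectedVm` class, the VM names, and the numeric ranks are hypothetical, and the five priority names are assumed to match the levels offered in the vSphere Client.

```python
from dataclasses import dataclass
from typing import List

# Assumed priority levels, highest first. The numeric ranks are made up
# for this sketch and are used only for sorting.
PRIORITY_RANK = {"highest": 0, "high": 1, "medium": 2, "low": 3, "lowest": 4}


@dataclass
class ProtectedVm:
    name: str
    restart_priority: str = "medium"  # assumed default level


def restart_order(vms: List[ProtectedVm]) -> List[ProtectedVm]:
    """Return the VMs in the order they would be restarted.

    sorted() is stable, so VMs with the same priority keep their
    original relative order.
    """
    return sorted(vms, key=lambda vm: PRIORITY_RANK[vm.restart_priority])


vms = [
    ProtectedVm("file01", "low"),
    ProtectedVm("db01", "highest"),
    ProtectedVm("web01"),
    ProtectedVm("web02"),
]
print([vm.name for vm in restart_order(vms)])
```

In this sketch the database VM comes back first and the low-priority file server last, which is the kind of ordering you would typically want when applications depend on each other.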

HA Admission Control

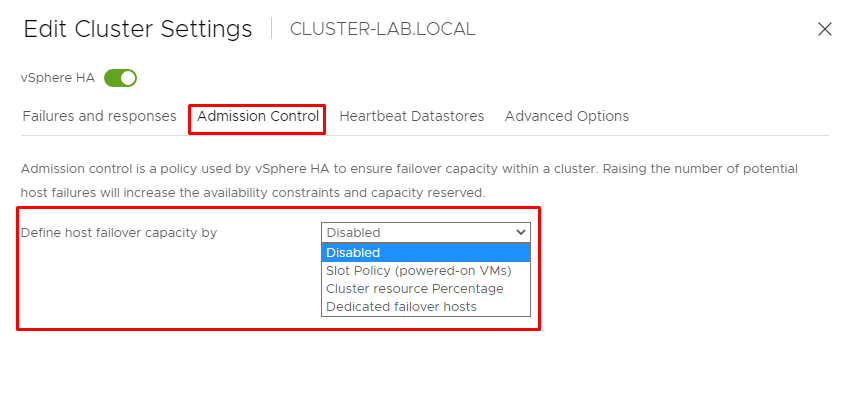

Admission control is a policy that vSphere HA uses to ensure failover capacity within a cluster. Raising the number of potential host failures increases the availability constraints and the capacity reserved.

Host failover capacity can be defined by one of these options: Disabled, Slot Policy (powered-on VMs), Cluster resource Percentage, or Dedicated failover hosts.

In this lab, we choose “Slot Policy (powered-on VMs)”. For the slot policy, choose “Cover all powered-on virtual machines”. This option calculates the slot size based on the maximum CPU/memory reservation and overhead of all powered-on virtual machines.
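The slot size calculation described above can be sketched roughly as follows. This is a simplified model, not the exact vSphere implementation: the `Vm` class, the sample values, and the host capacity are hypothetical, and the 32 MHz CPU value used for VMs without a reservation is an assumption about the default.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Assumed CPU slot value for VMs that have no CPU reservation.
DEFAULT_CPU_SLOT_MHZ = 32


@dataclass
class Vm:
    name: str
    cpu_reservation_mhz: int
    mem_reservation_mb: int
    mem_overhead_mb: int
    powered_on: bool = True


def slot_size(vms: List[Vm]) -> Tuple[int, int]:
    """Return (cpu_slot_mhz, mem_slot_mb) over all powered-on VMs."""
    running = [vm for vm in vms if vm.powered_on]
    cpu = max((vm.cpu_reservation_mhz or DEFAULT_CPU_SLOT_MHZ for vm in running),
              default=DEFAULT_CPU_SLOT_MHZ)
    mem = max((vm.mem_reservation_mb + vm.mem_overhead_mb for vm in running),
              default=0)
    return cpu, mem


def slots_per_host(host_cpu_mhz: int, host_mem_mb: int,
                   cpu_slot_mhz: int, mem_slot_mb: int) -> int:
    """Number of slots a host can hold: the smaller of the CPU and memory counts."""
    cpu_slots = host_cpu_mhz // cpu_slot_mhz
    if mem_slot_mb == 0:
        return cpu_slots
    return min(cpu_slots, host_mem_mb // mem_slot_mb)


vms = [
    Vm("web01", 0, 0, 90),
    Vm("db01", 2000, 4096, 150),
    Vm("old01", 4000, 8192, 200, powered_on=False),  # ignored: powered off
]
cpu_slot, mem_slot = slot_size(vms)
print(cpu_slot, mem_slot)                               # 2000 4246
print(slots_per_host(20000, 65536, cpu_slot, mem_slot))  # 10
```

The example shows why a single VM with a large reservation matters: `db01` alone sets the slot size for the whole cluster, so each host holds only 10 slots even though most VMs need far less.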

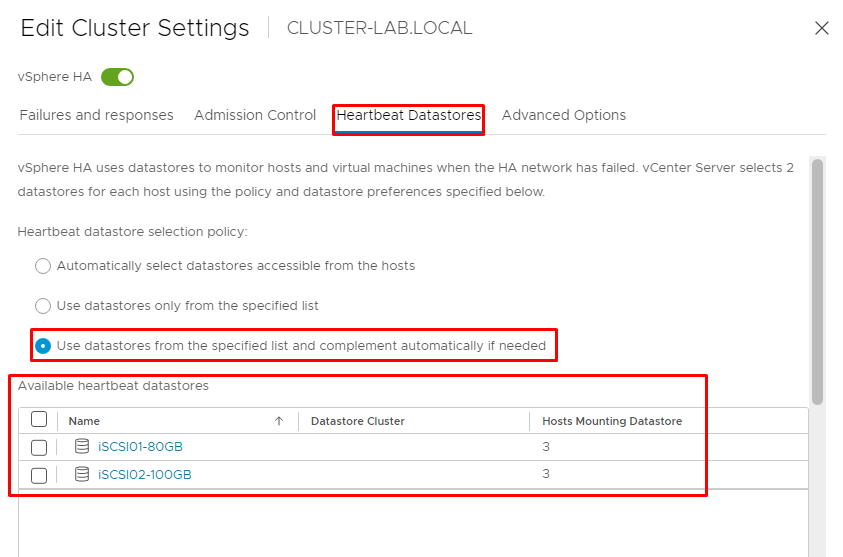

HA Heartbeat Datastores

vSphere HA uses datastores to monitor hosts and virtual machines when the HA network has failed. vCenter Server selects 2 datastores for each host using the policy and datastore preferences specified below.

In our lab, we have two iSCSI datastores:

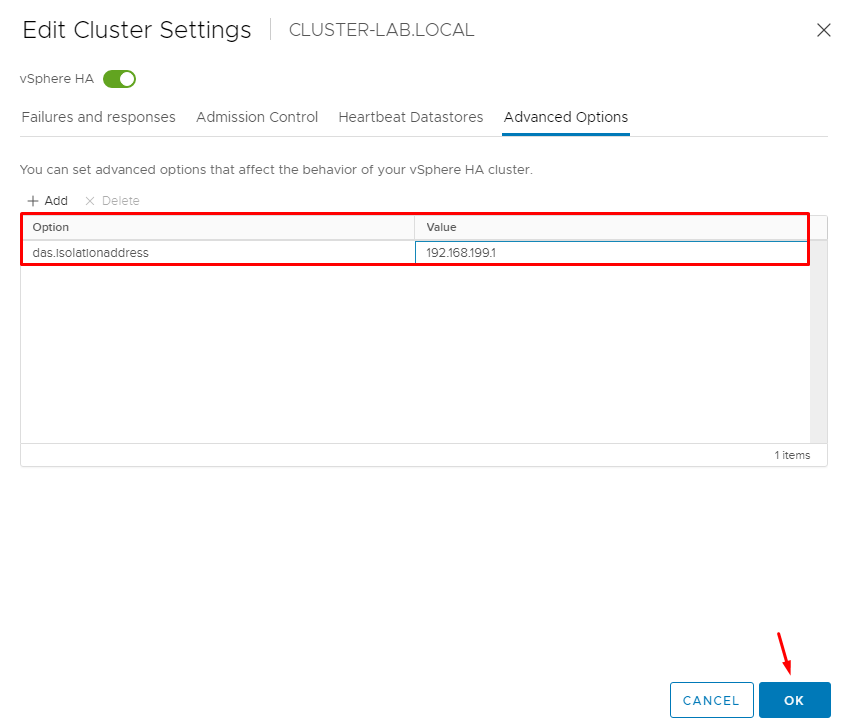

HA Advanced Options

It is possible to set advanced options that affect the behavior of your vSphere HA cluster. One advanced option is especially interesting:

das.isolationaddress

Sets the address that is pinged to determine whether a host is isolated from the network. This address is pinged only when heartbeats are not received from any other host in the cluster. By default, the gateway of the management network is used for the test. Generally, the default gateway is a reliable address, so it is a good choice for this type of test.

You can specify multiple isolation addresses (up to 10) for the cluster:

das.isolationaddressX, where X = 0-9.

Typically, you should specify one per management network. Specifying too many addresses makes isolation detection take too long. In our lab, the IP address of the default gateway for the management network is 192.168.199.1. For that reason, it is worth using this kind of configuration in your vSphere cluster:
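As a rough illustration of the naming scheme, the option names and values for a list of isolation addresses could be generated like this. The helper `isolation_address_options` is hypothetical and only builds the key/value pairs; it does not apply anything to a cluster.

```python
import ipaddress
from typing import Dict, List

# das.isolationaddress0 .. das.isolationaddress9
MAX_ISOLATION_ADDRESSES = 10


def isolation_address_options(addresses: List[str]) -> Dict[str, str]:
    """Map each isolation address to its das.isolationaddressX option name."""
    if len(addresses) > MAX_ISOLATION_ADDRESSES:
        raise ValueError("at most 10 isolation addresses are supported")
    options = {}
    for index, address in enumerate(addresses):
        # Raises ValueError if the string is not a valid IP address.
        ipaddress.ip_address(address)
        options[f"das.isolationaddress{index}"] = address
    return options


# The lab's management network gateway from this article.
print(isolation_address_options(["192.168.199.1"]))
# {'das.isolationaddress0': '192.168.199.1'}
```

The resulting pairs are what you would enter as option name and value in the Advanced Options section of the vSphere HA settings.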

Of course, to see more details about all HA Advanced Options, access the link below: