“How to Switch the MDM Cluster Mode” shows how to switch a PowerFlex MDM cluster from 3_node mode to 5_node mode.

We are running PowerFlex 4.5.2.

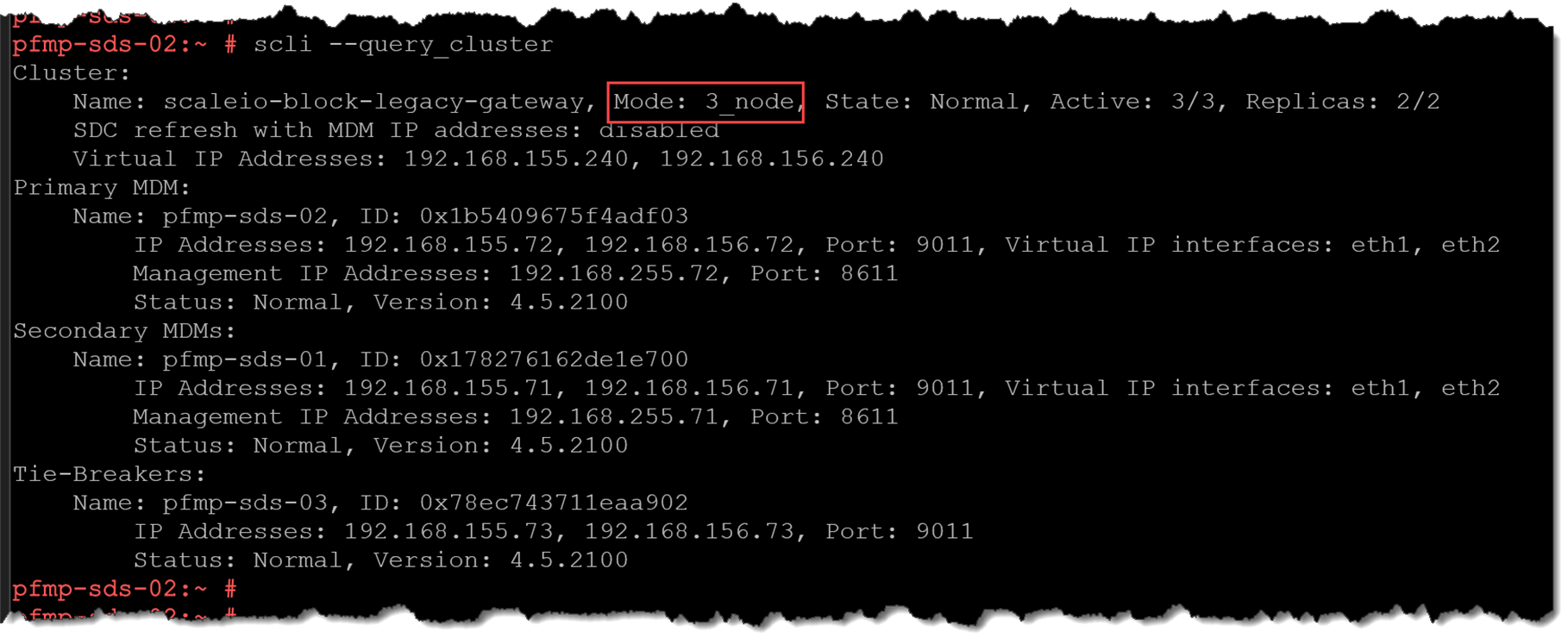

Currently, our MDM cluster has three nodes, as we can see below:

scli --query_cluster

Our goal here is to add two more nodes: pfmp-sds-04 and pfmp-sds-05.

Both nodes already have the necessary RPM packages installed:

pfmp-sds-04:~ # rpm -qa | grep -i EMC

EMC-ScaleIO-lia-4.5-2100.105.sles15.4.x86_64

EMC-ScaleIO-activemq-5.18.3-68.noarch

EMC-ScaleIO-sds-4.5-2100.105.sles15.4.x86_64

EMC-ScaleIO-mdm-4.5-2100.105.sles15.4.x86_64

pfmp-sds-05:~ # rpm -qa | grep -i EMC

EMC-ScaleIO-lia-4.5-2100.105.sles15.4.x86_64

EMC-ScaleIO-activemq-5.18.3-68.noarch

EMC-ScaleIO-sds-4.5-2100.105.sles15.4.x86_64

EMC-ScaleIO-mdm-4.5-2100.105.sles15.4.x86_64

Adding Standby MDM Nodes

Since our MDM cluster is currently a three-node cluster (3_node), we first need to add both additional nodes as standby MDMs.

The following command adds the node “pfmp-sds-04” as a standby MDM with the “manager” role:

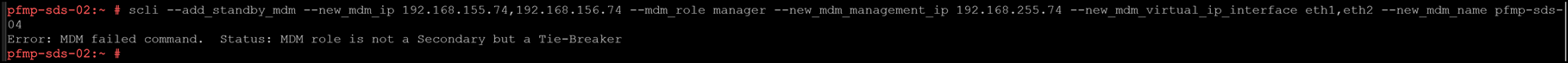

scli --add_standby_mdm --new_mdm_ip 192.168.155.74,192.168.156.74 --mdm_role manager --new_mdm_management_ip 192.168.255.74 --new_mdm_virtual_ip_interface eth1,eth2

After executing the command, we had an error:

“Error: MDM failed command. Status: MDM role is not a Secondary but a Tie-Breaker”

In this case, the error means that the MDM service on the node is configured as a Tie-Breaker, so the node cannot be added with the manager (Secondary) role.

So, to fix this error, we need to access the node to be added (pfmp-sds-04) and execute the following steps:

1. Edit the file “/opt/emc/scaleio/mdm/cfg/conf.txt” and change the following line:

FROM:

actor_role_is_manager=0

TO:

actor_role_is_manager=1

2. Delete and recreate the MDM service:

/opt/emc/scaleio/mdm/bin/delete_service.sh

/opt/emc/scaleio/mdm/bin/create_service.sh

3. Check the MDM service status – it must be running:

systemctl status mdm

Afterward, try to add the manager node again:

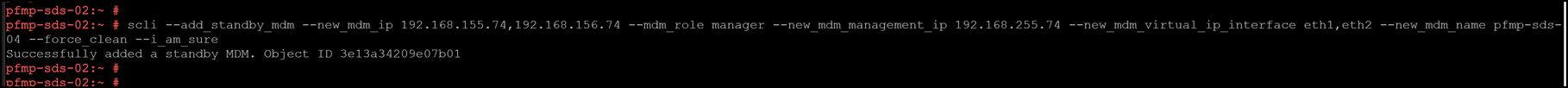

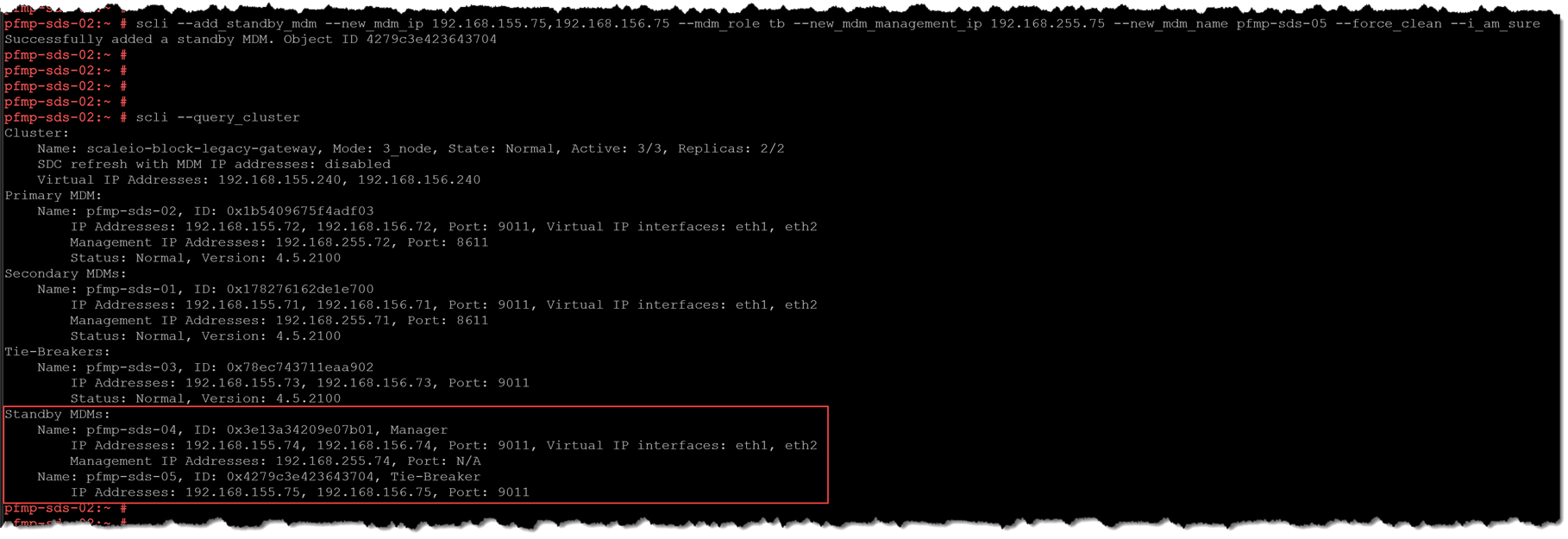

scli --add_standby_mdm --new_mdm_ip 192.168.155.74,192.168.156.74 --mdm_role manager --new_mdm_management_ip 192.168.255.74 --new_mdm_virtual_ip_interface eth1,eth2 --new_mdm_name pfmp-sds-04 --force_clean --i_am_sure

As shown, the node has been added successfully with the manager role:

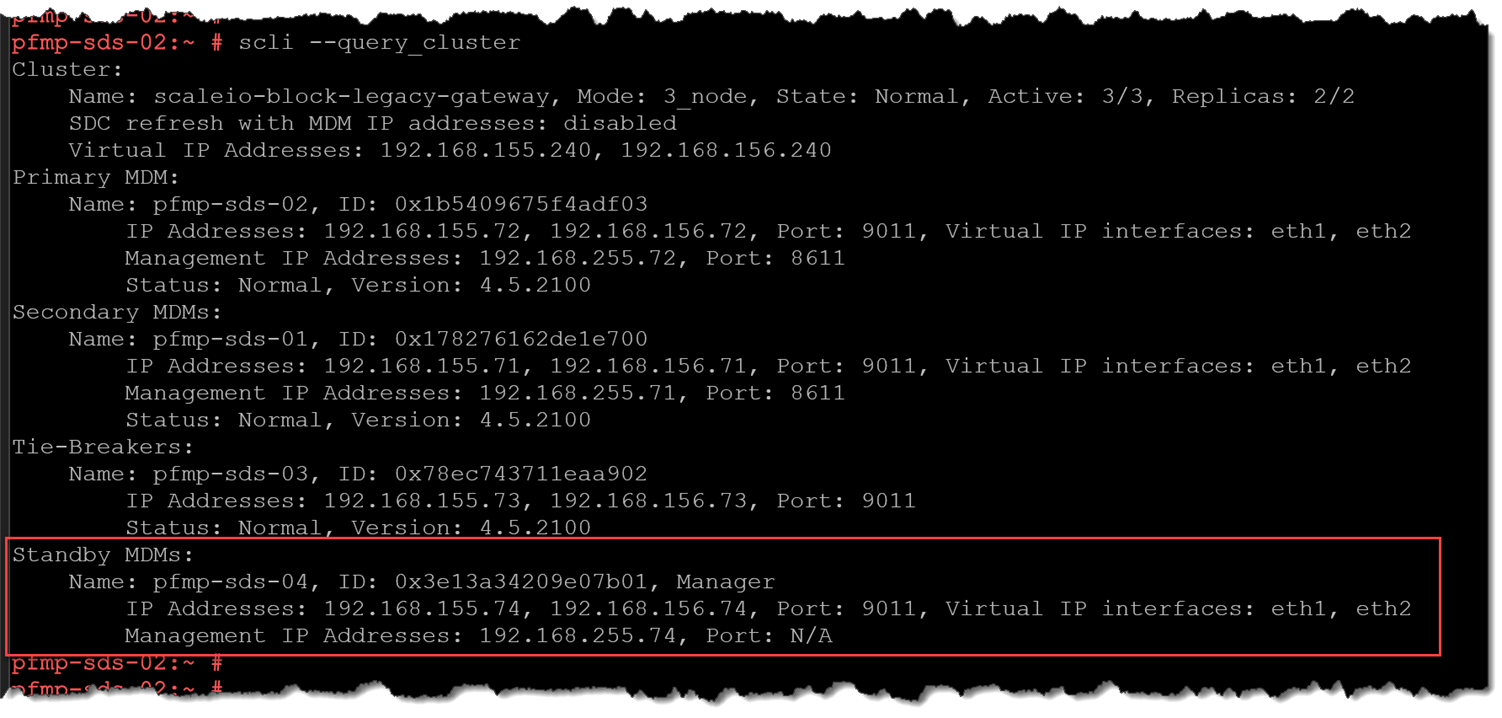
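For reference, step 1 of the role fix above (changing actor_role_is_manager from 0 to 1) could be scripted roughly as follows. This is only a sketch: the function name `set_manager_role` is hypothetical, and it assumes the line in conf.txt reads exactly `actor_role_is_manager=0` with no surrounding whitespace. It keeps a backup copy next to the original file.

```shell
#!/bin/sh
# Sketch: switch an MDM conf file from Tie-Breaker to manager role.
# set_manager_role is a hypothetical helper, not part of PowerFlex.

set_manager_role() {
    conf_file="$1"

    # Stop early if the file does not exist.
    [ -f "$conf_file" ] || { echo "missing: $conf_file" >&2; return 1; }

    # Keep a backup, then rewrite only the exact "key=0" line.
    cp "$conf_file" "$conf_file.bak" || return 1
    sed 's/^actor_role_is_manager=0$/actor_role_is_manager=1/' \
        "$conf_file.bak" > "$conf_file" || return 1

    # Succeed only if the manager flag is now set.
    grep -q '^actor_role_is_manager=1$' "$conf_file"
}

# Usage on the node being added (paths and commands as in the steps above):
#   set_manager_role /opt/emc/scaleio/mdm/cfg/conf.txt &&
#     /opt/emc/scaleio/mdm/bin/delete_service.sh &&
#     /opt/emc/scaleio/mdm/bin/create_service.sh &&
#     systemctl status mdm
```

The function returns a non-zero status if the flag could not be set, so the service is not recreated when the edit did not take effect.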

Checking the cluster, we can now see the added node in the Standby MDM section:

scli --query_cluster

The next node will be added with the “Tie-Breaker” role:

scli --add_standby_mdm --new_mdm_ip 192.168.155.75,192.168.156.75 --mdm_role tb --new_mdm_management_ip 192.168.255.75 --new_mdm_name pfmp-sds-05 --force_clean --i_am_sure

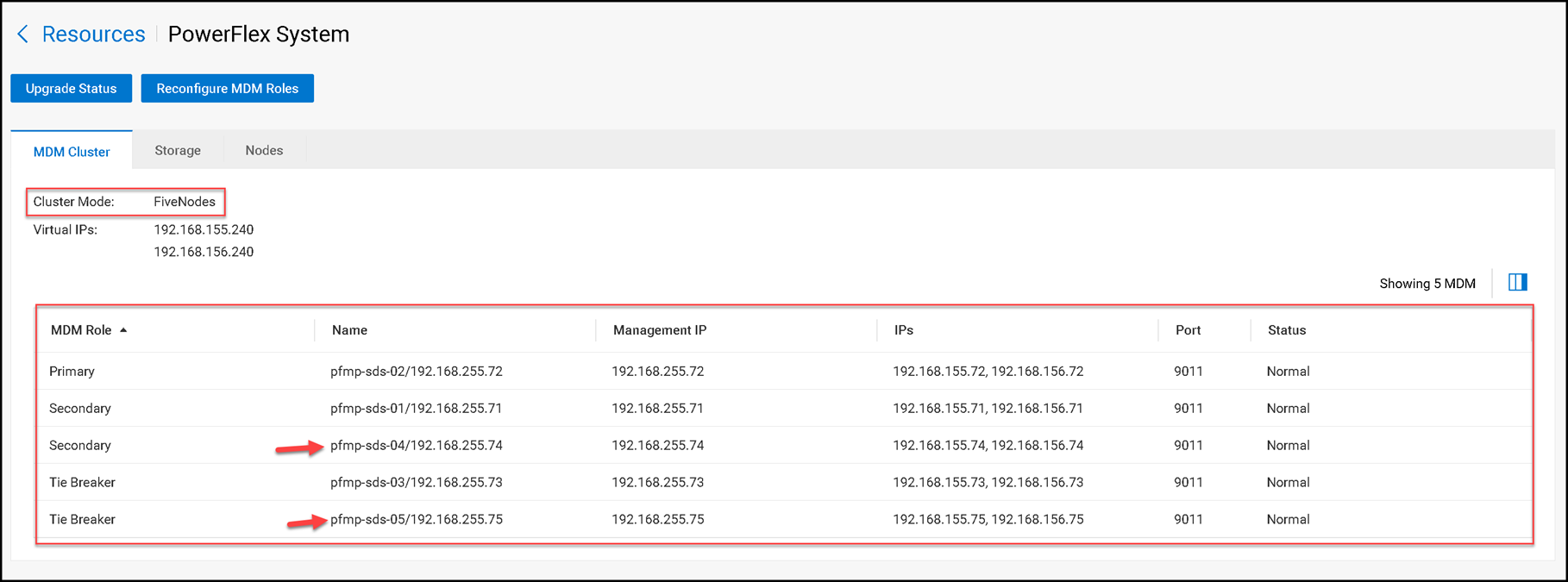
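Because the two `--add_standby_mdm` calls differ only in a few values, it can help to assemble them from parameters so that both nodes are added consistently. The sketch below only prints the command instead of running it. The helper name `build_add_standby_cmd` is hypothetical; the flags are the ones used in this article, and the virtual IP interface flag is added only when a fifth argument is given (as for the manager node).

```shell
#!/bin/sh
# Sketch: print the scli command that adds a standby MDM.
# build_add_standby_cmd NAME ROLE DATA_IPS MGMT_IP [VIP_INTERFACES]
# The helper is hypothetical; it does not call scli itself.

build_add_standby_cmd() {
    name="$1"
    role="$2"          # "manager" or "tb"
    data_ips="$3"      # comma-separated data IPs
    mgmt_ip="$4"
    vip_ifaces="${5:-}"

    cmd="scli --add_standby_mdm --new_mdm_ip $data_ips --mdm_role $role"
    cmd="$cmd --new_mdm_management_ip $mgmt_ip"

    # Only the manager node in this article sets virtual IP interfaces.
    if [ -n "$vip_ifaces" ]; then
        cmd="$cmd --new_mdm_virtual_ip_interface $vip_ifaces"
    fi

    cmd="$cmd --new_mdm_name $name --force_clean --i_am_sure"
    printf '%s\n' "$cmd"
}

# Usage (values from this article):
#   build_add_standby_cmd pfmp-sds-04 manager 192.168.155.74,192.168.156.74 192.168.255.74 eth1,eth2
#   build_add_standby_cmd pfmp-sds-05 tb 192.168.155.75,192.168.156.75 192.168.255.75
```

Printing the command first makes it easy to review the values before running it against the cluster.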

Switching the MDM Cluster Mode to 5_node

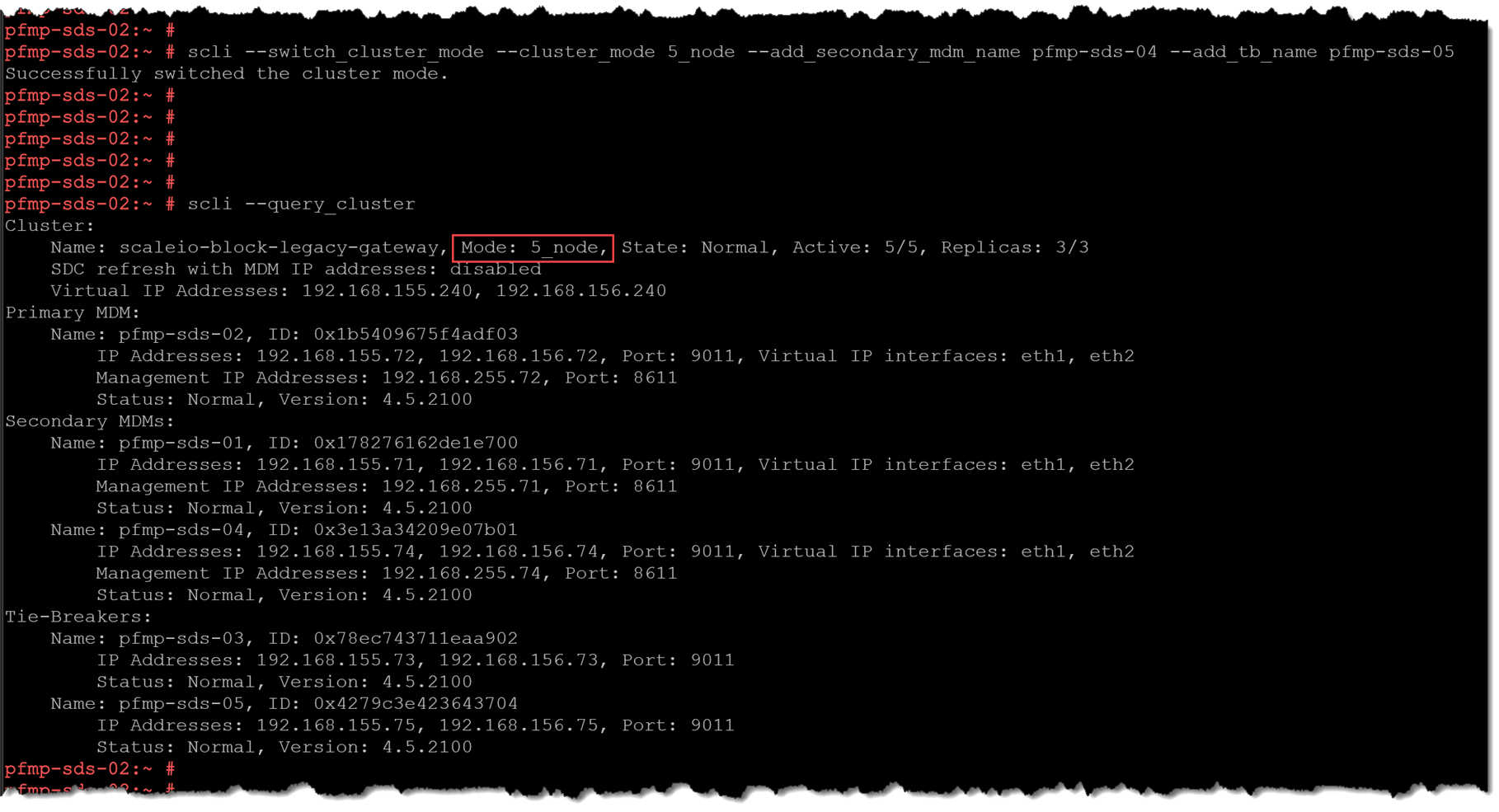

To switch our MDM cluster from 3_node to 5_node, we can use the following command:

scli --switch_cluster_mode --cluster_mode 5_node --add_secondary_mdm_name pfmp-sds-04 --add_tb_name pfmp-sds-05

As shown, the switchover process has been executed successfully. Now, our MDM cluster is a five-node cluster:

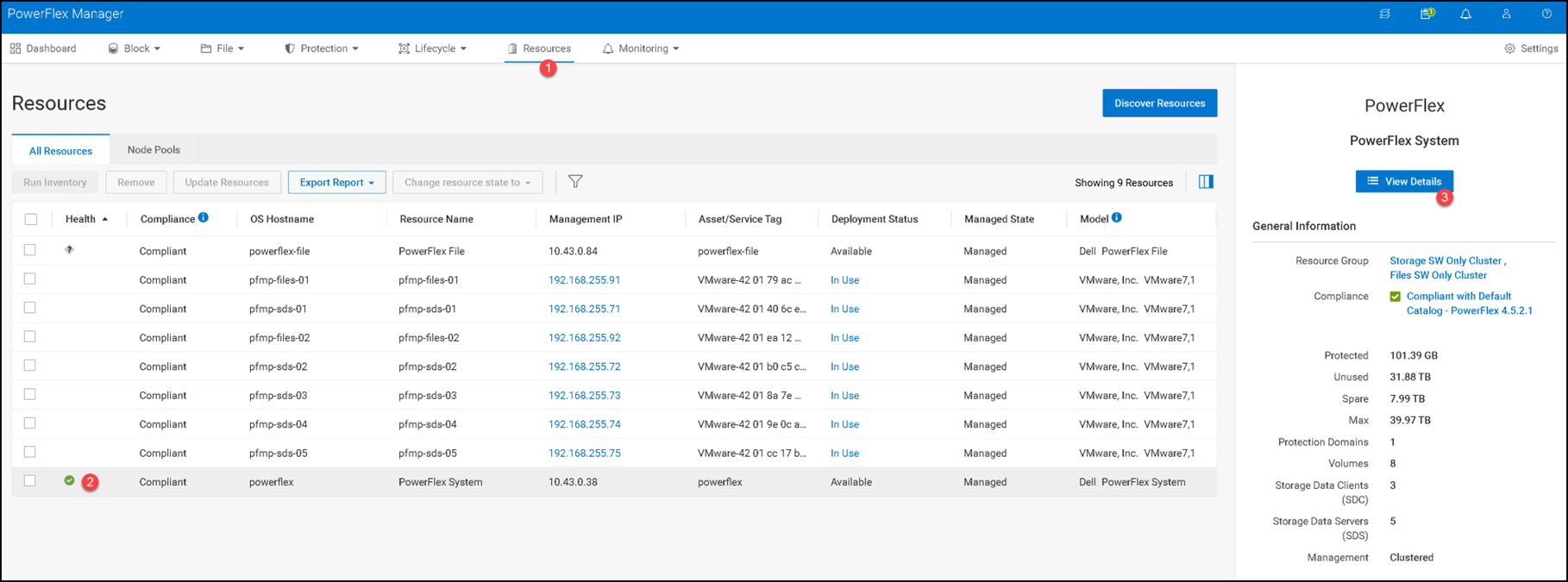

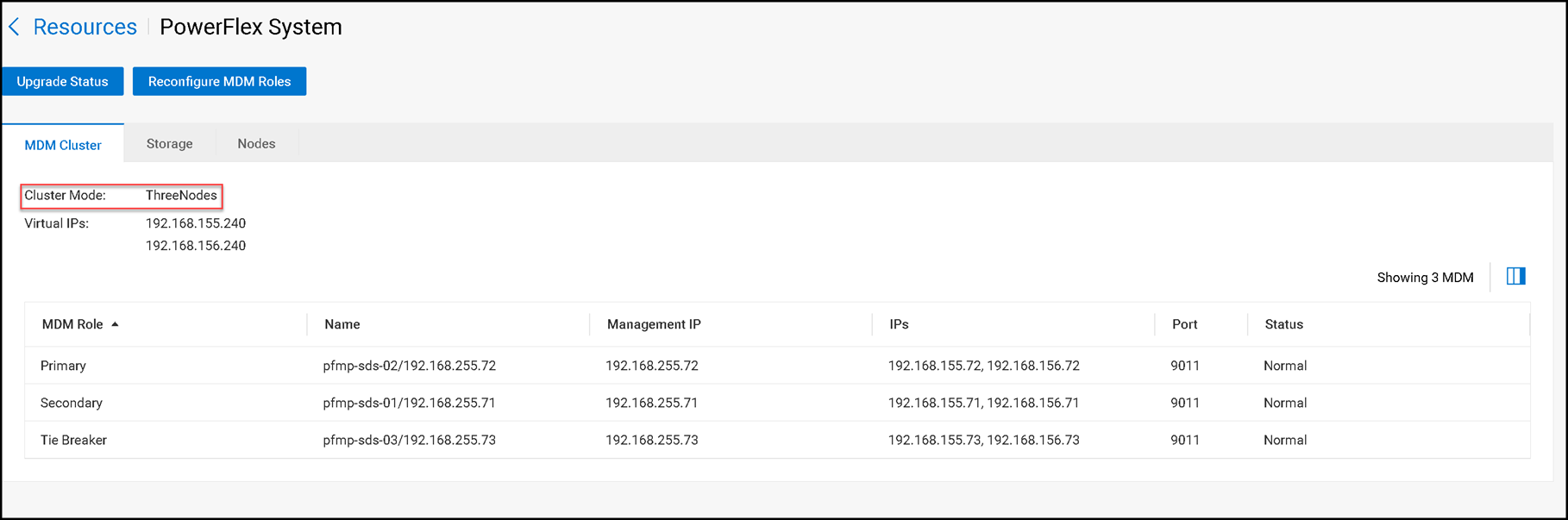

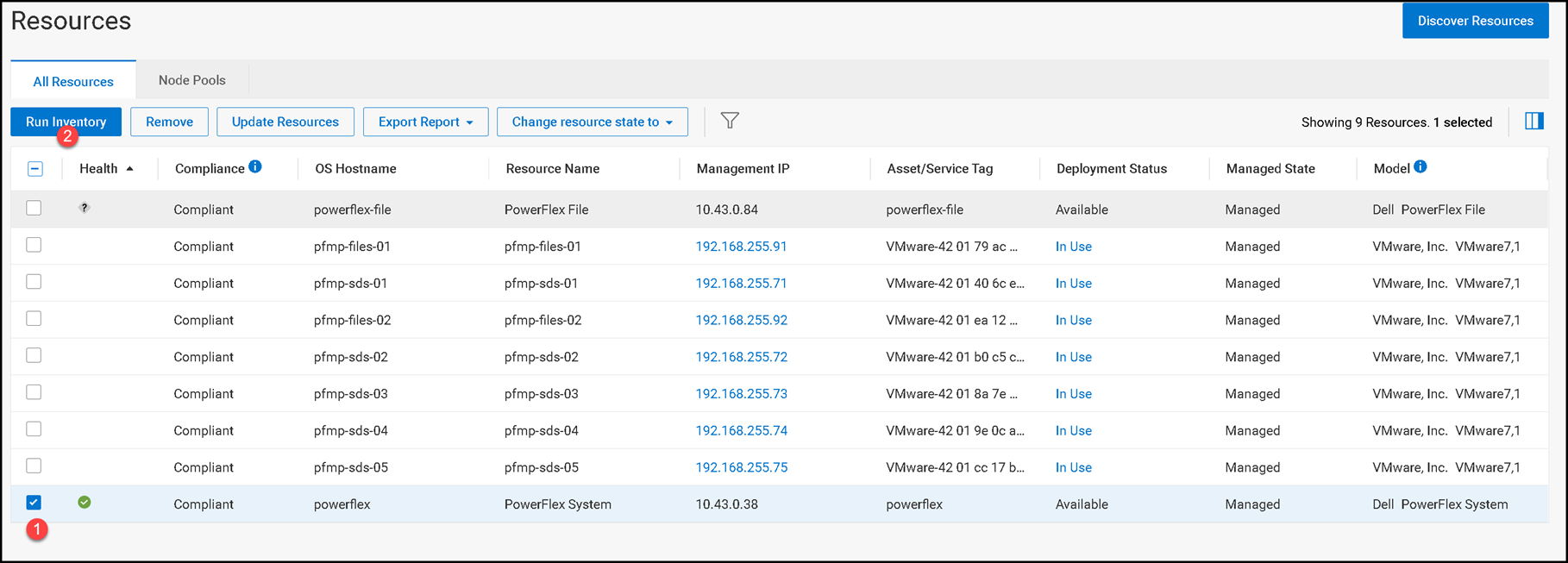
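If we want to confirm the new mode from a script rather than by eye, a small filter over the `scli --query_cluster` output is enough. The sketch below assumes that the output contains a line of the form `Mode: 5_node, ...`; that format is an assumption here and should be checked against the real output on your system. The function name `cluster_mode` is hypothetical.

```shell
#!/bin/sh
# Sketch: extract the cluster mode from `scli --query_cluster` output.
# Assumes a line such as "Mode: 5_node, ..." exists in the output;
# cluster_mode is a hypothetical helper that reads that output on stdin.

cluster_mode() {
    # Print the value after "Mode:" up to the first comma or space,
    # using only the first matching line.
    sed -n 's/^[[:space:]]*Mode:[[:space:]]*\([^,[:space:]]*\).*/\1/p' | head -n 1
}

# Usage:
#   mode=$(scli --query_cluster | cluster_mode)
#   [ "$mode" = "5_node" ] && echo "cluster is in 5_node mode"
```

If the function prints nothing, the assumed `Mode:` line was not found and the output should be inspected manually.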

This change is not reflected in the PowerFlex Manager UI immediately. On the Resources page, we continue to see a three-node cluster:

To update the view right away, we can run the inventory for the PowerFlex System resource, as we can see in the following pictures:

That’s it 🙂