This article, Useful RVC Commands for Troubleshooting vSAN, collects some RVC commands that help with the vSAN troubleshooting process.

First, what is RVC?

RVC stands for Ruby vSphere Console. It is an interactive command-line console available on the vCenter Server and is based on the popular RbVmomi Ruby interface. RVC is already embedded when the vCenter Server is deployed, so we do not need to install any additional package. We can use RVC to troubleshoot several things in a vSphere environment, and for vSAN clusters it can be a primary troubleshooting tool.

I would like to share an excellent PDF document about RVC on vSAN. Feel free to access this document; some of the examples provided here are taken from it.

Note: I have heard that VMware will discontinue RVC in the near future and that its functions can be executed with other tools, “esxcli” for example. Regardless, we will provide some interesting commands for troubleshooting vSAN.

How to access the RVC console?

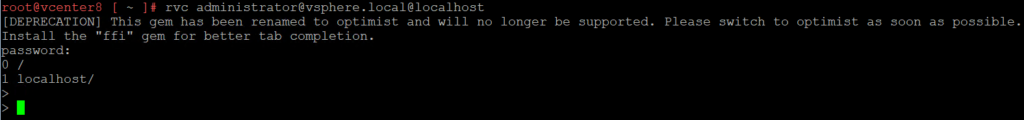

To open the console, we access the vCenter Server by SSH and then start an RVC connection with the command below:

rvc administrator@vsphere.local@localhost

In this example, the user is “administrator@vsphere.local”. Change it to match the user of your environment if necessary. Here is an example of RVC console access:

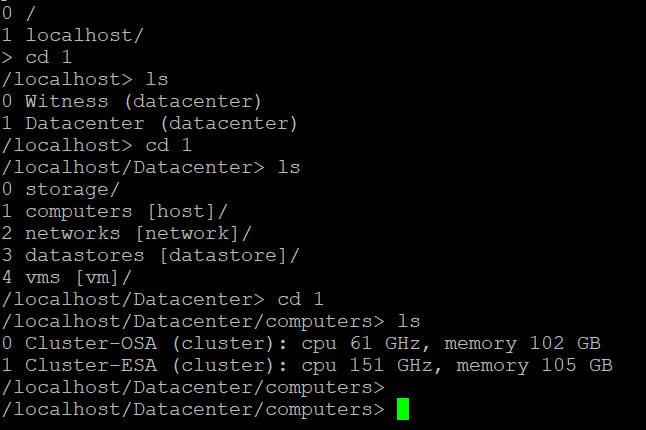

The RVC console behaves much like a file system command line, and we can “navigate” inside it. For example, once logged in, we can use the command “ls” to list the objects at the current level and the command “cd” to move to another level.

In the example below, we are at the “computers” level of the RVC console.

We have two clusters, and both are visible at this level:

/localhost/Datacenter/computers> ls

0 Cluster-OSA (cluster): cpu 61 GHz, memory 102 GB

1 Cluster-ESA (cluster): cpu 151 GHz, memory 105 GB
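Most of the commands shown later take the numeric index from this listing as their argument. As a small illustration outside RVC, here is a minimal Python sketch that maps those indexes to object names. It assumes the listing was copied into a string and that every object line follows the "index name (type): details" shape seen above; the function name `parse_ls` is our own and not part of RVC.

```python
import re

# Text copied from the `ls` output above.
LS_OUTPUT = """\
0 Cluster-OSA (cluster): cpu 61 GHz, memory 102 GB
1 Cluster-ESA (cluster): cpu 151 GHz, memory 105 GB
"""

# index, name, object type in parentheses, then free-form details
LINE_RE = re.compile(r"^(\d+)\s+(\S+)\s+\((\w+)\):\s*(.*)$")


def parse_ls(text):
    """Return a dict mapping each numeric index to (name, type, details)."""
    entries = {}
    for line in text.splitlines():
        match = LINE_RE.match(line.strip())
        if match:
            index, name, kind, details = match.groups()
            entries[int(index)] = (name, kind, details)
    return entries


entries = parse_ls(LS_OUTPUT)
for index, (name, kind, _details) in sorted(entries.items()):
    print(f"{index} -> {name} ({kind})")
```

Lines with a different shape, such as folder entries, are simply ignored by this sketch.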

Note: Some RVC vSAN commands are executed at the cluster level, others at the host level, the VM level, and so on. The help of each command shows the correct syntax.

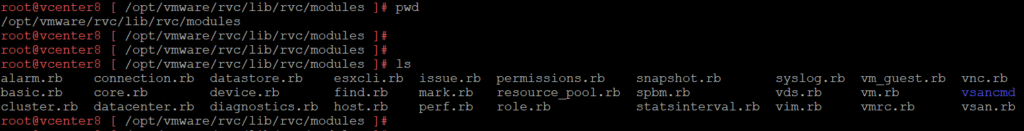

Just out of curiosity, the RVC modules can be found in the directory /opt/vmware/rvc/lib/rvc/modules on the vCenter Server:

Below is a summary of all the commands that we will use here:

vsan.check_state

vsan.cluster_info

vsan.disks_info

vsan.disks_stats

vsan.health.cluster_status

vsan.health.health_summary

vsan.host_info

vsan.obj_status_report

vsan.object_info

vsan.resync_dashboard

vsan.vm_object_info

vsan.what_if_host_failures

vsan.check_state (command list)

The RVC command “vsan.check_state” checks whether VMs and vSAN objects are valid and accessible. We need to specify the cluster whose status we want to see:

/localhost/Datacenter/computers> ls

0 Cluster-OSA (cluster): cpu 61 GHz, memory 102 GB

1 Cluster-ESA (cluster): cpu 151 GHz, memory 105 GB

/localhost/Datacenter/computers> vsan.check_state 1 -r

2023-08-16 13:10:06 +0000: Step 1: Check for inaccessible vSAN objects

2023-08-16 13:10:11 +0000: Step 1b: Check for inaccessible vSAN objects, again

2023-08-16 13:10:12 +0000: Step 2: Check for invalid/inaccessible VMs

2023-08-16 13:10:12 +0000: Step 2b: Check for invalid/inaccessible VMs again

2023-08-16 13:10:12 +0000: Step 3: Check for VMs for which VC/hostd/vmx are out of sync

Did not find VMs for which VC/hostd/vmx are out of sync

/localhost/Datacenter/computers>

vsan.cluster_info (command list)

The command “vsan.cluster_info” prints cluster, storage, and network information from all hosts in the cluster. Because the output is very extensive, we only show the output for the first ESXi host:

/localhost/Datacenter/computers> vsan.cluster_info 1

2023-08-16 13:13:15 +0000: Fetching host info from esxi8-02.vxrail.local (may take a moment) ...

2023-08-16 13:13:15 +0000: Fetching host info from esxi8-03.vxrail.local (may take a moment) ...

2023-08-16 13:13:15 +0000: Fetching host info from esxi8-01.vxrail.local (may take a moment) ...

2023-08-16 13:13:15 +0000: Fetching host info from esxi8-09.vxrail.local (may take a moment) ...

2023-08-16 13:13:15 +0000: Fetching host info from esxi8-08.vxrail.local (may take a moment) ...

2023-08-16 13:13:15 +0000: Fetching host info from esxi8-10.vxrail.local (may take a moment) ...

2023-08-16 13:13:15 +0000: Fetching host info from esxi8-04.vxrail.local (may take a moment) ...

2023-08-16 13:13:15 +0000: Fetching host info from esxi8-07.vxrail.local (may take a moment) ...

2023-08-16 13:13:15 +0000: Fetching host info from witness8.vxrail.local (may take a moment) ...

2023-08-16 13:13:15 +0000: Fetching host info from esxi8-06.vxrail.local (may take a moment) ...

2023-08-16 13:13:15 +0000: Fetching host info from esxi8-05.vxrail.local (may take a moment) ...

Host: esxi8-01.vxrail.local

Product: VMware ESXi 8.0.1 build-21495797

vSAN enabled: yes

Cluster info:

Cluster role: agent

Cluster UUID: 522233f7-745e-dc1b-62b0-6a03c897f168

Node UUID: 64232a5e-78b3-3dce-fff2-005056817346

Member UUIDs: ["64d4ec9c-aceb-8a0e-852e-005056811316", "64232a60-8ff7-c42b-a79e-00505681cd1e", "64232a69-83e9-77b6-9bb6-005056812316", "64232a62-67eb-bee8-5510-00505681abf3", "64d51071-c180-ebef-e851-00505681912c", "642477cb-f176-efef-ae47-0050568122ed", "64232a66-5cd4-452f-1cc9-00505681f504", "64d4eb80-0bc0-f743-88af-005056815638", "64232a5e-78b3-3dce-fff2-005056817346", "64d5106d-a1ee-bef9-5273-00505681df3c", "64232a50-80a7-4fff-eab8-00505681ac87"] (11)

Node evacuated: no

Storage info:

Auto claim: no

Disk Mappings:

None

FaultDomainInfo:

Preferred

NetworkInfo:

Adapter: vmk3 (10.10.10.11)

Adapter: vmk1 (172.31.200.11)

Data efficiency enabled: no

Encryption enabled: no
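One quick sanity check on this output is to confirm that the host's own Node UUID appears in the Member UUIDs list and that the list length matches the count printed in parentheses. The following is a minimal Python sketch of that check. It assumes the two relevant lines were copied from the output above into a string; it is only an offline illustration and not something RVC provides.

```python
import json
import re

# Two lines copied from the vsan.cluster_info output above.
HOST_INFO = """\
Node UUID: 64232a5e-78b3-3dce-fff2-005056817346
Member UUIDs: ["64d4ec9c-aceb-8a0e-852e-005056811316", "64232a60-8ff7-c42b-a79e-00505681cd1e", "64232a69-83e9-77b6-9bb6-005056812316", "64232a62-67eb-bee8-5510-00505681abf3", "64d51071-c180-ebef-e851-00505681912c", "642477cb-f176-efef-ae47-0050568122ed", "64232a66-5cd4-452f-1cc9-00505681f504", "64d4eb80-0bc0-f743-88af-005056815638", "64232a5e-78b3-3dce-fff2-005056817346", "64d5106d-a1ee-bef9-5273-00505681df3c", "64232a50-80a7-4fff-eab8-00505681ac87"] (11)
"""

node_uuid = re.search(r"^Node UUID: (\S+)$", HOST_INFO, re.MULTILINE).group(1)

# The bracketed list uses double-quoted strings, so it can be read as JSON.
members_match = re.search(
    r"^Member UUIDs: (\[.*\]) \((\d+)\)$", HOST_INFO, re.MULTILINE
)
member_uuids = json.loads(members_match.group(1))
reported_count = int(members_match.group(2))

print("members listed:", len(member_uuids))
print("count reported:", reported_count)
print("node is a member:", node_uuid in member_uuids)
```

In this lab the 11 members correspond to the 11 hosts queried at the top of the output, including the witness host.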

vsan.disks_info (command list)

The command “vsan.disks_info” displays information about the disks present on an ESXi host.

Note that we need to specify the host whose disks we want to see. In this example, the host is esxi8-01.vxrail.local:

/localhost/Datacenter/computers> ls

0 Cluster-OSA (cluster): cpu 61 GHz, memory 102 GB

1 Cluster-ESA (cluster): cpu 151 GHz, memory 105 GB

/localhost/Datacenter/computers> cd 1

/localhost/Datacenter/computers/Cluster-ESA> ls

0 hosts/

1 resourcePool [Resources]: cpu 151.91/151.91/normal, mem 104.24/104.24/normal

/localhost/Datacenter/computers/Cluster-ESA> cd 0

/localhost/Datacenter/computers/Cluster-ESA/hosts> ls

0 esxi8-01.vxrail.local (host): cpu 1*8*2.39 GHz, memory 51.00 GB

1 esxi8-03.vxrail.local (host): cpu 1*8*2.39 GHz, memory 51.00 GB

2 esxi8-02.vxrail.local (host): cpu 1*8*2.39 GHz, memory 51.00 GB

3 esxi8-07.vxrail.local (host): cpu 1*8*2.39 GHz, memory 51.00 GB

4 esxi8-08.vxrail.local (host): cpu 1*8*2.39 GHz, memory 51.00 GB

5 esxi8-09.vxrail.local (host): cpu 1*8*2.39 GHz, memory 34.00 GB

6 esxi8-10.vxrail.local (host): cpu 1*8*2.39 GHz, memory 34.00 GB

7 esxi8-04.vxrail.local (host): cpu 1*8*2.39 GHz, memory 51.00 GB

8 esxi8-05.vxrail.local (host): cpu 1*8*2.39 GHz, memory 51.00 GB

9 esxi8-06.vxrail.local (host): cpu 1*8*2.39 GHz, memory 51.00 GB

/localhost/Datacenter/computers/Cluster-ESA/hosts> vsan.disks_info 0

2023-08-16 13:16:53 +0000: Gathering disk information for host esxi8-01.vxrail.local

2023-08-16 13:16:53 +0000: Done gathering disk information

Disks on host esxi8-01.vxrail.local:

+--------------------------------------------------------+-------+-------+----------------------------------------------------------------------------------------+

| DisplayName | isSSD | Size | State |

+--------------------------------------------------------+-------+-------+----------------------------------------------------------------------------------------+

| Local NVMe Disk (eui.56bb4b8893c63885000c296810a5618f) | SSD | 64 GB | ineligible (General vSAN error.) |

| NVMe VMware Virtual NVMe Disk | | | |

+--------------------------------------------------------+-------+-------+----------------------------------------------------------------------------------------+

| Local VMware Disk (mpx.vmhba0:C0:T1:L0) | SSD | 64 GB | ineligible (General vSAN error.) |

| VMware Virtual disk | | | |

+--------------------------------------------------------+-------+-------+----------------------------------------------------------------------------------------+

| Local NVMe Disk (eui.1979d3b3acbcb7da000c2960a6dda8b2) | SSD | 64 GB | ineligible (General vSAN error.) |

| NVMe VMware Virtual NVMe Disk | | | |

+--------------------------------------------------------+-------+-------+----------------------------------------------------------------------------------------+

| Local VMware Disk (mpx.vmhba0:C0:T0:L0) | MD | 20 GB | ineligible (Existing partitions found on disk 'mpx.vmhba0:C0:T0:L0'.) |

| VMware Virtual disk | | | |

| | | | Partition table: |

| | | | 5: 1.00 GB, type = vfat |

| | | | 6: 1.00 GB, type = vfat |

| | | | 7: 17.90 GB, type = 248 |

+--------------------------------------------------------+-------+-------+----------------------------------------------------------------------------------------+

| Local NVMe Disk (eui.cb897812bfa54e4b000c296fd965bc7c) | SSD | 10 GB | ineligible (Existing partitions found on disk 'eui.cb897812bfa54e4b000c296fd965bc7c'.) |

| NVMe VMware Virtual NVMe Disk | | | |

| | | | Partition table: |

| | | | 1: 10.00 GB, type = vmfs ('esxi8-01-Datastore') |

+--------------------------------------------------------+-------+-------+----------------------------------------------------------------------------------------+

| Local NVMe Disk (eui.81228f6c77a38151000c29661ea389b0) | SSD | 64 GB | ineligible (General vSAN error.) |

| NVMe VMware Virtual NVMe Disk | | | |

+--------------------------------------------------------+-------+-------+----------------------------------------------------------------------------------------+

Note: In the above example, we have 4 disks (64 GB each) in our vSAN ESA cluster. I do not know why these disks are classified as “ineligible (General vSAN error.)”.

But if we run the same command against a host in a vSAN OSA cluster, we can see the state “inUse”, indicating that the disk is being used by the vSAN cluster:

/localhost/Datacenter/computers/Cluster-OSA> cd 0

/localhost/Datacenter/computers/Cluster-OSA/hosts> ls

0 esxi8-11.vxrail.local (host): cpu 1*8*2.39 GHz, memory 42.00 GB

1 esxi8-12.vxrail.local (host): cpu 1*8*2.39 GHz, memory 42.00 GB

2 esxi8-13.vxrail.local (host): cpu 1*8*2.39 GHz, memory 42.00 GB

3 esxi8-14.vxrail.local (host): cpu 1*8*2.39 GHz, memory 42.00 GB

/localhost/Datacenter/computers/Cluster-OSA/hosts> vsan.disks_info 0

2023-08-16 13:24:58 +0000: Gathering disk information for host esxi8-11.vxrail.local

2023-08-16 13:24:59 +0000: Done gathering disk information

Disks on host esxi8-11.vxrail.local:

+--------------------------------------------------------+-------+-------+----------------------------------------------------------------------------------------+

| DisplayName | isSSD | Size | State |

+--------------------------------------------------------+-------+-------+----------------------------------------------------------------------------------------+

| Local NVMe Disk (eui.420c1fc6b033881f000c29624f3f5cc9) | SSD | 64 GB | inUse |

| NVMe VMware Virtual N | | | vSAN Format Version: v17 |

+--------------------------------------------------------+-------+-------+----------------------------------------------------------------------------------------+

| Local NVMe Disk (eui.1fbe6f9c40b8902f000c296e2f5f0478) | SSD | 10 GB | ineligible (Existing partitions found on disk 'eui.1fbe6f9c40b8902f000c296e2f5f0478'.) |

| NVMe VMware Virtual N | | | |

| | | | Partition table: |

| | | | 1: 10.00 GB, type = vmfs ('esxi8-11-Datastore') |

+--------------------------------------------------------+-------+-------+----------------------------------------------------------------------------------------+

| Local NVMe Disk (eui.19bfa55a0413dea8000c296688e10a8a) | SSD | 32 GB | inUse |

| NVMe VMware Virtual N | | | vSAN Format Version: v17 |

+--------------------------------------------------------+-------+-------+----------------------------------------------------------------------------------------+

| Local VMware Disk (mpx.vmhba0:C0:T0:L0) | MD | 20 GB | ineligible (Existing partitions found on disk 'mpx.vmhba0:C0:T0:L0'.) |

| VMware Virtual disk | | | |

| | | | Partition table: |

| | | | 5: 1.00 GB, type = vfat |

| | | | 6: 1.00 GB, type = vfat |

| | | | 7: 17.90 GB, type = 248 |

+--------------------------------------------------------+-------+-------+----------------------------------------------------------------------------------------+
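When a host has many disks, it can help to reduce this table to one line per disk. Below is a minimal Python sketch that does so for text copied from the table above. It assumes the four-column layout shown here and treats rows with an empty Size cell as continuation rows; the function name `parse_disks` is our own.

```python
# Rows copied from the vsan.disks_info table above (separator lines omitted).
DISKS_INFO = """\
| DisplayName | isSSD | Size | State |
| Local NVMe Disk (eui.420c1fc6b033881f000c29624f3f5cc9) | SSD | 64 GB | inUse |
| NVMe VMware Virtual N | | | vSAN Format Version: v17 |
| Local NVMe Disk (eui.1fbe6f9c40b8902f000c296e2f5f0478) | SSD | 10 GB | ineligible (Existing partitions found on disk 'eui.1fbe6f9c40b8902f000c296e2f5f0478'.) |
| NVMe VMware Virtual N | | | |
| Local NVMe Disk (eui.19bfa55a0413dea8000c296688e10a8a) | SSD | 32 GB | inUse |
| Local VMware Disk (mpx.vmhba0:C0:T0:L0) | MD | 20 GB | ineligible (Existing partitions found on disk 'mpx.vmhba0:C0:T0:L0'.) |
"""


def parse_disks(text):
    """Return one dict per disk, skipping header and continuation rows."""
    disks = []
    for line in text.splitlines():
        if not line.startswith("|"):
            continue
        cells = [cell.strip() for cell in line.strip().strip("|").split("|")]
        if len(cells) != 4 or cells[0] == "DisplayName":
            continue
        name, disk_type, size, state = cells
        if not size:  # continuation row (model name, partition table, ...)
            continue
        disks.append(
            {
                "name": name,
                "type": disk_type,
                "size": size,
                "state": state.split(" ")[0],  # keep only inUse / ineligible
            }
        )
    return disks


disks = parse_disks(DISKS_INFO)
in_use = [disk for disk in disks if disk["state"] == "inUse"]
for disk in disks:
    print(f'{disk["size"]:>6}  {disk["state"]:<10}  {disk["name"]}')
```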

vsan.disks_stats (command list)

The command “vsan.disks_stats” shows stats on all disks in the vSAN cluster. In this example, we can see all disks of all ESXi hosts under the cluster “Cluster-OSA”:

/localhost/Datacenter/computers> ls

0 Cluster-OSA (cluster): cpu 61 GHz, memory 102 GB

1 Cluster-ESA (cluster): cpu 151 GHz, memory 105 GB

/localhost/Datacenter/computers>

/localhost/Datacenter/computers> vsan.disks_stats 0

2023-08-16 13:44:55 +0000: Fetching vSAN disk info from esxi8-11.vxrail.local (may take a moment) ...

2023-08-16 13:44:55 +0000: Fetching vSAN disk info from esxi8-12.vxrail.local (may take a moment) ...

2023-08-16 13:44:55 +0000: Fetching vSAN disk info from esxi8-14.vxrail.local (may take a moment) ...

2023-08-16 13:44:55 +0000: Fetching vSAN disk info from esxi8-13.vxrail.local (may take a moment) ...

2023-08-16 13:44:56 +0000: Done fetching vSAN disk infos

+--------------------------------------+-----------------------+----------+------+----------+--------+----------+----------+----------+----------+----------+---------+----------+----------+

| | | | Num | Capacity | | | Physical | Physical | Physical | Logical | Logical | Logical | Status |

| DisplayName | Host | DiskTier | Comp | Total | Used | Reserved | Capacity | Used | Reserved | Capacity | Used | Reserved | Health |

+--------------------------------------+-----------------------+----------+------+----------+--------+----------+----------+----------+----------+----------+---------+----------+----------+

| eui.19bfa55a0413dea8000c296688e10a8a | esxi8-11.vxrail.local | Cache | 0 | 32.00 GB | 0.00 % | 0.00 % | N/A | N/A | N/A | N/A | N/A | N/A | OK (v17) |

| eui.420c1fc6b033881f000c29624f3f5cc9 | esxi8-11.vxrail.local | Capacity | 7 | 63.99 GB | 2.65 % | 0.23 % | N/A | N/A | N/A | N/A | N/A | N/A | OK (v17) |

+--------------------------------------+-----------------------+----------+------+----------+--------+----------+----------+----------+----------+----------+---------+----------+----------+

| eui.149646f30aae05e8000c296ff5f57f0f | esxi8-12.vxrail.local | Cache | 0 | 32.00 GB | 0.00 % | 0.00 % | N/A | N/A | N/A | N/A | N/A | N/A | OK (v17) |

| eui.6f2d9d4049d803a5000c29675ca28b6e | esxi8-12.vxrail.local | Capacity | 7 | 63.99 GB | 2.60 % | 0.23 % | N/A | N/A | N/A | N/A | N/A | N/A | OK (v17) |

+--------------------------------------+-----------------------+----------+------+----------+--------+----------+----------+----------+----------+----------+---------+----------+----------+

| eui.0fe645c2abbeeab1000c2960a6a069af | esxi8-13.vxrail.local | Cache | 0 | 32.00 GB | 0.00 % | 0.00 % | N/A | N/A | N/A | N/A | N/A | N/A | OK (v17) |

| eui.002b8c45347d2c54000c296b20b2e70e | esxi8-13.vxrail.local | Capacity | 7 | 63.99 GB | 2.62 % | 0.23 % | N/A | N/A | N/A | N/A | N/A | N/A | OK (v17) |

+--------------------------------------+-----------------------+----------+------+----------+--------+----------+----------+----------+----------+----------+---------+----------+----------+

| eui.cd75aedcd23fd914000c29662e30f59c | esxi8-14.vxrail.local | Cache | 0 | 32.00 GB | 0.00 % | 0.00 % | N/A | N/A | N/A | N/A | N/A | N/A | OK (v17) |

| eui.505fad9c9a598739000c296e5205ee96 | esxi8-14.vxrail.local | Capacity | 7 | 63.99 GB | 2.65 % | 0.23 % | N/A | N/A | N/A | N/A | N/A | N/A | OK (v17) |

+--------------------------------------+-----------------------+----------+------+----------+--------+----------+----------+----------+----------+----------+---------+----------+----------+

/localhost/Datacenter/computers>
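The Used column is a percentage of the Capacity Total column, so the consumed space per disk is a simple multiplication. Here is a minimal Python sketch of that arithmetic for a few rows copied from the table above. It assumes the 14-column layout shown here and that capacities are always printed in GB; `parse_stats` is a name of our own.

```python
# Rows copied from the vsan.disks_stats table above (first two hosts only).
DISKS_STATS = """\
| eui.19bfa55a0413dea8000c296688e10a8a | esxi8-11.vxrail.local | Cache | 0 | 32.00 GB | 0.00 % | 0.00 % | N/A | N/A | N/A | N/A | N/A | N/A | OK (v17) |
| eui.420c1fc6b033881f000c29624f3f5cc9 | esxi8-11.vxrail.local | Capacity | 7 | 63.99 GB | 2.65 % | 0.23 % | N/A | N/A | N/A | N/A | N/A | N/A | OK (v17) |
| eui.149646f30aae05e8000c296ff5f57f0f | esxi8-12.vxrail.local | Cache | 0 | 32.00 GB | 0.00 % | 0.00 % | N/A | N/A | N/A | N/A | N/A | N/A | OK (v17) |
| eui.6f2d9d4049d803a5000c29675ca28b6e | esxi8-12.vxrail.local | Capacity | 7 | 63.99 GB | 2.60 % | 0.23 % | N/A | N/A | N/A | N/A | N/A | N/A | OK (v17) |
"""


def parse_stats(text):
    """Return one dict per disk row; header and separator lines are skipped."""
    rows = []
    for line in text.splitlines():
        cells = [cell.strip() for cell in line.strip().strip("|").split("|")]
        # Data rows have 14 cells and a numeric component count.
        if len(cells) != 14 or not cells[3].isdigit():
            continue
        rows.append(
            {
                "disk": cells[0],
                "host": cells[1],
                "tier": cells[2],
                "components": int(cells[3]),
                "total_gb": float(cells[4].split()[0]),
                "used_pct": float(cells[5].split()[0]),
                "health": cells[13],
            }
        )
    return rows


rows = parse_stats(DISKS_STATS)
for row in rows:
    used_gb = row["total_gb"] * row["used_pct"] / 100
    print(
        f'{row["host"]} {row["tier"]}: {used_gb:.2f} GB used '
        f'of {row["total_gb"]:.2f} GB, health {row["health"]}'
    )
```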

vsan.health.cluster_status (command list)

The command “vsan.health.cluster_status” checks whether the vSAN health extension is installed on all ESXi hosts in the cluster and shows the health system version installed on each host and on the vCenter Server:

/localhost/Datacenter/computers> ls

0 Cluster-OSA (cluster): cpu 61 GHz, memory 102 GB

1 Cluster-ESA (cluster): cpu 151 GHz, memory 105 GB

/localhost/Datacenter/computers>

/localhost/Datacenter/computers> vsan.health.cluster_status 1

Configuration of ESX vSAN Health Extension: installed (OK)

Host 'esxi8-10.vxrail.local' has health system version '8.0.201' installed

Host 'esxi8-08.vxrail.local' has health system version '8.0.201' installed

Host 'esxi8-01.vxrail.local' has health system version '8.0.201' installed

Host 'esxi8-06.vxrail.local' has health system version '8.0.201' installed

Host 'esxi8-05.vxrail.local' has health system version '8.0.201' installed

Host 'esxi8-02.vxrail.local' has health system version '8.0.201' installed

Host 'esxi8-03.vxrail.local' has health system version '8.0.201' installed

Host 'esxi8-09.vxrail.local' has health system version '8.0.201' installed

Host 'esxi8-04.vxrail.local' has health system version '8.0.201' installed

Host 'esxi8-07.vxrail.local' has health system version '8.0.201' installed

vCenter Server has health system version '8.0.201' installed

/localhost/Datacenter/computers>
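With many hosts, a version mismatch is easy to overlook in this list. The following is a minimal Python sketch that compares every host version with the vCenter Server version. It assumes the output was copied into a string (only three host lines are included here for brevity) and that each line follows the wording shown above.

```python
import re

# Lines copied from the vsan.health.cluster_status output above.
STATUS = """\
Configuration of ESX vSAN Health Extension: installed (OK)
Host 'esxi8-10.vxrail.local' has health system version '8.0.201' installed
Host 'esxi8-08.vxrail.local' has health system version '8.0.201' installed
Host 'esxi8-01.vxrail.local' has health system version '8.0.201' installed
vCenter Server has health system version '8.0.201' installed
"""

HOST_RE = re.compile(r"^Host '([^']+)' has health system version '([^']+)' installed$")
VC_RE = re.compile(r"^vCenter Server has health system version '([^']+)' installed$")

host_versions = {}
vc_version = None
for line in STATUS.splitlines():
    host_match = HOST_RE.match(line)
    if host_match:
        host_versions[host_match.group(1)] = host_match.group(2)
        continue
    vc_match = VC_RE.match(line)
    if vc_match:
        vc_version = vc_match.group(1)

# Hosts whose health system version differs from the vCenter Server version.
mismatched = sorted(
    host for host, version in host_versions.items() if version != vc_version
)

print("vCenter Server version:", vc_version)
print("hosts checked:", len(host_versions))
print("mismatched hosts:", mismatched or "none")
```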

vsan.health.health_summary (command list)

The command “vsan.health.health_summary” runs several tests against the vSAN cluster. In my opinion, this command shows information similar to what we can see under vSAN Skyline Health in the vSphere Client:

/localhost/Datacenter/computers> vsan.health.health_summary 1

Overall health findings: green (OK)

+------------------------------------------------------+---------+

| Health check | Result |

+------------------------------------------------------+---------+

| Cluster | Passed |

| Time is synchronized across hosts and VC | Passed |

| vSphere Lifecycle Manager (vLCM) configuration | Passed |

| Advanced vSAN configuration in sync | Passed |

| vSAN daemon liveness | Passed |

| vSAN Disk Balance | Passed |

| Resync operations throttling | Passed |

| vSAN Direct homogeneous disk claiming | Passed |

| vCenter state is authoritative | Passed |

| vSAN cluster configuration consistency | Passed |

| vSphere cluster members match vSAN cluster members | Passed |

| Software version compatibility | Passed |

| Disk format version | Passed |

| vSAN extended configuration in sync | Passed |

+------------------------------------------------------+---------+

| Network | Passed |

| Hosts with connectivity issues | Passed |

| vSAN cluster partition | Passed |

| All hosts have a vSAN vmknic configured | Passed |

| Hosts disconnected from VC | Passed |

| vSAN: Basic (unicast) connectivity check | Passed |

| vSAN: MTU check (ping with large packet size) | Passed |

| vMotion: Basic (unicast) connectivity check | Passed |

| vMotion: MTU check (ping with large packet size) | Passed |

| Hosts with duplicate IP addresses | Passed |

| Hosts with pNIC TSO issues | Passed |

| Hosts with LACP issues | Passed |

| Physical network adapter link speed consistency | Passed |

+------------------------------------------------------+---------+

| Data | Passed |

| vSAN object health | Passed |

| vSAN object format health | Passed |

+------------------------------------------------------+---------+

| Stretched cluster | Passed |

| Witness host not found | Passed |

| Unexpected number of fault domains | Passed |

| Unicast agent configuration inconsistent | Passed |

| Invalid preferred fault domain on witness host | Passed |

| Preferred fault domain unset | Passed |

| Witness host within vCenter cluster | Passed |

| Witness host fault domain misconfigured | Passed |

| Unicast agent not configured | Passed |

| No disk claimed on witness host | Passed |

| Unsupported host version | Passed |

| Invalid unicast agent | Passed |

| Site latency health | Passed |

+------------------------------------------------------+---------+

| Capacity utilization | Passed |

| Storage space | Passed |

| Component | Passed |

| What if the most consumed host fails | Passed |

+------------------------------------------------------+---------+

| Physical disk | Passed |

| Operation health | Passed |

| Congestion | Passed |

| Component limit health | Passed |

| Memory pools (heaps) | Passed |

| Memory pools (slabs) | Passed |

| Disk capacity | Passed |

+------------------------------------------------------+---------+

| Hardware compatibility | Passed |

| vSAN HCL DB up-to-date | skipped |

| vSAN HCL DB Auto Update | skipped |

| SCSI controller is VMware certified | skipped |

| NVMe device can be identified | Passed |

| NVMe device is VMware certified | skipped |

| Controller is VMware certified for ESXi release | Passed |

| Controller driver is VMware certified | Passed |

| Controller firmware is VMware certified | Passed |

| Physical NIC link speed meets requirements | skipped |

| Host physical memory compliance check | Passed |

+------------------------------------------------------+---------+

| Online health (Disabled) | skipped |

| Advisor | skipped |

| vSAN Support Insight | skipped |

+------------------------------------------------------+---------+

| Performance service | Passed |

| Stats DB object | Passed |

| Stats primary election | Passed |

| Performance data collection | Passed |

| All hosts contributing stats | Passed |

| Stats DB object conflicts | Passed |

+------------------------------------------------------+---------+

Details about any failed test below ...

Hardware compatibility - vSAN HCL DB up-to-date: skipped

+-------------------+--------------------------------+------------------------+-------------------------------------------+

| Current time | Local HCL DB copy last updated | Days since last update | All ESXi versions on cluster are included |

+-------------------+--------------------------------+------------------------+-------------------------------------------+

| 1692194102.577976 | 1671729840.0 | 236 | Warning |

+-------------------+--------------------------------+------------------------+-------------------------------------------+

Hardware compatibility - SCSI controller is VMware certified: skipped

+-----------------------+--------+------------------------------------+---------------------+----------------------+

| Host | Device | Current controller | PCI ID | Controller certified |

+-----------------------+--------+------------------------------------+---------------------+----------------------+

| esxi8-01.vxrail.local | vmhba0 | VMware Inc. PVSCSI SCSI Controller | 15ad,07c0,15ad,07c0 | Warning |

+-----------------------+--------+------------------------------------+---------------------+----------------------+

Hardware compatibility - NVMe device is VMware certified: skipped

+-----------------------+--------+--------------------------------------+--------------------+---------------------+----------+------------------------------+

| Host | Device | Device name | Device certified | PCI ID | Use for | Supported vSAN Configuration |

+-----------------------+--------+--------------------------------------+--------------------+---------------------+----------+------------------------------+

| esxi8-09.vxrail.local | vmhba2 | eui.3c5094e80482e942000c296ed5a52b91 | yellow#Uncertified | 15ad,07f0,15ad,07f0 | vSAN ESA | |

| esxi8-10.vxrail.local | vmhba2 | eui.ba107ebc1b0e9eff000c2965eaa650c1 | yellow#Uncertified | 15ad,07f0,15ad,07f0 | vSAN ESA | |

| esxi8-04.vxrail.local | vmhba2 | eui.0cea7cd7924a7392000c29662cda280b | yellow#Uncertified | 15ad,07f0,15ad,07f0 | vSAN ESA | |

| esxi8-03.vxrail.local | vmhba2 | eui.ac51f28137a2315c000c2968e87becc5 | yellow#Uncertified | 15ad,07f0,15ad,07f0 | vSAN ESA | |

| esxi8-06.vxrail.local | vmhba2 | eui.55f959f842040289000c296ecbaa0dbd | yellow#Uncertified | 15ad,07f0,15ad,07f0 | vSAN ESA | |

| esxi8-07.vxrail.local | vmhba2 | eui.e97de4c6e6363607000c296bc5377734 | yellow#Uncertified | 15ad,07f0,15ad,07f0 | vSAN ESA | |

| esxi8-01.vxrail.local | vmhba2 | eui.81228f6c77a38151000c29661ea389b0 | yellow#Uncertified | 15ad,07f0,15ad,07f0 | vSAN ESA | |

| esxi8-02.vxrail.local | vmhba2 | eui.876191a8b76e6208000c2965a1a7d14c | yellow#Uncertified | 15ad,07f0,15ad,07f0 | vSAN ESA | |

| esxi8-05.vxrail.local | vmhba2 | eui.f0d3672a972529ba000c29664583bfc1 | yellow#Uncertified | 15ad,07f0,15ad,07f0 | vSAN ESA | |

| esxi8-08.vxrail.local | vmhba2 | eui.4269aff017217298000c29665b72c458 | yellow#Uncertified | 15ad,07f0,15ad,07f0 | vSAN ESA | |

+-----------------------+--------+--------------------------------------+--------------------+---------------------+----------+------------------------------+

Hardware compatibility - Physical NIC link speed meets requirements: skipped

+-----------------------+-----------------------+---------------------------+-------------------------+------------------------------------+

| Host | vSAN VMkernel adapter | Physical network adapters | Total Link Speed (Mbps) | Minimum required link speed (Mbps) |

+-----------------------+-----------------------+---------------------------+-------------------------+------------------------------------+

| esxi8-09.vxrail.local | vmk3 | vmnic0, vmnic1 | info#20000 | 25000 |

| esxi8-10.vxrail.local | vmk3 | vmnic0, vmnic1 | info#20000 | 25000 |

| esxi8-04.vxrail.local | vmk3 | vmnic0, vmnic1 | info#20000 | 25000 |

| esxi8-03.vxrail.local | vmk3 | vmnic0, vmnic1 | info#20000 | 25000 |

| esxi8-06.vxrail.local | vmk3 | vmnic0, vmnic1 | info#20000 | 25000 |

| esxi8-07.vxrail.local | vmk3 | vmnic0, vmnic1 | info#20000 | 25000 |

| esxi8-01.vxrail.local | vmk3 | vmnic0, vmnic1 | info#20000 | 25000 |

| esxi8-02.vxrail.local | vmk3 | vmnic0, vmnic1 | info#20000 | 25000 |

| esxi8-05.vxrail.local | vmk3 | vmnic0, vmnic1 | info#20000 | 25000 |

| esxi8-08.vxrail.local | vmk3 | vmnic0, vmnic1 | info#20000 | 25000 |

+-----------------------+-----------------------+---------------------------+-------------------------+------------------------------------+

Online health (Disabled) - vSAN Support Insight: skipped

+------------------------------------------------+--------+-------------------------------------------------------------------------------------------------------------------------------------+

| Name | Status | Description |

+------------------------------------------------+--------+-------------------------------------------------------------------------------------------------------------------------------------+

| Customer Experience Improvement Program status | Info | Not joined the Customer Experience Improvement Program (CEIP). Join CEIP to get better support. |

| Online notification | Info | Failed to receive vSAN notifications from VMware cloud due to network connectivity issue. Click Ask VMware button for more details. |

+------------------------------------------------+--------+-------------------------------------------------------------------------------------------------------------------------------------+

[[0.168195497, "initial connect"],

[13.815901494, "cluster-health"],

[0.027540411, "table-render"]]

/localhost/Datacenter/computers>
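In the “vSAN HCL DB up-to-date” details, the two time columns look like Unix epoch seconds. Assuming that reading is correct, the short Python sketch below converts them to UTC dates and recomputes the “Days since last update” value.

```python
from datetime import datetime, timezone

# Values copied from the "vSAN HCL DB up-to-date" details table above.
current_time = 1692194102.577976
hcl_db_updated = 1671729840.0


def to_utc(epoch_seconds):
    """Convert Unix epoch seconds to a timezone-aware UTC datetime."""
    return datetime.fromtimestamp(epoch_seconds, tz=timezone.utc)


age = to_utc(current_time) - to_utc(hcl_db_updated)
days_since_update = age.days  # whole days only

print("HCL DB last updated:", to_utc(hcl_db_updated).isoformat())
print("Health check ran at:", to_utc(current_time).isoformat())
print("Days since last update:", days_since_update)
```

The whole-day difference works out to 236, which matches the value in the table.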

Note: As this is a nested lab environment, it is normal that we are not using certified hardware to run vSAN ESA. Many alerts under vSAN Skyline Health were silenced (for example, Hardware compatibility - NVMe device is VMware certified: skipped).
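Because the summary table is long, it can be handy to pull out only the checks that did not pass. Below is a minimal Python sketch that does this for a few rows copied from the “Hardware compatibility” group above. It assumes the two-column layout of the summary table; `non_passed` is a name of our own.

```python
# Rows copied from the "Hardware compatibility" group of the summary above.
SUMMARY = """\
| Health check | Result |
| Hardware compatibility | Passed |
| vSAN HCL DB up-to-date | skipped |
| vSAN HCL DB Auto Update | skipped |
| SCSI controller is VMware certified | skipped |
| NVMe device can be identified | Passed |
| NVMe device is VMware certified | skipped |
| Controller is VMware certified for ESXi release | Passed |
"""


def non_passed(text):
    """Return (check, result) pairs for every row whose result is not Passed."""
    findings = []
    for line in text.splitlines():
        cells = [cell.strip() for cell in line.strip().strip("|").split("|")]
        if len(cells) != 2 or cells[0] == "Health check":
            continue
        check, result = cells
        if result.lower() != "passed":
            findings.append((check, result))
    return findings


findings = non_passed(SUMMARY)
for check, result in findings:
    print(f"{result:>8}  {check}")
```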

vsan.host_info (command list)

The command “vsan.host_info” displays information about a specific ESXi host. This command takes a host as a parameter.

In this example, we see the details of the ESXi host esxi8-01.vxrail.local:

/localhost/Datacenter/computers> ls

0 Cluster-OSA (cluster): cpu 61 GHz, memory 102 GB

1 Cluster-ESA (cluster): cpu 151 GHz, memory 105 GB

/localhost/Datacenter/computers> cd 1

/localhost/Datacenter/computers/Cluster-ESA> ls

0 hosts/

1 resourcePool [Resources]: cpu 151.91/151.91/normal, mem 104.17/104.17/normal

/localhost/Datacenter/computers/Cluster-ESA> cd 0

/localhost/Datacenter/computers/Cluster-ESA/hosts> ls

0 esxi8-01.vxrail.local (host): cpu 1*8*2.39 GHz, memory 51.00 GB

1 esxi8-03.vxrail.local (host): cpu 1*8*2.39 GHz, memory 51.00 GB

2 esxi8-02.vxrail.local (host): cpu 1*8*2.39 GHz, memory 51.00 GB

3 esxi8-07.vxrail.local (host): cpu 1*8*2.39 GHz, memory 51.00 GB

4 esxi8-08.vxrail.local (host): cpu 1*8*2.39 GHz, memory 51.00 GB

5 esxi8-09.vxrail.local (host): cpu 1*8*2.39 GHz, memory 34.00 GB

6 esxi8-10.vxrail.local (host): cpu 1*8*2.39 GHz, memory 34.00 GB

7 esxi8-04.vxrail.local (host): cpu 1*8*2.39 GHz, memory 51.00 GB

8 esxi8-05.vxrail.local (host): cpu 1*8*2.39 GHz, memory 51.00 GB

9 esxi8-06.vxrail.local (host): cpu 1*8*2.39 GHz, memory 51.00 GB

/localhost/Datacenter/computers/Cluster-ESA/hosts>

/localhost/Datacenter/computers/Cluster-ESA/hosts> vsan.host_info 0

2023-08-16 14:34:44 +0000: Fetching host info from esxi8-01.vxrail.local (may take a moment) ...

Product: VMware ESXi 8.0.1 build-21495797

vSAN enabled: yes

Cluster info:

Cluster role: agent

Cluster UUID: 522233f7-745e-dc1b-62b0-6a03c897f168

Node UUID: 64232a5e-78b3-3dce-fff2-005056817346

Member UUIDs: ["64d4ec9c-aceb-8a0e-852e-005056811316", "64232a60-8ff7-c42b-a79e-00505681cd1e", "64232a69-83e9-77b6-9bb6-005056812316", "64232a62-67eb-bee8-5510-00505681abf3", "64d51071-c180-ebef-e851-00505681912c", "642477cb-f176-efef-ae47-0050568122ed", "64232a66-5cd4-452f-1cc9-00505681f504", "64d4eb80-0bc0-f743-88af-005056815638", "64232a5e-78b3-3dce-fff2-005056817346", "64d5106d-a1ee-bef9-5273-00505681df3c", "64232a50-80a7-4fff-eab8-00505681ac87"] (11)

Node evacuated: no

Storage info:

Auto claim: no

Disk Mappings:

None

FaultDomainInfo:

Preferred

NetworkInfo:

Adapter: vmk3 (10.10.10.11)

Adapter: vmk1 (172.31.200.11)

Data efficiency enabled: no

Encryption enabled: no

/localhost/Datacenter/computers/Cluster-ESA/hosts>

vsan.obj_status_report (command list)

The command “vsan.obj_status_report” prints the component status of the objects in a vSAN cluster or on a specific ESXi host.

In this example, we run the command at the cluster level, so we can see details about the entire vSAN cluster:

/localhost/Datacenter/computers> ls

0 Cluster-OSA (cluster): cpu 61 GHz, memory 102 GB

1 Cluster-ESA (cluster): cpu 151 GHz, memory 105 GB

/localhost/Datacenter/computers>

/localhost/Datacenter/computers>

/localhost/Datacenter/computers> vsan.obj_status_report 1

2023-08-16 14:38:51 +0000: Querying all VMs on vSAN ...

2023-08-16 14:38:51 +0000: Querying DOM_OBJECT in the system from esxi8-01.vxrail.local ...

2023-08-16 14:38:51 +0000: Querying DOM_OBJECT in the system from esxi8-03.vxrail.local ...

2023-08-16 14:38:51 +0000: Querying DOM_OBJECT in the system from esxi8-02.vxrail.local ...

2023-08-16 14:38:52 +0000: Querying DOM_OBJECT in the system from esxi8-07.vxrail.local ...

2023-08-16 14:38:52 +0000: Querying DOM_OBJECT in the system from esxi8-08.vxrail.local ...

2023-08-16 14:38:52 +0000: Querying DOM_OBJECT in the system from esxi8-09.vxrail.local ...

2023-08-16 14:38:52 +0000: Querying DOM_OBJECT in the system from esxi8-10.vxrail.local ...

2023-08-16 14:38:52 +0000: Querying DOM_OBJECT in the system from esxi8-04.vxrail.local ...

2023-08-16 14:38:52 +0000: Querying DOM_OBJECT in the system from esxi8-05.vxrail.local ...

2023-08-16 14:38:52 +0000: Querying DOM_OBJECT in the system from esxi8-06.vxrail.local ...

2023-08-16 14:38:52 +0000: Querying all disks in the system from esxi8-01.vxrail.local ...

2023-08-16 14:38:52 +0000: Querying LSOM_OBJECT in the system from esxi8-01.vxrail.local ...

2023-08-16 14:38:52 +0000: Querying LSOM_OBJECT in the system from esxi8-03.vxrail.local ...

2023-08-16 14:38:52 +0000: Querying LSOM_OBJECT in the system from esxi8-02.vxrail.local ...

2023-08-16 14:38:52 +0000: Querying LSOM_OBJECT in the system from esxi8-07.vxrail.local ...

2023-08-16 14:38:52 +0000: Querying LSOM_OBJECT in the system from esxi8-08.vxrail.local ...

2023-08-16 14:38:52 +0000: Querying LSOM_OBJECT in the system from esxi8-09.vxrail.local ...

2023-08-16 14:38:52 +0000: Querying LSOM_OBJECT in the system from esxi8-10.vxrail.local ...

2023-08-16 14:38:52 +0000: Querying LSOM_OBJECT in the system from esxi8-04.vxrail.local ...

2023-08-16 14:38:52 +0000: Querying LSOM_OBJECT in the system from esxi8-05.vxrail.local ...

2023-08-16 14:38:52 +0000: Querying LSOM_OBJECT in the system from esxi8-06.vxrail.local ...

2023-08-16 14:38:52 +0000: Querying all object versions in the system ...

2023-08-16 14:38:52 +0000: Got all the info, computing table ...

Histogram of component health for non-orphaned objects

+-------------------------------------+------------------------------+

| Num Healthy Comps / Total Num Comps | Num objects with such status |

+-------------------------------------+------------------------------+

| 8/8 (OK) | 6 |

| 17/17 (OK) | 24 |

| 9/9 (OK) | 4 |

| 41/41 (OK) | 1 |

+-------------------------------------+------------------------------+

Total non-orphans: 35

Histogram of component health for possibly orphaned objects

+-------------------------------------+------------------------------+

| Num Healthy Comps / Total Num Comps | Num objects with such status |

+-------------------------------------+------------------------------+

+-------------------------------------+------------------------------+

Total orphans: 0

Total v18 objects: 35

/localhost/Datacenter/computers>

Note: With the option “-t”, the report also lists each object per VM, for example:

/localhost/Datacenter/computers> vsan.obj_status_report 1 -t

2023-08-16 14:43:33 +0000: Querying all VMs on vSAN ...

2023-08-16 14:43:34 +0000: Querying DOM_OBJECT in the system from esxi8-01.vxrail.local ...

2023-08-16 14:43:34 +0000: Querying DOM_OBJECT in the system from esxi8-03.vxrail.local ...

2023-08-16 14:43:34 +0000: Querying DOM_OBJECT in the system from esxi8-02.vxrail.local ...

2023-08-16 14:43:34 +0000: Querying DOM_OBJECT in the system from esxi8-07.vxrail.local ...

2023-08-16 14:43:34 +0000: Querying DOM_OBJECT in the system from esxi8-08.vxrail.local ...

2023-08-16 14:43:34 +0000: Querying DOM_OBJECT in the system from esxi8-09.vxrail.local ...

2023-08-16 14:43:34 +0000: Querying DOM_OBJECT in the system from esxi8-10.vxrail.local ...

2023-08-16 14:43:34 +0000: Querying DOM_OBJECT in the system from esxi8-04.vxrail.local ...

2023-08-16 14:43:34 +0000: Querying DOM_OBJECT in the system from esxi8-05.vxrail.local ...

2023-08-16 14:43:34 +0000: Querying DOM_OBJECT in the system from esxi8-06.vxrail.local ...

2023-08-16 14:43:34 +0000: Querying all disks in the system from esxi8-01.vxrail.local ...

2023-08-16 14:43:34 +0000: Querying LSOM_OBJECT in the system from esxi8-01.vxrail.local ...

2023-08-16 14:43:34 +0000: Querying LSOM_OBJECT in the system from esxi8-03.vxrail.local ...

2023-08-16 14:43:34 +0000: Querying LSOM_OBJECT in the system from esxi8-02.vxrail.local ...

2023-08-16 14:43:34 +0000: Querying LSOM_OBJECT in the system from esxi8-07.vxrail.local ...

2023-08-16 14:43:34 +0000: Querying LSOM_OBJECT in the system from esxi8-08.vxrail.local ...

2023-08-16 14:43:34 +0000: Querying LSOM_OBJECT in the system from esxi8-09.vxrail.local ...

2023-08-16 14:43:34 +0000: Querying LSOM_OBJECT in the system from esxi8-10.vxrail.local ...

2023-08-16 14:43:34 +0000: Querying LSOM_OBJECT in the system from esxi8-04.vxrail.local ...

2023-08-16 14:43:34 +0000: Querying LSOM_OBJECT in the system from esxi8-05.vxrail.local ...

2023-08-16 14:43:34 +0000: Querying LSOM_OBJECT in the system from esxi8-06.vxrail.local ...

2023-08-16 14:43:34 +0000: Querying all object versions in the system ...

2023-08-16 14:43:34 +0000: Got all the info, computing table ...

Histogram of component health for non-orphaned objects

+-------------------------------------+------------------------------+

| Num Healthy Comps / Total Num Comps | Num objects with such status |

+-------------------------------------+------------------------------+

| 8/8 (OK) | 6 |

| 17/17 (OK) | 24 |

| 9/9 (OK) | 4 |

| 41/41 (OK) | 1 |

+-------------------------------------+------------------------------+

Total non-orphans: 35

Histogram of component health for possibly orphaned objects

+-------------------------------------+------------------------------+

| Num Healthy Comps / Total Num Comps | Num objects with such status |

+-------------------------------------+------------------------------+

+-------------------------------------+------------------------------+

Total orphans: 0

Total v18 objects: 35

+--------------------------------------------------------------------------------------------------------------------------+---------+---------------------------+

| VM/Object | objects | num healthy / total comps |

+--------------------------------------------------------------------------------------------------------------------------+---------+---------------------------+

| vCLS-35120ff8-7baa-47de-83f8-b0d037e28b35 | 3 | |

| [vsanDatastore-ClusterA] e7357264-1c60-0df9-ffaa-00505681cd1e/vCLS-35120ff8-7baa-47de-83f8-b0d037e28b35.vmx | | 17/17 |

| [vsanDatastore-ClusterA] e7357264-1c60-0df9-ffaa-00505681cd1e/vCLS-35120ff8-7baa-47de-83f8-b0d037e28b35.vmdk | | 17/17 |

| [vsanDatastore-ClusterA] e7357264-1c60-0df9-ffaa-00505681cd1e/vCLS-35120ff8-7baa-47de-83f8-b0d037e28b35-6b853f54.vswp | | 17/17 |

| vCLS-c51004db-d627-41e3-a64c-975a693cbc88 | 3 | |

| [vsanDatastore-ClusterA] 10367264-a49f-95b8-2a26-00505681abf3/vCLS-c51004db-d627-41e3-a64c-975a693cbc88.vmx | | 17/17 |

| [vsanDatastore-ClusterA] 10367264-a49f-95b8-2a26-00505681abf3/vCLS-c51004db-d627-41e3-a64c-975a693cbc88.vmdk | | 17/17 |

| [vsanDatastore-ClusterA] 10367264-a49f-95b8-2a26-00505681abf3/vCLS-c51004db-d627-41e3-a64c-975a693cbc88-a370a8f5.vswp | | 17/17 |

| vCLS-c957ecb1-53ba-4784-be8d-740a79387c59 | 3 | |

| [vsanDatastore-ClusterA] 2d367264-4c5f-7a97-8e23-005056817346/vCLS-c957ecb1-53ba-4784-be8d-740a79387c59.vmx | | 17/17 |

| [vsanDatastore-ClusterA] 2d367264-4c5f-7a97-8e23-005056817346/vCLS-c957ecb1-53ba-4784-be8d-740a79387c59.vmdk | | 17/17 |

| [vsanDatastore-ClusterA] 2d367264-4c5f-7a97-8e23-005056817346/vCLS-c957ecb1-53ba-4784-be8d-740a79387c59-6cd8aca7.vswp | | 17/17 |

| slax-VM | 3 | |

| [vsanDatastore-ClusterA] 4ae47864-a715-5ea8-5df3-005056817346/slax-VM.vmx | | 17/17 |

| [vsanDatastore-ClusterA] 4ae47864-a715-5ea8-5df3-005056817346/slax-VM.vmdk | | 17/17 |

| [vsanDatastore-ClusterA] 4ae47864-a715-5ea8-5df3-005056817346/slax-VM-c34252c3.vswp | | 17/17 |

| SRV-STORAGE | 4 | |

| [vsanDatastore-ClusterA] 9014ac64-5bb5-0ec2-d686-00505681abf3/SRV-STORAGE.vmx | | 8/8 |

| [vsanDatastore-ClusterA] 9014ac64-5bb5-0ec2-d686-00505681abf3/SRV-STORAGE.vmdk | | 8/8 |

| [vsanDatastore-ClusterA] 9014ac64-5bb5-0ec2-d686-00505681abf3/SRV-STORAGE_1.vmdk | | 8/8 |

| [vsanDatastore-ClusterA] 9014ac64-5bb5-0ec2-d686-00505681abf3/SRV-STORAGE_2.vmdk | | 8/8 |

| SRV-AD | 2 | |

| [vsanDatastore-ClusterA] 7218ac64-3b82-d76f-e4ec-005056817346/SRV-AD.vmx | | 8/8 |

| [vsanDatastore-ClusterA] 7218ac64-3b82-d76f-e4ec-005056817346/SRV-AD.vmdk | | 8/8 |

| SLAX-VM-TEMPLATE | 2 | |

| [vsanDatastore-ClusterA] bc73d664-2fff-d38e-b9c9-00505681cd1e/SLAX-VM-TEMPLATE.vmtx | | 17/17 |

| [vsanDatastore-ClusterA] bc73d664-2fff-d38e-b9c9-00505681cd1e/SLAX-VM-TEMPLATE.vmdk | | 17/17 |

+--------------------------------------------------------------------------------------------------------------------------+---------+---------------------------+

| Unassociated objects | 15 | |

| b825a364-337a-dc24-e1c5-00505681abf3 | | 17/17 |

| d4727264-5a98-dc40-9e43-00505681cd1e | | 17/17 |

| 0926a364-acd8-2474-eb7a-00505681abf3 | | 17/17 |

| c2a27264-b4e7-7495-e7a6-00505681cd1e | | 9/9 |

| fa8bad64-5dd4-9e14-fcf9-00505681abf3 | | 17/17 |

| 18cfa064-8253-1d20-af74-005056812316 | | 9/9 |

| f6347264-4a9e-4aab-a74e-00505681cd1e | | 41/41 |

| b925a364-e695-e6ad-9cf5-00505681abf3 | | 17/17 |

| 31f57064-7605-4fb6-cba8-00505681cd1e | | 9/9 |

| 898cad64-0419-96d7-9f9a-00505681abf3 | | 17/17 |

| 0826a364-6091-40f8-a2e7-00505681abf3 | | 17/17 |

| d6727264-cc30-37fb-aaca-00505681cd1e | | 17/17 |

| e0737264-1c17-543a-1e2a-005056817346 | | 9/9 |

| 882ca364-31ff-a665-1e67-00505681abf3 | | 17/17 |

| d5727264-8c33-889e-392f-00505681cd1e | | 17/17 |

+--------------------------------------------------------------------------------------------------------------------------+---------+---------------------------+

WARNING: Unassociated does NOT necessarily mean unused/unneeded.

Deleting unassociated objects may cause data loss!!!

You must read the following KB before you delete any unassociated objects!!!

https://kb.vmware.com/s/article/70726

+------------------------------------------------------------------+

| Legend: * = all unhealthy comps were deleted (disks present) |

| - = some unhealthy comps deleted, some not or can't tell |

| no symbol = We cannot conclude any comps were deleted |

+------------------------------------------------------------------+
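When a cluster holds many objects, the histogram at the top of this report can be checked with a small script instead of by eye. The following is a minimal Python sketch, assuming the report text was copied from the RVC session into a string; the function names are hypothetical and not part of RVC, and the assumption that an unhealthy row simply shows fewer healthy components than total components is based only on the column header shown above.

```python
import re

# Matches histogram rows such as "| 8/8 (OK) | 6 |".
# The "(OK)" suffix is treated as optional because only healthy rows
# appear in the sample output; other suffixes are an assumption.
ROW = re.compile(r"^\|\s*(\d+)/(\d+)\s*(?:\([^)]*\))?\s*\|\s*(\d+)\s*\|\s*$")


def parse_histogram(report_text):
    """Return (healthy, total, object_count) tuples for every histogram row."""
    rows = []
    for line in report_text.splitlines():
        match = ROW.match(line.strip())
        if match:
            healthy, total, count = (int(group) for group in match.groups())
            rows.append((healthy, total, count))
    return rows


def degraded_objects(rows):
    """Count objects whose healthy component count is below the total."""
    return sum(count for healthy, total, count in rows if healthy < total)


# Rows copied from the report above.
sample = """
| Num Healthy Comps / Total Num Comps | Num objects with such status |
| 8/8 (OK) | 6 |
| 17/17 (OK) | 24 |
| 9/9 (OK) | 4 |
| 41/41 (OK) | 1 |
"""

rows = parse_histogram(sample)
print("objects in histogram:", sum(count for _, _, count in rows))
print("objects with unhealthy components:", degraded_objects(rows))
```

For the sample rows, the object total equals the “Total non-orphans: 35” line of the report, which is a quick sanity check that every row was parsed.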

/localhost/Datacenter/computers>

vsan.object_info (command list)

The command “vsan.object_info” displays details about a specific vSAN object.

This command takes the cluster and the object UUID as arguments. The output shows the object's policy, its components, the state of each component, the host where each component lives, and the UUID of each component:

/localhost/Datacenter/computers> vsan.object_info 1 7218ac64-3b82-d76f-e4ec-005056817346

2023-08-16 14:52:27 +0000: Fetching vSAN disk info from esxi8-03.vxrail.local (may take a moment) ...

2023-08-16 14:52:27 +0000: Fetching vSAN disk info from esxi8-09.vxrail.local (may take a moment) ...

2023-08-16 14:52:27 +0000: Fetching vSAN disk info from esxi8-07.vxrail.local (may take a moment) ...

2023-08-16 14:52:27 +0000: Fetching vSAN disk info from esxi8-10.vxrail.local (may take a moment) ...

2023-08-16 14:52:27 +0000: Fetching vSAN disk info from esxi8-02.vxrail.local (may take a moment) ...

2023-08-16 14:52:27 +0000: Fetching vSAN disk info from esxi8-08.vxrail.local (may take a moment) ...

2023-08-16 14:52:27 +0000: Fetching vSAN disk info from esxi8-05.vxrail.local (may take a moment) ...

2023-08-16 14:52:27 +0000: Fetching vSAN disk info from esxi8-01.vxrail.local (may take a moment) ...

2023-08-16 14:52:27 +0000: Fetching vSAN disk info from esxi8-06.vxrail.local (may take a moment) ...

2023-08-16 14:52:27 +0000: Fetching vSAN disk info from esxi8-04.vxrail.local (may take a moment) ...

2023-08-16 14:52:27 +0000: Fetching vSAN disk info from witness8.vxrail.local (may take a moment) ...

2023-08-16 14:52:31 +0000: Done fetching vSAN disk infos

DOM Object: 7218ac64-3b82-d76f-e4ec-005056817346 (v18, owner: esxi8-03.vxrail.local, proxy owner: None, policy: stripeWidth = 1, cacheReservation = 0, proportionalCapacity = [0, 100], hostFailuresToTolerate = 0, affinity = ["a054ccb4-ff68-4c73-cbc2-d272d45e32df"], forceProvisioning = 0, affinityMandatory = 1, spbmProfileId = 2182d9aa-292d-4e96-b77c-9a64b93c4d3a, spbmProfileGenerationNumber = 0, replicaPreference = Performance, iopsLimit = 0, checksumDisabled = 0, subFailuresToTolerate = 1, CSN = 1574, SCSN = 1544, spbmProfileName = vSAN Policy Preffered Site, locality = Preferred)

Concatenation

RAID_1

Component: 7218ac64-8196-5d71-bec1-005056817346 (state: ACTIVE (5), host: esxi8-03.vxrail.local, capacity: 52d3055e-4135-4e86-caa6-648c449efb0d, cache: ,

votes: 5, usage: 0.0 GB, proxy component: false)

Component: 4422d664-f026-decf-0814-00505681cd1e (state: ACTIVE (5), host: esxi8-09.vxrail.local, capacity: 524ee935-a046-d7d7-9469-2117d7169e15, cache: ,

votes: 1, usage: 0.0 GB, proxy component: false)

RAID_1

RAID_0

Component: 4a22d664-84bb-19ea-f776-00505681cd1e (state: ACTIVE (5), host: esxi8-07.vxrail.local, capacity: 52b0d39f-d8db-0b17-baad-5bbd16971a6a, cache: ,

votes: 4, usage: 0.0 GB, proxy component: false)

Component: 4a22d664-ba0c-1fea-8f57-00505681cd1e (state: ACTIVE (5), host: esxi8-05.vxrail.local, capacity: 52177016-47e3-4d17-6cb3-a68ff57e760b, cache: ,

votes: 2, usage: 0.0 GB, proxy component: false)

Component: 4a22d664-d070-21ea-d47b-00505681cd1e (state: ACTIVE (5), host: esxi8-05.vxrail.local, capacity: 52347b4d-beaf-1c54-cd4c-3743bf1f7544, cache: ,

votes: 2, usage: 0.0 GB, proxy component: false)

RAID_0

Component: 4a22d664-4c52-24ea-02d6-00505681cd1e (state: ACTIVE (5), host: esxi8-09.vxrail.local, capacity: 52e31d64-16f6-1dd3-5c83-32c4dd1110e6, cache: ,

votes: 1, usage: 0.0 GB, proxy component: false)

Component: 4a22d664-9e23-29ea-3340-00505681cd1e (state: ACTIVE (5), host: esxi8-09.vxrail.local, capacity: 5259bd1e-c22a-706d-aa3c-9b349cadf512, cache: ,

votes: 1, usage: 0.0 GB, proxy component: false)

Component: 4a22d664-5e0f-2dea-6806-00505681cd1e (state: ACTIVE (5), host: esxi8-09.vxrail.local, capacity: 523ada0a-9fe2-0769-0bf3-47ebb2cbcfd4, cache: ,

votes: 1, usage: 0.0 GB, proxy component: false)

Extended attributes:

Address space: 273804165120B (255.00 GB)

Object class: vmnamespace

Object path: /vmfs/volumes/vsan:522233f7745edc1b-62b06a03c897f168/SRV-AD

Object capabilities: NONE

/localhost/Datacenter/computers>

Note: In the above example, 7218ac64-3b82-d76f-e4ec-005056817346 is the UUID of the vSAN home directory (the “vmnamespace” object) for the VM “SRV-AD”.
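To see at a glance how the components and votes of an object are spread across hosts, the “Component:” entries of this output can be summarized with a short script. This is only a sketch in Python, assuming the output was captured as text; the helper name `components_by_host` is hypothetical, and treating every state other than ACTIVE as worth flagging is an assumption, since the sample shows only ACTIVE components.

```python
import re
from collections import defaultdict

# Each component entry wraps onto a second line ("votes: ..."), so the
# pattern is allowed to match across newlines (re.DOTALL).
COMPONENT = re.compile(
    r"Component:\s*(?P<uuid>[0-9a-f-]+)\s*\(state:\s*(?P<state>\w+)\s*\(\d+\),"
    r"\s*host:\s*(?P<host>[^,]+),.*?votes:\s*(?P<votes>\d+)",
    re.DOTALL,
)


def components_by_host(object_info_text):
    """Return {host: {"count": n, "votes": v, "not_active": [uuids]}}."""
    summary = defaultdict(lambda: {"count": 0, "votes": 0, "not_active": []})
    for match in COMPONENT.finditer(object_info_text):
        entry = summary[match["host"].strip()]
        entry["count"] += 1
        entry["votes"] += int(match["votes"])
        if match["state"] != "ACTIVE":
            entry["not_active"].append(match["uuid"])
    return dict(summary)


# Three component entries copied from the output above.
sample = """
Component: 7218ac64-8196-5d71-bec1-005056817346 (state: ACTIVE (5), host: esxi8-03.vxrail.local, capacity: 52d3055e-4135-4e86-caa6-648c449efb0d, cache: ,

votes: 5, usage: 0.0 GB, proxy component: false)

Component: 4422d664-f026-decf-0814-00505681cd1e (state: ACTIVE (5), host: esxi8-09.vxrail.local, capacity: 524ee935-a046-d7d7-9469-2117d7169e15, cache: ,

votes: 1, usage: 0.0 GB, proxy component: false)

Component: 4a22d664-4c52-24ea-02d6-00505681cd1e (state: ACTIVE (5), host: esxi8-09.vxrail.local, capacity: 52e31d64-16f6-1dd3-5c83-32c4dd1110e6, cache: ,

votes: 1, usage: 0.0 GB, proxy component: false)
"""

summary = components_by_host(sample)
for host, info in sorted(summary.items()):
    print(host, info)
```

A host that carries many components or a large share of the votes for one object is a useful thing to know before placing that host in maintenance mode.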

vsan.resync_dashboard (command list)

The command “vsan.resync_dashboard” shows the resynchronization dashboard. If a vSAN resync is active, this command shows which objects are resyncing, how much data is left to sync, and the estimated time remaining.

In this example, the output table is empty because we did not have any vSAN resync in progress:

/localhost/Datacenter/computers> ls

0 Cluster-OSA (cluster): cpu 61 GHz, memory 102 GB

1 Cluster-ESA (cluster): cpu 151 GHz, memory 105 GB

/localhost/Datacenter/computers>

/localhost/Datacenter/computers> vsan.resync_dashboard 1

2023-08-16 15:17:19 +0000: Querying all VMs on vSAN ...

2023-08-16 15:17:19 +0000: Querying all objects in the system from esxi8-01.vxrail.local ...

2023-08-16 15:17:19 +0000: Fetching resync result from resync improvement api: QuerySyncingVsanObjectsSummary

2023-08-16 15:17:19 +0000: Got all the info, computing table ...

+-----------+----------------------+---------------+-----+-------------+

| VM/Object | Objects/Host to sync | Bytes to sync | ETA | Sync reason |

+-----------+----------------------+---------------+-----+-------------+

+-----------+----------------------+---------------+-----+-------------+

| Total | 0 | 0.00 GB | N/A | |

+-----------+----------------------+---------------+-----+-------------+

/localhost/Datacenter/computers>
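If the dashboard output is captured to text, the “Total” row is enough to tell whether a resync is still running. The snippet below is a small Python sketch under that assumption; `resync_totals` is a hypothetical helper, not an RVC function, and it relies only on the row layout shown in the table above.

```python
import re

# Matches the summary row, for example "| Total | 0 | 0.00 GB | N/A | |".
TOTAL_ROW = re.compile(r"^\|\s*Total\s*\|\s*(\d+)\s*\|\s*([\d.]+)\s*GB\s*\|")


def resync_totals(dashboard_text):
    """Return (objects_to_sync, gb_to_sync), or None if no Total row is found."""
    for line in dashboard_text.splitlines():
        match = TOTAL_ROW.match(line.strip())
        if match:
            return int(match.group(1)), float(match.group(2))
    return None


# Rows copied from the dashboard above.
sample = """
| VM/Object | Objects/Host to sync | Bytes to sync | ETA | Sync reason |
| Total | 0 | 0.00 GB | N/A | |
"""

totals = resync_totals(sample)
if totals is not None:
    objects, gigabytes = totals
    print("resync in progress:", objects > 0 or gigabytes > 0)
```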

vsan.vm_object_info (command list)

The command “vsan.vm_object_info” shows details of all vSAN objects that belong to a specific VM.

In this example, we look at the VM “SRV-AD”, which is listed at index 5 in the “vms” level:

/localhost/Datacenter/vms> ls

0 Discovered virtual machine/

1 Templates/

2 vCLS/

3 slax-VM: poweredOn

4 SRV-STORAGE: poweredOff

5 SRV-AD: poweredOff

6 New Virtual Machine: poweredOn

7 New Virtual Machine - 2: poweredOn

/localhost/Datacenter/vms>

/localhost/Datacenter/vms> vsan.vm_object_info 5

VM SRV-AD:

Disk backing:

[vsanDatastore-ClusterA] 7218ac64-3b82-d76f-e4ec-005056817346/SRV-AD.vmdk

[vsanDatastore-ClusterA] 7218ac64-3b82-d76f-e4ec-005056817346/SRV-AD.vmx

DOM Object: 7218ac64-3b82-d76f-e4ec-005056817346 (v18, owner: esxi8-03.vxrail.local, proxy owner: None, policy: stripeWidth = 1, cacheReservation = 0, proportionalCapacity = [0, 100], hostFailuresToTolerate = 0, affinity = ["a054ccb4-ff68-4c73-cbc2-d272d45e32df"], forceProvisioning = 0, affinityMandatory = 1, spbmProfileId = 2182d9aa-292d-4e96-b77c-9a64b93c4d3a, spbmProfileGenerationNumber = 0, replicaPreference = Performance, iopsLimit = 0, checksumDisabled = 0, subFailuresToTolerate = 1, CSN = 1574, SCSN = 1544, spbmProfileName = vSAN Policy Preffered Site, locality = Preferred)

Concatenation

RAID_1

Component: 7218ac64-8196-5d71-bec1-005056817346 (state: ACTIVE (5), host: esxi8-03.vxrail.local, capacity: 52d3055e-4135-4e86-caa6-648c449efb0d, cache: ,

votes: 5, usage: 0.0 GB, proxy component: false)

Component: 4422d664-f026-decf-0814-00505681cd1e (state: ACTIVE (5), host: esxi8-09.vxrail.local, capacity: 524ee935-a046-d7d7-9469-2117d7169e15, cache: ,

votes: 1, usage: 0.0 GB, proxy component: false)

RAID_1

RAID_0

Component: 4a22d664-84bb-19ea-f776-00505681cd1e (state: ACTIVE (5), host: esxi8-07.vxrail.local, capacity: 52b0d39f-d8db-0b17-baad-5bbd16971a6a, cache: ,

votes: 4, usage: 0.0 GB, proxy component: false)

Component: 4a22d664-ba0c-1fea-8f57-00505681cd1e (state: ACTIVE (5), host: esxi8-05.vxrail.local, capacity: 52177016-47e3-4d17-6cb3-a68ff57e760b, cache: ,

votes: 2, usage: 0.0 GB, proxy component: false)

Component: 4a22d664-d070-21ea-d47b-00505681cd1e (state: ACTIVE (5), host: esxi8-05.vxrail.local, capacity: 52347b4d-beaf-1c54-cd4c-3743bf1f7544, cache: ,

votes: 2, usage: 0.0 GB, proxy component: false)

RAID_0

Component: 4a22d664-4c52-24ea-02d6-00505681cd1e (state: ACTIVE (5), host: esxi8-09.vxrail.local, capacity: 52e31d64-16f6-1dd3-5c83-32c4dd1110e6, cache: ,

votes: 1, usage: 0.0 GB, proxy component: false)

Component: 4a22d664-9e23-29ea-3340-00505681cd1e (state: ACTIVE (5), host: esxi8-09.vxrail.local, capacity: 5259bd1e-c22a-706d-aa3c-9b349cadf512, cache: ,

votes: 1, usage: 0.0 GB, proxy component: false)

Component: 4a22d664-5e0f-2dea-6806-00505681cd1e (state: ACTIVE (5), host: esxi8-09.vxrail.local, capacity: 523ada0a-9fe2-0769-0bf3-47ebb2cbcfd4, cache: ,

votes: 1, usage: 0.0 GB, proxy component: false)

[vsanDatastore-ClusterA] 7218ac64-3b82-d76f-e4ec-005056817346/SRV-AD.vmdk

DOM Object: 7318ac64-1e35-8804-2370-005056817346 (v18, owner: esxi8-03.vxrail.local, proxy owner: None, policy: stripeWidth = 1, cacheReservation = 0, proportionalCapacity = 0, hostFailuresToTolerate = 0, affinity = ["a054ccb4-ff68-4c73-cbc2-d272d45e32df"], forceProvisioning = 0, affinityMandatory = 1, spbmProfileId = 2182d9aa-292d-4e96-b77c-9a64b93c4d3a, spbmProfileGenerationNumber = 0, replicaPreference = Performance, iopsLimit = 0, checksumDisabled = 0, subFailuresToTolerate = 1, CSN = 5739, SCSN = 5112, spbmProfileName = vSAN Policy Preffered Site, locality = Preferred)

Concatenation

RAID_1

Component: 9b9cd364-abf5-a87f-63a5-00505681abf3 (state: ACTIVE (5), host: esxi8-03.vxrail.local, capacity: 52b7f26e-35c7-ffb5-04e3-d934b0f5ae79, cache: ,

votes: 2, usage: 0.1 GB, proxy component: false)

Component: 4b22d664-e4ac-460c-fc2f-00505681abf3 (state: ACTIVE (5), host: esxi8-07.vxrail.local, capacity: 5274f814-ab9e-73fa-e964-a89dd73852ad, cache: ,

votes: 2, usage: 0.1 GB, proxy component: false)

RAID_1

RAID_0

Component: 7318ac64-8904-a605-ec97-005056817346 (state: ACTIVE (5), host: esxi8-03.vxrail.local, capacity: 52b533ad-5244-d3de-c012-fa620cb115e9, cache: ,

votes: 1, usage: 2.2 GB, proxy component: false)

Component: 7318ac64-d335-a705-a625-005056817346 (state: ACTIVE (5), host: esxi8-03.vxrail.local, capacity: 52b7f26e-35c7-ffb5-04e3-d934b0f5ae78, cache: ,

votes: 1, usage: 2.2 GB, proxy component: false)

Component: 7318ac64-870a-a805-d6b9-005056817346 (state: ACTIVE (5), host: esxi8-03.vxrail.local, capacity: 52d3055e-4135-4e86-caa6-648c449efb0c, cache: ,

votes: 1, usage: 2.2 GB, proxy component: false)

RAID_0

Component: 4b22d664-328a-4a0c-cd89-00505681abf3 (state: ACTIVE (5), host: esxi8-09.vxrail.local, capacity: 52e31d64-16f6-1dd3-5c83-32c4dd1110e6, cache: ,

votes: 4, usage: 2.2 GB, proxy component: false)

Component: 4b22d664-4a25-4e0c-cff7-00505681abf3 (state: ACTIVE (5), host: esxi8-07.vxrail.local, capacity: 5274f814-ab9e-73fa-e964-a89dd73852ac, cache: ,

votes: 2, usage: 2.2 GB, proxy component: false)

Component: 4b22d664-4d18-500c-0a54-00505681abf3 (state: ACTIVE (5), host: esxi8-05.vxrail.local, capacity: 525263b4-343e-0369-8775-6655f10096ca, cache: ,

votes: 4, usage: 2.2 GB, proxy component: false)

/localhost/Datacenter/vms>

vsan.whatif_host_failures (command list)

The command “vsan.whatif_host_failures” simulates host failures and compares the vSAN resource usage after the failure with the current usage.

This test simulates the failure of 1 ESXi host in cluster 0 (Cluster-OSA) and shows the resource usage after the failure and re-protection:

/localhost/Datacenter/computers> ls

0 Cluster-OSA (cluster): cpu 61 GHz, memory 102 GB

1 Cluster-ESA (cluster): cpu 151 GHz, memory 105 GB

/localhost/Datacenter/computers>

/localhost/Datacenter/computers> vsan.whatif_host_failures 0

Simulating 1 host failures:

+-----------------+----------------------------+-----------------------------------+

| Resource | Usage right now | Usage after failure/re-protection |

+-----------------+----------------------------+-----------------------------------+

| HDD capacity | 3% used (249.23 GB free) | 4% used (185.24 GB free) |

| Components | 1% used (3572 available) | 1% used (2672 available) |

| RC reservations | 0% used (0.00 GB free) | 0% used (0.00 GB free) |

+-----------------+----------------------------+-----------------------------------+

/localhost/Datacenter/computers>
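The two usage columns are easier to compare once they are turned into numbers. Here is a minimal Python sketch, assuming the table was captured as text; `parse_whatif` is a hypothetical helper, and it only understands the two cell formats that appear in the sample (“N% used (X GB free)” and “N% used (X available)”).

```python
import re

# Matches cells such as "3% used (249.23 GB free)" or "1% used (3572 available)".
USAGE = re.compile(r"(\d+)% used \(([\d.]+) (?:GB free|available)\)")


def parse_whatif(table_text):
    """Return {resource: ((pct_now, left_now), (pct_after, left_after))}."""
    result = {}
    for line in table_text.splitlines():
        cells = [cell.strip() for cell in line.strip().strip("|").split("|")]
        if len(cells) != 3:
            continue  # border lines and anything that is not a 3-column row
        now, after = USAGE.search(cells[1]), USAGE.search(cells[2])
        if now and after:
            result[cells[0]] = (
                (int(now.group(1)), float(now.group(2))),
                (int(after.group(1)), float(after.group(2))),
            )
    return result


# Rows copied from the simulation above.
sample = """
+-----------------+----------------------------+-----------------------------------+
| Resource | Usage right now | Usage after failure/re-protection |
| HDD capacity | 3% used (249.23 GB free) | 4% used (185.24 GB free) |
| Components | 1% used (3572 available) | 1% used (2672 available) |
| RC reservations | 0% used (0.00 GB free) | 0% used (0.00 GB free) |
"""

usage = parse_whatif(sample)
for resource, (now, after) in usage.items():
    print(f"{resource}: remaining drops by {now[1] - after[1]:.2f}")
```

In this sample, the simulated failure reduces free capacity by about 64 GB and the available component count by 900, which is the headroom the cluster would lose with one host down.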