Working with Fault Sets on PowerFlex shows what Fault Sets are and how they can be used.

This is a simple example of how we can work with Fault Sets. All tests were performed in a lab environment using virtual machines (nested lab). The PowerFlex version is 4.5.2.

First and foremost, what is Dell PowerFlex?

Dell PowerFlex is a software-defined storage platform that combines storage and compute resources into a single system. It offers high performance, scalability, and flexibility for modern data center needs. PowerFlex supports block storage in two-layer (compute and storage separate) or single-layer (hyper-converged) deployments (we can also mix both types of implementation). It is ideal for applications like databases, virtualized environments, and containers. In addition to providing block storage, PowerFlex can also offer file services such as SMB and NFS shares.

We have written a small article introducing PowerFlex. Check here to read it!

What are Fault Sets?

Fault Sets are logical entities that contain a group of SDSs within a protection domain. A Fault Set is defined as a set of servers likely to fail together, for example, an entire rack full of servers.

PowerFlex requires a minimum of three Fault Sets per protection domain, and each Fault Set must contain at least two SDS nodes to achieve high availability and fault tolerance.

The maximum number of Fault Sets is 64.
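As a quick sanity check before building anything, the sizing rules above can be written down as a small validation helper. This is only a sketch of the rules as stated in this article, not a PowerFlex API: the function name, the dictionary layout, and the SDS names (`sds-01` and so on) are hypothetical.

```python
# Limits taken from the text above; everything else here is illustrative.
MIN_FAULT_SETS = 3
MAX_FAULT_SETS = 64
MIN_NODES_PER_FAULT_SET = 2


def validate_fault_sets(layout):
    """Return a list of problems for a {fault_set_name: [sds_names]} mapping."""
    problems = []
    if len(layout) < MIN_FAULT_SETS:
        problems.append(
            f"need at least {MIN_FAULT_SETS} Fault Sets, found {len(layout)}"
        )
    if len(layout) > MAX_FAULT_SETS:
        problems.append(
            f"at most {MAX_FAULT_SETS} Fault Sets allowed, found {len(layout)}"
        )
    for name, nodes in layout.items():
        if len(nodes) < MIN_NODES_PER_FAULT_SET:
            problems.append(
                f"{name} has {len(nodes)} SDS node(s), "
                f"needs at least {MIN_NODES_PER_FAULT_SET}"
            )
    return problems


# Hypothetical SDS names for a lab layout: three Fault Sets, two SDS nodes each.
lab_layout = {
    "FS01": ["sds-01", "sds-02"],
    "FS02": ["sds-03", "sds-04"],
    "FS03": ["sds-05", "sds-06"],
}
print(validate_fault_sets(lab_layout))  # prints []
```

An empty list means the planned layout satisfies all three rules.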

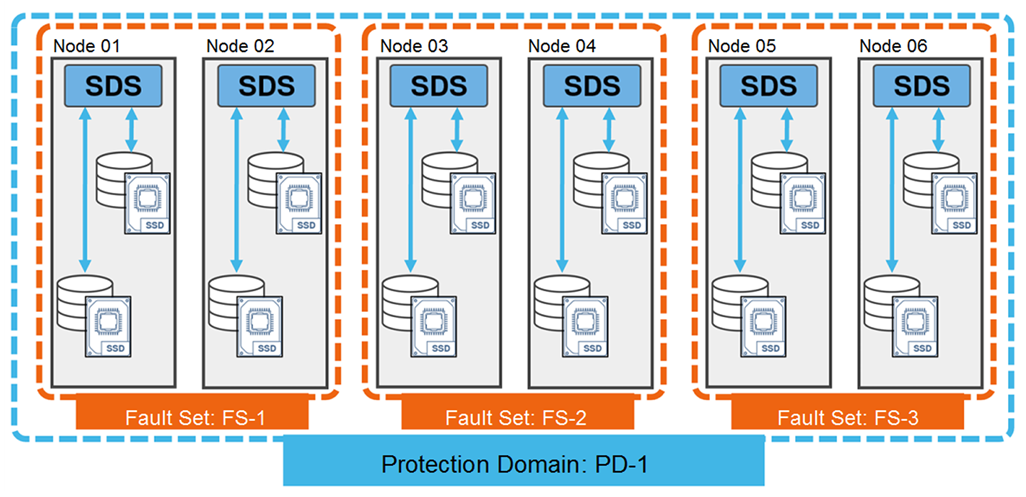

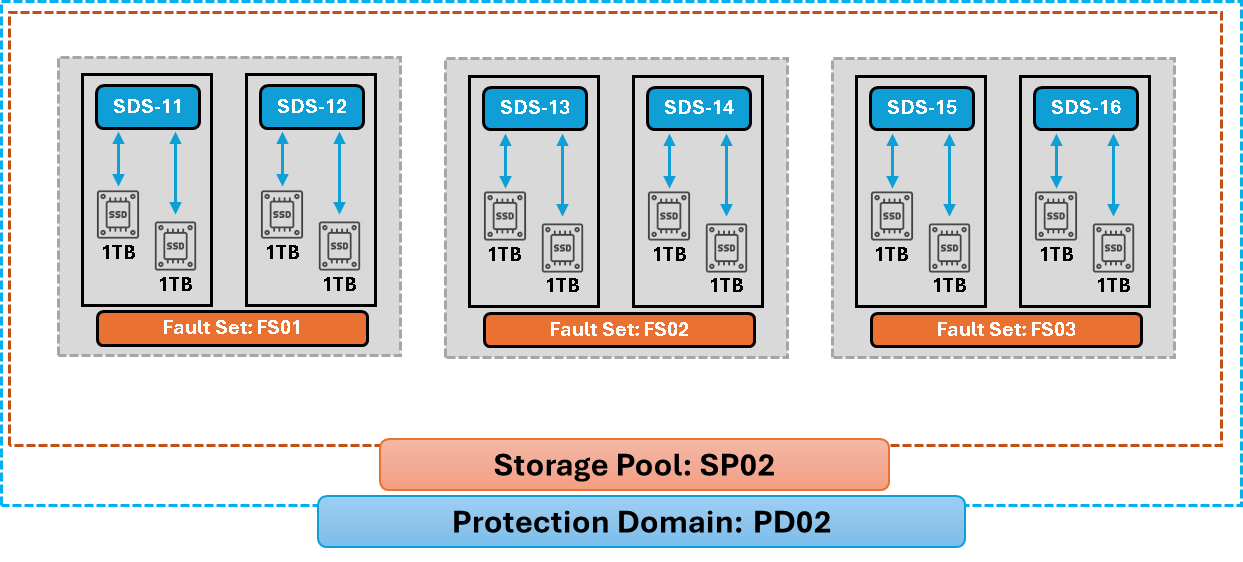

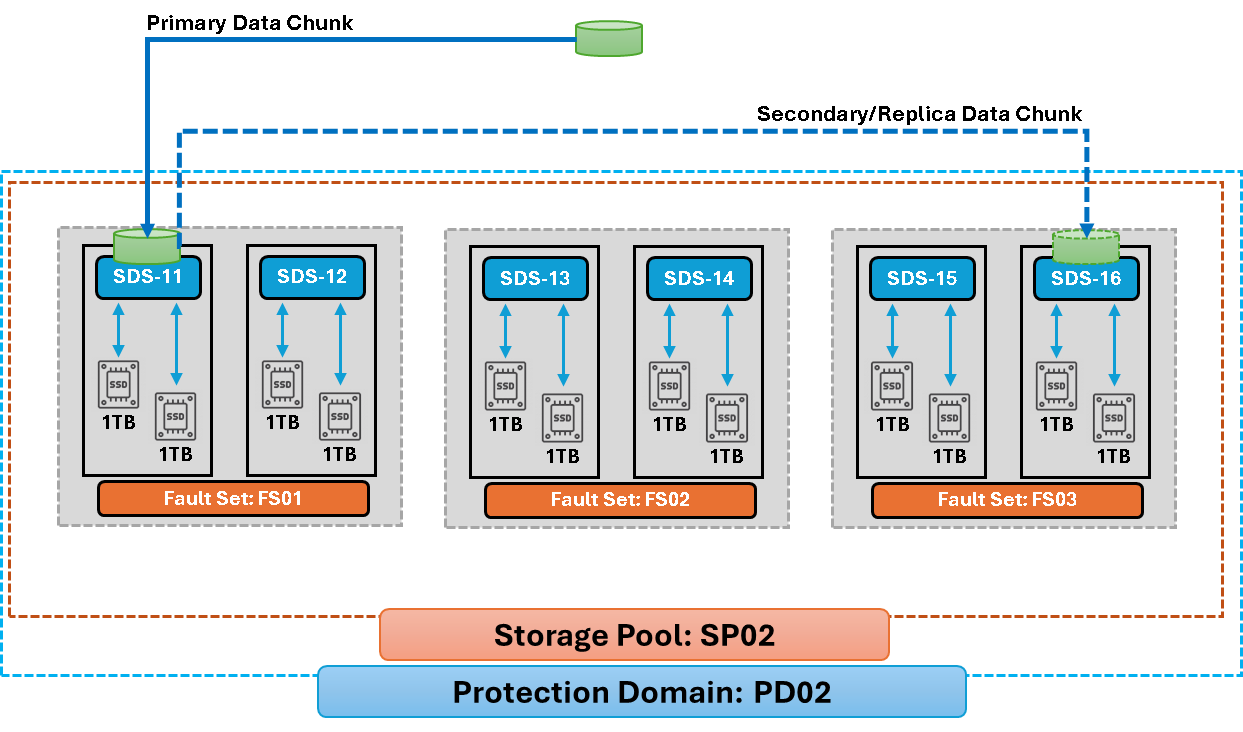

As we can see in the picture above, there are three Fault Sets with two SDS nodes each. Together, these Fault Sets make up the Protection Domain “PD-1” (in other words, our Protection Domain comprises three Fault Sets).

How do Fault Sets work?

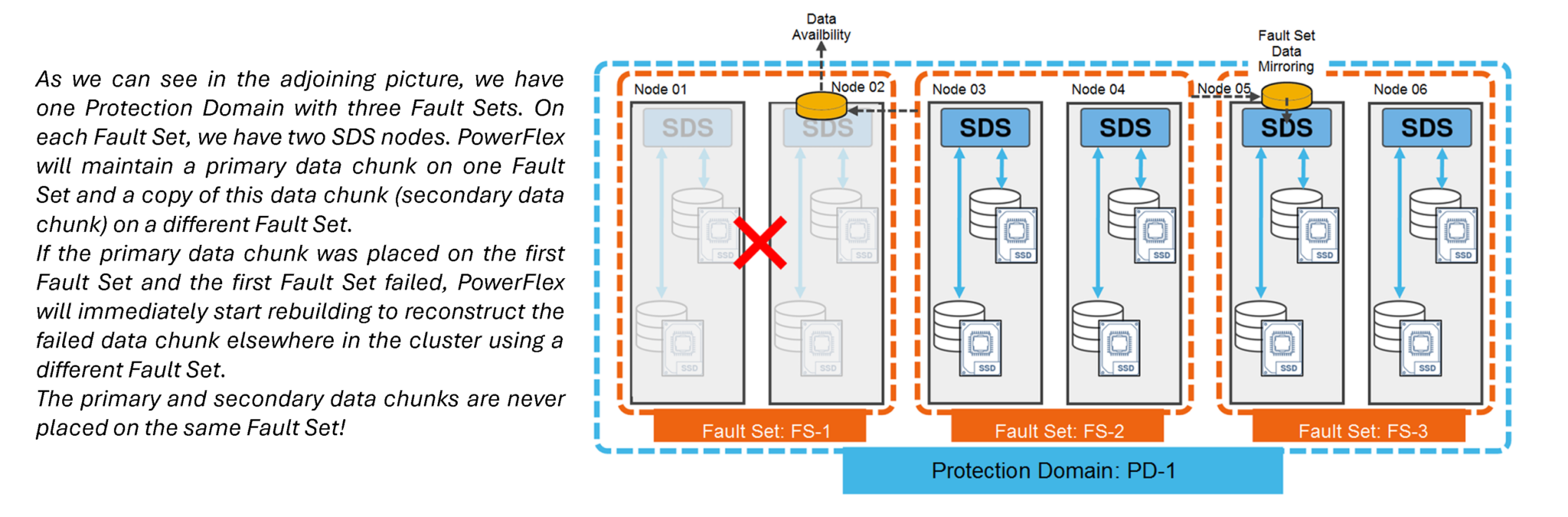

For every data chunk (block) stored within a Fault Set, PowerFlex keeps a copy on SDSs outside that Fault Set:

Mirroring Fault Set data ensures that another data copy is always available even if all the servers within the defined Fault Set fail simultaneously!

How can I create Fault Sets?

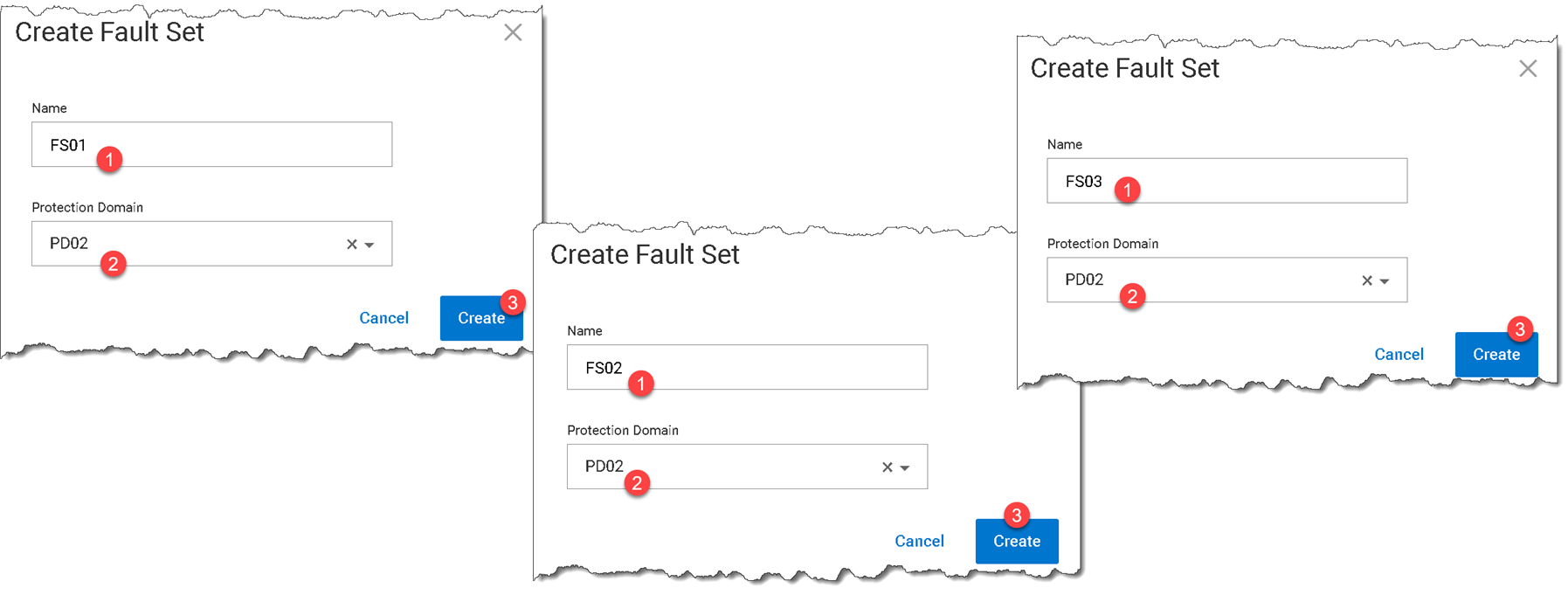

1- To create a Fault Set, access the PowerFlex Manager UI –> Block –> Fault Sets –> + Create Fault Set:

In this example, we will create three Fault Sets (FS01, FS02, and FS03):

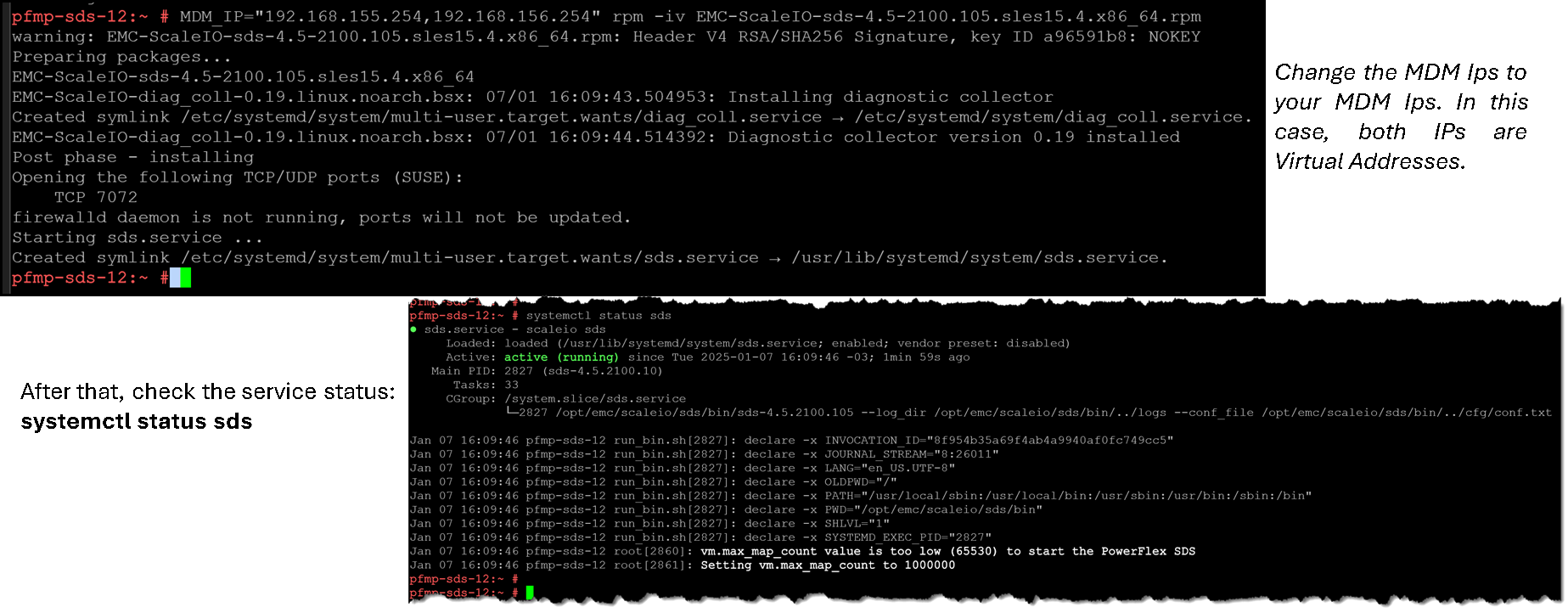

2- Copy the SDS package to the SDS node and install it (since we are using SLES 15 SP4, we chose the matching package):

MDM_IP="192.168.155.254,192.168.156.254" rpm -iv EMC-ScaleIO-sds-4.5-2100.105.sles15.4.x86_64.rpm

Note: Install the SDS package on all SDS nodes you want to be part of Fault Sets!

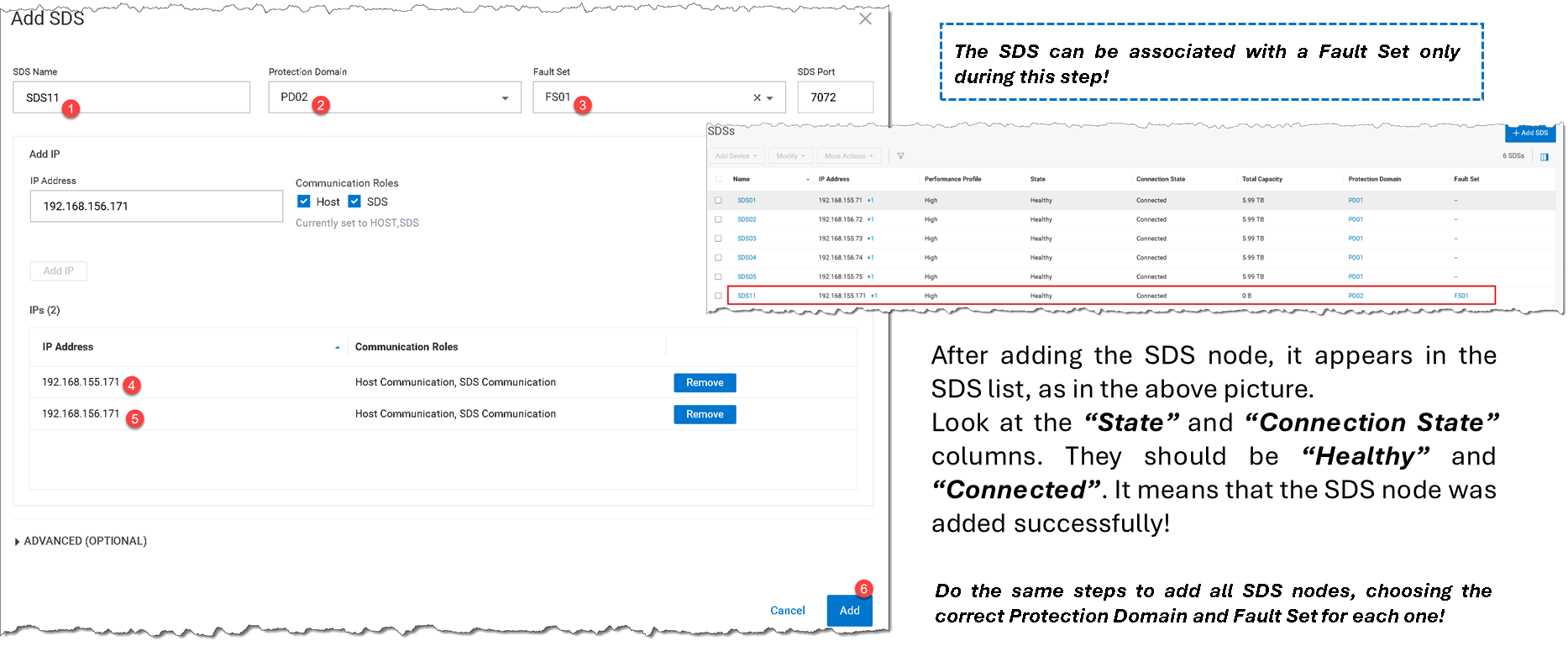

3- To add an SDS node to a Fault Set, access the PowerFlex Manager UI –> Block –> SDSs –> + Add SDS:

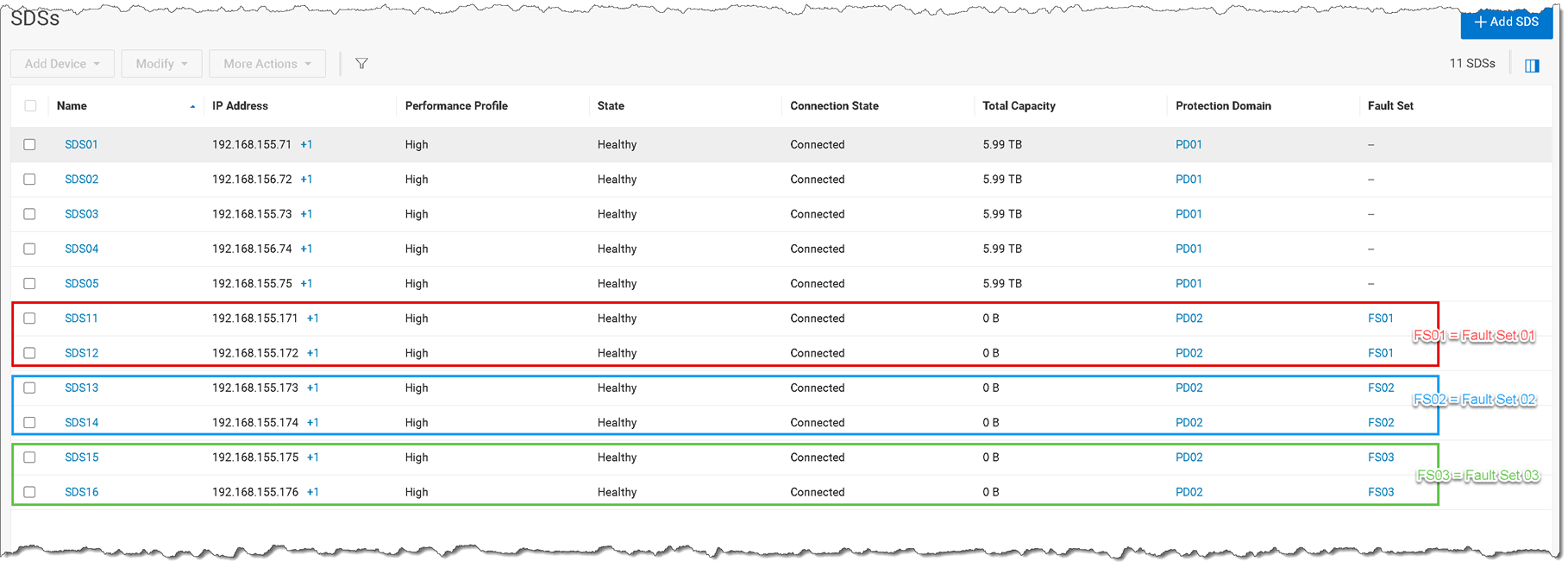

4- The result is three Fault Sets with two SDS nodes each, as we can see in the following picture:

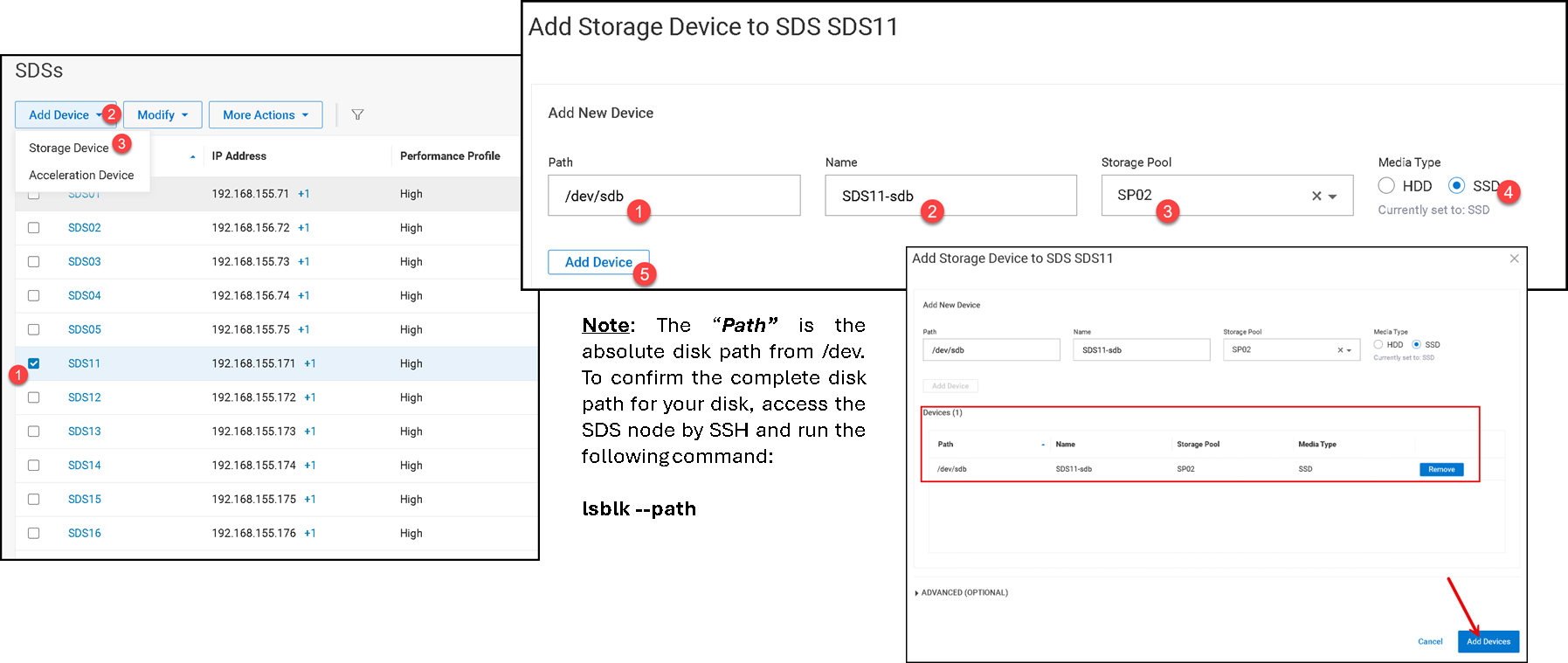

5- Each SDS node has one disk device. Currently, the Storage Pool has no capacity because we have not added the disk devices yet (we only added the SDS nodes). The next step is to add each disk device. On the SDSs menu, select the SDS –> Add Device –> Storage Device:

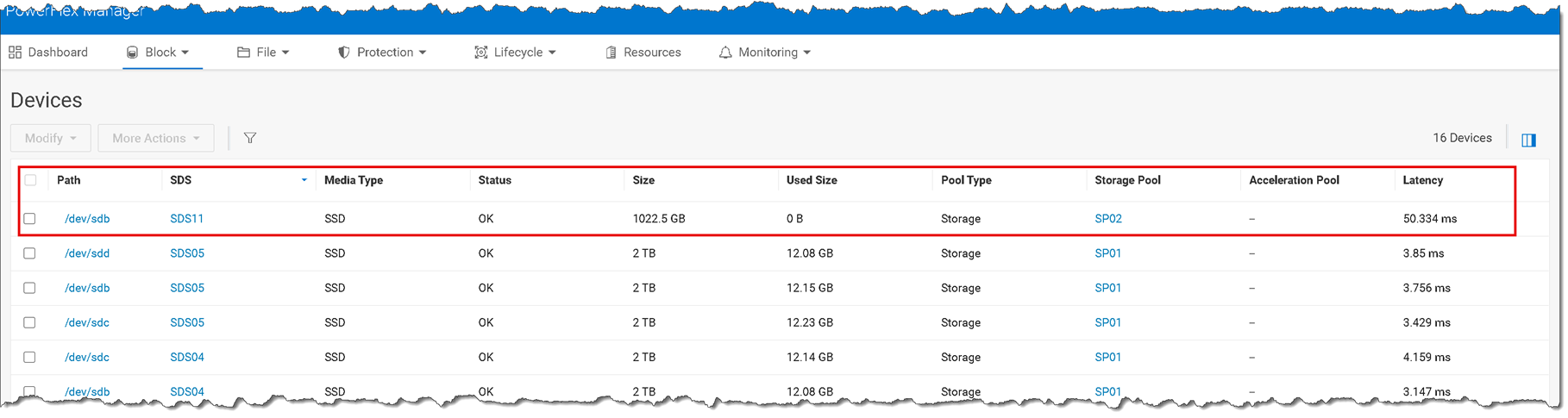

6- Afterward, go to the Devices menu to see the added device. On the PowerFlex Manager UI –> Block –> Devices:

Do the same steps to add the other disk devices!

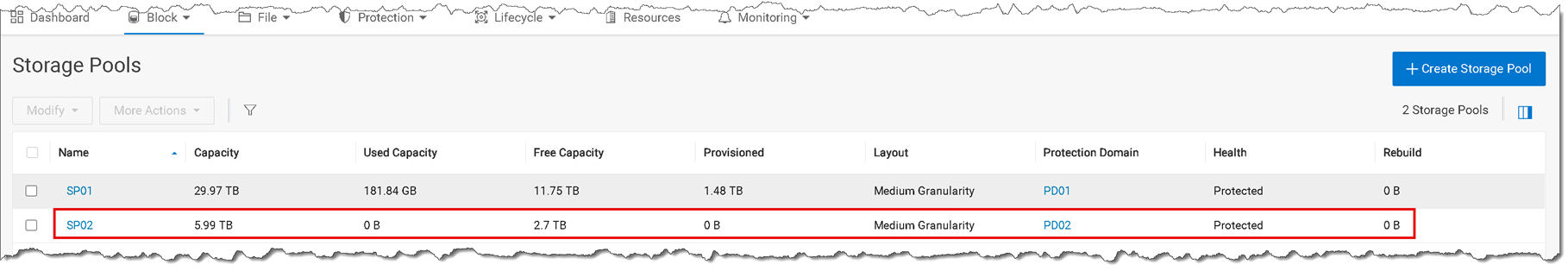

7- After all disk devices are added, the Storage Pool “SP02” shows the capacity available for use:

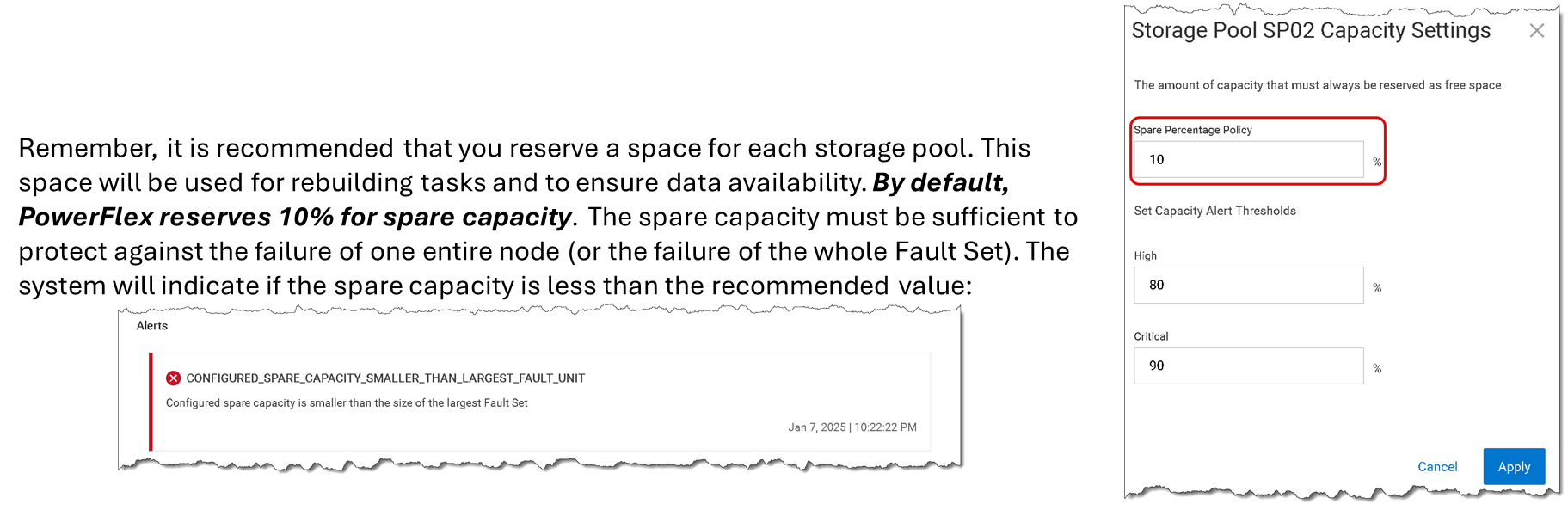

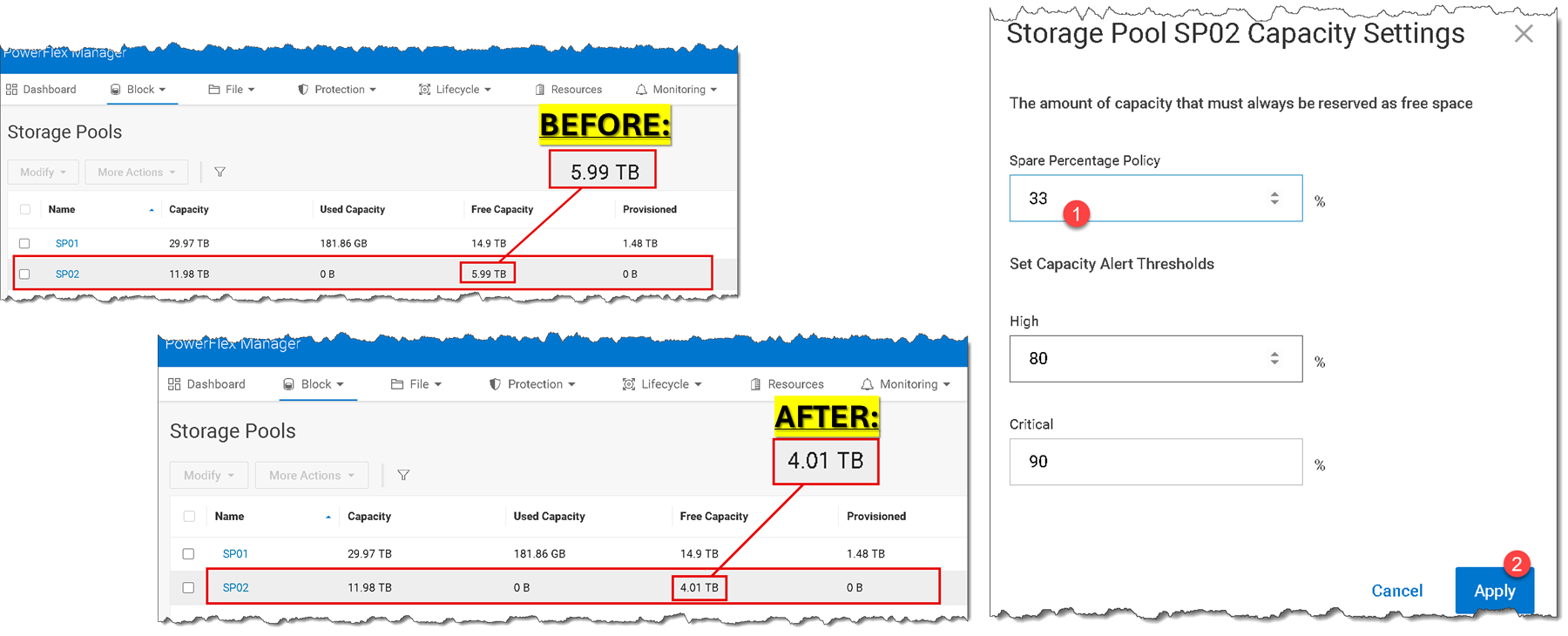

8- To set the recommended spare capacity percentage, we can use the following calculation:

100% divided by the number of SDS nodes or number of Fault Sets (if you are using Fault Sets)

100% divided by 3 (number of Fault Sets) = ~ 33%
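The same rule can be expressed as a one-line helper. This is a minimal sketch of the calculation above, assuming all SDS nodes (or Fault Sets) are the same size; the function name is hypothetical and it does not talk to PowerFlex in any way.

```python
def recommended_spare_percentage(failure_units):
    """100% divided by the number of SDS nodes, or Fault Sets when they are in use."""
    if failure_units < 1:
        raise ValueError("at least one SDS node or Fault Set is required")
    return 100 / failure_units


print(round(recommended_spare_percentage(3)))  # 3 Fault Sets -> 33
print(round(recommended_spare_percentage(6)))  # 6 SDS nodes, no Fault Sets -> 17
```

The intuition is that the spare capacity should be large enough to absorb the loss of one whole unit, so with fewer, larger units (Fault Sets instead of individual SDS nodes) the percentage goes up.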

Deep Dive into Raw and Free Space with Fault Sets

The following diagram shows our scenario using Fault Sets. We have three Fault Sets (FS01, FS02, and FS03), each with two SDS nodes. Inside each Fault Set, every SDS node provides 2TB of raw capacity, so each Fault Set holds 4TB of raw capacity. However, because PowerFlex places each data chunk in a mesh-mirror layout, every 2TB of raw capacity yields 1TB of usable/free capacity (PowerFlex automatically reserves 50% of the raw capacity to store the replica of each data chunk). So, based on this scenario:

1) What is the total usable/free capacity for the PowerFlex Cluster?

2) How do we calculate the spare capacity percentage for this PowerFlex Cluster?

Answers:

1- FS01 (4TB) + FS02 (4TB) + FS03 (4TB) = 12TB of raw capacity

12TB – 50% of mesh-mirror overhead = 6TB of usable/free capacity

2- By default, 10% of spare capacity is reserved for each Storage Pool. However, to set up the correct value based on the number of SDS nodes/Fault Sets, we need:

100% / number of SDS nodes or Fault Sets

100% / 3 Fault Sets = ~ 33% of spare capacity

6TB – 33% = ~ 4TB of usable/free capacity
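The two answers can be checked with a few lines of code. This is a sketch of the arithmetic used in this article, not an official sizing tool: the function name and parameters are hypothetical, and it uses the exact 1/3 spare fraction instead of the rounded 33%.

```python
def usable_capacity_tb(raw_per_fault_set_tb, fault_sets, copies=2):
    """Return (raw, after mirroring, after spare) capacity in TB."""
    raw = raw_per_fault_set_tb * fault_sets
    # Two copies of every data chunk: half of the raw capacity holds replicas.
    after_mirroring = raw / copies
    # Spare capacity sized for the loss of one Fault Set (100% / fault_sets).
    spare_fraction = 1 / fault_sets
    after_spare = after_mirroring * (1 - spare_fraction)
    return raw, after_mirroring, after_spare


raw, mirrored, net = usable_capacity_tb(raw_per_fault_set_tb=4, fault_sets=3)
print(raw, mirrored, round(net, 1))  # 12 6.0 4.0
```

Changing `fault_sets` or `raw_per_fault_set_tb` makes it easy to see how the net capacity moves as the cluster grows.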

How is a data chunk placed within Fault Sets?

As we can see in the following picture, a data chunk (green item) is placed in a mesh-mirror layout, spread over different Fault Sets (the primary data chunk is placed somewhere on one Fault Set, and the secondary/copy data chunk is placed somewhere on a different Fault Set):

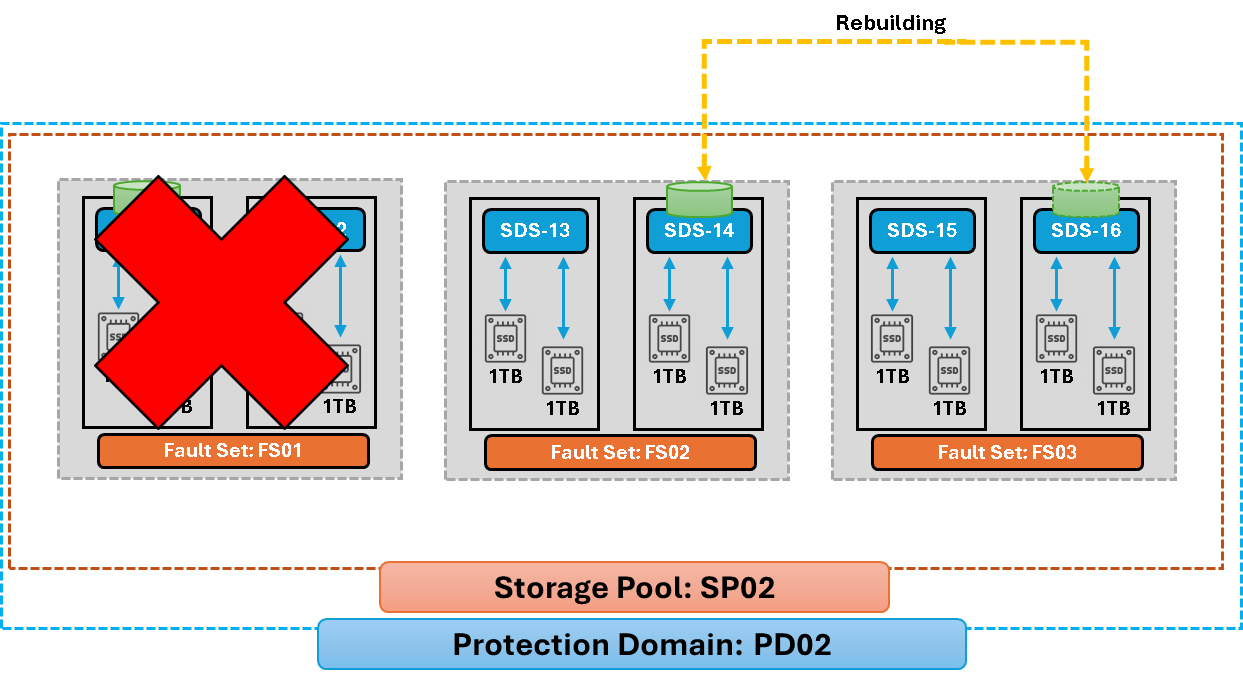
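The placement rule in the picture can be illustrated with a toy model. This is only a sketch under loud assumptions: the real PowerFlex placement logic is internal to the product and also balances capacity and load, while this example simply picks two different Fault Sets at random. The function name and the SDS names are hypothetical.

```python
import random


def place_chunk(fault_sets, rng=random):
    """Pick (fault_set, sds) for the primary and secondary copy of one chunk.

    The only rule modelled here is that the two copies must land in
    different Fault Sets; random selection is an illustrative assumption.
    """
    primary_fs, secondary_fs = rng.sample(sorted(fault_sets), 2)
    primary = (primary_fs, rng.choice(fault_sets[primary_fs]))
    secondary = (secondary_fs, rng.choice(fault_sets[secondary_fs]))
    return primary, secondary


# Hypothetical SDS names: three Fault Sets with two SDS nodes each.
layout = {
    "FS01": ["sds-01", "sds-02"],
    "FS02": ["sds-03", "sds-04"],
    "FS03": ["sds-05", "sds-06"],
}

rng = random.Random(42)  # seeded only to make repeated runs comparable
for chunk_id in range(4):
    primary, secondary = place_chunk(layout, rng)
    print(chunk_id, "primary:", primary, "secondary:", secondary)
```

Whatever the random choices are, the primary and the secondary copy never share a Fault Set, which is exactly the property that protects the data when a whole rack goes down.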

Note: When an entire Fault Set fails, PowerFlex immediately starts a rebuild to re-create the lost data chunks in another Fault Set.
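To make the rebuild idea concrete, here is a toy simulation of it. It is a simplified sketch, not how PowerFlex implements rebuilds: chunks are tracked only at Fault Set level, the target Fault Set is simply the first healthy one that does not already hold the surviving copy, and all names are hypothetical.

```python
def rebuild_after_fault_set_failure(chunks, failed_fs, fault_sets):
    """Re-create lost copies in a healthy Fault Set.

    chunks maps chunk_id -> (fault_set_of_copy_1, fault_set_of_copy_2).
    Returns a new mapping in which no copy lives in failed_fs.
    """
    healthy = [fs for fs in fault_sets if fs != failed_fs]
    rebuilt = {}
    for chunk_id, copies in chunks.items():
        survivors = [fs for fs in copies if fs != failed_fs]
        if len(survivors) == len(copies):
            rebuilt[chunk_id] = tuple(copies)  # chunk not affected
            continue
        # The new copy must not share a Fault Set with the surviving copy.
        candidates = [fs for fs in healthy if fs not in survivors]
        if not candidates:
            raise RuntimeError(f"no Fault Set left to rebuild {chunk_id}")
        rebuilt[chunk_id] = (survivors[0], candidates[0])
    return rebuilt


chunks = {
    "chunk-a": ("FS01", "FS02"),
    "chunk-b": ("FS02", "FS03"),
    "chunk-c": ("FS03", "FS01"),
}
after = rebuild_after_fault_set_failure(chunks, "FS02", ["FS01", "FS02", "FS03"])
print(after)
# {'chunk-a': ('FS01', 'FS03'), 'chunk-b': ('FS03', 'FS01'), 'chunk-c': ('FS03', 'FS01')}
```

In this model, a layout with only two Fault Sets would raise the error above, because no healthy Fault Set would be left to hold the second copy.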

That’s it 🙂