HPC is an acronym for High Performance Computing.

High-performance computing (HPC) generally refers to processing computationally intensive tasks at high speed across multiple servers in parallel. These groups of servers are called clusters: compute servers connected by a high-speed, low-latency network.

What’s an HPC Cluster?

In an HPC cluster, each computer/server is a node. So, an HPC cluster is a set of nodes.

HPC clusters run batches of computations. The core of any HPC cluster is the scheduler (Slurm, for example), which tracks available resources and efficiently assigns job requests to compute resources (CPUs and GPUs) connected by a fast network.

A typical HPC solution has three main components or layers: compute, network, and storage.

In the same context, the term “supercomputer” can refer to an HPC cluster. A supercomputer is composed of thousands of compute nodes that work together to complete tasks. Because the nodes can run tasks in parallel, results arrive faster than they would on a single machine.

While supercomputers were historically single super-fast machines, current high-performance computers are built from massive clusters of servers, each with one or more central processing units (CPUs).

Each node in an HPC cluster requires an operating system (OS). According to the TOP500 list, which continues to track the world’s most powerful computer systems, the large majority of high-performance computing systems run Linux. The lists are published on the TOP500 website.

Why HPC? What can we do with HPC?

Nowadays, organizations generate large volumes of data and need to process and use it quickly, often in real time. HPC clusters give them the computing capacity to do so.

HPC now runs from the cloud to the edge and can be applied to a wide range of problems because it can solve large-scale computational problems within reasonable time and cost limits. It is used in science, oil and gas, healthcare, and engineering, as well as in government and academic research, high-performance graphics, biosciences, genomics, manufacturing, financial services and banking, geoscience, and media.

To power increasingly sophisticated algorithms, high-performance data analysis (HPDA) has emerged as a new segment that leverages HPC resources for big data. In addition, supercomputing is enabling deep learning and neural networks to advance artificial intelligence (AI).

What’s Slurm?

As mentioned above, HPC clusters run batches of computations, and the scheduler is their core. So, let’s talk about Slurm.

Slurm is an open source, fault-tolerant, and highly scalable cluster management and job scheduling system for large and small Linux clusters. Slurm requires no kernel modifications to operate and is relatively self-contained. As a cluster workload manager, Slurm has three key functions:

1- It allocates exclusive and/or non-exclusive access to resources (compute nodes) to users for some duration of time so they can perform work.

2- It provides a framework for starting, executing, and monitoring work (usually a parallel job) on the set of allocated nodes.

3- It arbitrates contention for resources by managing a queue of pending work.

To learn more about Slurm, see its official documentation page.

Slurm Architecture

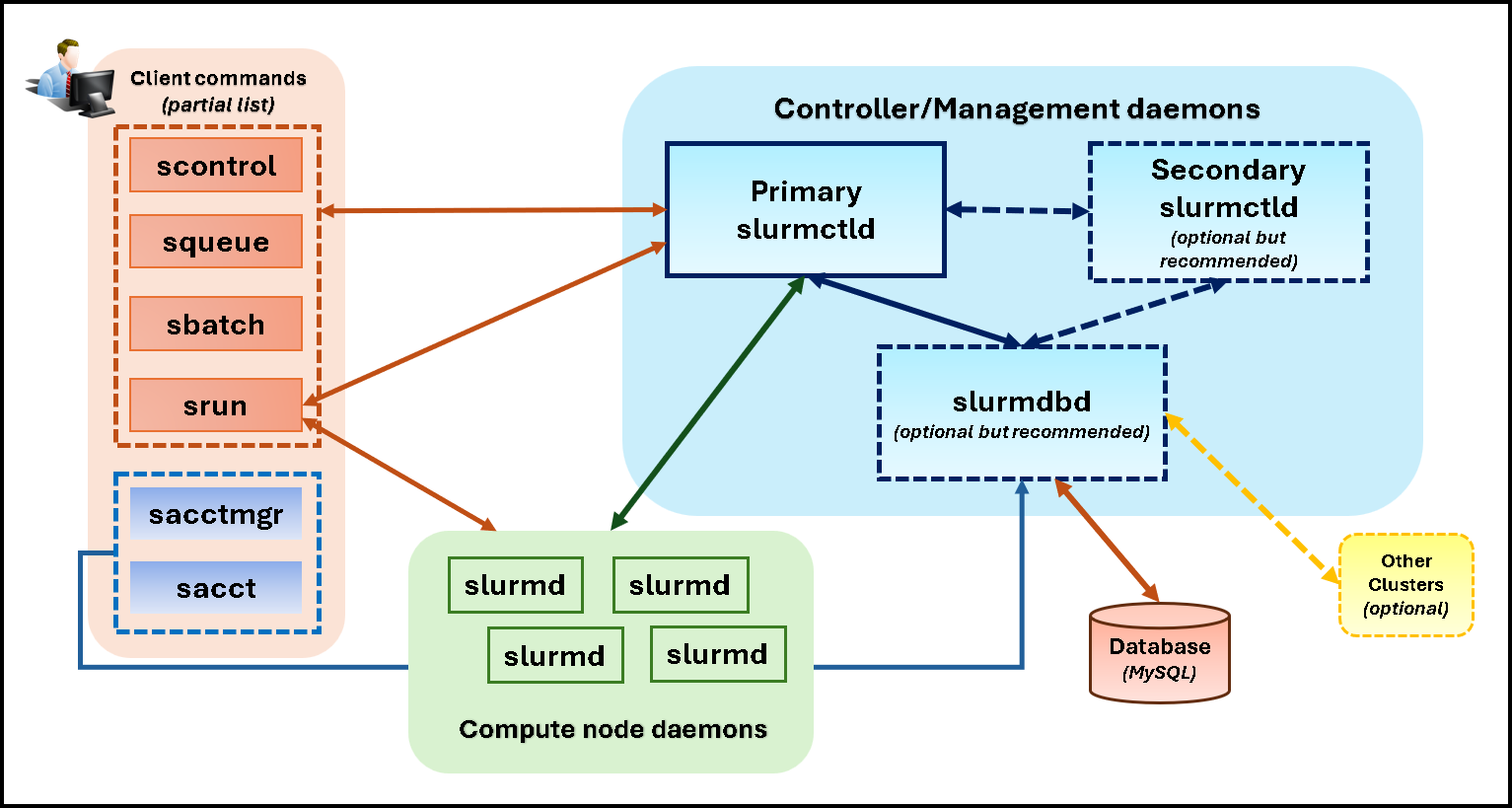

Slurm has a centralized manager, slurmctld, that monitors resources and workloads. There may also be a backup manager that takes over those responsibilities if the primary fails (the two are known as the primary and the secondary slurmctld).

Each compute server (node) has a slurmd daemon, which can be compared to a remote shell: it waits for work, executes it, returns its status, and waits for more work.
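The wait, execute, report cycle described above can be pictured as a small worker loop. The following is only a toy model in Python, not how slurmd is actually implemented; the names `worker_loop`, `work_queue`, `status_queue`, and the `None` shutdown sentinel are assumptions made for this sketch.

```python
import queue


def worker_loop(work_queue, status_queue):
    """Toy model of a node daemon: wait for work, run it, report status, repeat."""
    while True:
        item = work_queue.get()      # block until the controller sends work
        if item is None:             # sentinel (an assumption of this sketch): stop
            break
        job_id, func = item
        try:
            func()                   # execute the work
            status_queue.put((job_id, "COMPLETED"))
        except Exception:
            status_queue.put((job_id, "FAILED"))


def demo():
    """Send two pieces of work to the loop and collect the reported statuses."""
    work, status = queue.Queue(), queue.Queue()
    work.put((1001, lambda: sum(range(10))))
    work.put((1002, lambda: 1 / 0))  # raises, so it is reported as FAILED
    work.put(None)
    worker_loop(work, status)
    return [status.get() for _ in range(status.qsize())]


print(demo())
```

A real daemon would receive work over the network and launch processes rather than call Python functions, but the control flow has the same shape.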

There is an optional slurmdbd (Slurm Database Daemon), which can be used to record accounting information for multiple Slurm-managed clusters in a single database.

Users and clients interact with Slurm through a set of commands. Some of the most common ones are listed below (there are more):

- srun: command used to initiate jobs. The job is sent to the cluster and queued for execution.

- scancel: command used to terminate a queued or a running job.

- sinfo: used to report system status, like node status.

- squeue: used to report the status of each job.

- sacct: used to get information about jobs and job steps that are running or have completed. This information is stored in a database managed by the slurmdbd daemon.

- scontrol: an administrative tool for monitoring and/or modifying configuration and state information on the cluster.

- sacctmgr: another administrative tool, used to manage the accounting information stored in the database, such as clusters, accounts, and users.
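These commands can also be called from scripts. As a minimal sketch, the Python code below asks squeue for pipe-separated output and turns it into dictionaries. The `-h` (no header) and `-o` (output format) options and the `%i`, `%P`, `%j`, `%u`, `%T`, `%D` format fields are standard squeue options; the helper names, the field labels, and the sample text are assumptions made for illustration, and the parser does not handle job names that contain a `|` character.

```python
import shutil
import subprocess

# Fields requested from squeue: job ID, partition, job name, user, state, node count.
SQUEUE_FORMAT = "%i|%P|%j|%u|%T|%D"
FIELDS = ("job_id", "partition", "name", "user", "state", "nodes")


def parse_squeue(text):
    """Turn pipe-separated squeue output into a list of dictionaries."""
    jobs = []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        jobs.append(dict(zip(FIELDS, line.split("|"))))
    return jobs


def list_jobs():
    """Run squeue if it is installed; otherwise return an empty list."""
    if shutil.which("squeue") is None:
        return []
    result = subprocess.run(
        ["squeue", "-h", "-o", SQUEUE_FORMAT],
        capture_output=True, text=True, check=True,
    )
    return parse_squeue(result.stdout)


# Made-up sample output, used so the parser can be tried without a cluster.
sample = "1001|debug|sim|alice|RUNNING|2\n1002|batch|train|bob|PENDING|4\n"
for job in parse_squeue(sample):
    print(job["job_id"], job["state"], job["nodes"])
```

On a machine with Slurm installed, `list_jobs()` would return the same structure for the real queue.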

The following picture shows each Slurm component and how each interacts with the others:

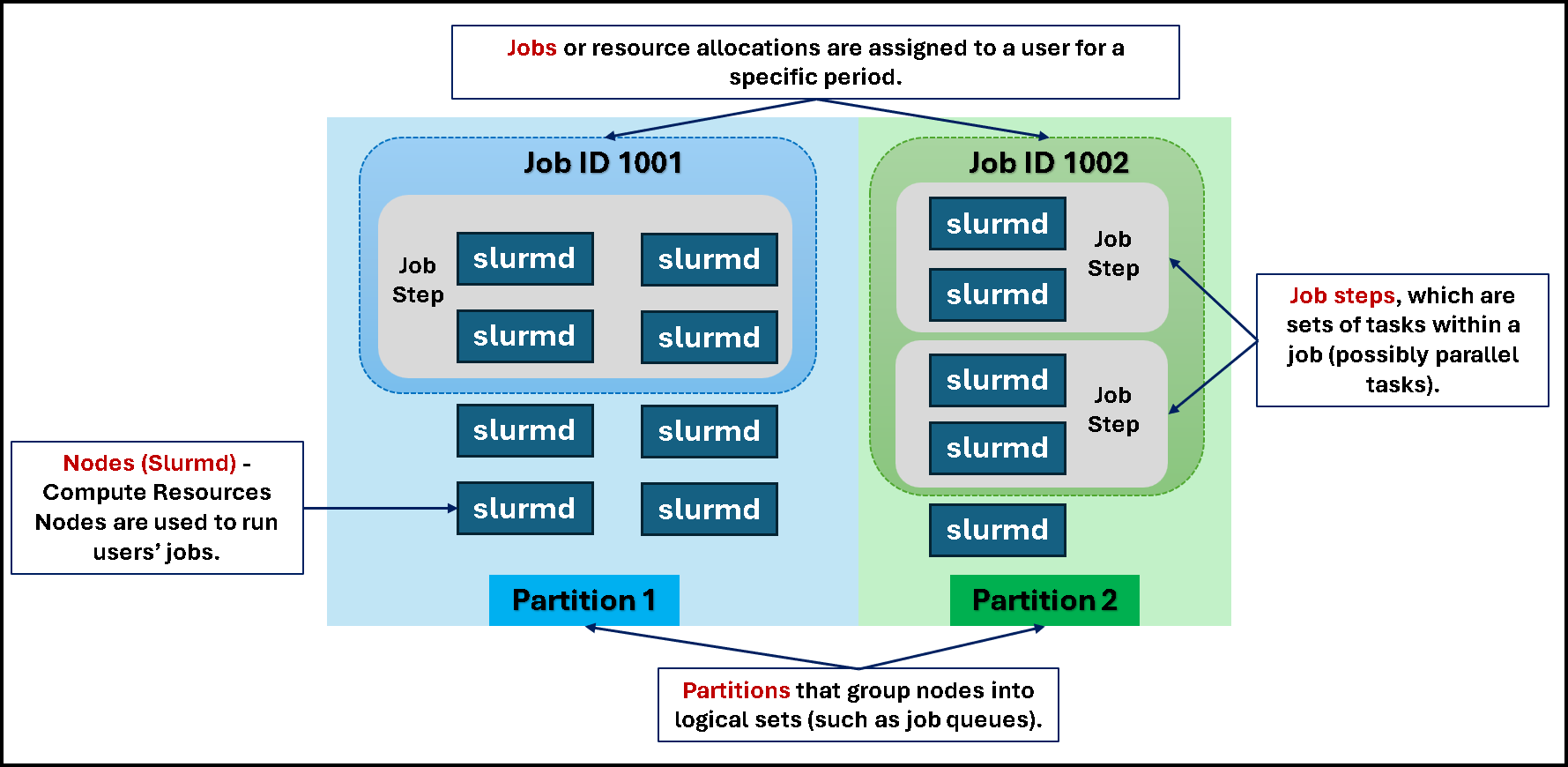

Each unit of work submitted by the user is called a “job”. A single job can run one or more tasks, which may execute in parallel. The following picture shows an example of two jobs (job IDs 1001 and 1002). As we can see, a job can use more than one node (slurmd); the user sets this up before submitting the job to the HPC cluster.
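One common way to request nodes and tasks before submission is a batch script with `#SBATCH` directives, submitted with the sbatch command (sbatch is not covered in this article, so treat this as a pointer rather than a full guide). The Python sketch below only builds the text of such a script; `render_batch_script` and the example values are hypothetical, while `--job-name`, `--nodes`, `--ntasks`, and `--time` are standard sbatch options.

```python
def render_batch_script(job_name, nodes, ntasks, time_limit, command):
    """Build the text of a Slurm batch script that requests nodes and tasks."""
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        f"#SBATCH --nodes={nodes}",      # number of nodes for the job
        f"#SBATCH --ntasks={ntasks}",    # total number of parallel tasks
        f"#SBATCH --time={time_limit}",  # wall-clock limit, HH:MM:SS
        "",
        f"srun {command}",               # launch the tasks as a job step
    ]
    return "\n".join(lines) + "\n"


# Example values chosen only for illustration.
script = render_batch_script(
    "demo", nodes=2, ntasks=8, time_limit="00:10:00", command="hostname"
)
print(script)
```

Saving the output to a file and passing it to sbatch would queue a job that spans two nodes and runs eight tasks.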

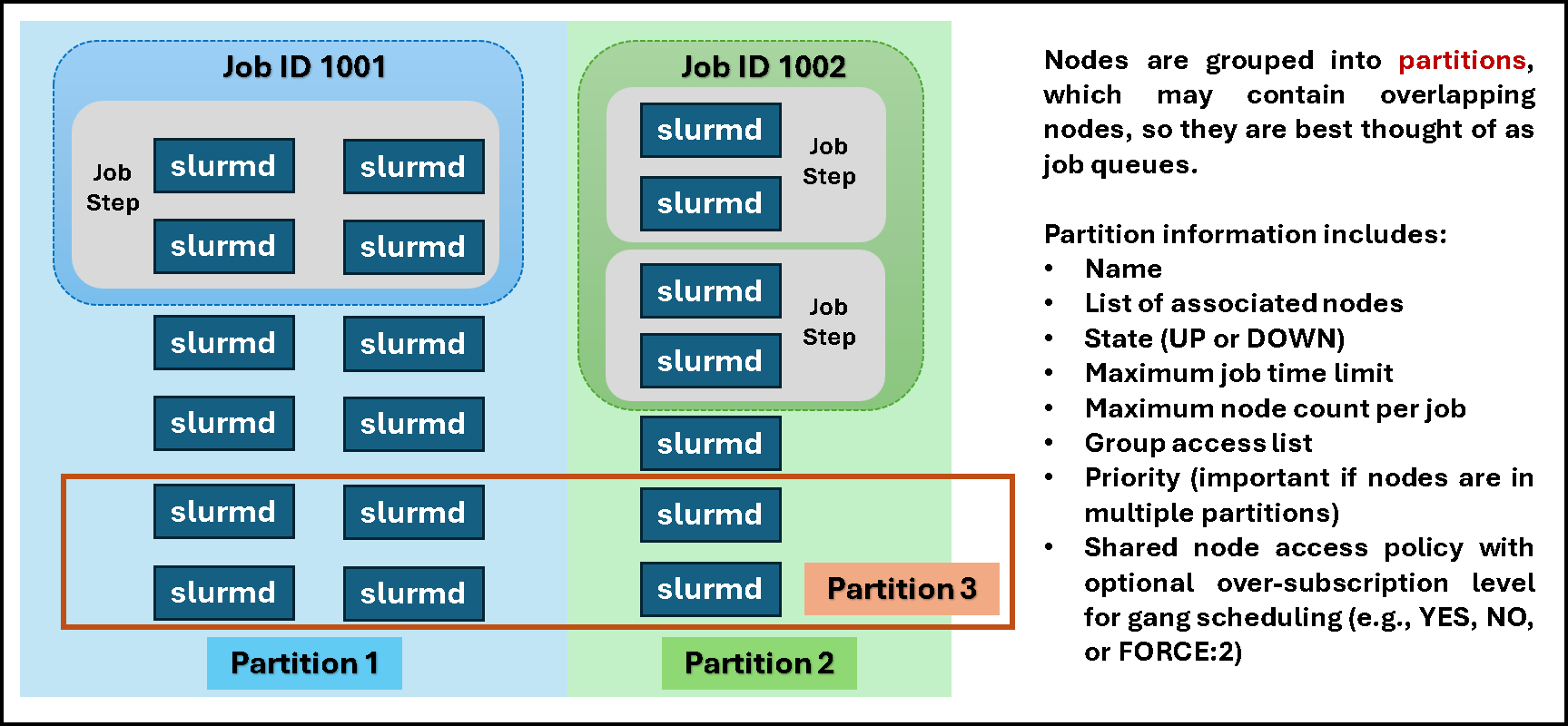

Partitions can be considered job queues, each with a set of constraints, such as a job size limit, a job time limit, the users permitted to use it, etc. Priority-ordered jobs are allocated nodes within a partition until the resources (nodes, processors, memory, etc.) within that partition are exhausted. Once a job is assigned a set of nodes, the user can initiate parallel work in the form of job steps across any configuration within the allocation.

Nodes are grouped into partitions, and partitions may overlap: a node can be part of more than one Slurm partition at the same time.
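To make the idea of overlapping partitions and priority-ordered allocation concrete, here is a toy model in Python. It is a deliberate simplification and not Slurm's scheduling algorithm: it only counts whole nodes, ignores processors, memory, and time limits, and lets a smaller job start when a higher-priority one does not fit. The function `allocate`, the partition names, and the node names are all made up for this sketch.

```python
def allocate(partitions, jobs):
    """Toy model: give each job free nodes from its partition, highest priority first.

    partitions: dict mapping partition name -> set of node names (sets may overlap)
    jobs: list of dicts with "id", "partition", "nodes" (count), and "priority"
    Returns (allocations, pending): job id -> node list, and ids left in the queue.
    """
    busy = set()
    allocations, pending = {}, []
    for job in sorted(jobs, key=lambda j: j["priority"], reverse=True):
        free = sorted(partitions[job["partition"]] - busy)
        if len(free) >= job["nodes"]:
            chosen = free[: job["nodes"]]
            busy.update(chosen)
            allocations[job["id"]] = chosen
        else:
            pending.append(job["id"])  # not enough free nodes: stays queued
    return allocations, pending


partitions = {
    "debug": {"node1", "node2"},
    "batch": {"node2", "node3", "node4"},  # node2 belongs to both partitions
}
jobs = [
    {"id": 1001, "partition": "batch", "nodes": 2, "priority": 10},
    {"id": 1002, "partition": "debug", "nodes": 2, "priority": 5},
    {"id": 1003, "partition": "debug", "nodes": 1, "priority": 1},
]
allocations, pending = allocate(partitions, jobs)
print(allocations)
print(pending)
```

Because node2 is shared, the batch job that takes it leaves the debug partition with a single free node, so job 1002 has to wait even though it was submitted to a different partition.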

That’s it for now 😉

This is a simple article to introduce HPC and Slurm. We plan to release more related articles about this fantastic subject!