How to Deploy PowerFlex Manager on Linux walks through all the steps needed to deploy PowerFlex Manager in a lab environment. We will use PowerFlex version 4.5.2!

What is PowerFlex Manager?

PowerFlex Manager simplifies deployment, management, and automation for PowerFlex systems. It centralizes control over compute and storage resources and streamlines lifecycle management, monitoring, and configuration operations, making it easier to manage and scale PowerFlex environments.

PowerFlex Manager 4.5.2 runs on a Kubernetes cluster. Kubernetes gives the platform scalability, resilience, and the ability to manage its containerized services, which makes it more efficient at managing PowerFlex environments.

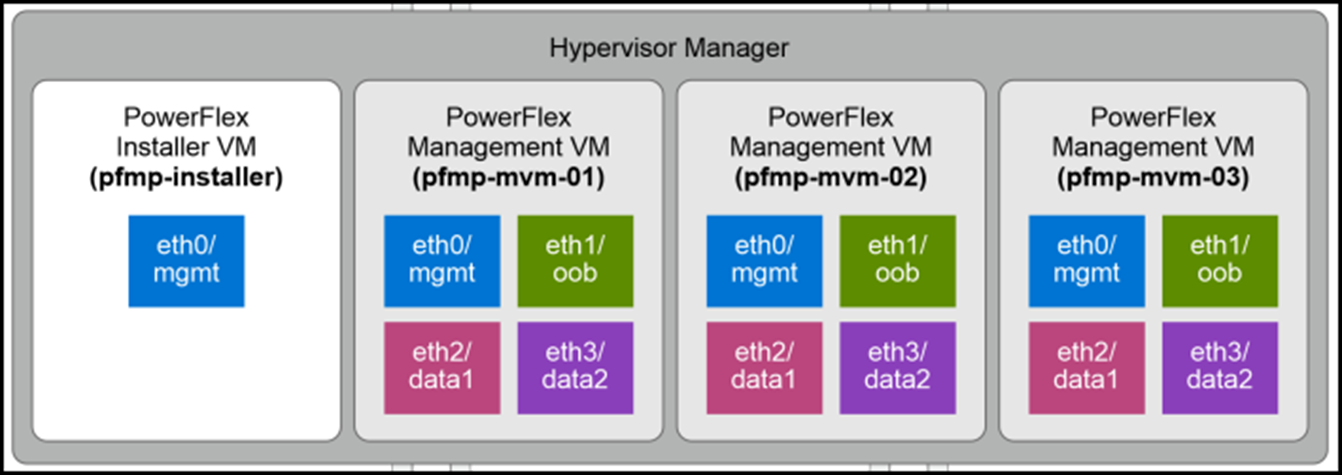

Create the PowerFlex Manager cluster with three nodes for redundancy purposes.

Previous PowerFlex versions, such as 3.6.x, did not include PowerFlex Manager. In those versions, the Gateway and Presentation Server provide the UI used to configure and manage the PowerFlex cluster.

Temporary Installer VM

A temporary installer VM is needed to deploy and configure the PowerFlex management platform in a Linux environment. This installer VM deploys the containerized services required for the PowerFlex management platform.

The following figure shows that the PowerFlex management platform consists of three nodes:

In our lab, the temporary installer VM runs CentOS 9. Its configuration is described below:

1- Network configuration:

Our management network interface is ens192, with the IP address 192.168.255.50. We use the “nmcli” tool to configure it; nmcli saves every change to the connection profile automatically, so the settings persist across reboots:
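The commands listed next apply these settings one at a time. If you prefer to keep the lab addressing in one place, the same steps can be wrapped in a small shell function. This is a minimal sketch: `configure_mgmt_if` and the `DRY_RUN` switch are hypothetical helpers (not part of PowerFlex), and only standard nmcli properties are used.

```bash
#!/usr/bin/env bash
# Minimal sketch: apply static IPv4 settings to one NetworkManager connection.
# configure_mgmt_if, run_or_print and DRY_RUN are hypothetical helpers.

# Run a command, or only print it when DRY_RUN=1 (useful for reviewing first).
run_or_print() {
  if [[ "${DRY_RUN:-0}" == "1" ]]; then
    echo "$*"
  else
    "$@"
  fi
}

configure_mgmt_if() {
  local con="$1" addr="$2" gw="$3" dns="$4" search="$5"
  # Address, gateway, DNS server and search domain in one modify call;
  # nmcli writes the change to the connection profile on disk.
  run_or_print nmcli connection modify "$con" \
    ipv4.addresses "$addr" ipv4.gateway "$gw" \
    ipv4.dns "$dns" ipv4.dns-search "$search" \
    ipv4.method manual || return 1
  # Re-activate the connection so the new settings take effect.
  run_or_print nmcli connection up "$con"
}
```

For example, `DRY_RUN=1 configure_mgmt_if ens192 192.168.255.50/24 192.168.255.1 192.168.255.3 lab.local` only prints the two nmcli commands; run it without `DRY_RUN` to apply them.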

nmcli connection modify ens192 ipv4.addresses 192.168.255.50/24 ipv4.method manual

nmcli connection modify ens192 ipv4.gateway 192.168.255.1

nmcli connection modify ens192 ipv4.dns 192.168.255.3 ipv4.dns-search lab.local

nmcli connection up ens192

2- NTP configuration:

Configure all devices to use the same NTP server. On CentOS, the chronyd daemon synchronizes the date and time with an NTP server:

vi /etc/chrony.conf # Under “server” add the NTP Server IP address
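As a non-interactive alternative to editing the file in vi, the server line can be appended by a small function. A minimal sketch, assuming the hypothetical helper name `add_ntp_server`; `iburst` is a standard chrony option.

```bash
#!/usr/bin/env bash
# Minimal sketch: append an NTP server to chrony's config without an editor.
# add_ntp_server is a hypothetical helper; "iburst" is a standard chrony
# option that speeds up the first synchronisation.
add_ntp_server() {
  local ntp_ip="$1" conf="${2:-/etc/chrony.conf}"
  # awk exits 0 only if a "server <ip>" line is already present.
  if awk -v ip="$ntp_ip" '$1 == "server" && $2 == ip { found = 1 } END { exit !found }' "$conf"; then
    return 0   # already configured: leave the file unchanged
  fi
  printf 'server %s iburst\n' "$ntp_ip" >> "$conf"
}
```

For example, `add_ntp_server <ntp-server-ip>` (substitute your NTP server's address). Running it twice does not duplicate the line. After chronyd is restarted, `chronyc sources` lists the servers chrony is using.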

systemctl enable chronyd

systemctl restart chronyd

3- Install all the necessary packages (the installer VM needs Internet access):

dnf install -y python3 python3-pip httpd-tools libselinux-python3 sshpass java-11-openjdk-headless jq haproxy keepalived lvm2 skopeo numactl libaio wget apr python3-rpm python3-cryptography yum-utils bash-completion binutils smartmontools sg3_utils hdparm pciutils ndctl daxio libpmem

4- Create a working directory to extract all PowerFlex Manager installation files:

Download the ‘PFMP2-4.5.2.0-173.tgz’ file from the PowerFlex section of support.dell.com and copy it into /var/lib/pfmp_installer before extracting it:
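The commands listed next create the directory and unpack the archive. A slightly more defensive sketch of the same step checks that the bundle exists and can be listed before extracting it. `extract_pfmp` is a hypothetical helper name; the default working directory is the one used in this article.

```bash
#!/usr/bin/env bash
# Minimal sketch: create the working directory and unpack the PFMP bundle.
# extract_pfmp is a hypothetical helper, not part of the Dell tooling.
extract_pfmp() {
  local bundle="$1" workdir="${2:-/var/lib/pfmp_installer}"
  mkdir -p "$workdir" || return 1
  if [[ ! -f "$bundle" ]]; then
    echo "bundle not found: $bundle" >&2
    return 1
  fi
  # Listing the archive first makes a truncated download fail before
  # anything is written to the working directory.
  tar -tzf "$bundle" > /dev/null || return 1
  tar -xzf "$bundle" -C "$workdir"
}
```

For example, `extract_pfmp /root/PFMP2-4.5.2.0-173.tgz`, where `/root` is only an assumed download location.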

mkdir -p /var/lib/pfmp_installer

cd /var/lib/pfmp_installer

tar -zxvf PFMP2-4.5.2.0-173.tgz

5- Install podman-docker:

The setup_installer.sh script uses docker to load an image from a tar file and then run that image as a container. Starting with RHEL 8, Red Hat replaced docker with podman. The good news is that the podman-docker package translates docker commands into podman commands. Each docker command prints a warning, which you can suppress by creating the /etc/containers/nodocker file:
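Once the packages listed next are installed, you can confirm that the `docker` command is really the podman-docker wrapper. A minimal sketch with `docker_is_podman` as a hypothetical helper name; it assumes the wrapper's `--version` banner starts with `podman`, as `podman --version` does.

```bash
#!/usr/bin/env bash
# Minimal sketch: check that "docker" resolves to podman-docker's wrapper.
# docker_is_podman is a hypothetical helper name.
docker_is_podman() {
  command -v docker > /dev/null 2>&1 || return 1
  # The wrapper runs podman, so the version banner should name podman.
  docker --version 2> /dev/null | grep -qi '^podman'
}
```

If `docker_is_podman` fails, setup_installer.sh is probably not talking to podman.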

dnf install -y podman podman-docker

touch /etc/containers/nodocker

6- Solve a clash related to the container networks:

There is a clash between the network plugin used by the installer container that creates the cluster and the Kubernetes cluster’s network plugin. The container itself runs fine and creates a network called pfmp_installer_nw. The bad news is that when the Kubernetes cluster is deployed, it somehow tries to grab this network and the deployment falls over. The good news is that Podman 4 introduced a new network backend called netavark (https://www.redhat.com/sysadmin/podman-new-network-stack) that eliminates this clash.

Install it and then set it as the default network_backend for podman (interestingly, on RHEL 9.2, netavark was installed automatically along with podman):
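The commands listed next install netavark and build the drop-in file with two `echo` calls. An equivalent sketch writes the file in one step with a heredoc. `write_netavark_dropin` is a hypothetical helper; its directory argument exists only so the function can be tried against a scratch directory.

```bash
#!/usr/bin/env bash
# Minimal sketch: write the podman drop-in that selects netavark.
# write_netavark_dropin is a hypothetical helper; the default directory
# is the one used in this article.
write_netavark_dropin() {
  local dir="${1:-/etc/containers/containers.conf.d}"
  mkdir -p "$dir" || return 1
  # Quoted 'EOF' keeps the content literal (no variable expansion).
  cat > "$dir/pfmp.conf" <<'EOF'
[network]
network_backend = "netavark"
EOF
}
```

Afterwards, `podman info | grep -i networkbackend` should report `netavark` on Podman 4 or later.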

dnf install -y netavark

mkdir -p /etc/containers/containers.conf.d

echo "[network]" > /etc/containers/containers.conf.d/pfmp.conf

echo 'network_backend = "netavark"' >> /etc/containers/containers.conf.d/pfmp.conf

7- Create a podman firewall service:

The deployment of the PowerFlex Manager Kubernetes cluster opens several firewall ports and reloads the firewall. Each firewalld reload flushes the NAT and forwarding rules that podman created for its networks, which breaks the installer container’s networking.
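The service file below fixes this by watching firewalld's D-Bus signals. Its `ExecStart` line packs the decision logic into a single `bash -c` loop; the sketch below pulls that logic out into a function so it is easier to read. `classify_firewalld_event` is a hypothetical name, the field order mirrors the `read` statement in the unit, and where the real unit runs `podman network reload --all`, this sketch only prints the message.

```bash
#!/usr/bin/env bash
# Minimal sketch of the podman-firewalld-reload loop body.
# classify_firewalld_event is a hypothetical name; fields follow the unit's
# "read -r type timestamp serial sender destination path interface member".
classify_firewalld_event() {
  local type timestamp serial sender destination path interface member _junk
  read -r type timestamp serial sender destination path interface member _junk <<< "$1"
  if [[ $type = '#'* ]]; then
    return 1                  # header line printed by dbus-monitor --profile
  elif [[ $interface = org.freedesktop.DBus && $member = NameAcquired ]]; then
    echo 'firewalld started'  # unit then runs: podman network reload --all
  elif [[ $interface = org.fedoraproject.FirewallD1 && $member = Reloaded ]]; then
    echo 'firewalld reloaded' # unit then runs: podman network reload --all
  else
    return 1                  # any other signal is ignored
  fi
}
```

Both firewalld events trigger the same action because a fresh start and a reload both leave podman's rules missing.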

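To fix this, create the file “/etc/systemd/system/podman-firewalld-reload.service” with the following content. The service watches D-Bus for firewalld start and reload signals and runs “podman network reload --all” each time: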

vi /etc/systemd/system/podman-firewalld-reload.service

# /etc/systemd/system/podman-firewalld-reload.service

[Unit]

Description=Redo podman NAT rules after firewalld starts or reloads

Wants=dbus.service

After=dbus.service

[Service]

Type=simple

Environment=LC_CTYPE=C.utf8

ExecStart=/bin/bash -c "dbus-monitor --profile --system 'type=signal,sender=org.freedesktop.DBus,path=/org/freedesktop/DBus,interface=org.freedesktop.DBus,member=NameAcquired,arg0=org.fedoraproject.FirewallD1' 'type=signal,path=/org/fedoraproject/FirewallD1,interface=org.fedoraproject.FirewallD1,member=Reloaded' | sed -u '/^#/d' | while read -r type timestamp serial sender destination path interface member _junk; do if [[ $type = '#'* ]]; then continue; elif [[ $interface = org.freedesktop.DBus && $member = NameAcquired ]]; then echo 'firewalld started'; podman network reload --all; elif [[ $interface = org.fedoraproject.FirewallD1 && $member = Reloaded ]]; then echo 'firewalld reloaded'; podman network reload --all; fi; done"

Restart=always

[Install]

WantedBy=multi-user.target

8- Enable the service created in the previous step:

systemctl daemon-reload

systemctl enable --now podman-firewalld-reload.service

9- Disable the firewall service:

systemctl stop firewalld

systemctl disable firewalld

10- Create the “PFMP_Config.json” file (we will discuss it later).

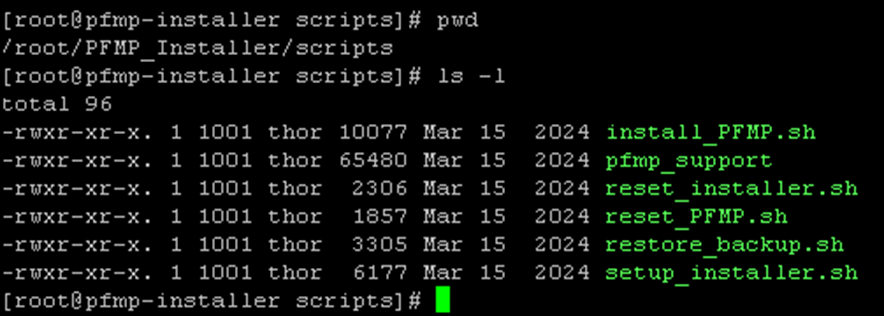

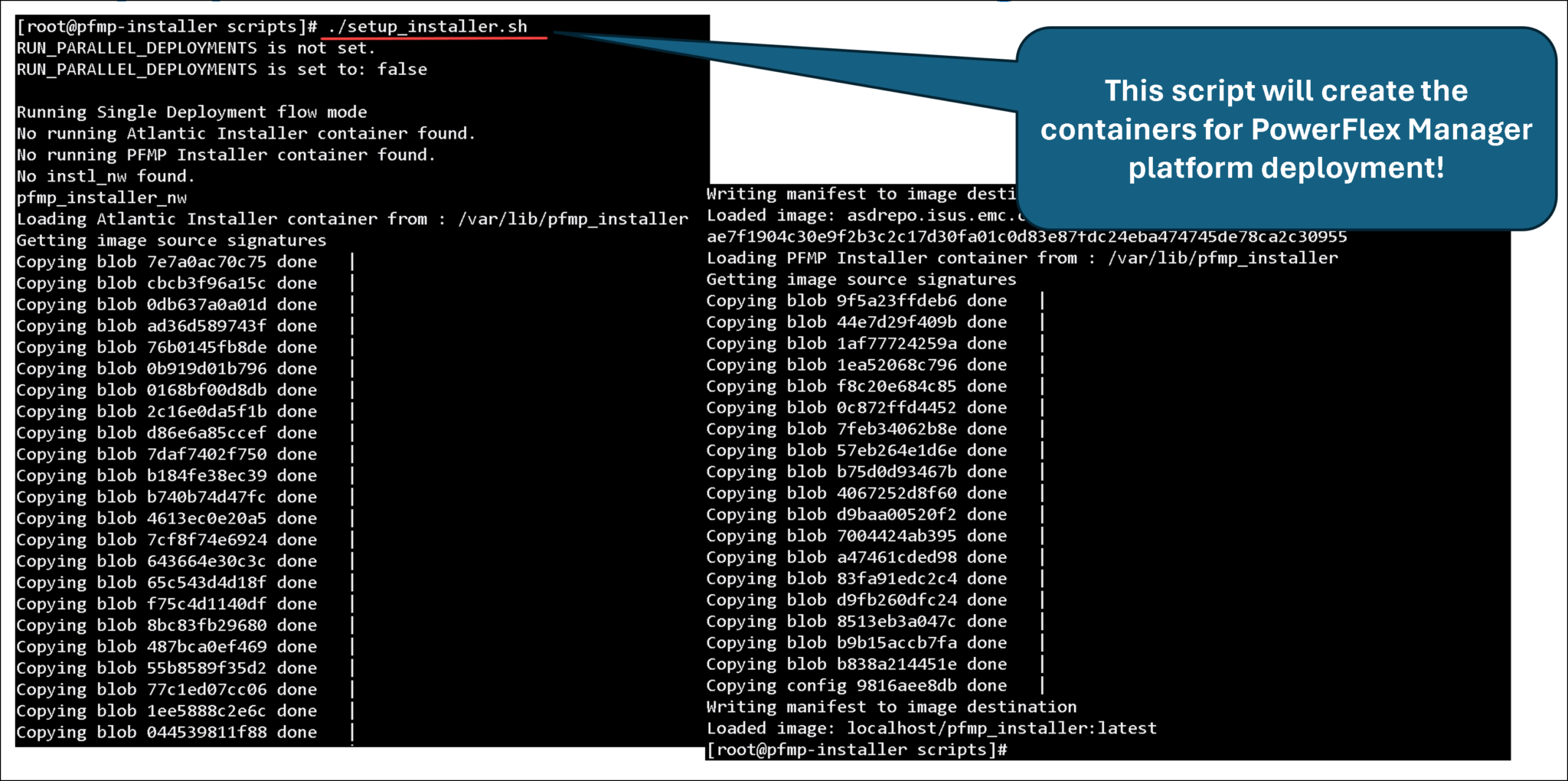

11- Create the installation container:

Run the “setup_installer.sh” script to create the container that performs the PowerFlex Manager deployment:

cd /var/lib/pfmp_installer/PFMP_Installer/scripts

./setup_installer.sh

Note: I used “podman” instead of “docker” based on an excellent article. Click here to read it!

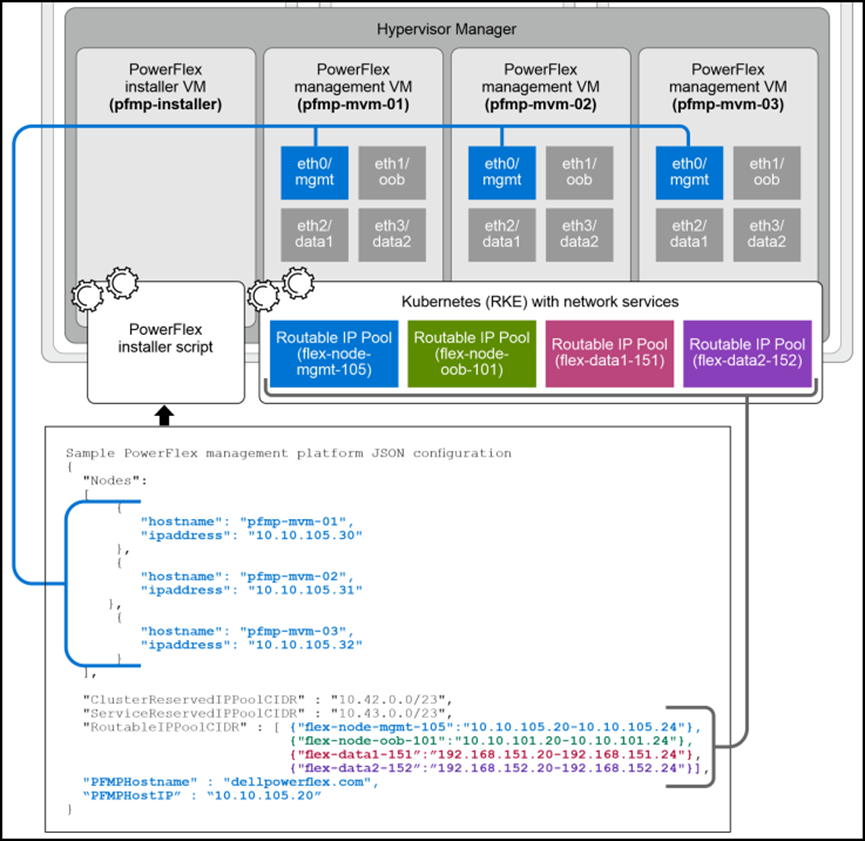

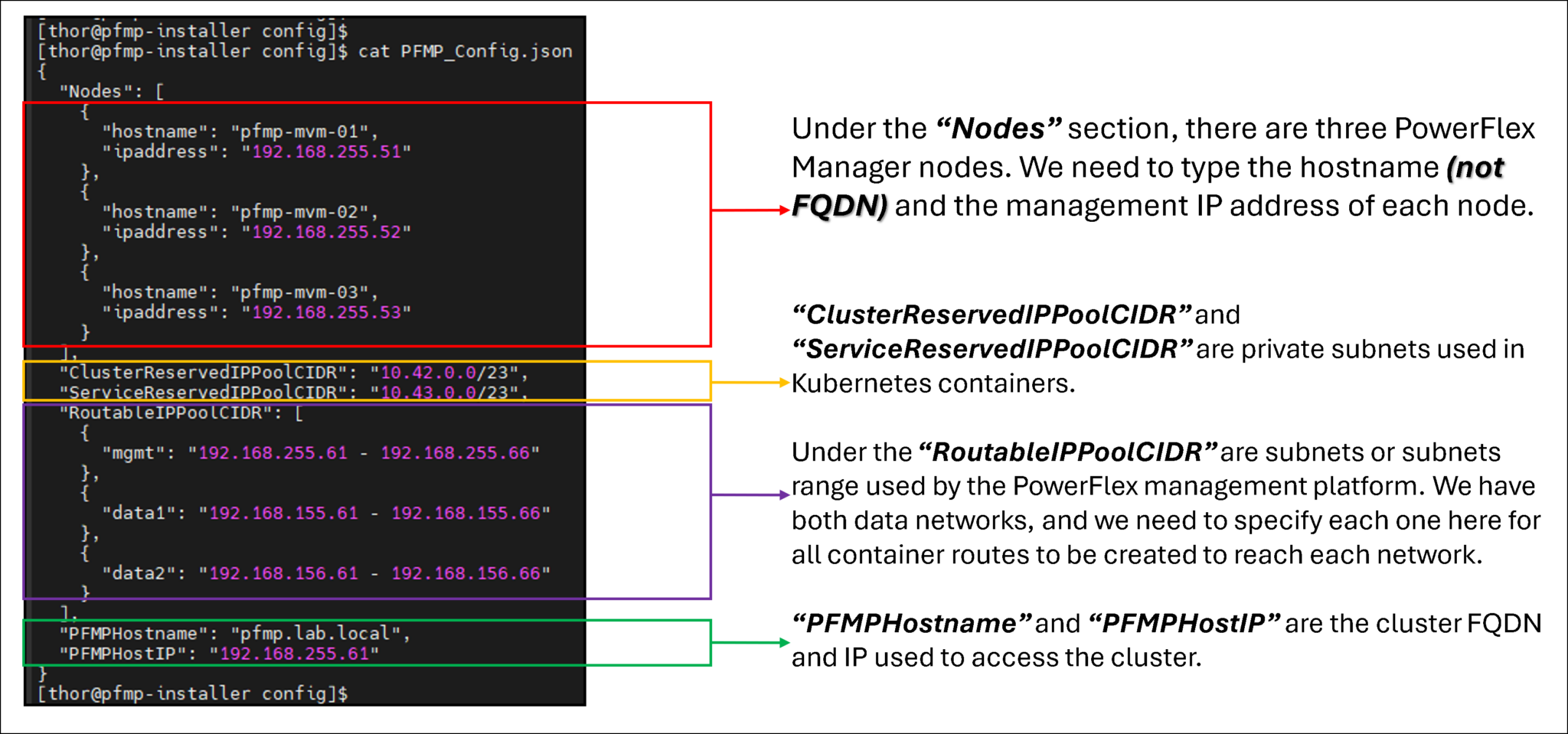
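After setup_installer.sh finishes, a quick check confirms that the installer container is running and that the pfmp_installer_nw network described in step 6 exists. A minimal sketch using standard podman subcommands; `check_installer` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Minimal sketch: verify the installer container and its network.
# check_installer is a hypothetical helper name.
check_installer() {
  # Show running containers with their status for a visual check.
  podman ps --format '{{.Names}} {{.Status}}' || return 1
  # Succeed only if the installer network exists (exact name match).
  podman network ls --format '{{.Name}}' | grep -qx 'pfmp_installer_nw'
}
```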

The PFMP_Config.json Configuration File

Use the PFMP_Config.json file to configure and deploy the PowerFlex management platform cluster:

The temporary VM uses this JSON file, along with other scripts, to deploy and configure the cluster. The “scripts” directory, for instance, contains all the available scripts:

The JSON file is under the “config” directory!

In the following picture, we can see an example of our configuration file, filled out with details about our lab environment:
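Before deploying, it is worth catching JSON syntax errors in the file, since a single missing bracket stops the installer. A minimal sketch using Python's standard `json.tool` module (python3 is installed in step 3). `validate_pfmp_config` is a hypothetical helper, and it checks syntax only, not whether the PowerFlex-specific fields are correct.

```bash
#!/usr/bin/env bash
# Minimal sketch: syntax-check a JSON config file before deployment.
# validate_pfmp_config is a hypothetical helper name.
validate_pfmp_config() {
  local cfg="$1"
  # json.tool parses the file and exits non-zero on any syntax error.
  if python3 -m json.tool "$cfg" > /dev/null; then
    echo "OK: $cfg is valid JSON"
  else
    echo "ERROR: $cfg is not valid JSON" >&2
    return 1
  fi
}
```

For example, `validate_pfmp_config /var/lib/pfmp_installer/PFMP_Installer/config/PFMP_Config.json`; this path assumes the “config” directory sits next to the “scripts” directory used in step 11.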

Deployment of PowerFlex Management Platform Cluster

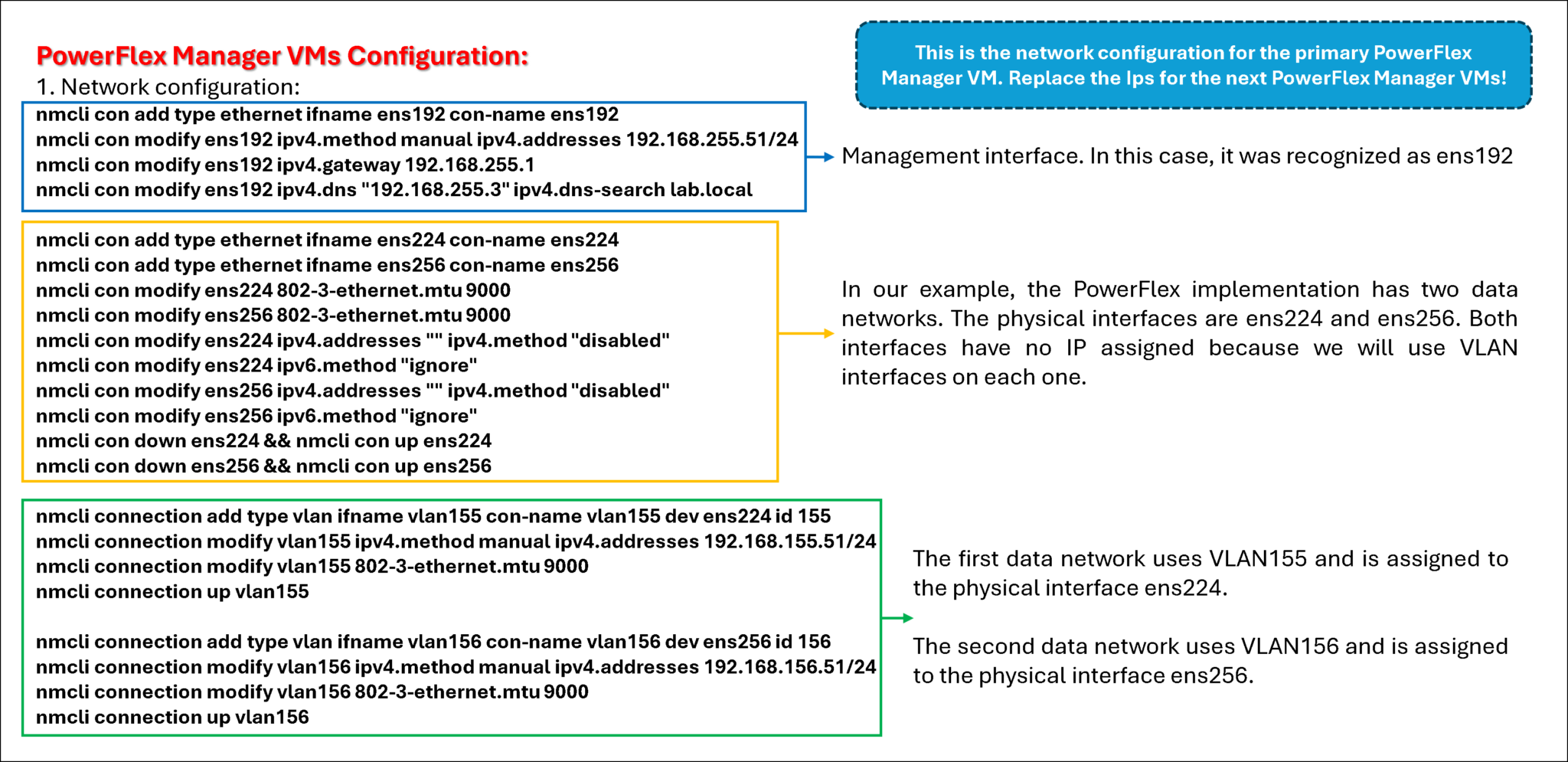

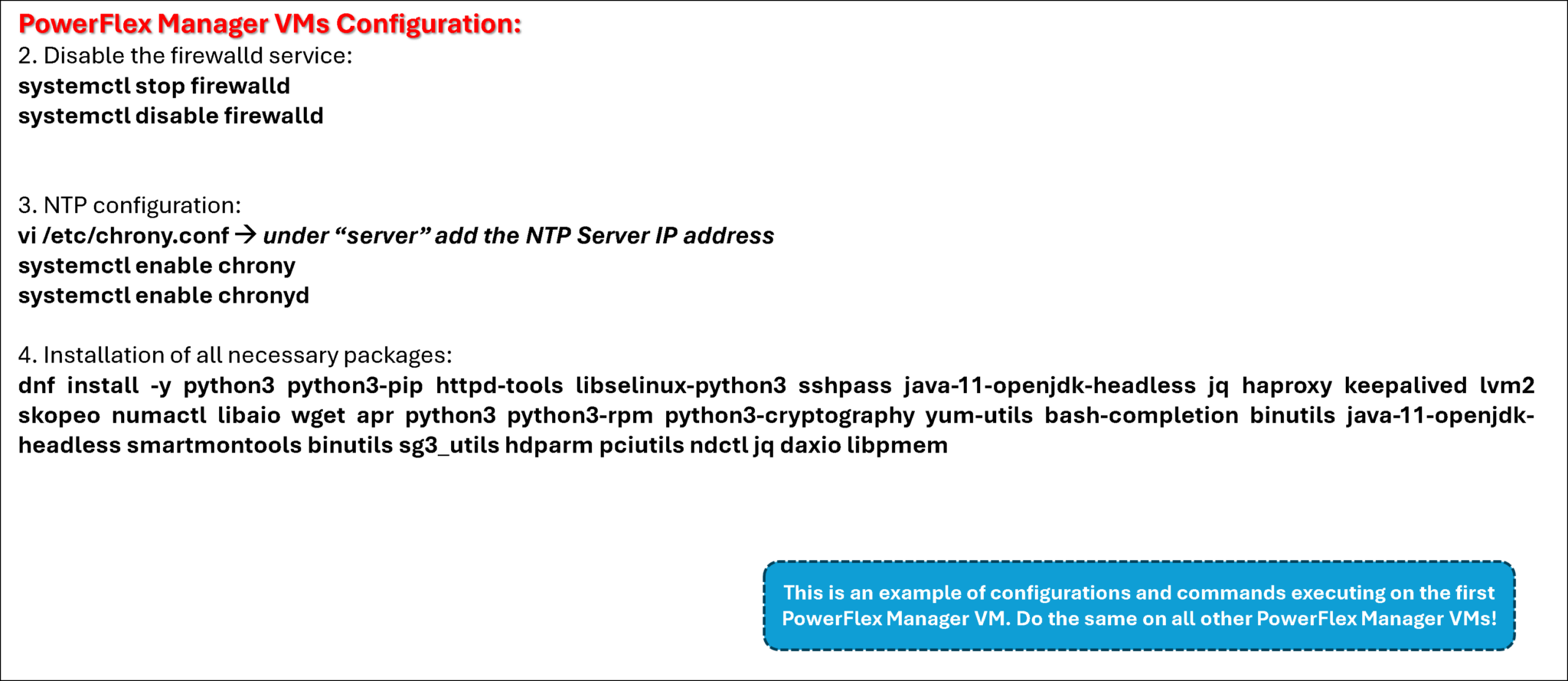

The following step configures the three VMs that will be part of the PowerFlex Manager cluster.

I used the PowerFlex Install and Upgrade guide to get details of the hardware configuration for those VMs. You can access this guide by clicking here.

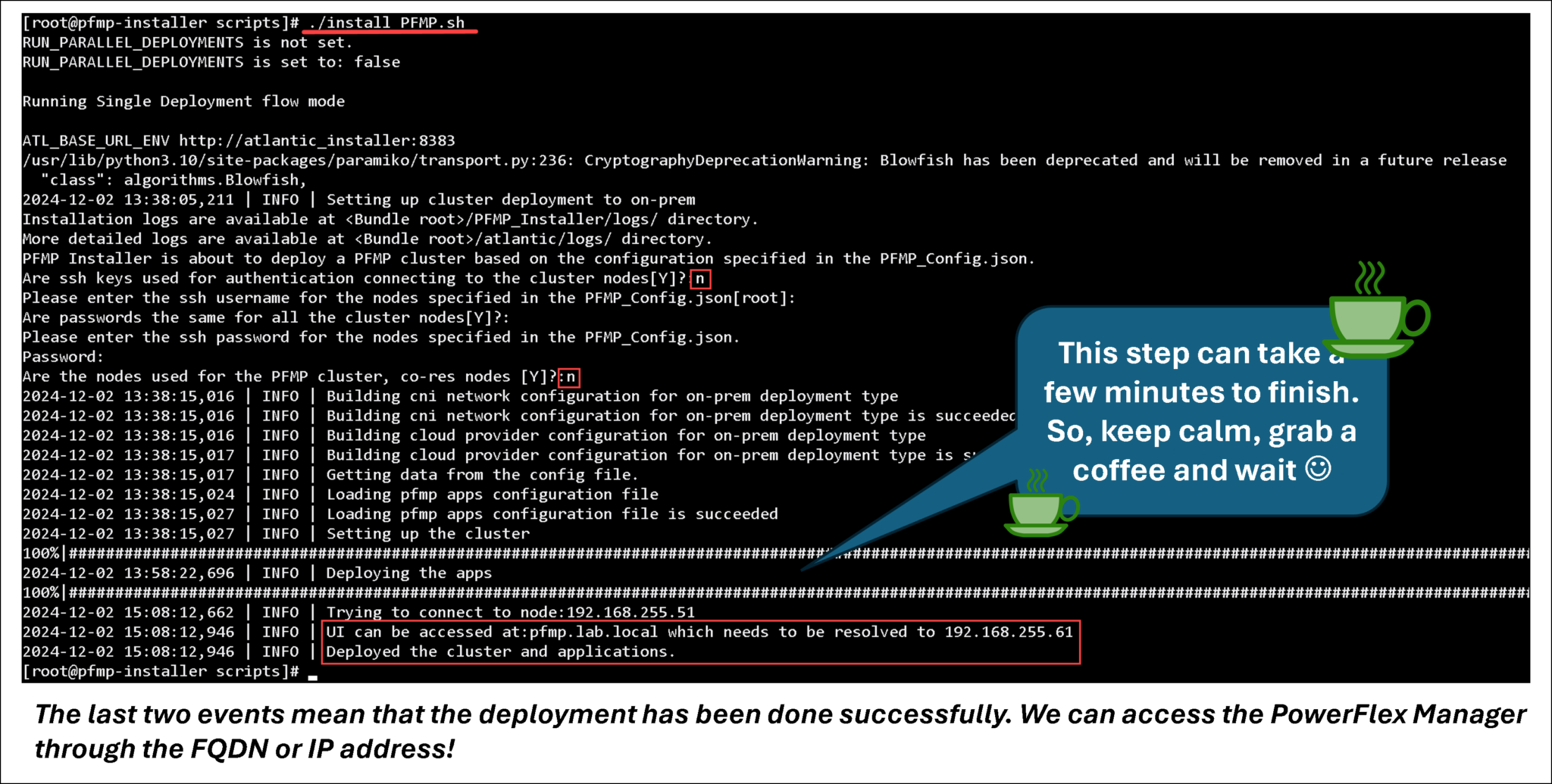

The script “install_PFMP.sh” will deploy the PowerFlex Management Cluster:

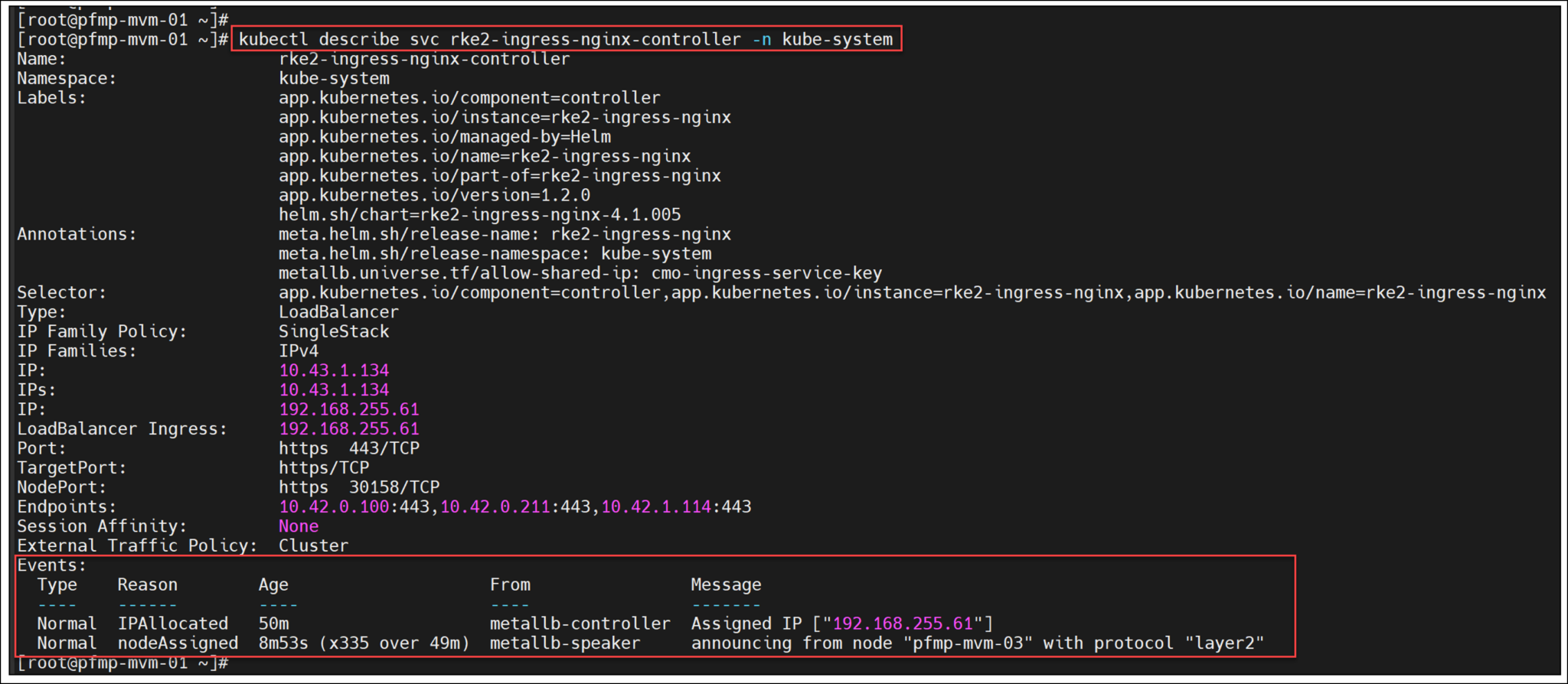

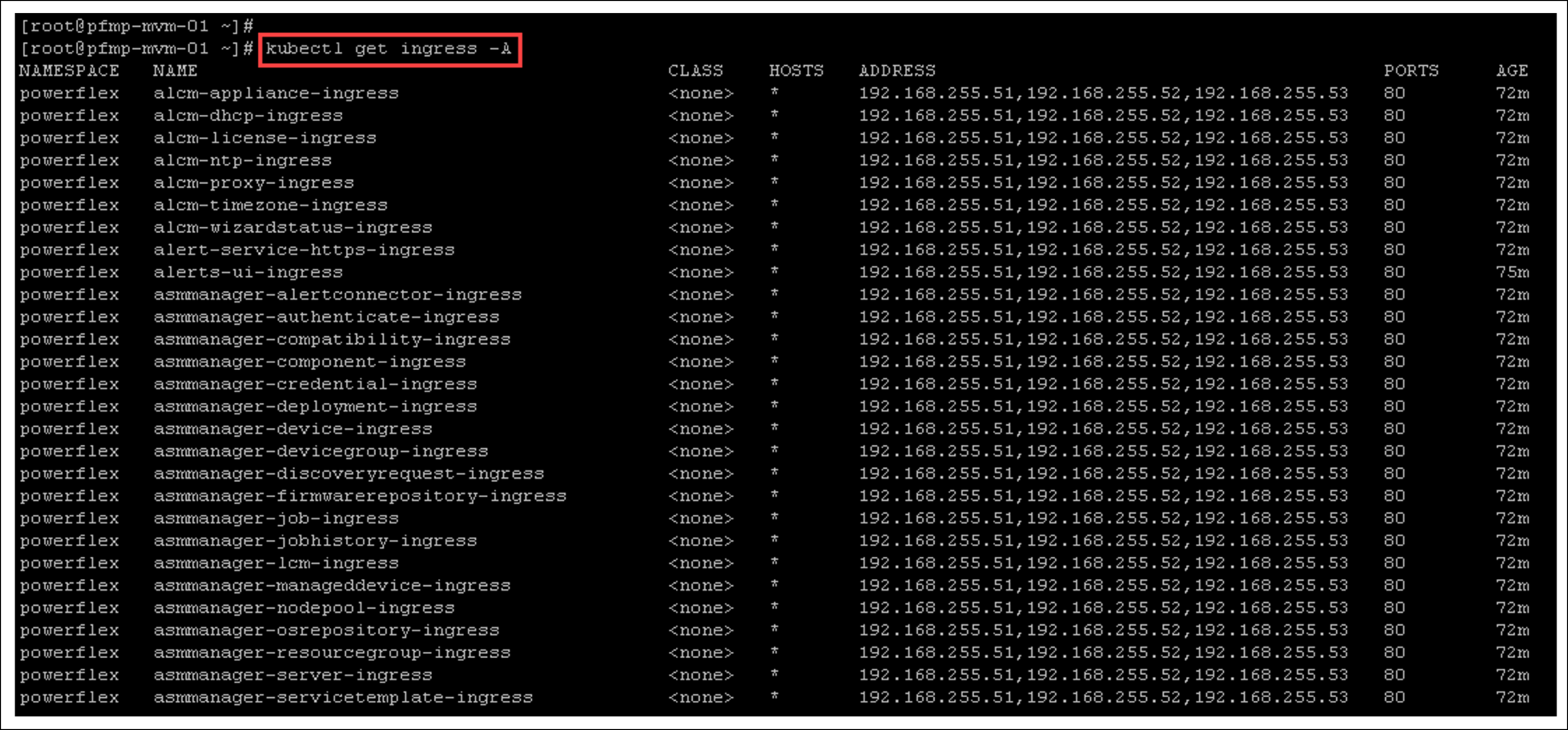
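The deployment runs for a while, so it can help to keep a copy of the console output. A minimal sketch that runs a script through `tee` and preserves the script's own exit code via bash's `PIPESTATUS`; `run_with_log` and its default log directory `/var/log/pfmp` are assumptions, not part of the PowerFlex tooling.

```bash
#!/usr/bin/env bash
# Minimal sketch: run a script, show its output live and save it to a log.
# run_with_log and the /var/log/pfmp default are hypothetical choices.
run_with_log() {
  local script="$1" logdir="${2:-/var/log/pfmp}" log
  mkdir -p "$logdir" || return 1
  log="$logdir/$(basename "$script").$(date +%Y%m%d-%H%M%S).log"
  echo "Logging to $log" >&2
  # tee prints and saves the output; PIPESTATUS[0] is the script's exit
  # code rather than tee's.
  "$script" 2>&1 | tee "$log"
  return "${PIPESTATUS[0]}"
}
```

For example, from /var/lib/pfmp_installer/PFMP_Installer/scripts: `run_with_log ./install_PFMP.sh`.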

The rke2-ingress-nginx-controller service shows the LoadBalancer ingress IP and the VM that holds this IP (in this example, the node pfmp-mvm-03):

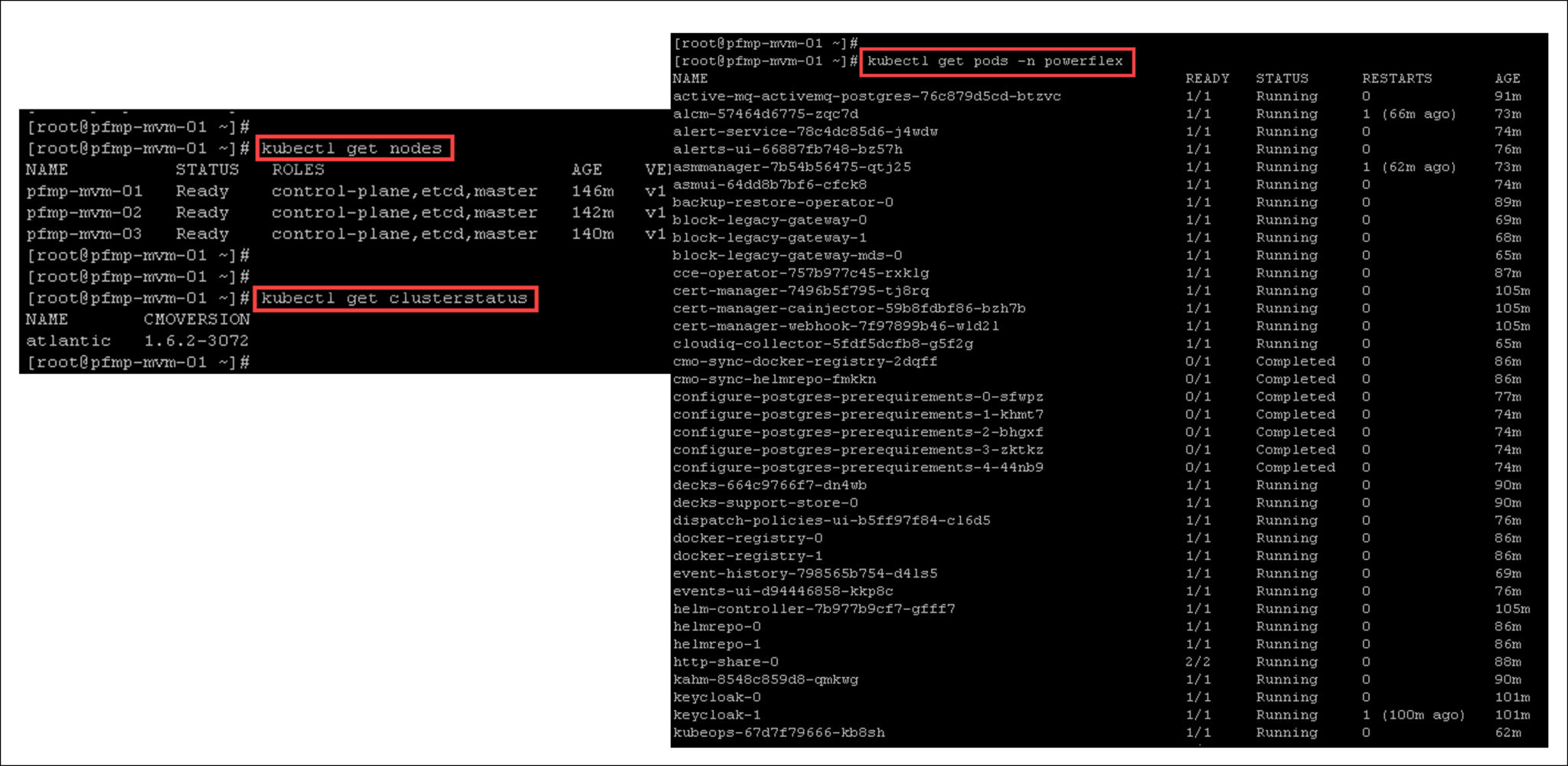

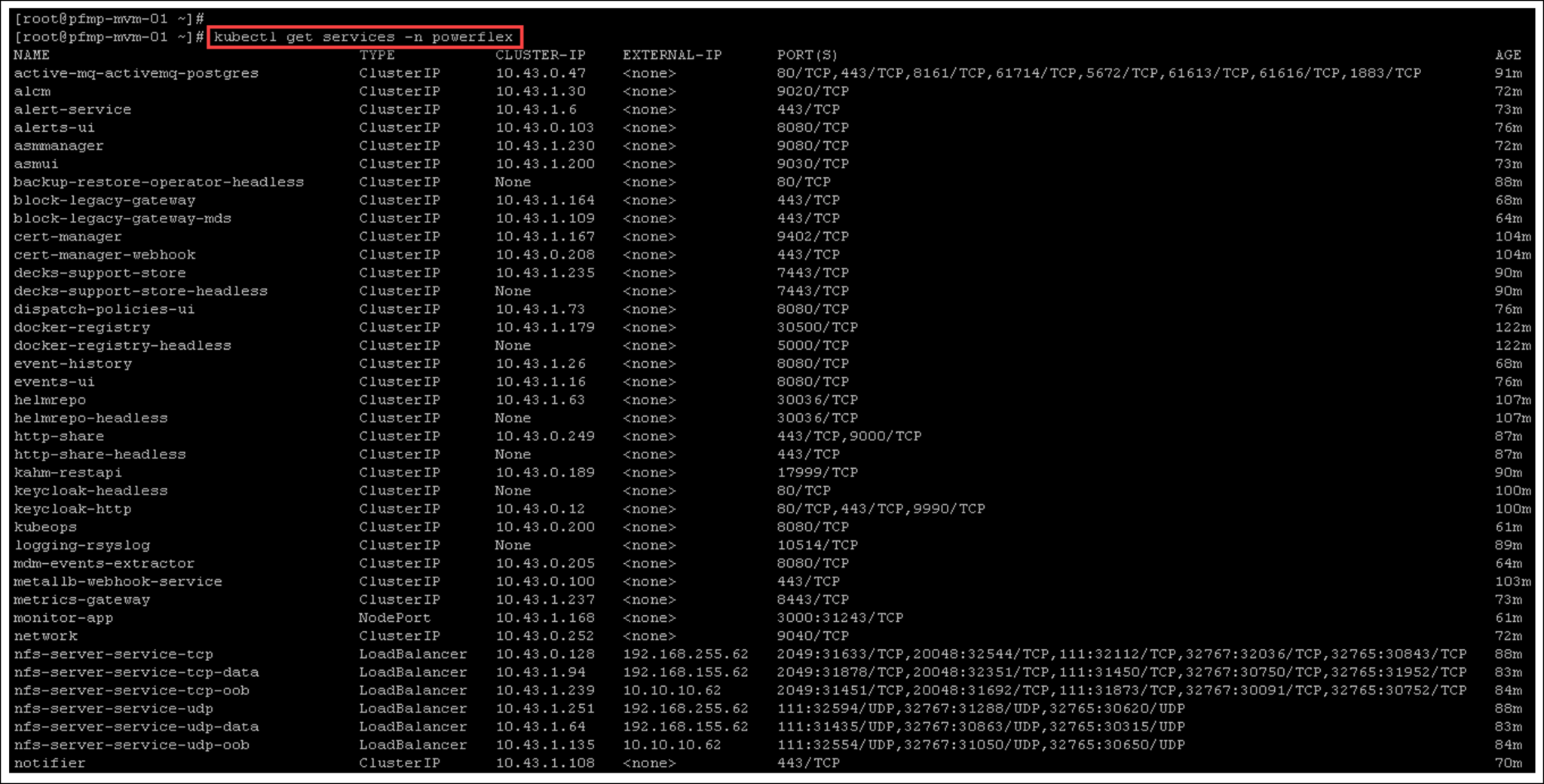
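The same IP can be read straight from the service object with kubectl's JSONPath output. A minimal sketch; `ingress_lb_ip` is a hypothetical helper, and the `kube-system` namespace is an assumption based on where RKE2 normally deploys its ingress controller.

```bash
#!/usr/bin/env bash
# Minimal sketch: print the LoadBalancer IP of the ingress controller.
# ingress_lb_ip is hypothetical; kube-system is an assumed namespace.
ingress_lb_ip() {
  local ns="${1:-kube-system}"
  kubectl get svc rke2-ingress-nginx-controller -n "$ns" \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
}
```

`kubectl describe svc rke2-ingress-nginx-controller -n kube-system` shows the full service details, including recent events.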

Below are more useful “kubectl” commands for inspecting the cluster:

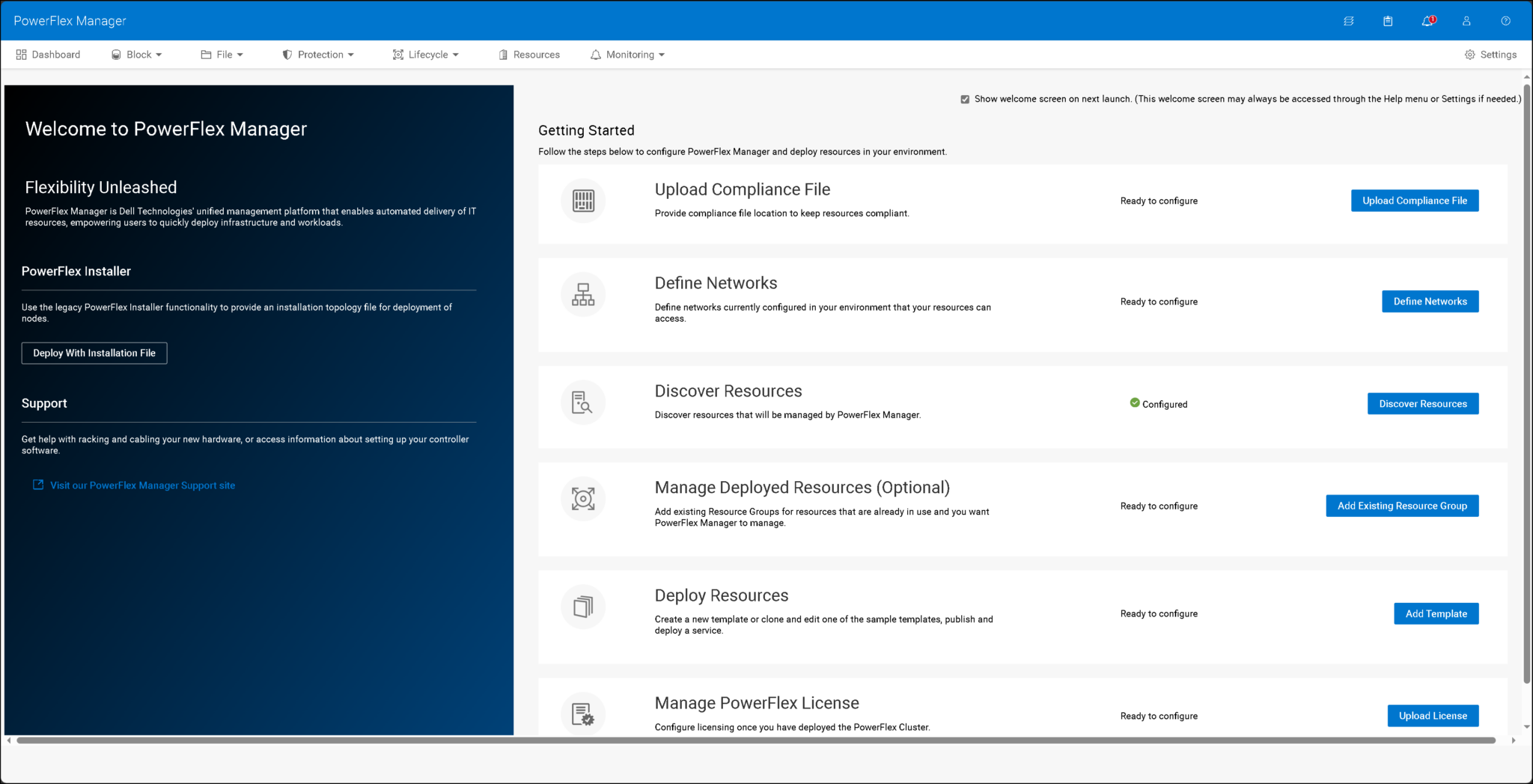

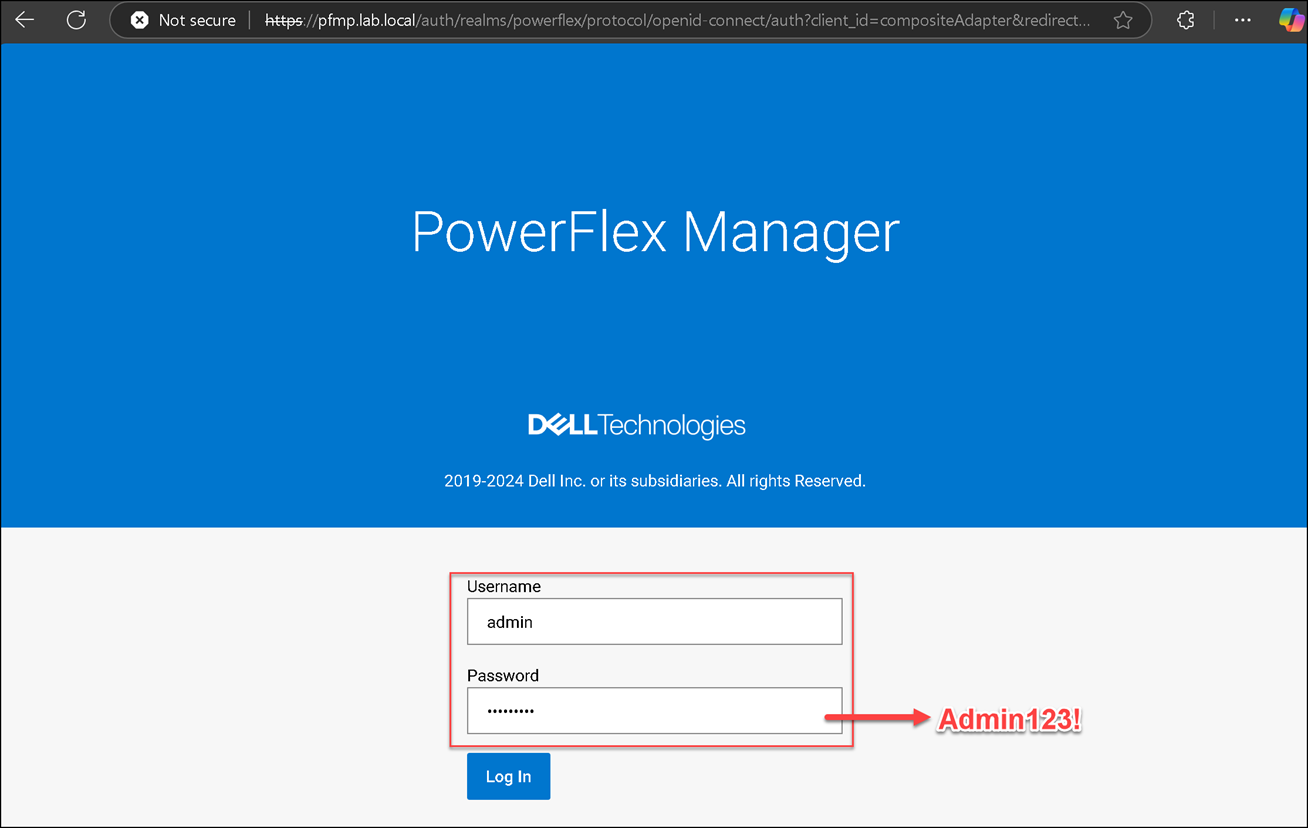

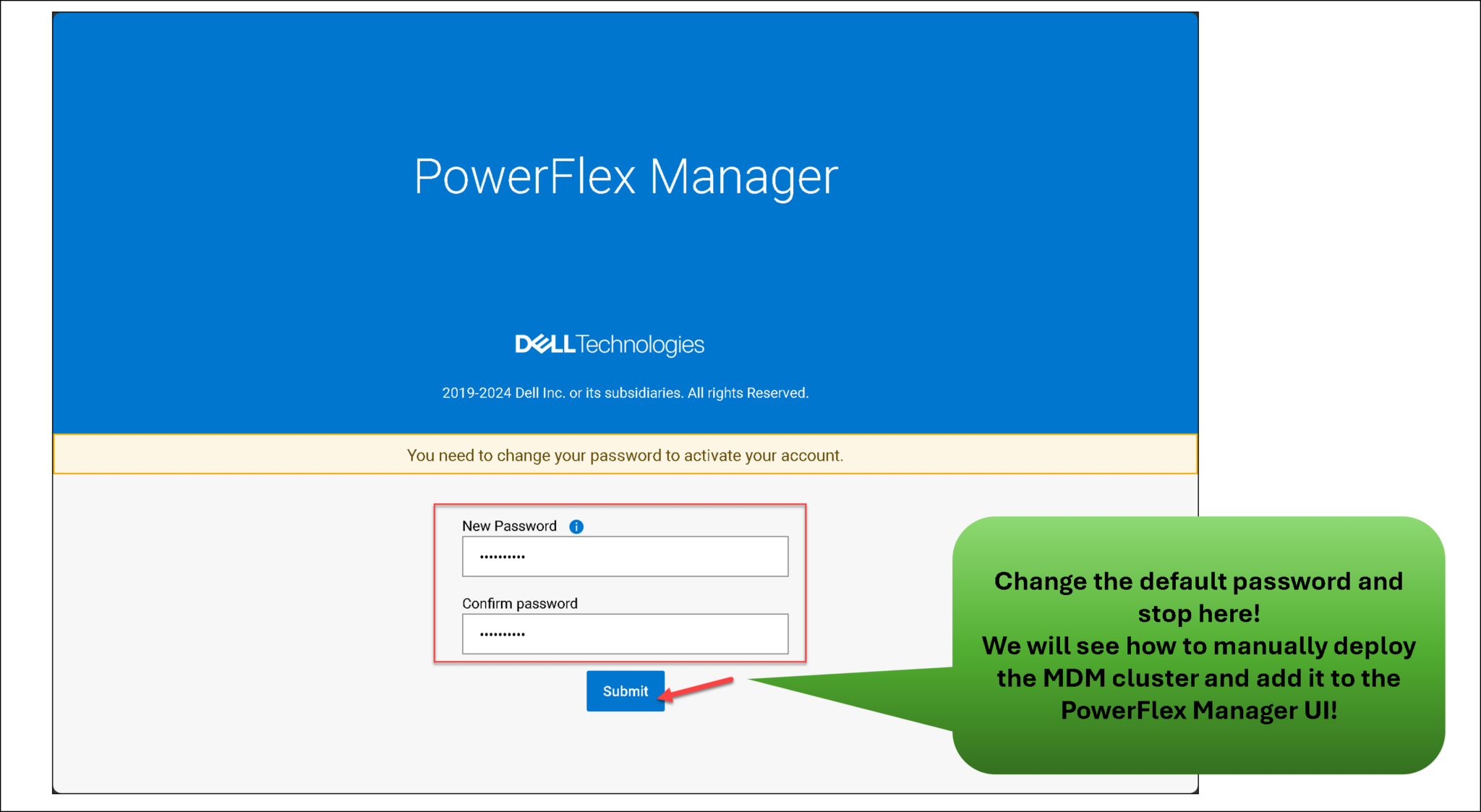
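A compact sketch that prints the usual overview in one go: nodes, then all pods, then all services. `pfmp_cluster_overview` is a hypothetical helper; the kubectl subcommands themselves are standard.

```bash
#!/usr/bin/env bash
# Minimal sketch: one-shot overview of the PFMP Kubernetes cluster.
# pfmp_cluster_overview is a hypothetical helper name.
pfmp_cluster_overview() {
  echo "### nodes"
  kubectl get nodes -o wide || return 1      # node IPs, versions, status
  echo "### pods (all namespaces)"
  kubectl get pods --all-namespaces || return 1
  echo "### services (all namespaces)"
  kubectl get svc --all-namespaces           # includes LoadBalancer IPs
}
```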

Opening the PowerFlex Manager UI

In our lab, we can access the PowerFlex Manager UI using:

- FQDN: pfmp.lab.local

- IP address: 192.168.255.61

Use this “virtual address” to access the PowerFlex Manager UI:
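To check reachability from an admin workstation or from the installer VM, here is a minimal sketch: it resolves the FQDN, then requests the UI over HTTPS. `check_pfmp_ui` is a hypothetical helper; `curl -k` skips certificate verification, which is only reasonable in a lab where the UI presents a certificate your machine does not trust.

```bash
#!/usr/bin/env bash
# Minimal sketch: confirm the PowerFlex Manager UI resolves and answers.
# check_pfmp_ui is a hypothetical helper; -k disables TLS verification.
check_pfmp_ui() {
  local host="${1:-pfmp.lab.local}" code
  # Check name resolution first so DNS problems are reported separately.
  getent hosts "$host" > /dev/null || { echo "cannot resolve $host" >&2; return 1; }
  code="$(curl -k -s -o /dev/null -w '%{http_code}' "https://$host/")"
  echo "HTTP status from https://$host/: $code"
  # Treat 2xx and 3xx (for example a redirect to the login page) as success.
  [[ "$code" =~ ^[23][0-9][0-9]$ ]]
}
```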

That’s it 🙂