Installing the SDC on ESXi shows how to install the PowerFlex SDC manually on an ESXi host.

About our Environment

PowerFlex Cluster version 4.5.2.

VMware ESXi 8.0.3 build-24022510.

Both PowerFlex and ESXi run in a nested (virtual) environment.

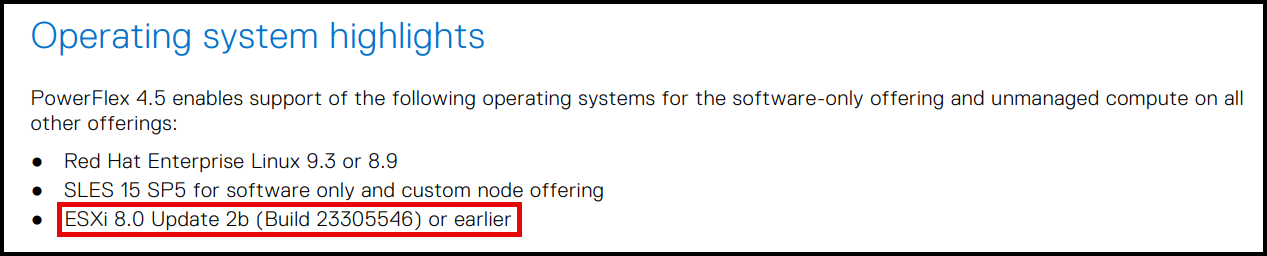

PowerFlex Release Notes Compatibility Check

First and foremost, we always recommend consulting the PowerFlex release notes to confirm which ESXi versions are compatible with the PowerFlex version in use:

https://dl.dell.com/content/manual68830250-dell-powerflex-4-5-x-release-notes.pdf

In this case, PowerFlex 4.5.2 supports ESXi 8.0 Update 2b (Build 23305546) or earlier:

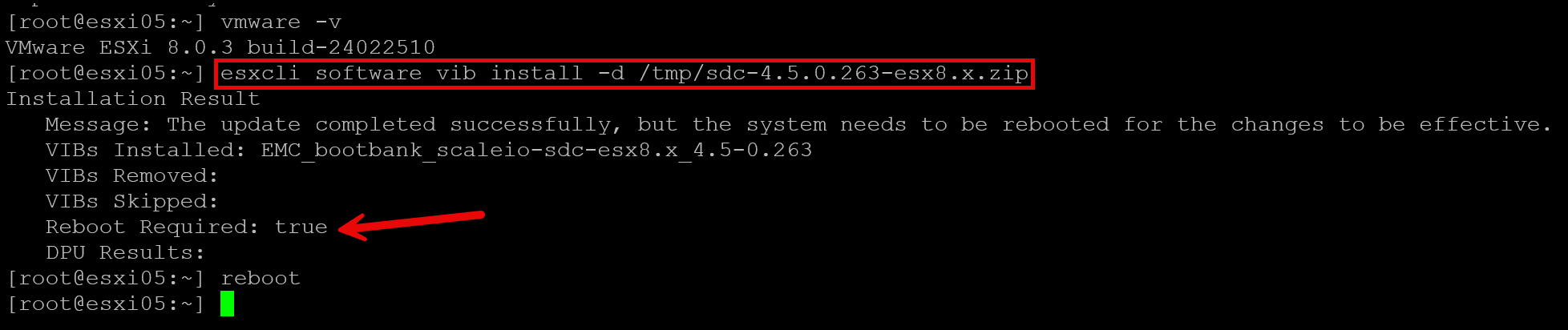
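As a rough illustration of that check, the shell sketch below extracts the build number from a version string in the form printed by `vmware -v` and compares it with the highest build listed in the release notes. The function names are hypothetical, and comparing build numbers is only a shortcut within the same ESXi major release; the release notes remain the authority.

```shell
# Hypothetical helpers; they are not part of ESXi or PowerFlex.

# Print the build number found in a string such as
# "VMware ESXi 8.0.3 build-24022510" (the format printed by `vmware -v`).
esxi_build() {
    printf '%s\n' "$1" | sed -n 's/.*build-\([0-9][0-9]*\).*/\1/p'
}

# Succeed when the host build ($1) is not newer than the supported build ($2).
build_is_supported() {
    [ "$1" -le "$2" ]
}

# Example with the values from this article:
host_build=$(esxi_build "VMware ESXi 8.0.3 build-24022510")
if build_is_supported "$host_build" 23305546; then
    echo "build $host_build is within the documented range"
else
    echo "build $host_build is newer than the documented build 23305546"
fi
```

With the values from this article, the sketch reports that the lab host's build is newer than the one listed, which is worth knowing before repeating the steps outside a lab.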

Installing the SDC “vib” on ESXi Host

After downloading the SDC package for vSphere ESXi 8.0 from support.dell.com, access the desired ESXi host by SSH and check if the SDC vib is already installed:

esxcli software vib list | grep -i emc

Copying the SDC vib from the local desktop to the ESXi host and installing it. After installing the vib, reboot the system:

# We are copying the ".zip" from a Windows client using PowerShell:

scp C:\Temp\sdc-4.5.0.263-esx8.x.zip root@192.168.255.15:/tmp

esxcli software vib install -d /tmp/sdc-4.5.0.263-esx8.x.zip

reboot

Note: If the ESXi host is a production host, put it in maintenance mode before rebooting it:

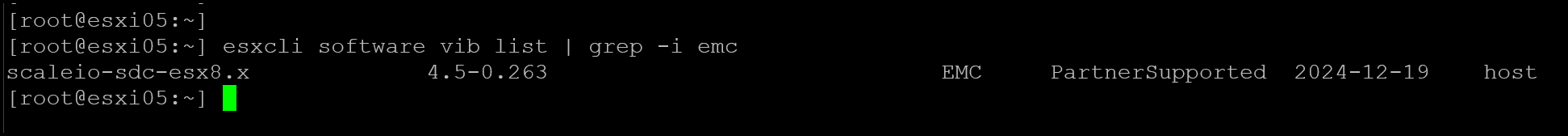
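The same step can be done from the ESXi shell. The sketch below is one possible way to do it, assuming the running VMs were already migrated or powered off; the function name is hypothetical, while `esxcli system maintenanceMode set` and `get` are the standard esxcli commands.

```shell
# Sketch: enter maintenance mode, confirm it, then reboot.
# Assumes running VMs were already migrated or powered off;
# the function name is hypothetical.
reboot_in_maintenance_mode() {
    esxcli system maintenanceMode set --enable true || return 1
    # `get` prints "Enabled" or "Disabled"
    if [ "$(esxcli system maintenanceMode get)" = "Enabled" ]; then
        reboot
    else
        echo "host is not in maintenance mode; not rebooting" >&2
        return 1
    fi
}
```

Once the host is back, `esxcli system maintenanceMode set --enable false` takes it out of maintenance mode.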

Checking vib details after installation:

esxcli software vib list | grep -i emc

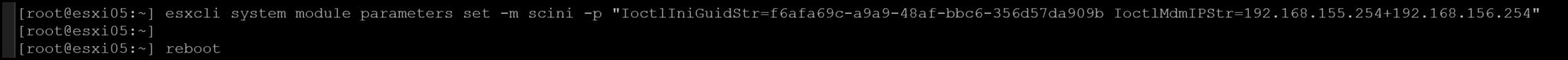

Connecting SDC to the MDM Cluster

After installing the SDC vib, we must configure it to connect to the MDM cluster.

Generating a GUID for the ESXi host. We can use PowerShell to do it:

[guid]::NewGuid()

Example:

PS C:\Users\tux000> [guid]::NewGuid()

Guid

----

f6afa69c-a9a9-48af-bbc6-356d57da909b

Note: https://guidgenerator.com/ (another option to generate the GUID).

The GUID must be unique for each device!
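Because the GUID is typed or pasted into a later command, a quick format check can catch a truncated value. The sketch below is only an illustration with hypothetical function names: it tests for the 8-4-4-4-12 hexadecimal layout and prints the GUID in upper case, which is how `scli` displays it later in this article.

```shell
# Hypothetical helper: succeed only for the 8-4-4-4-12 hexadecimal layout
# produced by [guid]::NewGuid().
is_valid_guid() {
    printf '%s\n' "$1" |
        grep -Eq '^[0-9A-Fa-f]{8}-[0-9A-Fa-f]{4}-[0-9A-Fa-f]{4}-[0-9A-Fa-f]{4}-[0-9A-Fa-f]{12}$'
}

# Hypothetical helper: print the GUID in upper case, the way scli displays it.
guid_upper() {
    printf '%s\n' "$1" | tr 'abcdef' 'ABCDEF'
}

guid="f6afa69c-a9a9-48af-bbc6-356d57da909b"
if is_valid_guid "$guid"; then
    guid_upper "$guid"
else
    echo "not a valid GUID: $guid" >&2
fi
```

The check only covers the format; uniqueness across hosts still has to be ensured by generating a fresh GUID per host.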

Configuring the scini module (the kernel module used by the SDC) to point to the MDM servers and to pass the ESXi host's GUID. After doing so, reboot the system again:

esxcli system module parameters set -m scini -p "IoctlIniGuidStr=f6afa69c-a9a9-48af-bbc6-356d57da909b IoctlMdmIPStr=192.168.155.254+192.168.156.254"

Where:

IoctlIniGuidStr=f6afa69c-a9a9-48af-bbc6-356d57da909b –> The GUID generated with PowerShell;

IoctlMdmIPStr=192.168.155.254+192.168.156.254 –> The MDM cluster has two virtual IPs, so we specify both, separated by “+”.

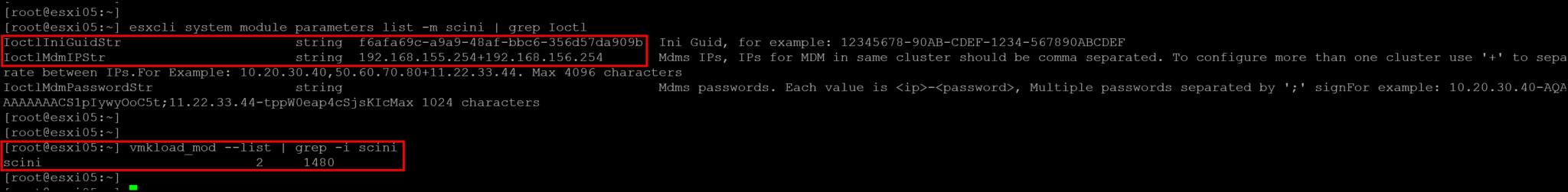
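To avoid typing the parameter string by hand, it can be assembled from its parts. The sketch below uses a hypothetical helper and simply mirrors the format used in this article, joining the addresses with "+"; confirm the separator for your own topology against Dell's SDC documentation.

```shell
# Hypothetical helper: print the value for `-p`, in the format used in this article.
# $1 = GUID, remaining arguments = MDM (virtual) IP addresses.
scini_params() {
    guid=$1
    shift
    ips=""
    for ip in "$@"; do
        # Join the addresses with "+", as this article does.
        ips="${ips:+$ips+}$ip"
    done
    printf 'IoctlIniGuidStr=%s IoctlMdmIPStr=%s\n' "$guid" "$ips"
}

params=$(scini_params f6afa69c-a9a9-48af-bbc6-356d57da909b 192.168.155.254 192.168.156.254)
# Print the resulting command instead of running it, so it can be reviewed first.
echo "esxcli system module parameters set -m scini -p \"$params\""
```

Printing the command rather than executing it keeps the sketch safe to run anywhere; on the ESXi host the printed line can be pasted as is.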

Checking the SDC module configuration and confirming if the scini module was loaded:

esxcli system module parameters list -m scini | grep Ioctl

vmkload_mod --list | grep -i scini

As the picture below shows, the scini module has been loaded successfully!

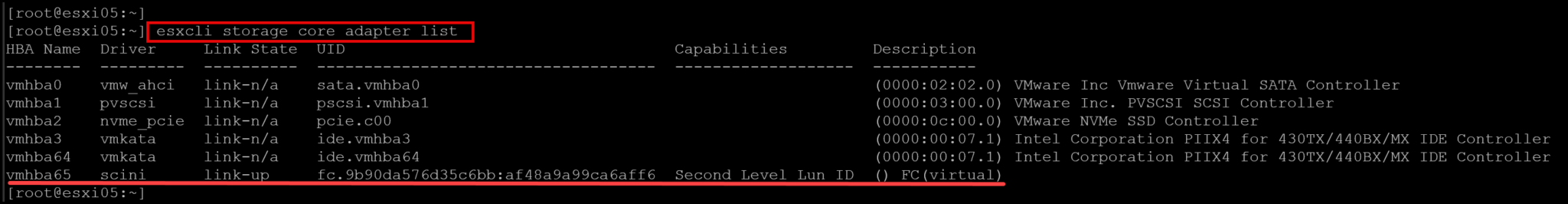

If the scini module was loaded successfully, we can see a software adapter using the scini driver:

esxcli storage core adapter list

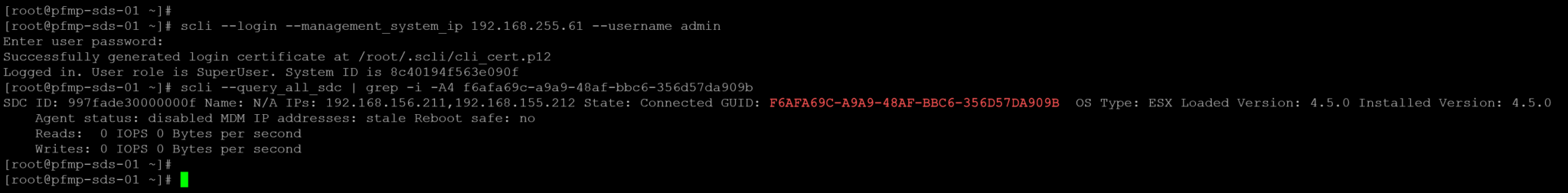

On the primary MDM, check the new SDC details (in this case, we filter the command output by GUID):

scli --login --management_system_ip 192.168.255.61 --username admin

scli --query_all_sdc | grep -i -A4 f6afa69c-a9a9-48af-bbc6-356d57da909b

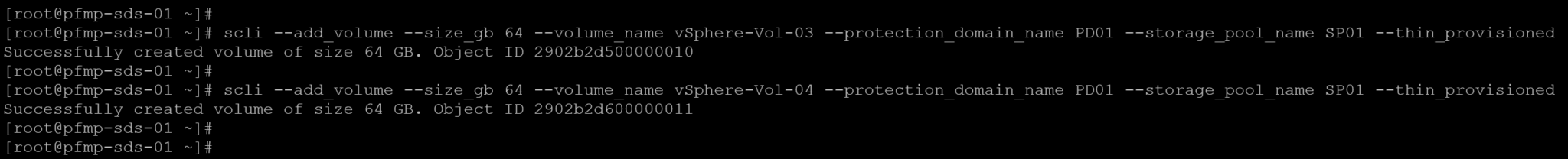
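For scripting, the connection state can be pulled out of that output. The sketch below uses a hypothetical helper and assumes that State and GUID appear on the same line, as in the `scli --query_all_sdc` output shown in this article; other scli versions may lay the output out differently.

```shell
# Hypothetical helper: read `scli --query_all_sdc` output on stdin and print
# the State of the SDC whose GUID is $1. Assumes State and GUID appear on the
# same line, as in the output shown in this article.
sdc_state() {
    grep -i "GUID: $1" | sed -n 's/.*State: \([^ ]*\).*/\1/p'
}

# On the MDM this would be used as:
#   scli --query_all_sdc | sdc_state f6afa69c-a9a9-48af-bbc6-356d57da909b
# Here the helper is fed a line in the article's format instead:
printf '%s\n' 'SDC ID: 997fade30000000f Name: N/A IPs: 192.168.156.211,192.168.155.212 State: Connected GUID: F6AFA69C-A9A9-48AF-BBC6-356D57DA909B OS Type: ESX' |
    sdc_state f6afa69c-a9a9-48af-bbc6-356d57da909b
```

The match on the GUID is case-insensitive, so the lower-case GUID from PowerShell can be passed unchanged.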

Creating and Mapping Volumes to the new SDC

Creating two new volumes, “vSphere-Vol-03” and “vSphere-Vol-04”, both thin-provisioned and 64 GB each:

scli --add_volume --size_gb 64 --volume_name vSphere-Vol-03 --protection_domain_name PD01 --storage_pool_name SP01 --thin_provisioned

scli --add_volume --size_gb 64 --volume_name vSphere-Vol-04 --protection_domain_name PD01 --storage_pool_name SP01 --thin_provisioned

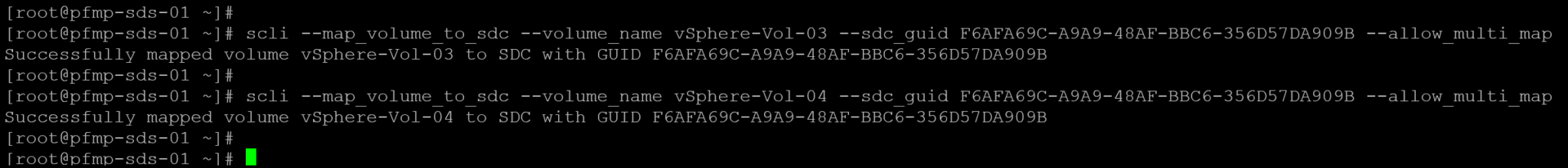

Mapping both volumes to the new SDC. In this case, for instance, we are mapping each volume to the SDC using the SDC GUID:

scli --map_volume_to_sdc --volume_name vSphere-Vol-03 --sdc_guid F6AFA69C-A9A9-48AF-BBC6-356D57DA909B --allow_multi_map

scli --map_volume_to_sdc --volume_name vSphere-Vol-04 --sdc_guid F6AFA69C-A9A9-48AF-BBC6-356D57DA909B --allow_multi_map

Checking what SDCs are mapped to each volume:

scli --query_volume --volume_name vSphere-Vol-03

>> Volume ID: 2902b2d500000010 Name: vSphere-Vol-03

Provisioning: Thin

Size: 64.0 GB (65536 MB)

Total base user data: 0 Bytes

Storage Pool 2714b2fe00000000 Name: SP01

Protection Domain b187c36500000000 Name: PD01

Creation time: 2024-12-19 08:59:32

Class: default

Reads: 0 IOPS 0 Bytes per second

Writes: 0 IOPS 0 Bytes per second

Data layout: Medium granularity

Mapping:

Host ID: 997fade30000000f Name: N/A Host Type: SDC IP: 192.168.156.211 Access Mode: READ_WRITE , Device is mapped to SDC

No SCSI reservations

No Nvme reservations

Migration status: Not in migration

Doesn't use RAM Read Cache

Replication info:

Replication state: Not being Replicated

Volume NVMe Namespace ID: 17

==========

scli --query_volume --volume_name vSphere-Vol-04

>> Volume ID: 2902b2d600000011 Name: vSphere-Vol-04

Provisioning: Thin

Size: 64.0 GB (65536 MB)

Total base user data: 0 Bytes

Storage Pool 2714b2fe00000000 Name: SP01

Protection Domain b187c36500000000 Name: PD01

Creation time: 2024-12-19 08:59:38

Class: default

Reads: 0 IOPS 0 Bytes per second

Writes: 0 IOPS 0 Bytes per second

Data layout: Medium granularity

Mapping:

Host ID: 997fade30000000f Name: N/A Host Type: SDC IP: 192.168.156.211 Access Mode: READ_WRITE , Device is mapped to SDC

No SCSI reservations

No Nvme reservations

Migration status: Not in migration

Doesn't use RAM Read Cache

Replication info:

Replication state: Not being Replicated

Volume NVMe Namespace ID: 18

As we can see in the output above, both volumes are mapped to the new SDC (ESXi) correctly. The next step is to rename the SDC:

-- BEFORE:

scli --query_all_sdc | grep -i -A4 F6AFA69C-A9A9-48AF-BBC6-356D57DA909B

SDC ID: 997fade30000000f Name: N/A IPs: 192.168.156.211,192.168.155.212 State: Connected GUID: F6AFA69C-A9A9-48AF-BBC6-356D57DA909B OS Type: ESX Loaded Version: 4.5.0 Installed Version: 4.5.0

Agent status: disabled MDM IP addresses: stale Reboot safe: no

Reads: 0 IOPS 0 Bytes per second

Writes: 0 IOPS 0 Bytes per second

-- RENAMING:

scli --rename_sdc --sdc_guid F6AFA69C-A9A9-48AF-BBC6-356D57DA909B --new_name ESXI05

Successfully renamed host SDC with GUID F6AFA69C-A9A9-48AF-BBC6-356D57DA909B to ESXI05

-- AFTER:

scli --query_all_sdc | grep -i -A4 F6AFA69C-A9A9-48AF-BBC6-356D57DA909B

SDC ID: 997fade30000000f Name: ESXI05 IPs: 192.168.156.211,192.168.155.212 State: Connected GUID: F6AFA69C-A9A9-48AF-BBC6-356D57DA909B OS Type: ESX Loaded Version: 4.5.0 Installed Version: 4.5.0

Agent status: disabled MDM IP addresses: stale Reboot safe: no

Reads: 0 IOPS 0 Bytes per second

Writes: 0 IOPS 0 Bytes per second

Listing and Using the PowerFlex Volumes on the ESXi

On the ESXi command line, we can list all devices provided by the PowerFlex:

esxcli storage core device list | grep -i -B7 "Vendor: EMC"

Example:

eui.8c40194f563e090f2902b2d600000011

Display Name: EMC Fibre Channel Disk (eui.8c40194f563e090f2902b2d600000011)

Has Settable Display Name: true

Size: 65536

Device Type: Direct-Access

Multipath Plugin: NMP

Devfs Path: /vmfs/devices/disks/eui.8c40194f563e090f2902b2d600000011

Vendor: EMC

--

eui.8c40194f563e090f2902b2d500000010

Display Name: EMC Fibre Channel Disk (eui.8c40194f563e090f2902b2d500000010)

Has Settable Display Name: true

Size: 65536

Device Type: Direct-Access

Multipath Plugin: NMP

Devfs Path: /vmfs/devices/disks/eui.8c40194f563e090f2902b2d500000010

Vendor: EMC

--

eui.8c40194f563e090f997fade30000000f

Display Name: EMC Fibre Channel Disk (eui.8c40194f563e090f997fade30000000f)

Has Settable Display Name: true

Size: 0

Device Type: Direct-Access

Multipath Plugin: NMP

Devfs Path: /vmfs/devices/disks/eui.8c40194f563e090f997fade30000000f

Vendor: EMC

Creating datastores on the ESXi command line:

1- Checking the current filesystem before the datastore creation:

esxcli storage filesystem list

Example:

Mount Point Volume Name UUID Mounted Type Size Free

---------------------------------------------------- ------------------------------------------ -------------------------------------- ------- ------ ------------ ------------

/vmfs/volumes/670bfcd4-bd923ea8-aedf-000c29af4643 OSDATA-670bfcd4-bd923ea8-aedf-000c29af4643 670bfcd4-bd923ea8-aedf-000c29af4643 true VMFSOS 19058917376 15767437312

/vmfs/volumes/d8d8e65f-944e99e0-f1be-cc6ddb33da14 BOOTBANK1 d8d8e65f-944e99e0-f1be-cc6ddb33da14 true vfat 1073577984 790855680

/vmfs/volumes/7fcc6c4b-934b5543-d4ae-816dcfa848e9 BOOTBANK2 7fcc6c4b-934b5543-d4ae-816dcfa848e9 true vfat 1073577984 791838720

/vmfs/volumes/vsan:52a469c557ab8a3f-6d6eb81eaa2b3c1d vsanDatastore-ESA vsan:52a469c557ab8a3f-6d6eb81eaa2b3c1d true vsan 273720279040 124403733641

2- Grabbing both disk identifiers (esxcli storage core device list | grep -i -B7 "Vendor: EMC"):

eui.8c40194f563e090f2902b2d600000011

eui.8c40194f563e090f2902b2d500000010
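The device listing above also contains a third EMC device with Size 0, which is not one of the mapped volumes. A small filter can pick only the devices that have a size. The sketch below uses a hypothetical helper and assumes the field order shown in the listing (the device identifier first, then Size, then Vendor).

```shell
# Hypothetical helper: read `esxcli storage core device list` output on stdin
# and print the identifiers of EMC devices whose Size is greater than 0.
# Assumes each device block starts with its eui.* identifier and that the
# Size line comes before the Vendor line, as in the listing in this article.
list_emc_disks() {
    awk '
        /^eui\./            { dev = $1; size = 0 }
        /^ *Size:/          { size = $2 }
        /^ *Vendor: EMC *$/ { if (size > 0) print dev }
    '
}

# Intended use on the ESXi host:
#   esxcli storage core device list | list_emc_disks
```

Against the listing in this article, the filter would print the two 65536 MB devices and skip the device with Size 0.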

3- Getting the disk path for both disks:

ls /vmfs/devices/disks/eui.*

/vmfs/devices/disks/eui.8c40194f563e090f2902b2d600000011

/vmfs/devices/disks/eui.8c40194f563e090f2902b2d500000010

4- Creating the disk partition:

partedUtil showGuids --> grabbing the GUID for the vmfs filesystem

Example:

partedUtil showGuids

Partition Type GUID

vmfs AA31E02A400F11DB9590000C2911D1B8

vmkDiagnostic 9D27538040AD11DBBF97000C2911D1B8

vsan 381CFCCC728811E092EE000C2911D0B2

virsto 77719A0CA4A011E3A47E000C29745A24

VMware Reserved 9198EFFC31C011DB8F78000C2911D1B8

Basic Data EBD0A0A2B9E5443387C068B6B72699C7

Linux Swap 0657FD6DA4AB43C484E50933C84B4F4F

Linux Lvm E6D6D379F50744C2A23C238F2A3DF928

Linux Raid A19D880F05FC4D3BA006743F0F84911E

Efi System C12A7328F81F11D2BA4B00A0C93EC93B

Microsoft Reserved E3C9E3160B5C4DB8817DF92DF00215AE

Unused Entry 00000000000000000000000000000000

# Creating an empty GPT partition table on both disk devices:

partedUtil setptbl /vmfs/devices/disks/eui.8c40194f563e090f2902b2d500000010 gpt

partedUtil setptbl /vmfs/devices/disks/eui.8c40194f563e090f2902b2d600000011 gpt

# Getting the first and last usable sectors of each disk:

partedUtil getUsableSectors /vmfs/devices/disks/eui.8c40194f563e090f2902b2d500000010

partedUtil getUsableSectors /vmfs/devices/disks/eui.8c40194f563e090f2902b2d600000011

Example:

[root@esxi05:/dev/disks] partedUtil getUsableSectors /vmfs/devices/disks/eui.8c40194f563e090f2902b2d500000010

34 134217694

[root@esxi05:/dev/disks] partedUtil getUsableSectors /vmfs/devices/disks/eui.8c40194f563e090f2902b2d600000011

34 134217694

# Creating one partition with all usable sectors:

partedUtil setptbl /vmfs/devices/disks/eui.8c40194f563e090f2902b2d500000010 gpt "1 34 134217694 AA31E02A400F11DB9590000C2911D1B8 0"

partedUtil setptbl /vmfs/devices/disks/eui.8c40194f563e090f2902b2d600000011 gpt "1 34 134217694 AA31E02A400F11DB9590000C2911D1B8 0"

Where, in this case:

“1 34 134217694 AA31E02A400F11DB9590000C2911D1B8 0”

1 = The first partition

34 = The first usable disk sector

134217694 = The last usable disk sector

AA31E02A400F11DB9590000C2911D1B8 = GUID for the vmfs filesystem type

0 = flags (no special flag)
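The sector numbers can be cross-checked with a little arithmetic. The sketch below assumes 512-byte sectors and the standard GPT layout, in which the first usable sector is 34 and the last usable sector is the total sector count minus 34; the output of `partedUtil getUsableSectors` remains the authoritative source.

```shell
# Worked arithmetic for a 64 GB volume, assuming 512-byte sectors and the
# standard GPT layout (first usable sector 34, last usable = total - 34).
size_mb=65536                                      # "Size: 65536" reported by esxcli
total_sectors=$(( size_mb * 1024 * 1024 / 512 ))   # 134217728
first_usable=34
last_usable=$(( total_sectors - 34 ))              # 134217694
vmfs_guid=AA31E02A400F11DB9590000C2911D1B8         # from partedUtil showGuids

# Partition spec in the order: number, start, end, type GUID, attribute flags.
spec="1 $first_usable $last_usable $vmfs_guid 0"
echo "$spec"
```

The printed string matches the partition specification passed to `partedUtil setptbl` above.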

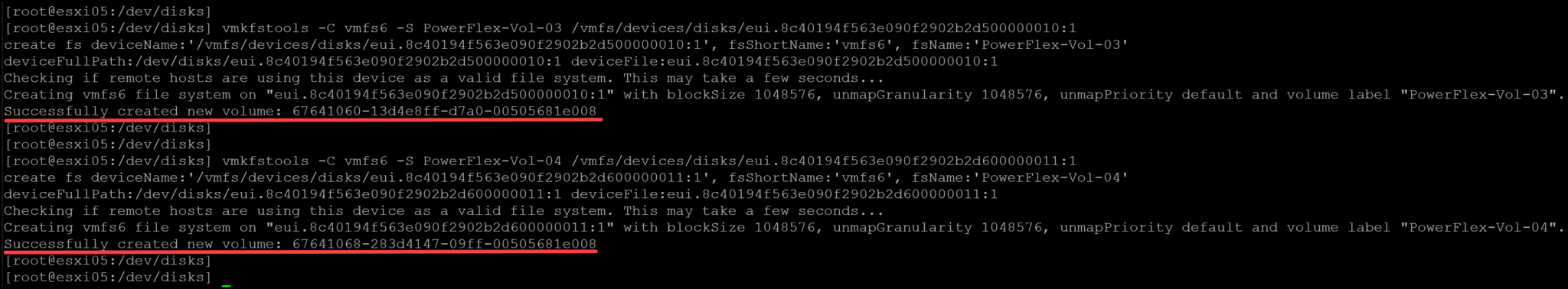

After creating the disk partition, we will create the VMFS datastores:

# Creating the VMFS datastore "PowerFlex-Vol-03" using the disk partition eui.8c40194f563e090f2902b2d500000010:1:

vmkfstools -C vmfs6 -S PowerFlex-Vol-03 /vmfs/devices/disks/eui.8c40194f563e090f2902b2d500000010:1

# Creating the VMFS datastore "PowerFlex-Vol-04" using the disk partition eui.8c40194f563e090f2902b2d600000011:1:

vmkfstools -C vmfs6 -S PowerFlex-Vol-04 /vmfs/devices/disks/eui.8c40194f563e090f2902b2d600000011:1

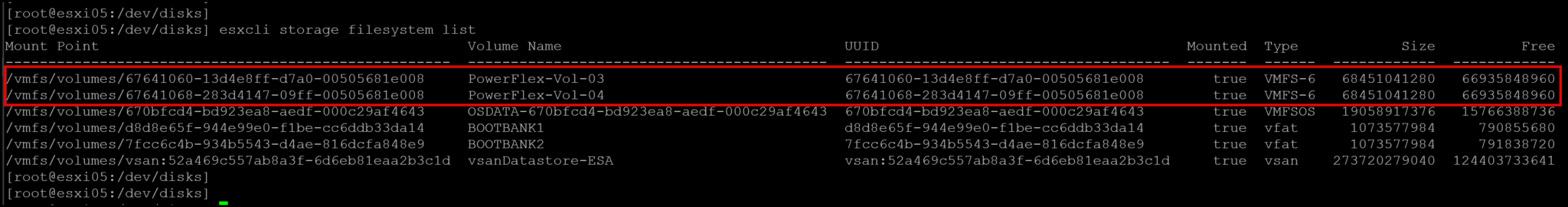

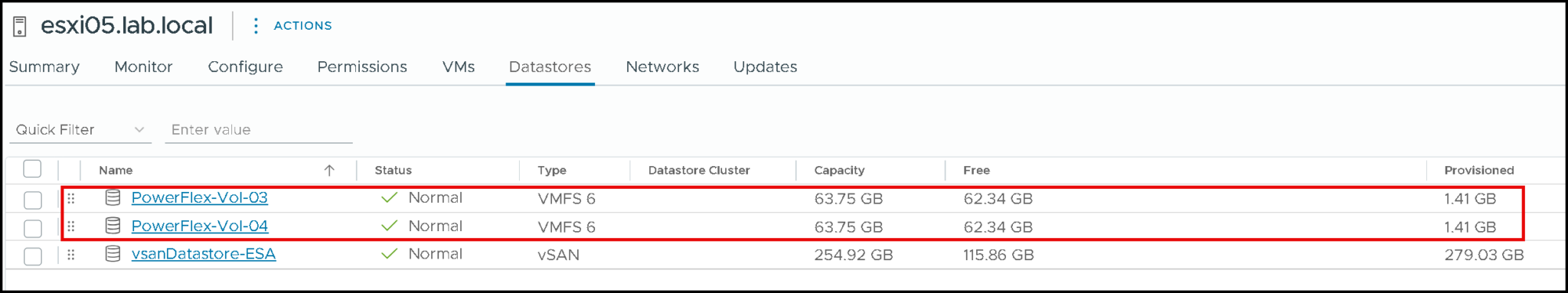

Checking current filesystem after the datastore creation:

esxcli storage filesystem list
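To confirm the result without reading the whole table, the listing can be filtered for a datastore name. The sketch below uses a hypothetical helper and assumes the column order shown earlier in this article (Mount Point, Volume Name, UUID, Mounted, and so on) and volume names without spaces.

```shell
# Hypothetical helper: read `esxcli storage filesystem list` output on stdin
# and succeed if a mounted filesystem with volume name $1 is listed.
# Assumes the column order: Mount Point, Volume Name, UUID, Mounted, ...
datastore_mounted() {
    awk -v name="$1" '$2 == name && $4 == "true" { found = 1 } END { exit !found }'
}

# Intended use on the ESXi host:
#   esxcli storage filesystem list | datastore_mounted PowerFlex-Vol-03 && echo "mounted"
```

The helper only sets an exit status, so it can be combined with `&&` or used in an `if` for each of the two new datastores.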

That’s it 🙂