This article introduces the basic concepts of VMware NSX-T.

Update: VMware has since renamed the product to simply “NSX”!

The Current Business Landscape

In the digital business era, nearly every organization is building custom applications to drive its core business and gain a competitive advantage. The speed at which development teams deliver new applications and capabilities directly impacts the success and bottom line of an organization.

This has placed increasing pressure on organizations to innovate quickly and has made developers central to this critical mission. As a result, the way developers create apps, and the way IT provides services for those apps and the wider business, have been evolving.

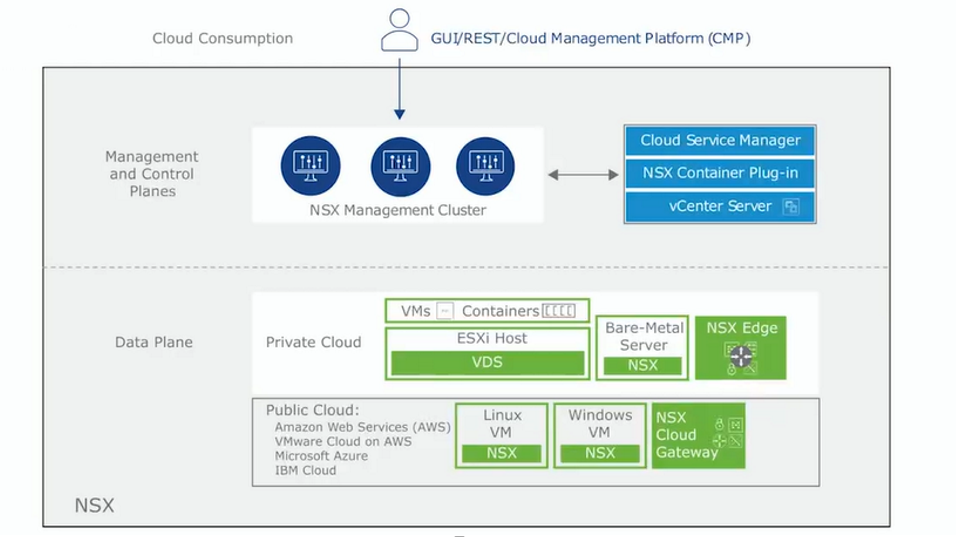

In this context, VMware NSX-T is designed to address emerging application frameworks and architectures that have heterogeneous endpoints and technology stacks. In addition to vSphere, these environments may also include other hypervisors, containers, bare metal, and public clouds. NSX-T allows IT and development teams to choose the technologies best suited for their particular applications.

About NSX-T

Basically, NSX-T is an SDN (Software-Defined Networking) solution. With NSX-T, the network functions that traditionally live in physical devices are virtualized: routers, switches, firewalls, VPNs, load balancers, etc., are all implemented in software. In this way, the same security and control mechanisms can be applied consistently to virtualized environments.

NSX-T is fully integrated with vSphere, so it works seamlessly with ESXi and vCenter Server. And, as mentioned above, other hypervisors such as KVM can also be used.

NSX-T Architecture Overview

The NSX-T architecture has a built-in separation of the data, control, and management planes. This separation delivers multiple benefits, including scalability, performance, resiliency, and heterogeneity.

Each plane of the NSX-T architecture has its own role:

- Management Plane: The NSX-T management plane is designed from the ground up with advanced clustering technology, which allows the platform to process large-scale concurrent API requests. It is also the single point of management for all NSX administrative tasks.

- Control Plane: The NSX-T control plane keeps track of the real-time virtual networking and security state of the system. NSX-T splits the control plane into a central control plane (CCP) and a local control plane (LCP) that runs on each transport node. This significantly simplifies the job of the CCP and enables the platform to extend and scale for heterogeneous endpoints.

- Data Plane: The NSX-T data plane introduces its own host switch (rather than relying on the vSwitch), which decouples it from the compute manager and normalizes network connectivity. All create, read, update, and delete operations are performed via the NSX-T Manager. This plane contains the hosts, for example ESXi or KVM hypervisors.
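To make the split between the planes more concrete, here is a toy model in Python. It is purely conceptual and is not how NSX-T is implemented; all class names are hypothetical. The management plane only stores the administrator's desired state, the central control plane works out which segments each host actually needs, and each host's local control plane programs only that subset.

```python
"""Toy model of the NSX-T plane separation (conceptual only, not NSX code)."""
from dataclasses import dataclass, field


@dataclass
class ManagementPlane:
    # Desired state entered by the administrator: segment name -> hosts that need it.
    desired_segments: dict[str, set[str]] = field(default_factory=dict)

    def create_segment(self, name: str, hosts: set[str]) -> None:
        self.desired_segments[name] = set(hosts)


@dataclass
class LocalControlPlane:
    host: str
    # Segments currently programmed on this host's switch.
    programmed: set[str] = field(default_factory=set)

    def apply(self, segments: set[str]) -> None:
        # The LCP handles the host-specific programming step locally.
        self.programmed = set(segments)


class CentralControlPlane:
    def __init__(self, lcps: list[LocalControlPlane]) -> None:
        self.lcps = {lcp.host: lcp for lcp in lcps}

    def push(self, mp: ManagementPlane) -> None:
        # Compute, per host, only the segments relevant to it and hand them to its LCP.
        for host, lcp in self.lcps.items():
            relevant = {seg for seg, hosts in mp.desired_segments.items() if host in hosts}
            lcp.apply(relevant)


if __name__ == "__main__":
    mp = ManagementPlane()
    mp.create_segment("web", {"esxi-01", "kvm-01"})
    mp.create_segment("db", {"esxi-01"})
    esxi, kvm = LocalControlPlane("esxi-01"), LocalControlPlane("kvm-01")
    CentralControlPlane([esxi, kvm]).push(mp)
    print(sorted(esxi.programmed))  # ['db', 'web']
    print(sorted(kvm.programmed))   # ['web']
```

The point of the sketch is that the central component never needs to know how each host programs its switch; it only decides *what* each host needs, which is what lets the real platform support heterogeneous endpoints.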

Below we have a picture that explains the NSX-T architecture:

NSX Management Cluster

As mentioned, the NSX Manager is the management point for all administrative tasks in the NSX-T environment. For resilience and redundancy, VMware recommends deploying three NSX Managers as a cluster.

The NSX Management Cluster hosts both the management plane and the control plane.

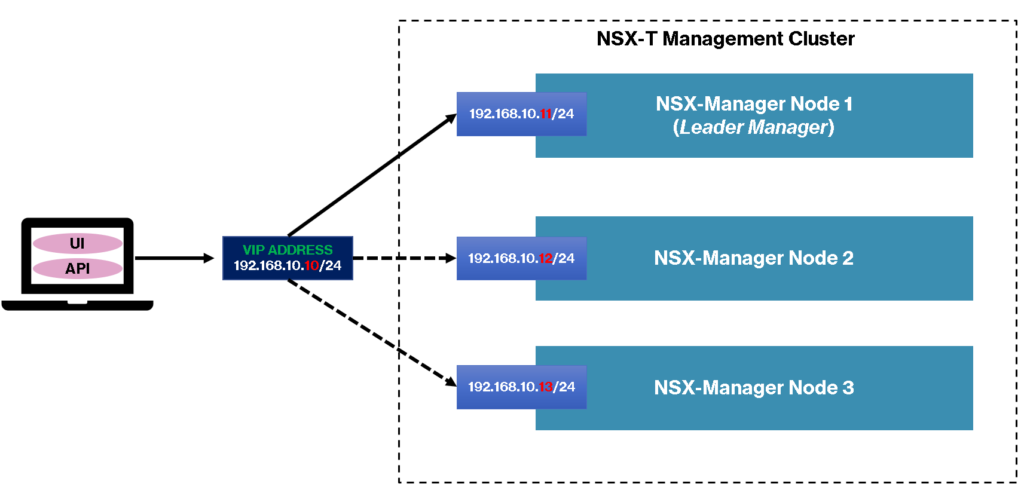

The first NSX Manager is deployed from an OVA file; the other two can then be deployed from the first NSX Manager's UI. After deployment, we can access each NSX Manager individually or configure a VIP (virtual IP) address. A VIP is a single IP address for the whole NSX Management Cluster: it stays reachable regardless of which NSX Manager is available at that moment.

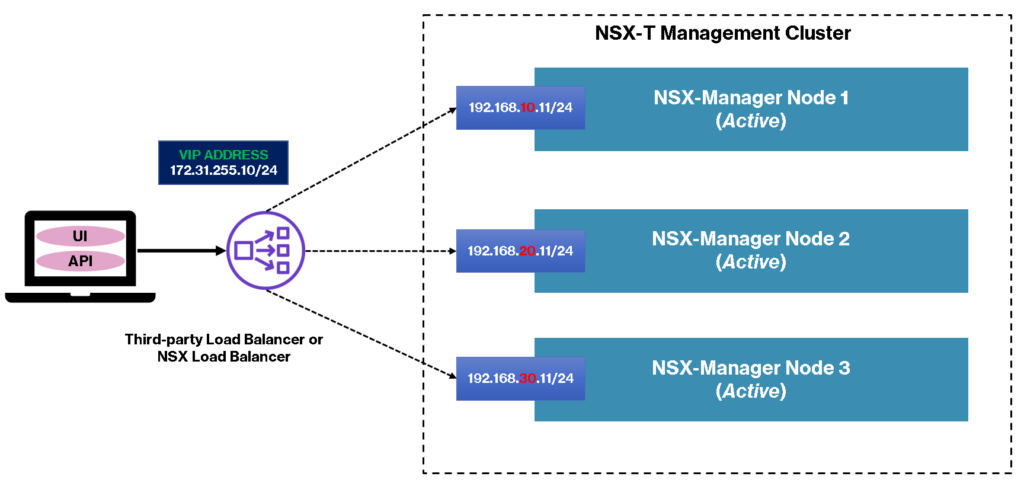

For the VIP, we can use either the built-in NSX Manager cluster VIP or an external load balancer.
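The built-in VIP can also be set through the NSX Manager REST API. The sketch below only *builds* the request with the Python standard library and does not send it. It assumes the `POST /api/v1/cluster/api-virtual-ip?action=set_virtual_ip&ip_address=<ip>` endpoint and basic authentication; check both against the NSX API reference for your version. The hostname, IP, and credentials in the usage note are hypothetical lab values.

```python
import base64
import urllib.parse
import urllib.request


def build_set_vip_request(manager: str, vip: str, user: str, password: str) -> urllib.request.Request:
    """Build (but do not send) a request that sets the NSX Manager cluster VIP.

    Assumed endpoint: POST /api/v1/cluster/api-virtual-ip?action=set_virtual_ip&ip_address=<vip>
    """
    query = urllib.parse.urlencode({"action": "set_virtual_ip", "ip_address": vip})
    url = f"https://{manager}/api/v1/cluster/api-virtual-ip?{query}"
    # HTTP basic authentication header.
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(url, method="POST", headers={"Authorization": f"Basic {token}"})
```

For example, `build_set_vip_request("nsx-mgr-01.lab.local", "192.168.10.50", "admin", "secret")` targets one manager in the cluster; the request could then be sent with `urllib.request.urlopen`, keeping in mind that lab managers often use self-signed certificates.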

Internal NSX Manager VIP:

Load Balancer for VIP:

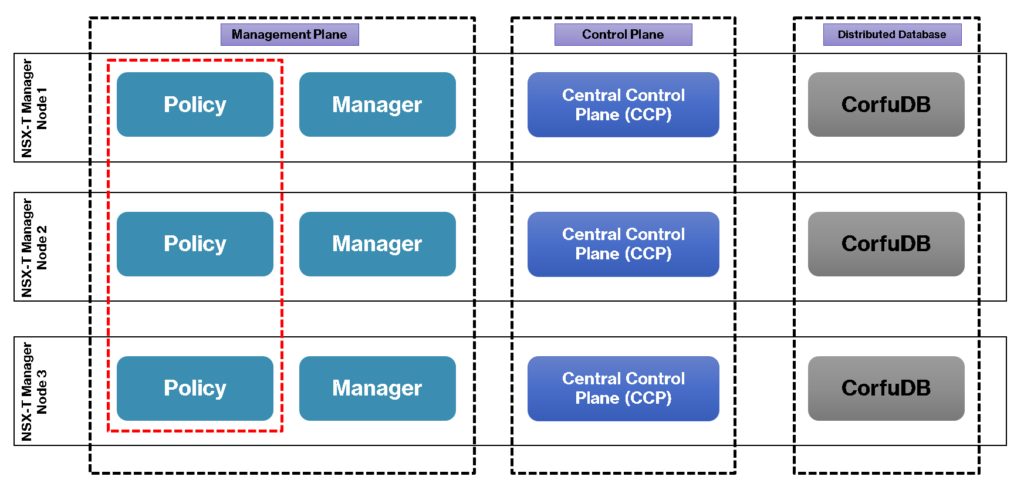

Inside the Management Cluster, we have three roles: Policy Role, Manager Role, and Controller Role.

In addition, the cluster runs a distributed database (CorfuDB), which is responsible for storing the data of the whole NSX environment and its components:

Note: Data replication between the NSX Managers happens automatically, through the clustering service that runs on each NSX Manager. So you can connect to any NSX Manager, make changes, and those changes will be replicated to the other NSX Managers automatically.
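Since a change made on any manager is replicated to the others, it is worth confirming that the cluster is healthy before making changes. A minimal sketch, assuming the JSON returned by `GET /api/v1/cluster/status` contains `mgmt_cluster_status.status` and `control_cluster_status.status` fields with the value `"STABLE"` when healthy (verify these names against the API reference for your NSX version). Fetching the JSON is left out; the function only parses it.

```python
import json


def cluster_is_stable(status_json: str) -> bool:
    """Return True if both the management and control clusters report STABLE.

    Assumes the response shape of GET /api/v1/cluster/status described above;
    missing fields are treated as "not stable".
    """
    status = json.loads(status_json)
    mgmt = status.get("mgmt_cluster_status", {}).get("status")
    ctrl = status.get("control_cluster_status", {}).get("status")
    return mgmt == "STABLE" and ctrl == "STABLE"
```

Treating missing fields as unhealthy is a deliberate choice: if the response does not match the assumed shape, the check fails closed instead of reporting a false "stable".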

NSX-T Data Plane

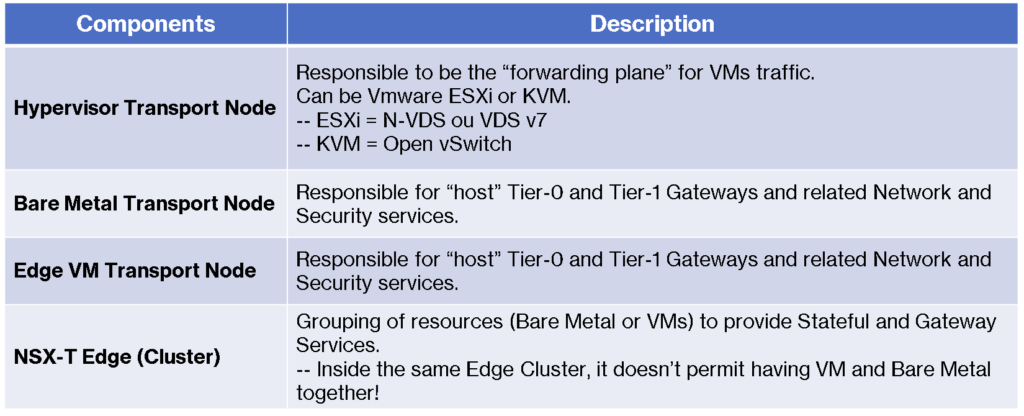

In this plane, we have the ESXi hosts, KVM hosts, and the NSX-T Edges. These devices are known as Data Plane Endpoints.

Here, we have workloads such as virtual machines, containers, and apps running on bare-metal servers. Below, we can see the NSX-T Data Plane components:

Notes:

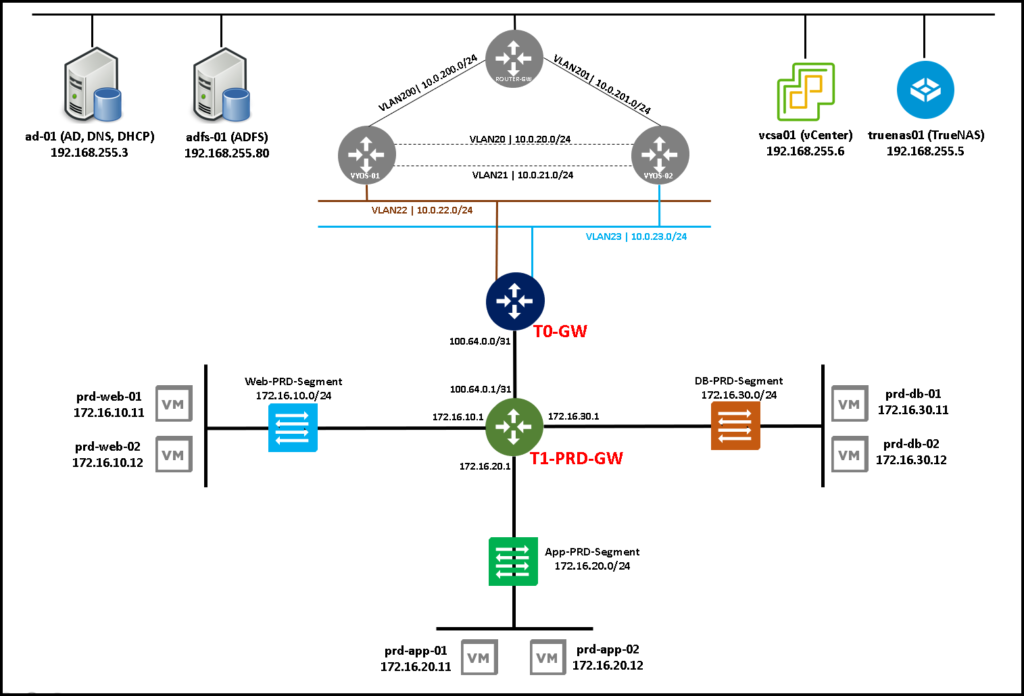

The Tier-0 Gateway is used to handle the North/South traffic. In other words, it is the traffic between the physical infrastructure and the virtual infrastructure.

The Tier-1 Gateway is used to handle the East/West traffic. In other words, it is the traffic between internal virtualized networks (internal NSX segments).

Here, we have a small logical topology with Tier-0 and Tier-1. As we can see, the Tier-1 gateway has some Segments (virtual switches) where we can connect our virtual machines (VMs).
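The North/South versus East/West distinction can be expressed as a simple rule: if both ends of a flow live in NSX segments, the flow stays inside the virtual network; otherwise it crosses to the physical or outside network. The sketch below applies that simplified rule using Python's `ipaddress` module. The segment subnets are hypothetical lab values, and real NSX forwarding involves more detail than this.

```python
import ipaddress

# Hypothetical subnets of segments attached to the Tier-1 gateway (lab values).
NSX_SEGMENTS = [ipaddress.ip_network("10.10.1.0/24"), ipaddress.ip_network("10.10.2.0/24")]


def traffic_direction(src: str, dst: str, segments=NSX_SEGMENTS) -> str:
    """Classify a flow as East/West (both ends in NSX segments) or North/South."""

    def inside(ip: str) -> bool:
        addr = ipaddress.ip_address(ip)
        return any(addr in net for net in segments)

    return "East/West" if inside(src) and inside(dst) else "North/South"


if __name__ == "__main__":
    print(traffic_direction("10.10.1.5", "10.10.2.7"))  # East/West
    print(traffic_direction("10.10.1.5", "8.8.8.8"))    # North/South
```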

Between Tier-0 and Tier-1 we have an internal link called “Router Link” – this link is created automatically when we associate the Tier-1 gateway to the Tier-0 gateway.
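With the NSX Policy API, this association is just a property of the Tier-1 gateway: setting its `tier0_path` attaches it to a Tier-0, and a segment is attached to a Tier-1 through its `connectivity_path`. The sketch below builds the request bodies and URLs that could be sent with `PATCH` to `/policy/api/v1/infra/...`. The gateway and segment IDs, the manager hostname, and the subnet are hypothetical, and a real segment usually needs more fields (for example `transport_zone_path`), depending on the environment.

```python
def tier1_body(tier0_id: str) -> dict:
    # Setting tier0_path associates the Tier-1 with the Tier-0; NSX then creates the router link.
    return {"tier0_path": f"/infra/tier-0s/{tier0_id}"}


def segment_body(tier1_id: str, gateway_cidr: str) -> dict:
    # connectivity_path attaches the segment to the Tier-1;
    # gateway_address is the segment's default gateway in CIDR form.
    return {
        "connectivity_path": f"/infra/tier-1s/{tier1_id}",
        "subnets": [{"gateway_address": gateway_cidr}],
    }


def policy_url(manager: str, kind: str, obj_id: str) -> str:
    # kind is a collection under /infra, e.g. "tier-1s" or "segments".
    return f"https://{manager}/policy/api/v1/infra/{kind}/{obj_id}"


if __name__ == "__main__":
    print(policy_url("nsx-vip.lab.local", "tier-1s", "t1-lab"), tier1_body("t0-lab"))
    print(policy_url("nsx-vip.lab.local", "segments", "seg-web"), segment_body("t1-lab", "10.10.1.1/24"))
```

Keeping the payloads as plain dictionaries makes it easy to review or version them before sending, and `PATCH` lets NSX create the object if it does not exist or update it if it does.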

Additionally, the Tier-0 gateway has one or more uplink connections to the physical network or the outside network.

This is a small example of a possible NSX logical topology. We created it in a lab environment just to explore the NSX resources:

To learn more about NSX-T, I recommend checking the document below: