A friend asked me to help solve an issue in which an entire vSAN cluster went down after its MTU values were changed. Because this subject can seem complex and confusing, I wrote this simple post to explain it.

What is the issue? Let’s explain it.

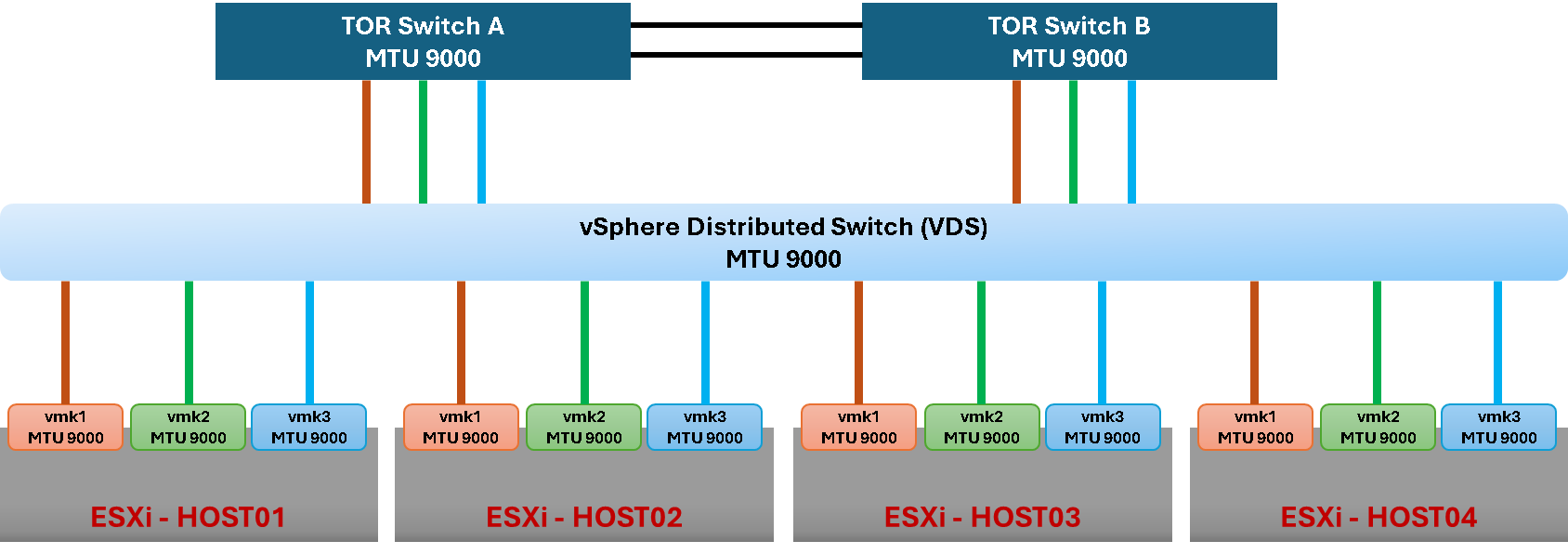

The vSAN cluster is a standard cluster with four ESXi nodes. The vCenter Server runs on this same cluster (it manages the cluster it is hosted on), and the customer has several production VMs running on it.

The top-of-rack switches are Cisco-based, and the configured MTU is 9000 bytes. This means that all switch ports connected to the nodes use an MTU of 9000 bytes.

The vSphere Distributed Switch (VDS) and all of the nodes' VMkernel ports are configured with 9000 bytes, too.

As we can see in the following picture, all network devices and interfaces have an MTU of 9000 bytes:

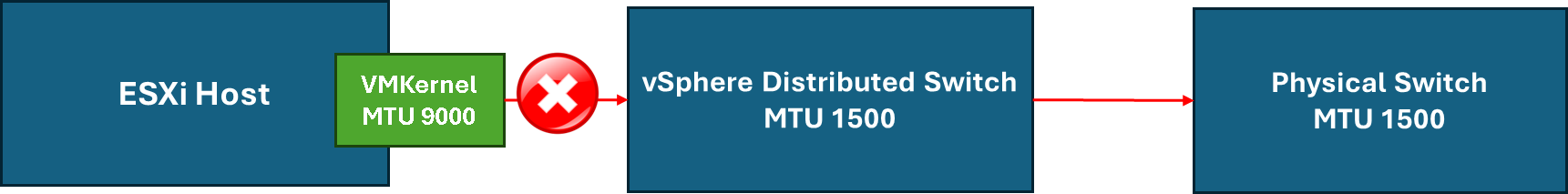

They changed the MTU value from 9000 to 1500 at the VDS and physical switch levels, but left the VMkernel interfaces untouched. After that, all VMkernel interfaces still configured with a 9000-byte MTU stopped working, causing the entire environment to fail.

So, we have a question: Why did the cluster go down?

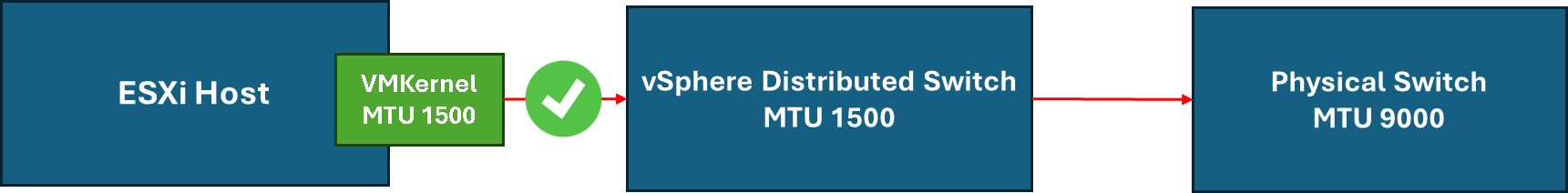

Because ESXi does not fragment packets at the source interface to fit the VDS MTU, each VMkernel interface (MTU 9000) keeps sending packets of up to 9000 bytes, while the VDS now accepts only 1500. When a VMkernel interface sends a packet larger than 1500 bytes, the packet reaches the VDS and is dropped because of the MTU mismatch, so the VMkernel interfaces could no longer communicate over the network:

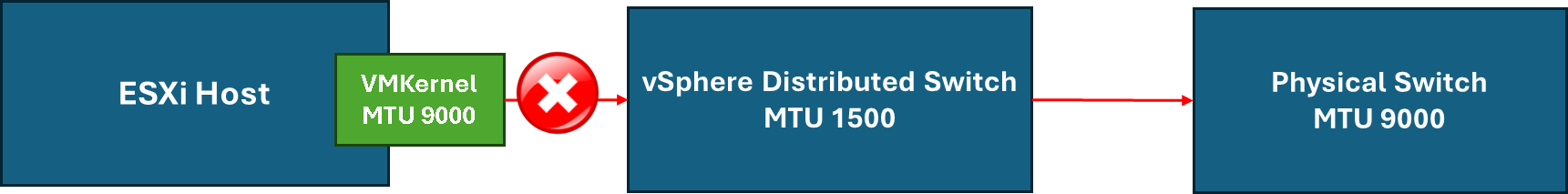

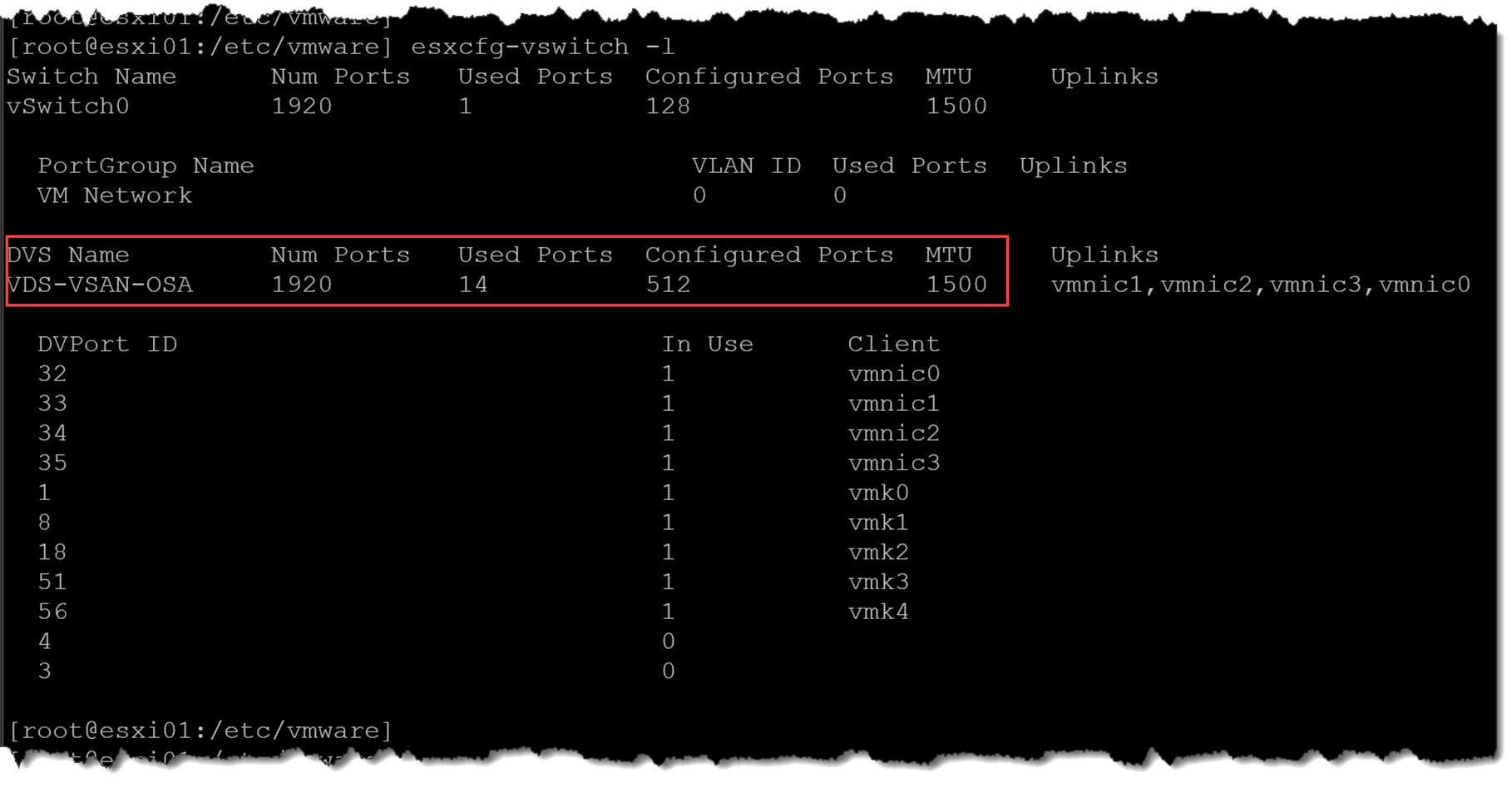
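To make the drop behavior concrete, here is a minimal Python model (an illustration, not ESXi code). It assumes each hop simply drops any packet larger than its MTU and that nothing on the path fragments it; the hop names and MTU values are hypothetical and mirror this scenario.

```python
from typing import NamedTuple, Optional, Sequence


class Hop(NamedTuple):
    """One interface or device on the path, with its configured MTU in bytes."""
    name: str
    mtu: int


def first_drop(packet_size: int, path: Sequence[Hop]) -> Optional[str]:
    """Return the name of the first hop whose MTU is smaller than the packet,
    or None if the packet fits everywhere. No hop fragments the packet,
    so an oversized packet is simply dropped."""
    for hop in path:
        if packet_size > hop.mtu:
            return hop.name
    return None


# Hypothetical values: VMkernel still at 9000, VDS and physical switch at 1500.
path_after_change = [
    Hop("vmk (VMkernel)", 9000),
    Hop("VDS", 1500),
    Hop("physical switch", 1500),
]

for size in (1400, 8000):
    hop = first_drop(size, path_after_change)
    print(size, "->", "delivered" if hop is None else f"dropped at {hop}")
```

Small packets still get through, which is why the failure can look intermittent at first, but any large packet from a 9000-MTU VMkernel interface dies at the VDS. Raising only the physical switch back to 9000 does not change that result, because the VDS is still the first hop that is too small.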

Afterward, they changed the MTU back to 9000 on the physical switches, but the cluster remained down because the VDS MTU was still 1500 (as we can see in the following picture):

vSphere Distributed Switch

When we use a vSphere Distributed Switch (VDS), all configuration is done at the vCenter Server level, and a copy of that configuration is pushed to each ESXi host. vCenter Server acts as the management and control plane for the VDS, so most VDS settings can only be changed through vCenter Server, not directly on the ESXi host.

So, what happens if the vCenter Server goes down?

Each ESXi host keeps its local copy of the configuration as it was when vCenter Server went down. No new changes can be made while vCenter Server is down!
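The relationship between vCenter Server and the hosts can be sketched as a toy Python model. This is only a conceptual illustration under our own assumptions: the `VCenter` and `Host` classes, the host names, and the dictionary holding the settings are hypothetical and are not a VMware API.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Host:
    name: str
    # Local copy of the VDS settings, as last pushed by vCenter Server.
    vds_config: Dict[str, int] = field(default_factory=dict)


@dataclass
class VCenter:
    hosts: List[Host]
    online: bool = True
    vds_config: Dict[str, int] = field(default_factory=dict)

    def set_vds_mtu(self, mtu: int) -> None:
        """Change the VDS MTU centrally and push a copy to every host."""
        if not self.online:
            raise RuntimeError("vCenter Server is down: VDS changes are not possible")
        self.vds_config["mtu"] = mtu
        for host in self.hosts:
            host.vds_config = dict(self.vds_config)


hosts = [Host(f"esxi-0{i}") for i in range(1, 5)]
vc = VCenter(hosts)
vc.set_vds_mtu(1500)   # the change that broke the cluster
vc.online = False      # vCenter runs on the now-partitioned vSAN cluster
print([h.vds_config["mtu"] for h in hosts])  # hosts keep the last pushed copy
try:
    vc.set_vds_mtu(9000)
except RuntimeError as err:
    print(err)
```

The hosts keep forwarding with the last configuration they received, but the only component allowed to change it is the one that went down with the cluster.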

Troubleshooting the issue

At this point, we had lost access to both the vCenter Server and the ESXi Host Client. We could not reach any host over SSH either. So, this is the moment to sit down and start to cry =/

Remember, this is a vSAN cluster, and vSAN depends on the network to work correctly. Since the vSAN cluster was partitioned, all VMs were down.

All ESXi hosts are Dell servers, so each one has an out-of-band management interface (iDRAC). Through the iDRAC console, we could reach the ESXi console of each host and open the local ESXi Shell (Alt + F1), where we logged in as root and ran some commands to troubleshoot the issue.

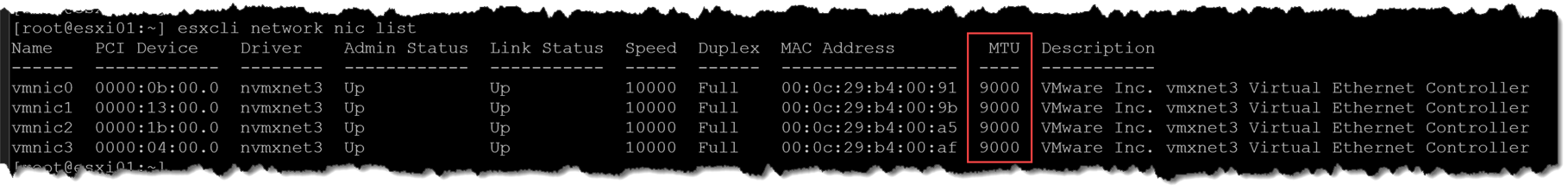

The first thing we did was check the MTU of each physical host interface:

esxcli network nic list

As we can see, each vmnic interface has an MTU of 9000 (we ran this command on each ESXi host):

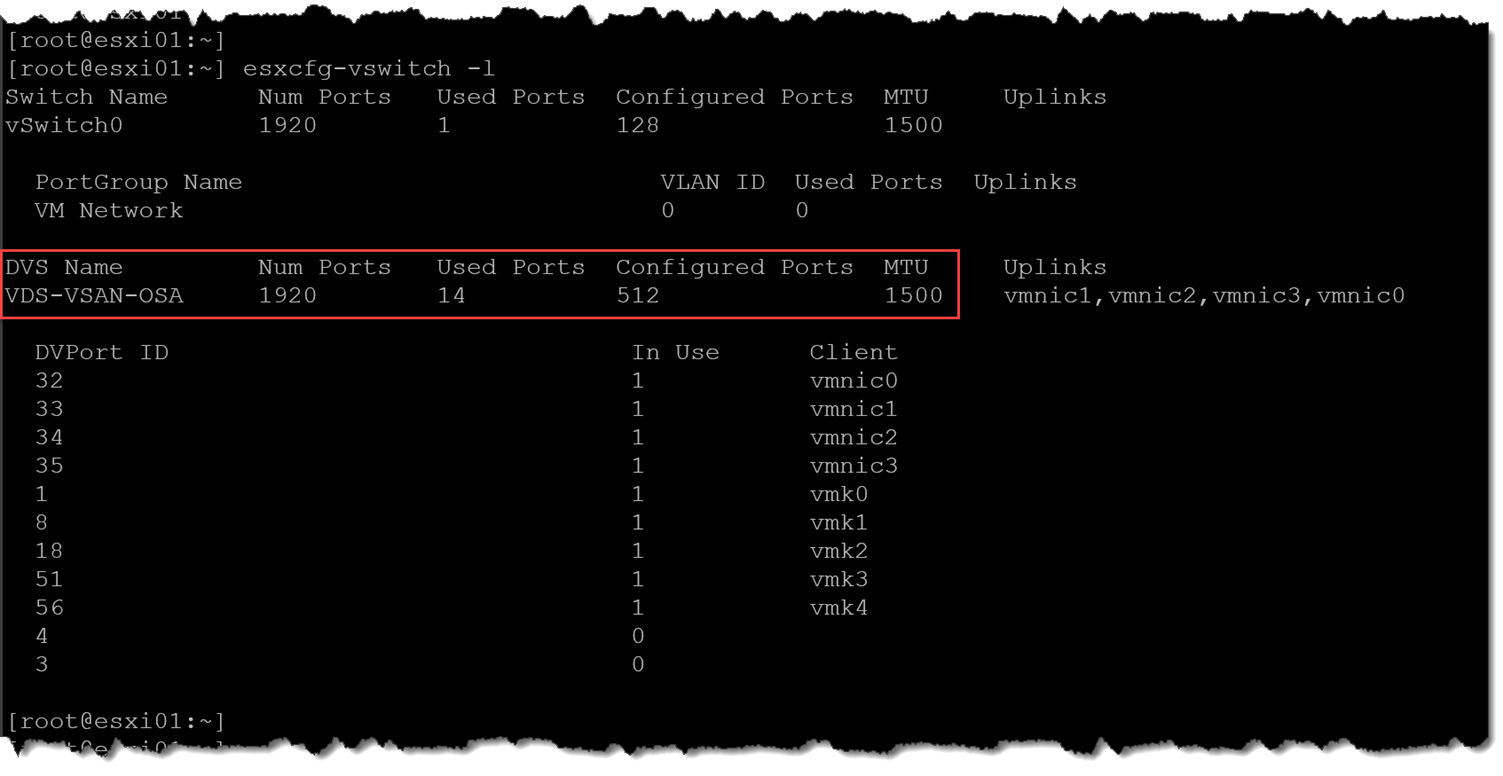

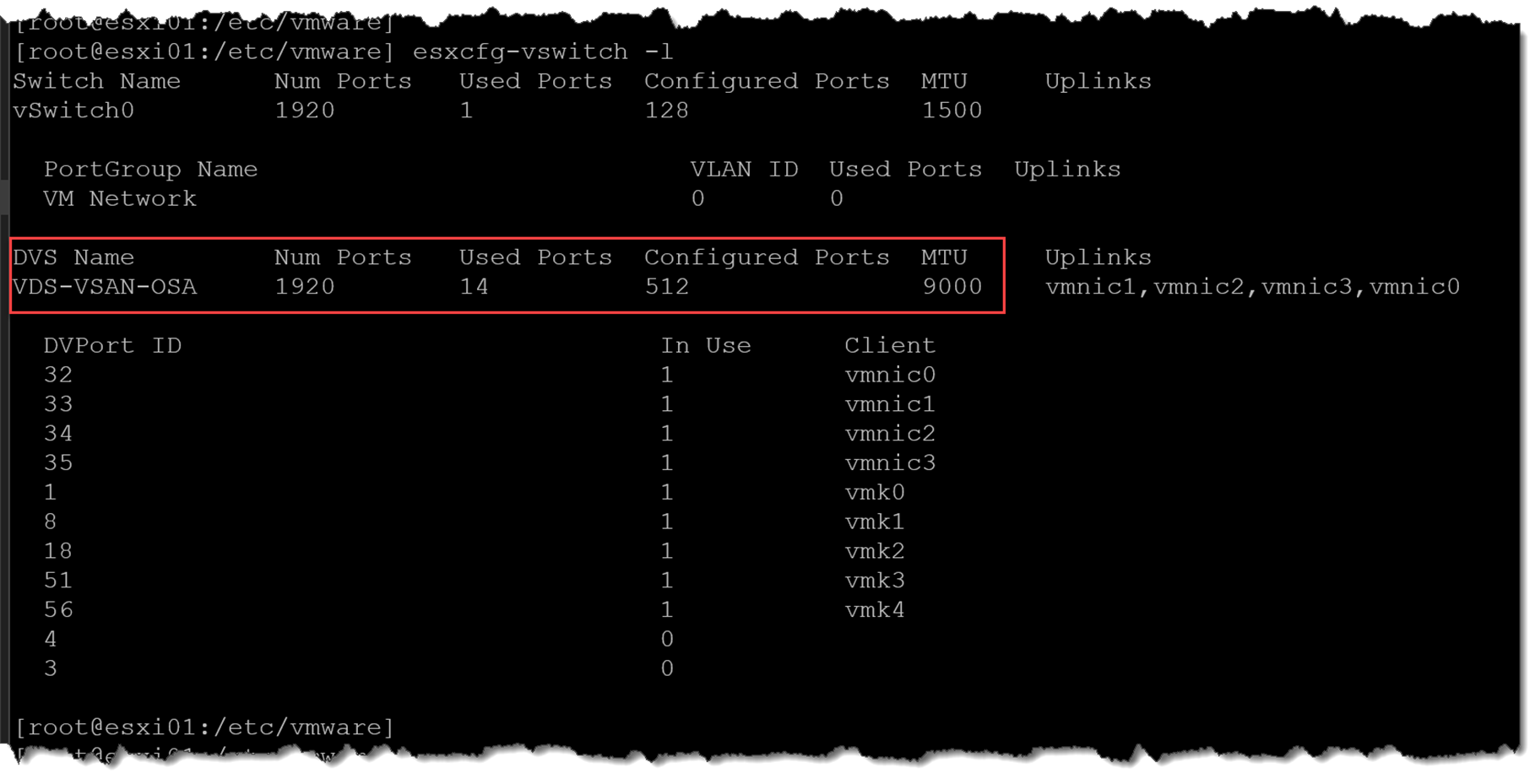

The next step was to check the MTU at the VDS level:

esxcfg-vswitch -l

As we can see, the VDS “VDS-VSAN-OSA” has an MTU of 1500:

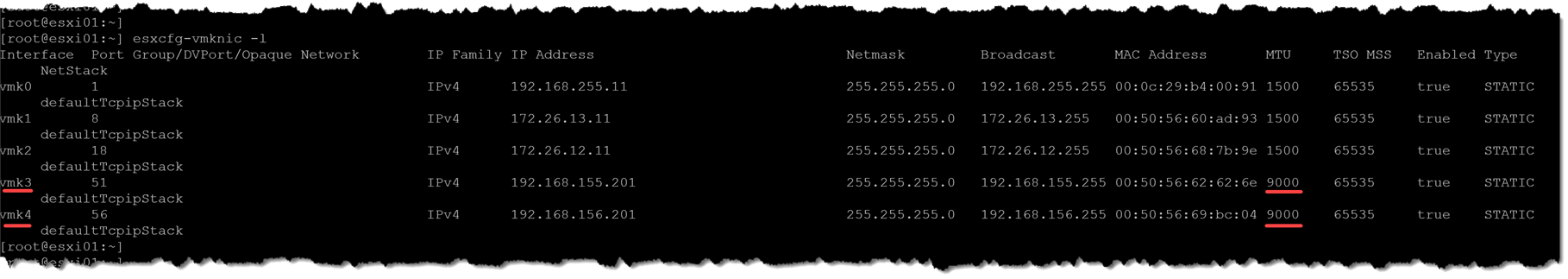

Next, we checked the MTU of each host’s VMkernel interfaces:

esxcfg-vmknic -l

As we can see, some VMkernel interfaces still had an MTU of 9000, so they could not communicate through the 1500-byte VDS.
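The three checks above boil down to one comparison per host. The Python sketch below assumes the MTU values were read off the three commands by hand (it does not parse their output), and the interface names and values are hypothetical. It also assumes an uplink whose MTU is smaller than the VDS MTU would drop oversized packets, which was not the problem in this case.

```python
from typing import Dict, List


def find_mtu_mismatches(vmnic_mtus: Dict[str, int], vds_mtu: int,
                        vmk_mtus: Dict[str, int]) -> List[str]:
    """Flag interfaces that cannot pass full-size packets through the VDS."""
    problems = []
    for nic, mtu in sorted(vmnic_mtus.items()):
        # An uplink smaller than the VDS MTU would drop oversized packets on the way out.
        if mtu < vds_mtu:
            problems.append(f"{nic}: uplink MTU {mtu} < VDS MTU {vds_mtu}")
    for vmk, mtu in sorted(vmk_mtus.items()):
        # A VMkernel port larger than the VDS MTU sends packets the VDS drops.
        if mtu > vds_mtu:
            problems.append(f"{vmk}: VMkernel MTU {mtu} > VDS MTU {vds_mtu}")
    return problems


# Hypothetical readings from one host after the change.
for line in find_mtu_mismatches(
    vmnic_mtus={"vmnic0": 9000, "vmnic1": 9000},
    vds_mtu=1500,
    vmk_mtus={"vmk0": 1500, "vmk1": 9000, "vmk2": 9000},
):
    print(line)
```

With these values, only the VMkernel interfaces are flagged; the 9000-byte vmnics are larger than the VDS MTU, which is harmless.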

Fixing the issue

Once we understood the issue, we could fix it.

We changed the MTU from 9000 to 1500 on every affected VMkernel interface with the following command:

esxcli network ip interface set --mtu=1500 --interface-name=vmkX

Where:

mtu: the new MTU value, 1500 in our case

interface-name: the VMkernel interface name, for example “vmk3”
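Because the command has to be run once per affected interface on each host, it can help to list the exact commands first. This is a small hypothetical helper, not part of the original procedure: it takes hand-entered VMkernel MTUs for one host and emits the esxcli command (long option forms `--mtu` and `--interface-name`) only for interfaces whose MTU exceeds the VDS MTU. The interface names and values are illustrative.

```python
from typing import Dict, List


def build_fix_commands(vmk_mtus: Dict[str, int], vds_mtu: int) -> List[str]:
    """Return one esxcli command per VMkernel interface whose MTU exceeds the VDS MTU."""
    return [
        f"esxcli network ip interface set --mtu={vds_mtu} --interface-name={vmk}"
        for vmk, mtu in sorted(vmk_mtus.items())
        if mtu > vds_mtu
    ]


# Hypothetical values for one host: only vmk2 and vmk3 are out of line.
for cmd in build_fix_commands({"vmk0": 1500, "vmk2": 9000, "vmk3": 9000}, 1500):
    print(cmd)
```

Reviewing the list before typing it into each host's console reduces the chance of touching an interface that was already correct.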

After that, the cluster came back up, and all VMs were accessible again.

After a lot of searching and testing in a lab environment, we found a way to change the MTU directly at the VDS level from the ESXi command line. Let’s see how to do that.

Warning: We did not find any official documentation for this, so proceed carefully. We are not responsible for any problems in your environment!

The following command shows that our VDS “VDS-VSAN-OSA” has a global MTU of 1500:

esxcfg-vswitch -l

We used the following command to change the VDS MTU from 1500 to 9000:

esxcfg-vswitch -m 9000 'VDS-VSAN-OSA'

😉

Wrapping This Up

This topic may seem simple to some VMware administrators. However, it can be confusing if you do not understand how MTU affects communication between the hosts and their interfaces. Before making any changes in your environment, check every layer first (physical switches, VDS, host interfaces, etc.) and plan the change for a maintenance window outside production hours.
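One way to make such a plan concrete is to order the changes so that no layer is ever configured to send packets larger than the next layer accepts. The common practice (our assumption here, not something tested in this incident) is: when lowering the MTU, start with the VMkernel interfaces and move outward to the physical switch; when raising it, start at the physical switch and move inward. The sketch below models the three layers discussed in this post with hypothetical values and checks that every intermediate state is safe.

```python
from typing import Dict, List

# Layers ordered from the host outward.
LAYERS = ["VMkernel", "VDS", "physical switch"]


def is_safe(mtus: Dict[str, int]) -> bool:
    """True if no layer sends packets larger than the next layer accepts."""
    return all(mtus[inner] <= mtus[outer] for inner, outer in zip(LAYERS, LAYERS[1:]))


def plan_mtu_change(current: Dict[str, int], target: int) -> List[Dict[str, int]]:
    """Return the configuration after each single-layer change, in order."""
    state = dict(current)
    steps = []
    # Lowering: start at the host and move outward.
    for layer in LAYERS:
        if state[layer] > target:
            state[layer] = target
            steps.append(dict(state))
    # Raising: start at the physical switch and move inward.
    for layer in reversed(LAYERS):
        if state[layer] < target:
            state[layer] = target
            steps.append(dict(state))
    return steps


jumbo = {"VMkernel": 9000, "VDS": 9000, "physical switch": 9000}
for step in plan_mtu_change(jumbo, 1500):
    print(step, "safe" if is_safe(step) else "UNSAFE")
```

In this incident, the VDS and physical switch were lowered while the VMkernel interfaces stayed at 9000, which is exactly the state `is_safe` rejects.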