Creating Your First Ansible Playbook is an article that introduces Ansible for complete beginners.

First and foremost: What’s Ansible?

- It’s a powerful tool for IT orchestration and automation.

- Ansible uses SSH to connect to target hosts and execute its tasks.

- The target nodes only need SSH and Python. No Ansible agent has to be installed on them, which is why Ansible is called “agentless”.

- Its architecture comprises modules, inventories, and playbooks.

How does Ansible work?

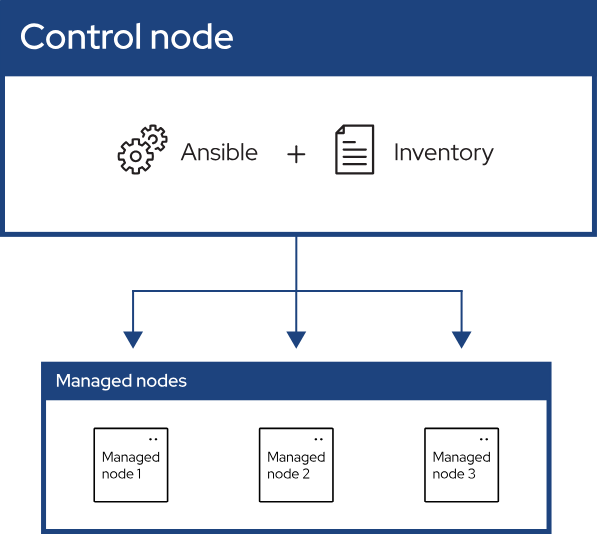

Most straightforwardly, to work with Ansible, we need:

- An Ansible control VM (it is the VM when we run Ansible).

- One or more systems managed by the Ansible control VM.

The following picture is a visual aid from the official Ansible documentation:

Ansible needs to know which systems it will manage, so we create an inventory file. This can be an “.ini” file that lists all hosts to be managed by Ansible.

Understanding some Ansible Concepts

The basic Ansible concepts are:

Control Node:

The machine from which we run the Ansible CLI tools (ansible-playbook, ansible, ansible-vault and others). We can use any computer that meets the software requirements as a control node.

Managed Nodes:

Also referred to as “hosts”, these are the target devices (servers, network devices, or any computer) we aim to manage with Ansible.

Inventory:

A list of managed nodes provided by one or more “inventory sources”. The inventory can specify information specific to each node, like the IP address. It is also used for assigning groups, which allows for node selection in the Play and bulk variable assignment.

Playbooks:

They contain Plays (which are the basic unit of Ansible execution). This is both an “execution concept” and how we describe the files on which ansible-playbook operates. Playbooks are written in YAML and are easy to read, write, share, and understand.

Plays:

The main context for Ansible execution, this playbook object maps managed nodes (hosts) to tasks. The Play contains variables, roles, and an ordered list of tasks, and can be run repeatedly. It basically consists of an implicit loop over the mapped hosts and tasks and defines how to iterate over them.
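To make the “implicit loop” more concrete, here is a minimal sketch in shell. It is only a toy model, not how Ansible is implemented: the host and task names are illustrative, nothing is executed on any machine, and it assumes Ansible's default “linear” strategy, where each task is applied to all mapped hosts before the next task starts (real Ansible also contacts several hosts in parallel).

```shell
#!/bin/sh
# Toy model only: prints the order in which a play would visit hosts and
# tasks under the default "linear" strategy. Names are illustrative.
HOSTS="hpc2-node01 hpc2-node02"
TASKS="install-nfs-utils start-nfs-client"

run_play() {
    for task in $TASKS; do          # tasks run in the order they are listed
        for host in $HOSTS; do      # each task is applied to every mapped host
            echo "TASK [$task] -> $host"
        done
    done
}

run_play
```

The output lists both hosts for the first task before moving on to the second task, which is the same ordering we see in real playbook output.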

Roles:

A limited distribution of reusable Ansible content (tasks, handlers, variables, plugins, templates and files) for use inside of a Play. To use any Role resource, the Role itself must be imported into the Play.

Tasks:

The definition of an “action” to be applied to the managed host. We can execute a single task once with an ad hoc command using ansible or ansible-console (both create a virtual Play).

Handlers:

A special form of a Task, that only executes when notified by a previous task which resulted in a “changed” status. For example, restart a specific service if its configuration was changed.
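The notify mechanism can be illustrated with a small shell sketch. This is a toy model under stated assumptions, not Ansible code: the temporary file stands in for a service configuration file, the setting “port=2049” is hypothetical, and the “restart” is just a counter.

```shell
#!/bin/sh
# Toy model of "notify": the handler runs only if a task reported a change.
# The config file and the setting are hypothetical stand-ins.
CONF="$(mktemp)"
NOTIFIED=0
RESTARTS=0
STATUS=""

# Task: make sure the config file contains the given line.
ensure_setting() {
    if grep -qxF "$1" "$CONF"; then
        STATUS="ok"                     # already in the desired state
    else
        printf '%s\n' "$1" >> "$CONF"
        STATUS="changed"
        NOTIFIED=1                      # a change notifies the handler
    fi
}

# Handler: stands in for "restart the service".
flush_handlers() {
    if [ "$NOTIFIED" -eq 1 ]; then
        RESTARTS=$((RESTARTS + 1))
        NOTIFIED=0
    fi
}

ensure_setting "port=2049"; flush_handlers   # first run: changed, handler fires
ensure_setting "port=2049"; flush_handlers   # second run: ok, handler is skipped
echo "last status: $STATUS, restarts: $RESTARTS"
rm -f "$CONF"
```

Running the “task” twice triggers the “restart” only once, because the second run finds the setting already in place.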

Modules:

The code or binaries that Ansible copies to and executes on each managed node (when needed) to accomplish the action defined in each Task. Each module has a particular use, from administering users on a specific type of database to managing VLAN interfaces on a specific type of network device.

Plugins:

Pieces of code that expand Ansible’s core capabilities. Plugins can control how you connect to a managed node (connection plugins), manipulate data (filter plugins), and even control what is displayed in the console (callback plugins).

Collections:

A format in which Ansible content is distributed that can contain playbooks, roles, modules, and plugins. You can install and use collections through Ansible Galaxy. Collection resources can be used independently and discretely from each other.

Note: In this basic article, we won’t cover all of these concepts in detail. However, it’s essential to know that they exist!

To recap, what do we need to work with Ansible?

Control Node requirements:

- Linux machine (e.g., CentOS Stream 9).

- Python – It is already installed by default on CentOS.

- SSH access to target nodes (managed hosts).

- Passwordless or password-based sudo (Ansible supports both) – we’ve written a separate article about SSH passwordless authentication.

Managed Nodes requirements:

- Only need SSH + Python (already present on CentOS)

- No agent to install — that’s a big win!

How to Install Ansible on the Control Node?

For the control node (the machine that runs Ansible), we can use nearly any UNIX-like machine with Python installed. This includes Red Hat, Debian, Ubuntu, macOS, BSDs, and Windows via the Windows Subsystem for Linux (WSL – Windows without WSL is not natively supported as a control node).

To demonstrate the installation process, we’re using CentOS Stream 9. Let’s get started:

1- Install CentOS Stream 9 (a basic install with Internet access is enough);

2- Install Ansible:

    # Enable EPEL repository
    dnf install -y epel-release

    # Install Ansible
    dnf install -y ansible

3- Check the Ansible version:

    ansible --version

Example:

    # ansible --version
    ansible [core 2.14.18]
      config file = /etc/ansible/ansible.cfg
      configured module search path = ['/root/.ansible/plugins/modules', '/usr/share/ansible/plugins/modules']
      ansible python module location = /usr/lib/python3.9/site-packages/ansible
      ansible collection location = /root/.ansible/collections:/usr/share/ansible/collections
      executable location = /usr/bin/ansible
      python version = 3.9.20 (main, Sep 9 2024, 00:00:00) [GCC 11.5.0 20240719 (Red Hat 11.5.0-2)] (/usr/bin/python3)
      jinja version = 3.1.2
      libyaml = True

At this point, we already have Ansible installed on our Control VM!

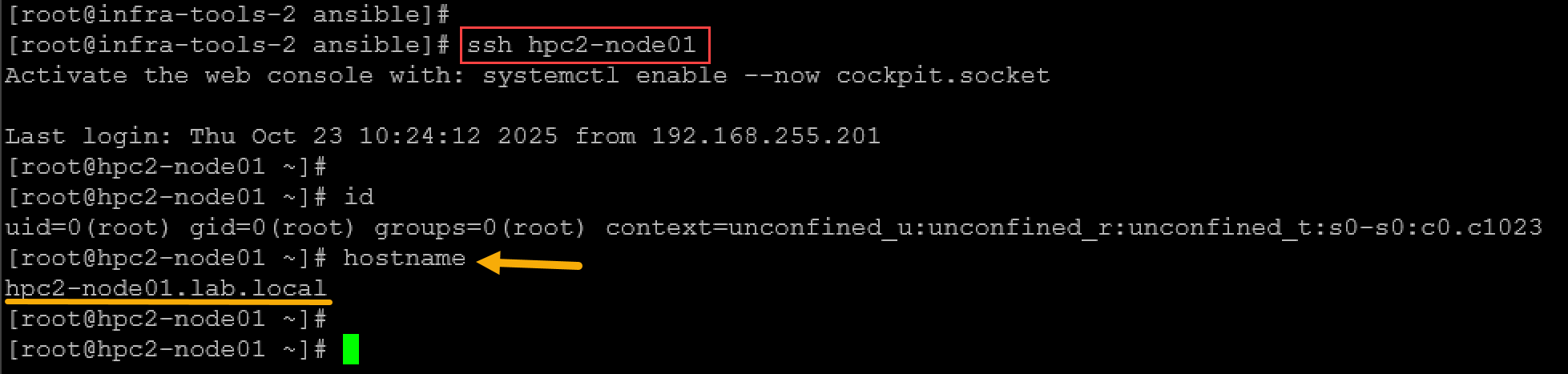

Before going forward, we’ll set up the Ansible control VM to access all managed nodes over SSH without typing a password (aka SSH passwordless authentication). This is a good practice, and here is one way to do it:

1- On the Ansible control VM, install sshpass – this package allows us to execute SSH commands on remote systems without providing the password interactively:

    dnf install -y sshpass

2- Generate the SSH key pair and copy the public SSH key to all Ansible-managed hosts. In this case, the managed hosts are “hpc2-node01” to “hpc2-node06”.

After copying the public SSH key to the managed hosts, don’t forget to remove the “SSHPASS” variable (unset SSHPASS), which is read by the “sshpass -e” command:

    ssh-keygen
    export SSHPASS='YOUR_USER_PASSWORD_HERE'
    for i in {01..06}; do sshpass -e ssh-copy-id -o StrictHostKeyChecking=no root@hpc2-node$i; done
    unset SSHPASS

3- Populate the SSH known_hosts file with information about all managed hosts:

    for i in {01..06}; do ssh-keyscan hpc2-node$i >> ~/.ssh/known_hosts; done

The aim is to access the managed hosts by SSH without providing a password, as we can see in the following picture:

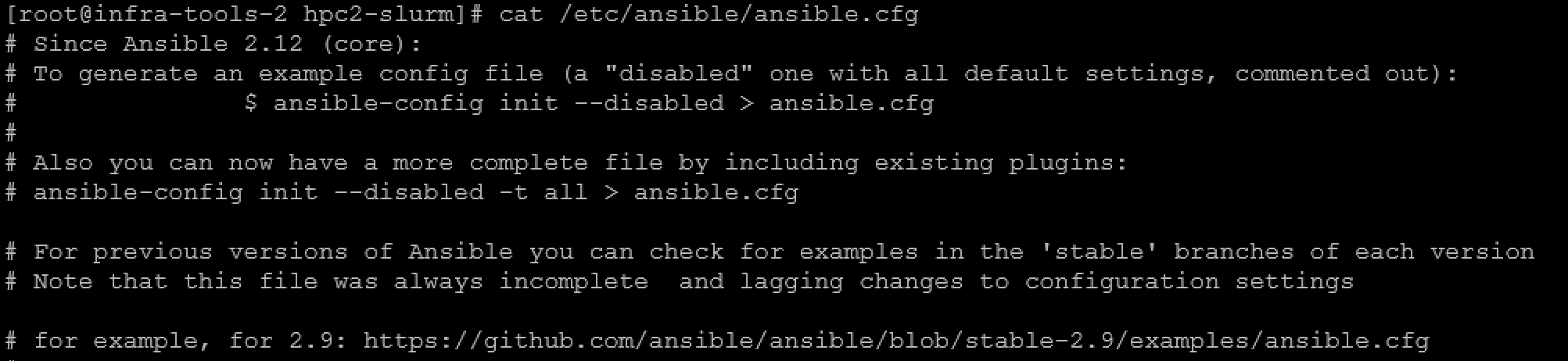
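As a quick sanity check, we can also confirm from a script that key-based login works on every node. The sketch below assumes the same host names (hpc2-node01 to hpc2-node06) and the root user used in the steps above; the function names list_nodes and check_nodes are our own. It relies on the OpenSSH option BatchMode=yes, which makes ssh fail instead of prompting for a password.

```shell
#!/bin/sh
# Sketch: list the node names used in this article and test key-based login.
# Assumes hosts hpc2-node01..06 and the root user; adjust for other setups.

list_nodes() {
    i=1
    while [ "$i" -le 6 ]; do
        printf 'hpc2-node%02d\n' "$i"   # zero-padded: hpc2-node01, hpc2-node02, ...
        i=$((i + 1))
    done
}

# BatchMode=yes disables password prompts, so a success here means the
# public key was accepted by the managed host.
check_nodes() {
    for node in $(list_nodes); do
        if ssh -o BatchMode=yes -o ConnectTimeout=5 "root@$node" true; then
            echo "$node: key login OK"
        else
            echo "$node: key login FAILED"
        fi
    done
}

list_nodes    # preview the targets; call check_nodes to test the logins
```

Calling list_nodes first is a cheap way to preview which hosts a loop will touch before running anything against them.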

Ansible Configuration File

Specific settings in Ansible are adjustable with a configuration file (ansible.cfg). The stock configuration should be sufficient for most users, but there may be reasons you would want to change it.

If installing Ansible from a package manager, the latest ansible.cfg file should be present in /etc/ansible.

If you installed Ansible using pip or from a source, you may want to create this file to override default settings in Ansible.

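In our case, since we installed Ansible using the package manager, the Ansible configuration file is /etc/ansible/ansible.cfg (the “config file” line in the “ansible --version” output shown earlier confirms this).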

Note: By default, this file has no configuration!
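Ansible does not only look in /etc/ansible: it uses the first configuration file it finds, checking the ANSIBLE_CONFIG environment variable, then ansible.cfg in the current directory, then ~/.ansible.cfg, and finally /etc/ansible/ansible.cfg. The following shell function is a simplified sketch of that search order. The name find_ansible_cfg is ours, and the real logic has extra rules (for example, an ansible.cfg in a world-writable directory is ignored), so “ansible --version” remains the authoritative way to see which file is in use.

```shell
#!/bin/sh
# Simplified sketch of the ansible.cfg search order; not Ansible's own code.
find_ansible_cfg() {
    if [ -n "${ANSIBLE_CONFIG:-}" ] && [ -f "$ANSIBLE_CONFIG" ]; then
        echo "$ANSIBLE_CONFIG"              # 1. environment variable
    elif [ -f "./ansible.cfg" ]; then
        echo "$PWD/ansible.cfg"             # 2. current directory
    elif [ -f "$HOME/.ansible.cfg" ]; then
        echo "$HOME/.ansible.cfg"           # 3. home directory
    elif [ -f "/etc/ansible/ansible.cfg" ]; then
        echo "/etc/ansible/ansible.cfg"     # 4. system-wide default
    else
        echo "none"
    fi
}

find_ansible_cfg
```

This matters in practice: an ansible.cfg placed in a project directory takes precedence over the system-wide file whenever we run Ansible from that directory.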

We can, for example, add the log file that Ansible must use to log all Playbook executions by adding the following entry:

    [defaults]
    log_path = /var/log/ansible.log

Creating the Inventory File

Ansible needs to know on what systems it will work or manage. So, we need to create an Inventory file to achieve this requirement.

Let’s create the directory to store the inventory file:

mkdir -p /root/ansible/inventoryCreate the inventory file:

touch /root/ansible/inventory/hosts.iniAnd then, add the hosts in the inventory file:

[hpc_nodes] # name of the "group of hosts"

hpc2-node01 ansible_host=192.168.255.121 # hosts declaration, one on each line

hpc2-node02 ansible_host=192.168.255.122

hpc2-node03 ansible_host=192.168.255.123

hpc2-node04 ansible_host=192.168.255.124

hpc2-node05 ansible_host=192.168.255.125

hpc2-node06 ansible_host=192.168.255.126To verify the inventory:

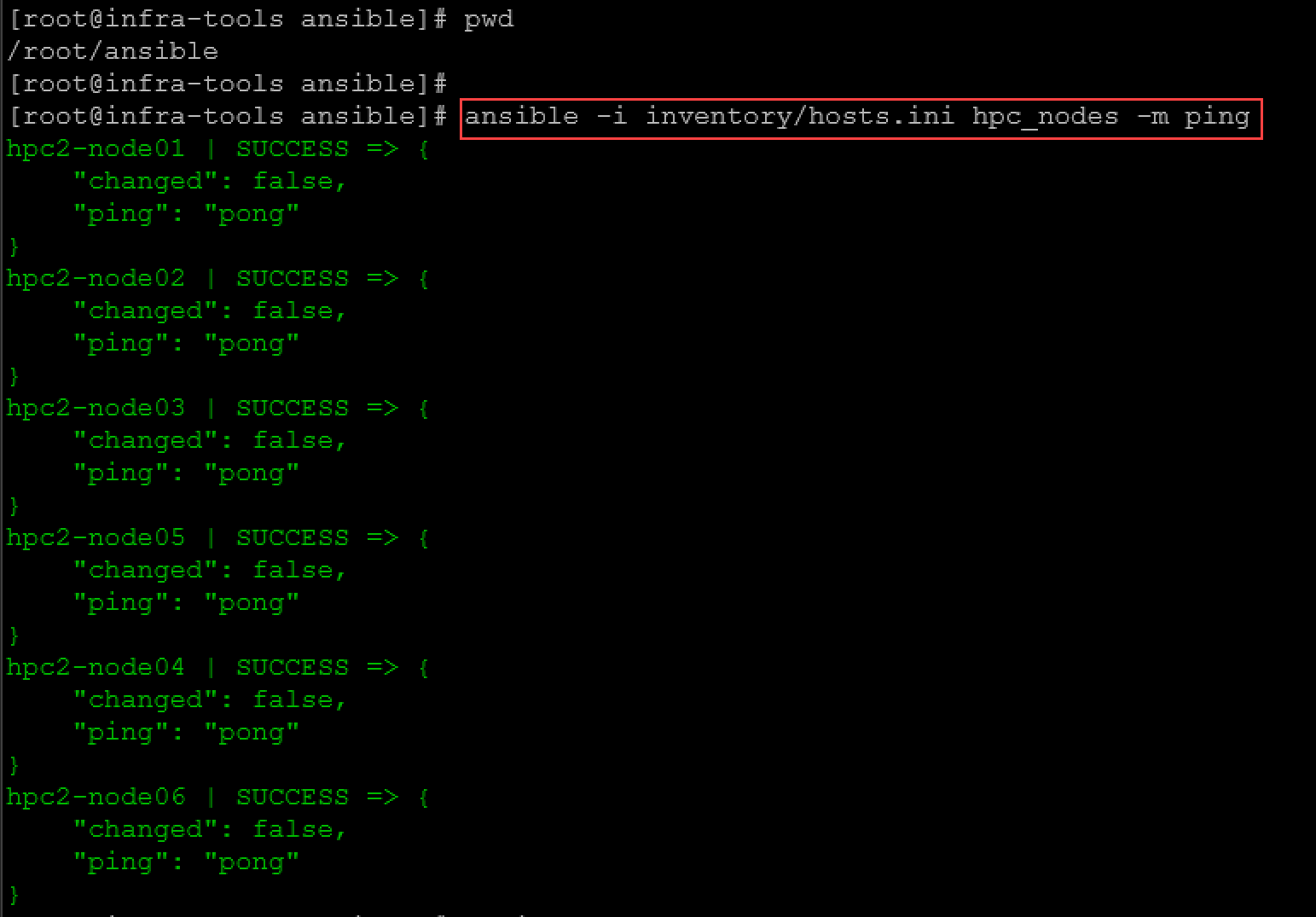

ansible-inventory -i inventory/hosts.ini --listTo test the connection to the managed hosts:

ansible -i inventory/hosts.ini hpc_nodes -m pingWhere:

-i inventory/hosts.ini: inventoryfile

hpc_nodes: name of the group of hosts inside the inventory files

-m ping: calling the module “ping”.

Note: The “ping” module does not use ICMP. Ansible opens an SSH connection to each host, checks that it can run Python there, and returns “pong” if everything works!

Creating the first Playbook

Playbooks are automation blueprints, in YAML format, that Ansible uses to deploy and configure managed nodes. A playbook is a list of plays that defines the order in which Ansible performs operations, from top to bottom, to achieve an overall goal.

So, let’s create a directory to store the playbooks:

    mkdir -p /root/ansible/playbook

Create the file “install_nfs_client.yml” under the playbook directory:

    touch /root/ansible/playbook/install_nfs_client.yml

And then, add the playbook content. Follow the indentation exactly as shown, because it is mandatory in YAML:

    ---
    - name: Install and enable NFS client on HPC nodes
      hosts: hpc_nodes        # inventory group defined in hosts.ini
      become: yes             # run the tasks with privilege escalation
      tasks:
        - name: Ensure nfs-utils is installed
          package:
            name: nfs-utils
            state: present

        - name: Ensure nfs-client.target is enabled and started
          service:
            name: nfs-client.target
            state: started
            enabled: true

In this case, the desired state is that the nfs-utils package is installed and that nfs-client.target is enabled and started on all hosts in the “hpc_nodes” group of the inventory file.

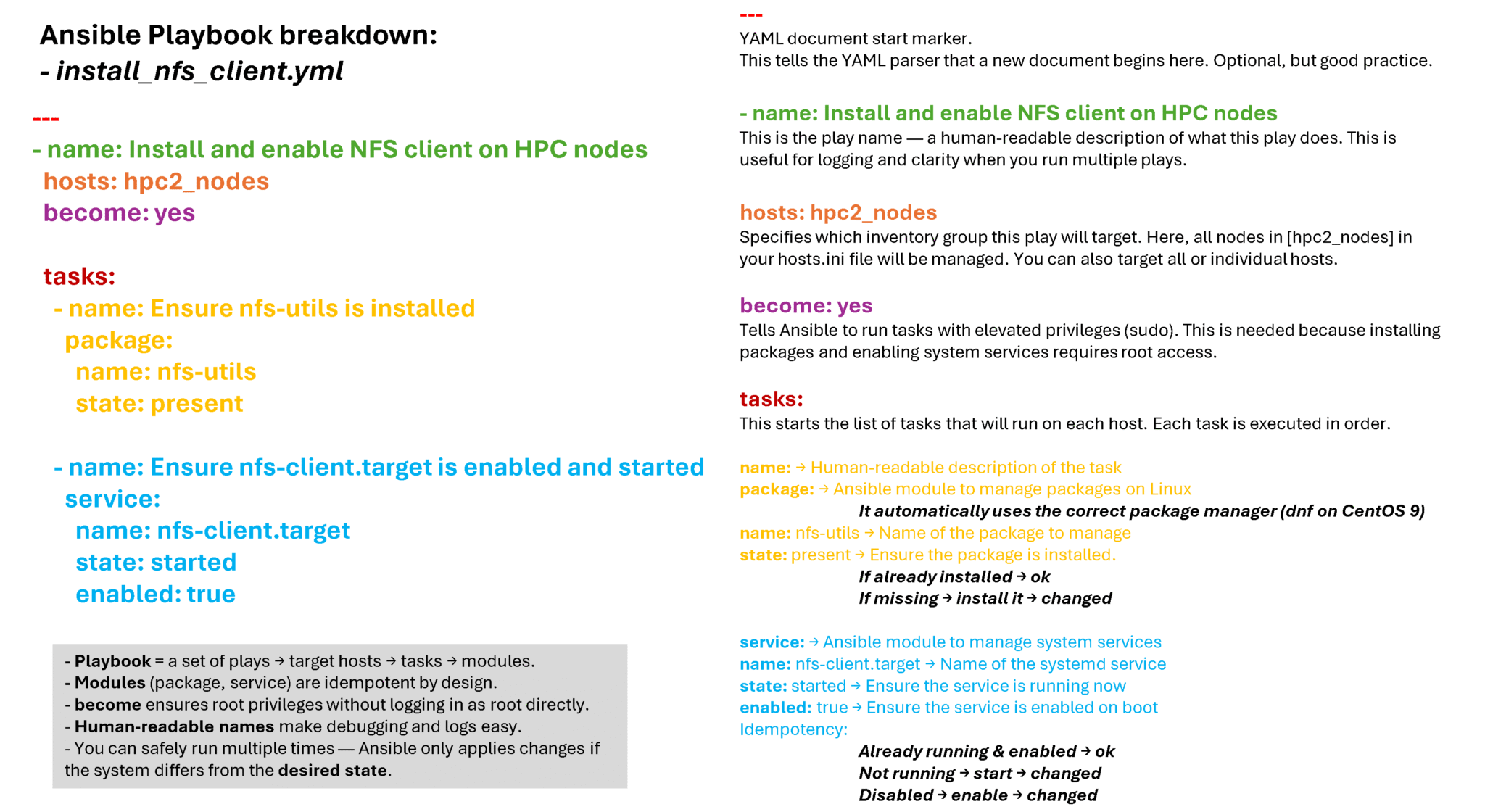

Before running the playbook, let’s break it down:

To run the playbook:

    ansible-playbook -i inventory/hosts.ini playbook/install_nfs_client.yml

Example:

    [root@infra-tools-2 ansible]# ansible-playbook -i inventory/hosts.ini playbook/install_nfs_client.yml

    PLAY [Install and enable NFS client on HPC nodes] ****************************

    TASK [Gathering Facts] *******************************************************
    ok: [hpc2-node05]
    ok: [hpc2-node02]
    ok: [hpc2-node01]
    ok: [hpc2-node03]
    ok: [hpc2-node04]
    ok: [hpc2-node06]

    TASK [Ensure nfs-utils is installed] *****************************************
    ok: [hpc2-node05]
    ok: [hpc2-node01]
    ok: [hpc2-node03]
    ok: [hpc2-node02]
    ok: [hpc2-node04]
    ok: [hpc2-node06]

    TASK [Ensure nfs-client.target is enabled and started] ***********************
    ok: [hpc2-node03]
    ok: [hpc2-node02]
    ok: [hpc2-node01]
    ok: [hpc2-node05]
    ok: [hpc2-node04]
    ok: [hpc2-node06]

    PLAY RECAP *******************************************************************
    hpc2-node01 : ok=3 changed=0 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
    hpc2-node02 : ok=3 changed=0 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
    hpc2-node03 : ok=3 changed=0 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
    hpc2-node04 : ok=3 changed=0 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
    hpc2-node05 : ok=3 changed=0 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
    hpc2-node06 : ok=3 changed=0 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

Since nfs-utils was already installed and nfs-client.target was already enabled & running, Ansible reported:

changed=0

ok=3

That means:

- It checked the state of each item.

- It found that the system already matches the desired state.

- It did not re-run or reinstall anything.

- No redundant action, no disruption: safe by design.

- The play recap summarizes the results of all tasks in the playbook per host. In this example, the play has two explicit tasks plus the implicit “Gathering Facts” task, so ok=3 indicates that all three ran successfully.

This is called idempotency:

- Ansible playbooks are meant to be run multiple times without side effects.

- We could run that same playbook 100 times a day and our cluster would stay correct: nothing would be reinstalled or restarted unless needed.

- That is the core difference between:

  - Shell scripting: “just run commands again”

  - Ansible: “declare desired state and enforce only when diverging”
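The difference is easy to see in a few lines of shell. The sketch below is only an illustration of the two styles, not something Ansible runs: the temporary file stands in for a configuration file, and the line being written is a hypothetical fstab-style entry.

```shell
#!/bin/sh
# Contrast sketch: "just run it again" vs. "enforce the desired state".
# The file and the line are hypothetical stand-ins for real configuration.
TARGET="$(mktemp)"
LINE="server:/export /mnt nfs defaults 0 0"

# Naive script style: every run appends, so reruns pile up duplicates.
append_line() {
    printf '%s\n' "$LINE" >> "$1"
}

# Desired-state style: act only when the current state differs.
ensure_line() {
    grep -qxF "$LINE" "$1" || printf '%s\n' "$LINE" >> "$1"
}

ensure_line "$TARGET"; ensure_line "$TARGET"; ensure_line "$TARGET"
echo "after 3 ensure_line runs: $(wc -l < "$TARGET" | tr -d ' ') line(s)"

: > "$TARGET"    # empty the file before the second experiment
append_line "$TARGET"; append_line "$TARGET"; append_line "$TARGET"
echo "after 3 append_line runs: $(wc -l < "$TARGET" | tr -d ' ') line(s)"

rm -f "$TARGET"
```

Three runs of ensure_line leave one line in the file, while three runs of append_line leave three. Ansible modules behave like ensure_line: they check first and change only what differs.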

Note: The “Gathering Facts” task runs implicitly. By default, Ansible gathers information about your inventory that it can use in the playbook.
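When a playbook runs against many hosts, it can be handy to pull the problem hosts out of the PLAY RECAP automatically. The following shell function is a minimal sketch that assumes the recap format shown in the example output earlier; the name recap_problems is our own. It reads ansible-playbook output on standard input and prints the hosts that report failed or unreachable counts above zero.

```shell
#!/bin/sh
# Sketch: print the hosts whose PLAY RECAP line shows failures or
# unreachable attempts. Assumes the standard "host : ok=N ..." layout.
recap_problems() {
    awk '
        /^PLAY RECAP/ { in_recap = 1; next }        # recap section starts here
        in_recap && /failed=[1-9]/      { print $1; next }
        in_recap && /unreachable=[1-9]/ { print $1 }
    '
}

# Usage (run from /root/ansible):
#   ansible-playbook -i inventory/hosts.ini playbook/install_nfs_client.yml | recap_problems
```

For the run shown in this article the function would print nothing, since every host reports failed=0 and unreachable=0.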

That’s it for now 🙂 Enjoy it!