Creating Virtual VMs Using Ansible shows an example of automating the creation of virtual machines (VMs) in a lab environment. Our lab consists of a physical server running VMware ESXi, managed through vCenter, and on top of it we’ll create all the necessary VMs.

How will it work?

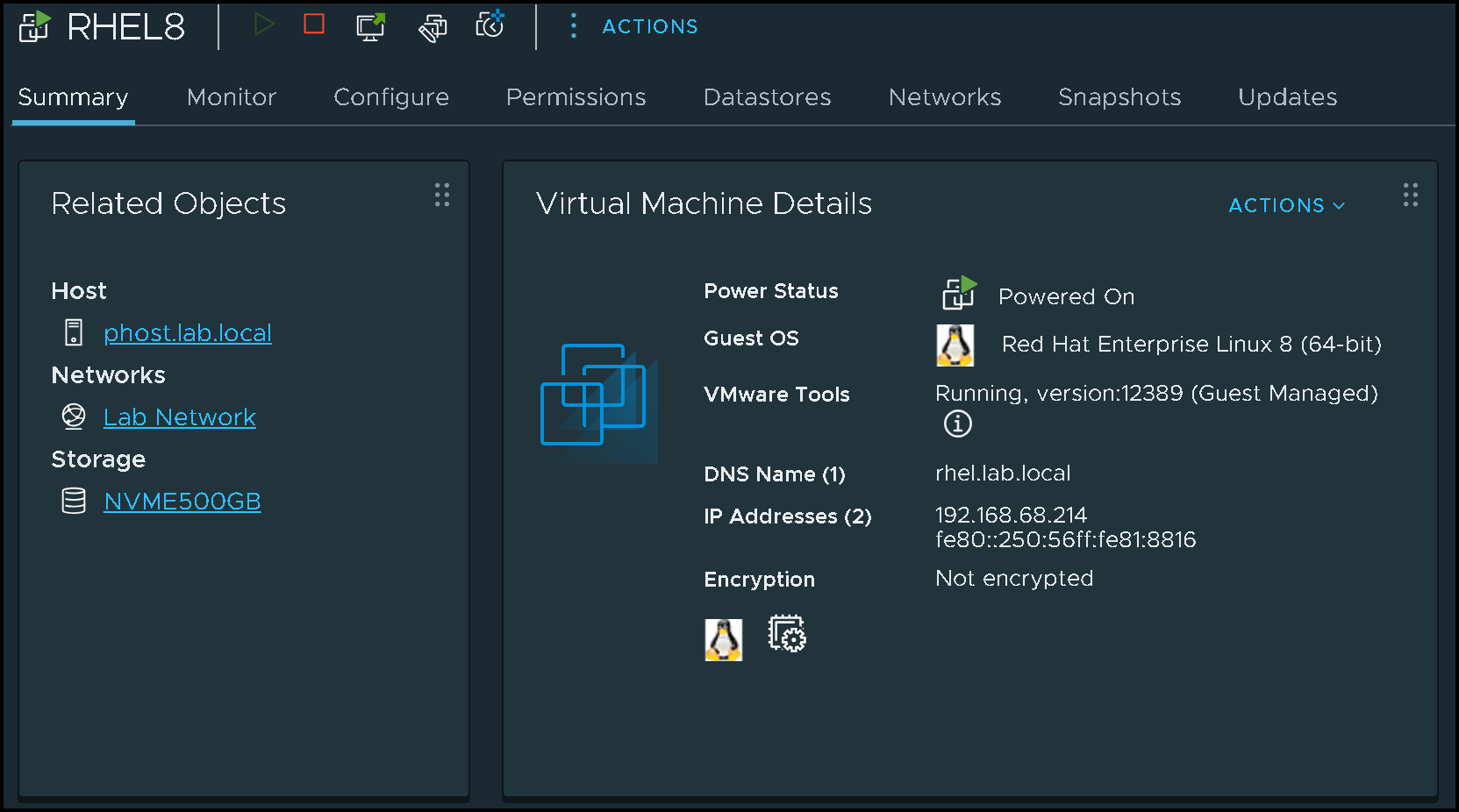

The first step is to install Red Hat Enterprise Linux 8 (RHEL 8) in a VM. We have written an article that explains, step by step, how to do it. Click here to access the article.

Note: We’re using RHEL, but you can try your preferred Linux distribution. However, all the steps presented here were tested only on RHEL, so we cannot guarantee that they will work on another distribution.

This RHEL VM is our “Template VM”. We’ll use it as a base to create the other VMs.

Make sure to install the following packages in this base VM:

dnf install -y open-vm-tools

dnf install -y cloud-init cloud-utils-growpart

Note: To install packages on RHEL, you probably need to register the system first. We have an article that explains it. Just click here to access the article!

Making Some Configurations on the Base VM

So, since our goal is to create many virtual machines from the “base” RHEL VM, we need to prep the base VM for that. To help us, we’re using the “cloud-init” package.

The cloud-init package on RHEL is a utility that automates the initial setup of a Red Hat Enterprise Linux instance during its first boot. It allows for unattended configuration of a new server by performing tasks like setting the hostname, configuring the network, installing packages, and running scripts. This makes it easier to deploy multiple RHEL instances with consistent, pre-defined configurations across different cloud platforms.

So, let’s get started and adjust some things:

1- On the base RHEL VM, create the configuration file /etc/cloud/cloud.cfg.d/10-ssh-configuration.cfg with the following content – Basically, this file will ensure we can access the cloned VMs using root with password:

ssh_pwauth: true
disable_root: false
# Keep existing SSH server configuration
ssh_deletekeys: false
ssh_genkeytypes: ['rsa', 'ecdsa', 'ed25519']
# Prevent cloud-init from disabling password login
ssh:
  ssh_pwauth: true

2- Creating the configuration file /etc/cloud/cloud.cfg.d/99-DataSource.cfg with the following content. This file ensures that VMware can inject configuration into the guest during the clone process:

datasource_list: [ VMware, NoCloud, ConfigDrive ]
datasource:
  VMware:
    metadata_ssl_verify: false

3- Edit the configuration file /etc/cloud/cloud.cfg and change the following entry:

FROM:

disable_vmware_customization: false

TO:

disable_vmware_customization: true

4- After that, we can shut down the base RHEL VM. Since we’re using ESXi, we can convert it to a VM Template and use the Template as the source for creating the VMs. This is optional, though: we can also clone the VM itself.

Creating Ansible Files on the Control Node

If you are new to Ansible like me, don’t worry about that 🙂

We’ve written an article to explain Ansible in its basics. Click here to access the article!

Basically, the Ansible Control Node is the system where we execute Ansible.

So, let’s create our project to make things happen:

1- On the Ansible Control Node, create a directory for this project:

mkdir -p /root/ansible/create_vms

2- Creating the inventory file hosts.yml with the following content. In fact, we’ll use this file to store all the variables used during the Playbook execution (you must replace all variable values with your own):

all:
  vars:
    vcenter_hostname: "vcsa01.lab.local"
    vcenter_username: "administrator@vsphere.local"
    vcenter_password: "Your_Password_Here"
    vcenter_datacenter: "DC-LOCALHOST"
    vcenter_cluster: "phost.lab.local"
    vm_template: "RHEL8"
    vm_folder: "RHEL-INFRA/HPC"
    vm_network: "Lab Network"
    vm_datastore: "NVME2TB"

Explaining each variable:

vcenter_hostname –> FQDN or IP address of the vCenter Server

vcenter_username –> vCenter Username

vcenter_password –> Password of the vCenter Username

vcenter_datacenter –> Datacenter Name

vcenter_cluster –> Cluster Name (or you can point to a Standalone ESXi host)

vm_template –> Virtual Machine Name (this is the “base” VM)

vm_folder –> Folder Name to store the cloned VMs

vm_network –> Network Name (Port Group) that will be used by the cloned VMs

vm_datastore –> Datastore Name
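Before pointing the Playbook at vCenter, it can help to confirm that hosts.yml really defines every variable listed above and that the sample password was replaced. The following is only a minimal sketch: the function name check_inventory is made up for this example, and it assumes the file keeps the simple "name: value" layout shown above.

```shell
#!/usr/bin/env bash
# check_inventory FILE
# Hypothetical helper (not part of Ansible): reports variables that are
# missing from FILE and warns if the sample password is still in place.
check_inventory() {
    local file="$1" var status=0
    for var in vcenter_hostname vcenter_username vcenter_password \
               vcenter_datacenter vcenter_cluster vm_template \
               vm_folder vm_network vm_datastore; do
        # A variable counts as defined when some line starts with "name:".
        if ! grep -Eq "^[[:space:]]*${var}:" "$file"; then
            echo "missing: ${var}"
            status=1
        fi
    done
    # The placeholder string comes from the sample inventory above.
    if grep -q 'Your_Password_Here' "$file"; then
        echo "placeholder password still present"
        status=1
    fi
    return "$status"
}
```

After loading the function into the shell, it could be run as check_inventory /root/ansible/create_vms/hosts.yml. It prints nothing and returns 0 when everything is in place.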

3- Creating the playbook file create_vms.yml with the following content:

---
- name: Deploy VMs from template
  hosts: localhost
  gather_facts: no
  collections:
    - community.vmware
  vars:
    # One entry per VM to clone: VM name and static IP address
    vm_list:
      - { name: hpchead01, ip: 192.168.255.138 }
      - { name: hpchead02, ip: 192.168.255.139 }
      - { name: hpcnfs01, ip: 192.168.255.140 }
      - { name: hpcnode01, ip: 192.168.255.141 }
      - { name: hpcnode02, ip: 192.168.255.142 }
      - { name: hpcnode03, ip: 192.168.255.143 }
      - { name: hpcnode04, ip: 192.168.255.144 }
      - { name: hpcnode05, ip: 192.168.255.145 }
      - { name: hpcnode06, ip: 192.168.255.146 }
      - { name: hpcnode07, ip: 192.168.255.147 }
      - { name: hpcnode08, ip: 192.168.255.148 }
      - { name: hpcnode09, ip: 192.168.255.149 }
      - { name: hpcnode10, ip: 192.168.255.150 }
  tasks:
    - name: Clone RHEL template
      vmware_guest:
        hostname: "{{ vcenter_hostname }}"
        username: "{{ vcenter_username }}"
        password: "{{ vcenter_password }}"
        validate_certs: no
        name: "{{ item.name }}"
        template: "{{ vm_template }}"
        datacenter: "{{ vcenter_datacenter }}"
        folder: "{{ vm_folder }}"
        cluster: "{{ vcenter_cluster }}"
        datastore: "{{ vm_datastore }}"
        # Network settings injected into the guest during customization
        networks:
          - name: "{{ vm_network }}"
            ip: "{{ item.ip }}"
            netmask: "255.255.255.0"
            gateway: "192.168.255.1"
        customization:
          hostname: "{{ item.name }}"
          domain: "lab.local"
          dns_servers:
            - "192.168.255.3"
            - "8.8.8.8"
            - "1.1.1.1"
        state: poweredon
      loop: "{{ vm_list }}"

Note: This playbook file was created to fit my lab needs. So, adjust it to fit your needs 🙂
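Typing a long vm_list by hand is error-prone, so the entries can also be generated. The sketch below is just one way to do it: gen_vm_entries is a hypothetical helper, the six-space indentation assumes the entries sit under vars: and vm_list: as in the playbook above, and it only handles consecutive addresses within a single /24 network.

```shell
#!/usr/bin/env bash
# gen_vm_entries PREFIX COUNT NETWORK FIRST_OCTET
# Hypothetical helper: prints COUNT vm_list entries named PREFIX01,
# PREFIX02, ... with consecutive addresses starting at NETWORK.FIRST_OCTET.
gen_vm_entries() {
    local prefix="$1" count="$2" network="$3" first="$4" i=0
    while [ "$i" -lt "$count" ]; do
        # %02d pads the sequence number so the names sort as hpcnode01..10.
        printf '      - { name: %s%02d, ip: %s.%d }\n' \
            "$prefix" "$((i + 1))" "$network" "$((first + i))"
        i=$((i + 1))
    done
}
```

For example, gen_vm_entries hpcnode 10 192.168.255 141 prints the ten hpcnode entries used in this lab, ready to paste into the playbook.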

4- Before executing the Playbook, install the “community.vmware” collection from Ansible Galaxy. This collection is necessary for Ansible to run the Playbook and do everything inside it (connect to vCenter, clone the VMs, inject configurations, etc.):

ansible-galaxy collection install community.vmware

4.1 – Install the Python module “pyvmomi”:

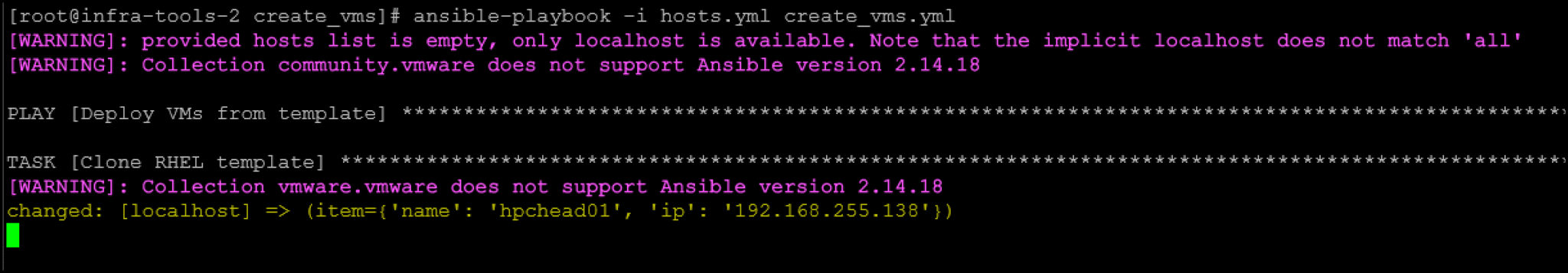

pip install --upgrade pyvmomi

5- Running the Playbook:

ansible-playbook -i hosts.yml create_vms.yml

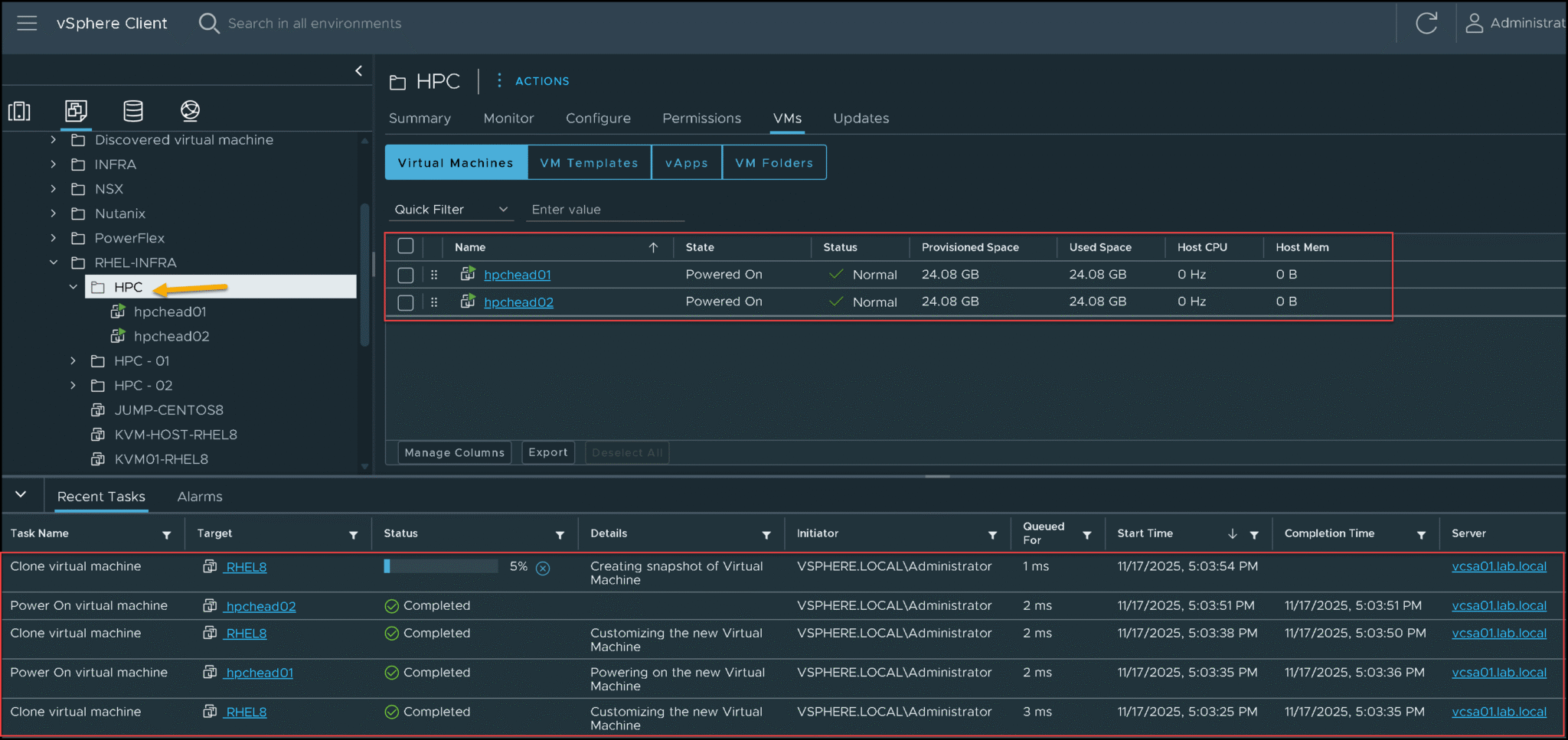

As we can see in the following picture, the VMs are being created under the “HPC” VM Folder:

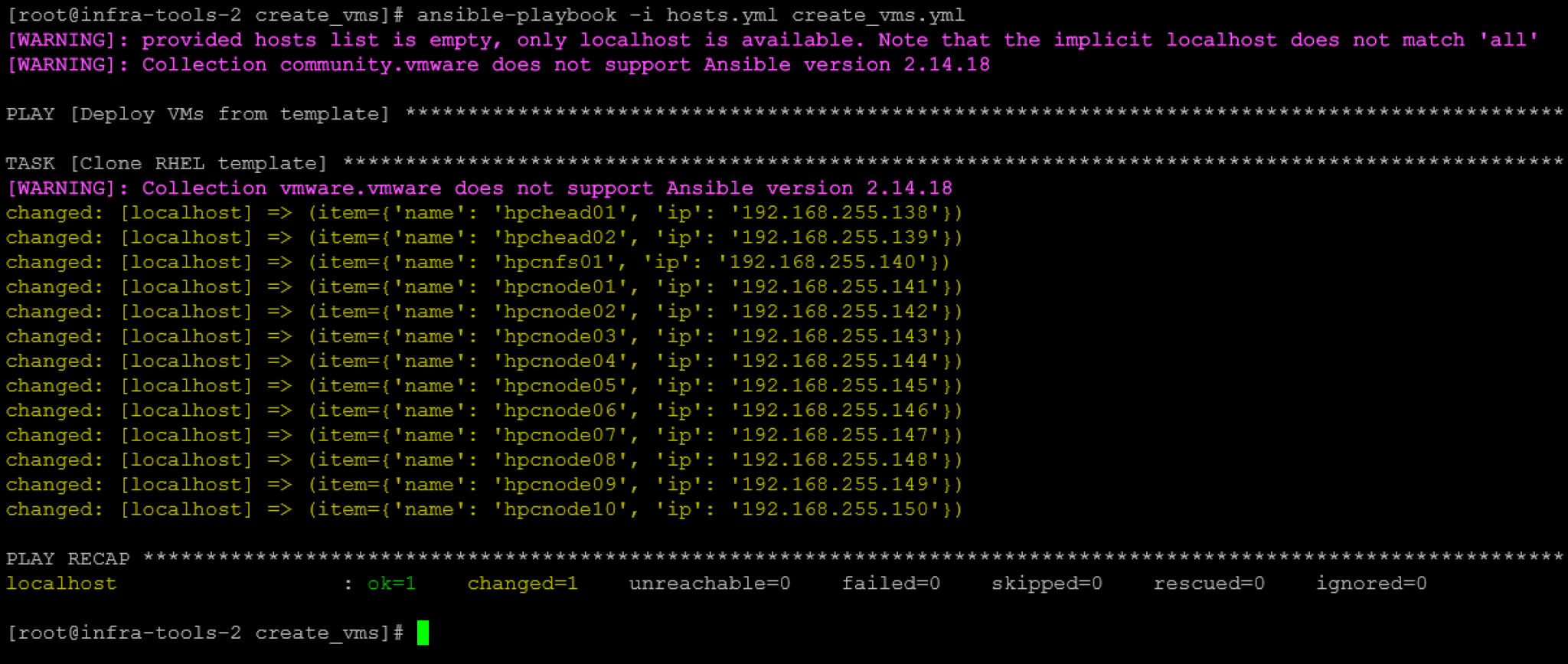

Here, the playbook executed successfully:

And all VMs were created as well:

6- On the Ansible Control Node, install the “sshpass” package and copy the SSH public key to the remote hosts. The aim is to let Ansible access each cloned VM without a password (passwordless authentication). If the Control Node does not have an SSH key pair yet, generate one first with ssh-keygen. We also have an article about that. Click here to access the article:

dnf install -y sshpass

export SSHPASS='Your_Password_Here'

for i in {01..02}; do sshpass -e ssh-copy-id -o StrictHostKeyChecking=no root@hpchead$i; done

for i in {01..10}; do sshpass -e ssh-copy-id -o StrictHostKeyChecking=no root@hpcnode$i; done

sshpass -e ssh-copy-id -o StrictHostKeyChecking=no root@hpcnfs01

unset SSHPASS

Notes:

- Since we’re using the root user to copy the SSH keys to the remote hosts, we need to store the root password in the “SSHPASS” variable.

- You need to adjust the commands to satisfy your environment details.
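The three commands above can also be folded into a single loop that reports which hosts failed. This is only a sketch under a few assumptions: the function names hpc_hosts and copy_keys are invented for this example, the hostnames are the ones from this lab, and SSHPASS must already be exported as shown above.

```shell
#!/usr/bin/env bash
# hpc_hosts: prints every lab hostname, one per line.
# The names mirror the vm_list used in this article; adjust as needed.
hpc_hosts() {
    printf 'hpchead%02d\n' 1 2
    printf 'hpcnfs01\n'
    printf 'hpcnode%02d\n' $(seq 1 10)
}

# copy_keys: runs ssh-copy-id against each host and keeps going when
# one host fails, so a single unreachable VM does not hide the others.
copy_keys() {
    local host failed=0
    for host in $(hpc_hosts); do
        if ! sshpass -e ssh-copy-id -o StrictHostKeyChecking=no "root@${host}"; then
            echo "key copy failed: ${host}" >&2
            failed=1
        fi
    done
    return "$failed"
}
```

With the functions loaded, running copy_keys replaces the three separate commands, and hpc_hosts on its own shows the list of hosts that will be contacted.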

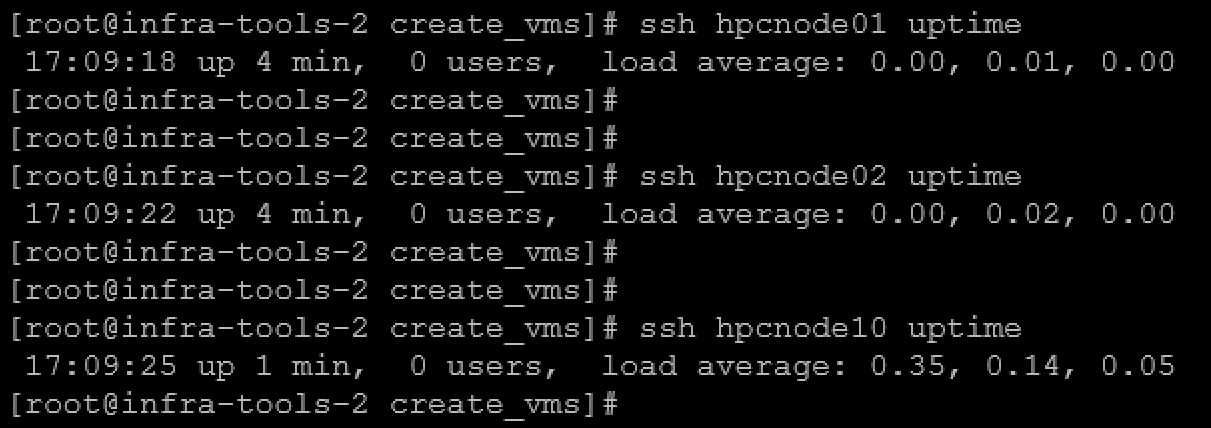

Afterward, we can test the command execution on remote hosts from Ansible without entering a password, as we can see in the following picture:

Registering RHEL VMs

So, using Ansible, we’ll register the cloned virtual machines with Red Hat. To do that, you need to have a Red Hat account, get your ORG ID, and generate an Activation Key.

1- Creating the Playbook “register_rhsm.yml” to fix cloned VM identity & register RHSM:

---
- name: Fix cloned VM identity & register RHSM
  hosts: hpc_hosts
  become: yes
  tasks:
    - name: Reset machine-id (ensure unique identity)
      command: >
        bash -c 'truncate -s 0 /etc/machine-id && systemd-machine-id-setup'

    # Note: the file module does not expand wildcards, so this path is
    # treated literally. Use the find module or a shell glob if the
    # host keys really must be removed.
    - name: Remove old SSH host keys
      file:
        path: /etc/ssh/ssh_host_*
        state: absent

    - name: Clean cloud-init state
      command: cloud-init clean --logs
      ignore_errors: yes

    - name: Reboot after cleanup
      reboot:

    - name: Unregister system (ignore if not registered)
      command: subscription-manager unregister
      ignore_errors: yes

    - name: Clean local subscription data
      command: subscription-manager clean
      ignore_errors: yes

    - name: Register system using activation key
      redhat_subscription:
        state: present
        org_id: "XXXXXXX"
        activationkey: "XXXXXXXX"

    - name: Enable BaseOS & AppStream repos
      rhsm_repository:
        name:
          - rhel-8-for-x86_64-baseos-rpms
          - rhel-8-for-x86_64-appstream-rpms
        state: enabled

Note: Replace the fields “org_id” and “activationkey” with your values!

2- Creating the inventory file “hpc_hosts.ini”:

[hpc_hosts]
hpchead01 ansible_host=192.168.255.138
hpchead02 ansible_host=192.168.255.139
hpcnfs01 ansible_host=192.168.255.140
hpcnode01 ansible_host=192.168.255.141
hpcnode02 ansible_host=192.168.255.142
hpcnode03 ansible_host=192.168.255.143
hpcnode04 ansible_host=192.168.255.144
hpcnode05 ansible_host=192.168.255.145
hpcnode06 ansible_host=192.168.255.146
hpcnode07 ansible_host=192.168.255.147
hpcnode08 ansible_host=192.168.255.148
hpcnode09 ansible_host=192.168.255.149
hpcnode10 ansible_host=192.168.255.150

3- Executing the Playbook:

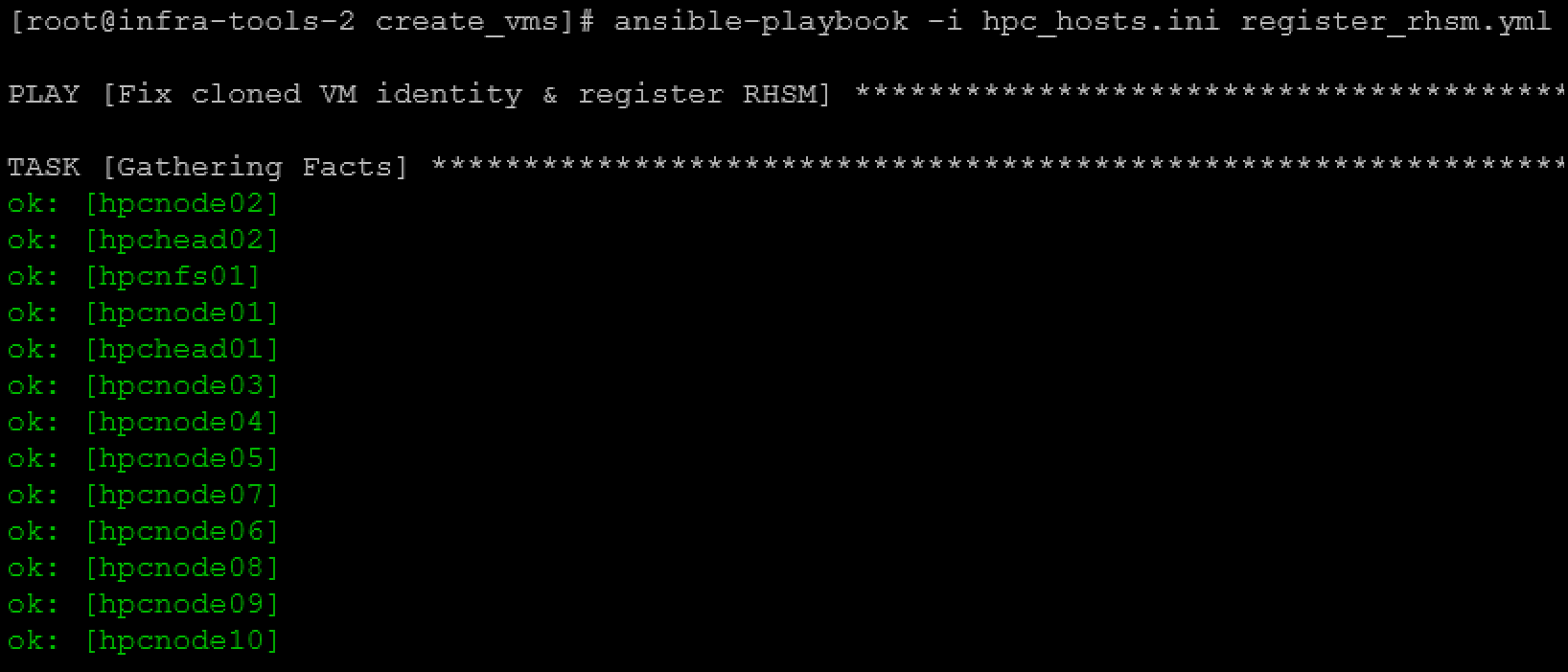

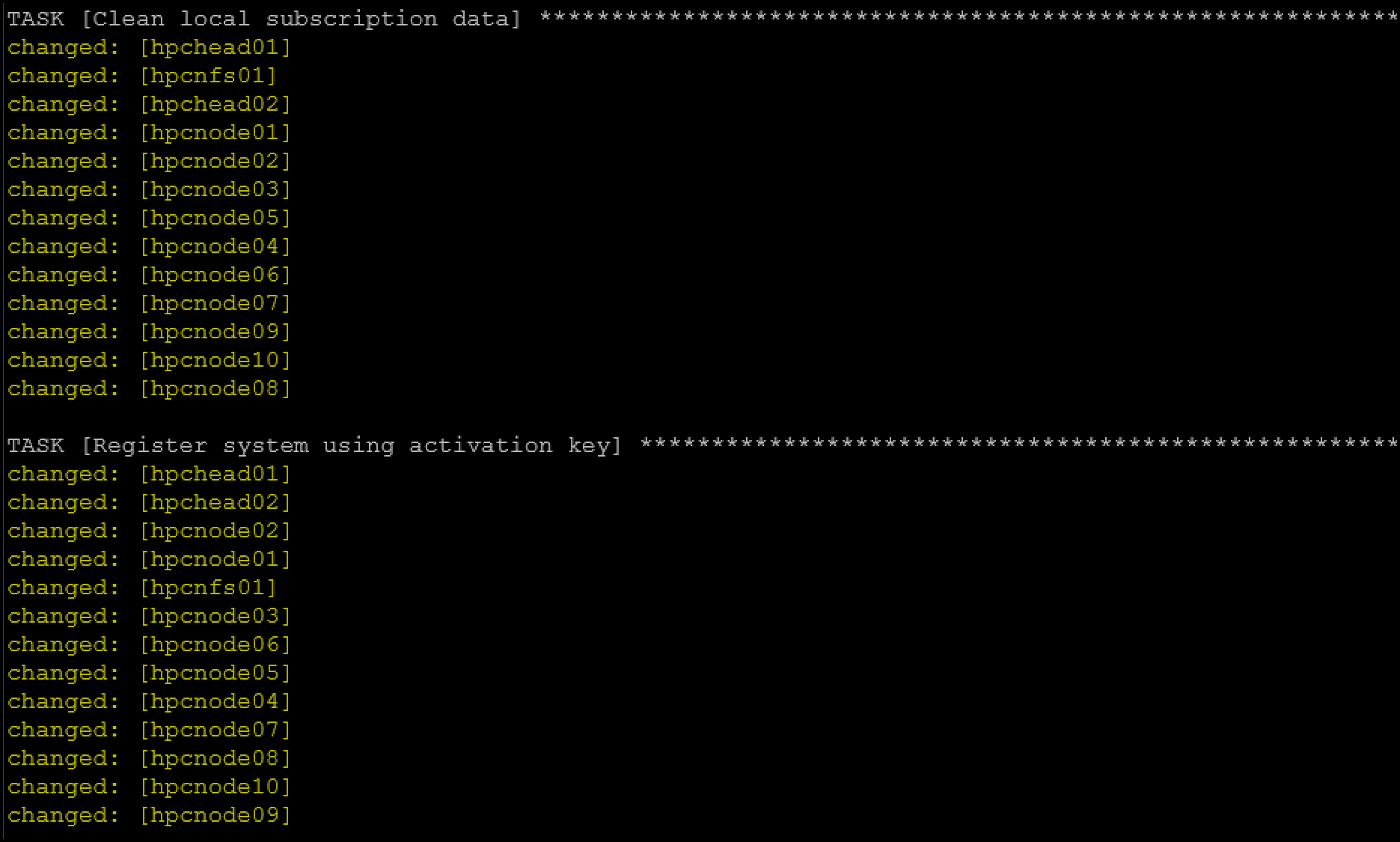

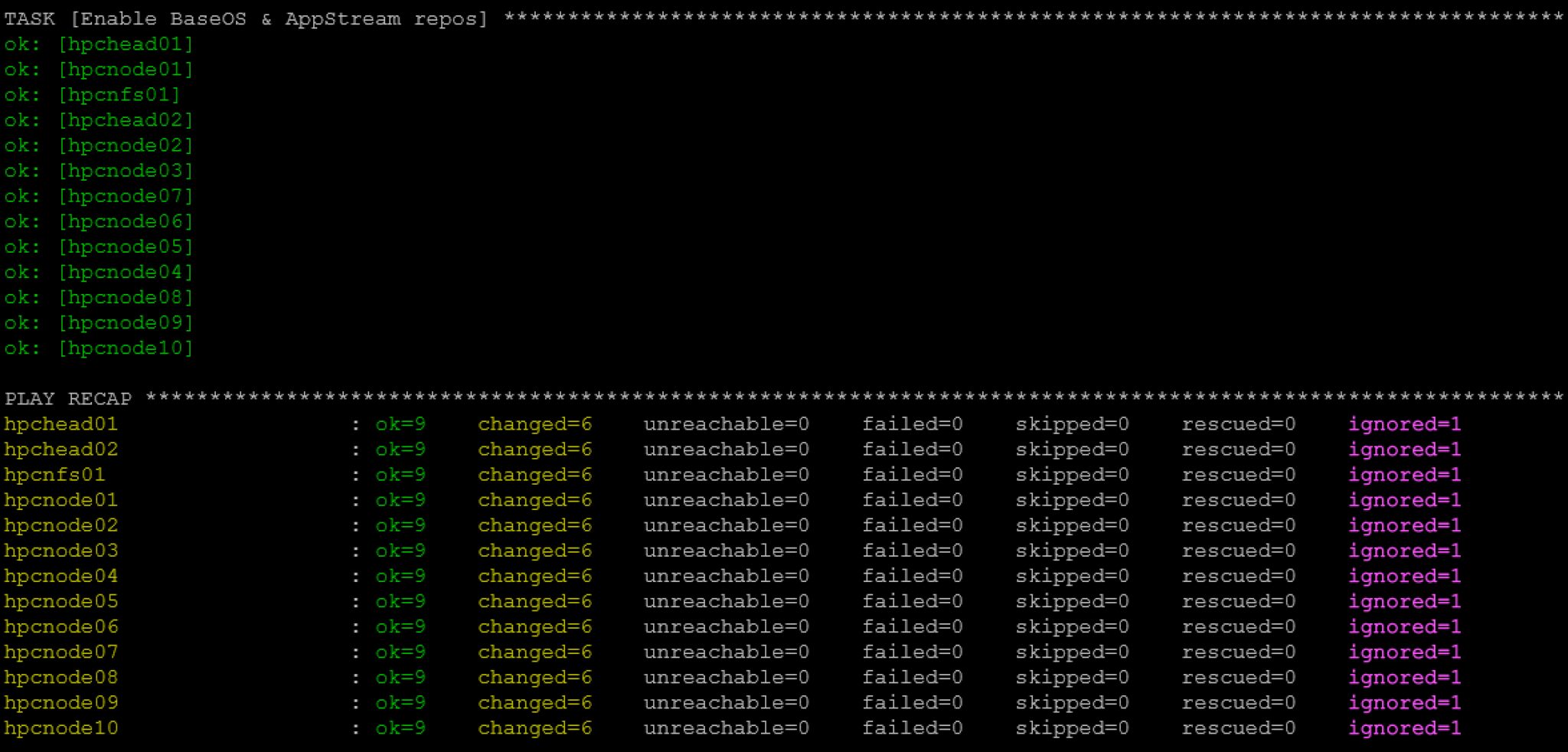

ansible-playbook -i hpc_hosts.ini register_rhsm.yml
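The hpc_hosts.ini inventory repeats the names and addresses already listed in the vm_list of create_vms.yml, so the two can drift apart. One way to avoid that is to derive the inventory from the playbook. The sketch below assumes each vm_list entry is written on one line in the "- { name: X, ip: Y }" form used in this article; vm_list_to_inventory is a hypothetical name.

```shell
#!/usr/bin/env bash
# vm_list_to_inventory PLAYBOOK
# Hypothetical helper: reads "- { name: X, ip: Y }" entries from PLAYBOOK
# and prints an INI inventory with a single [hpc_hosts] group.
vm_list_to_inventory() {
    echo '[hpc_hosts]'
    # Capture the name (up to the comma) and the IP (up to a space or
    # closing brace); lines that do not match are not printed.
    sed -n 's/^[[:space:]]*-[[:space:]]*{[[:space:]]*name:[[:space:]]*\([^,[:space:]]*\),[[:space:]]*ip:[[:space:]]*\([^[:space:]}]*\)[[:space:]]*}.*$/\1 ansible_host=\2/p' "$1"
}
```

For example, vm_list_to_inventory create_vms.yml > hpc_hosts.ini regenerates the inventory whenever the VM list changes.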

Configuring Chronyd on All VMs

Chrony is an implementation of the Network Time Protocol (NTP). Many Linux distributions have it installed by default.

Accurate timekeeping is one of the most critical configurations required for reliable communication between network-based computers. Computers can utilize the Network Time Protocol (NTP) to synchronize their time to an upstream time server or a pool of servers to maintain accurate time.

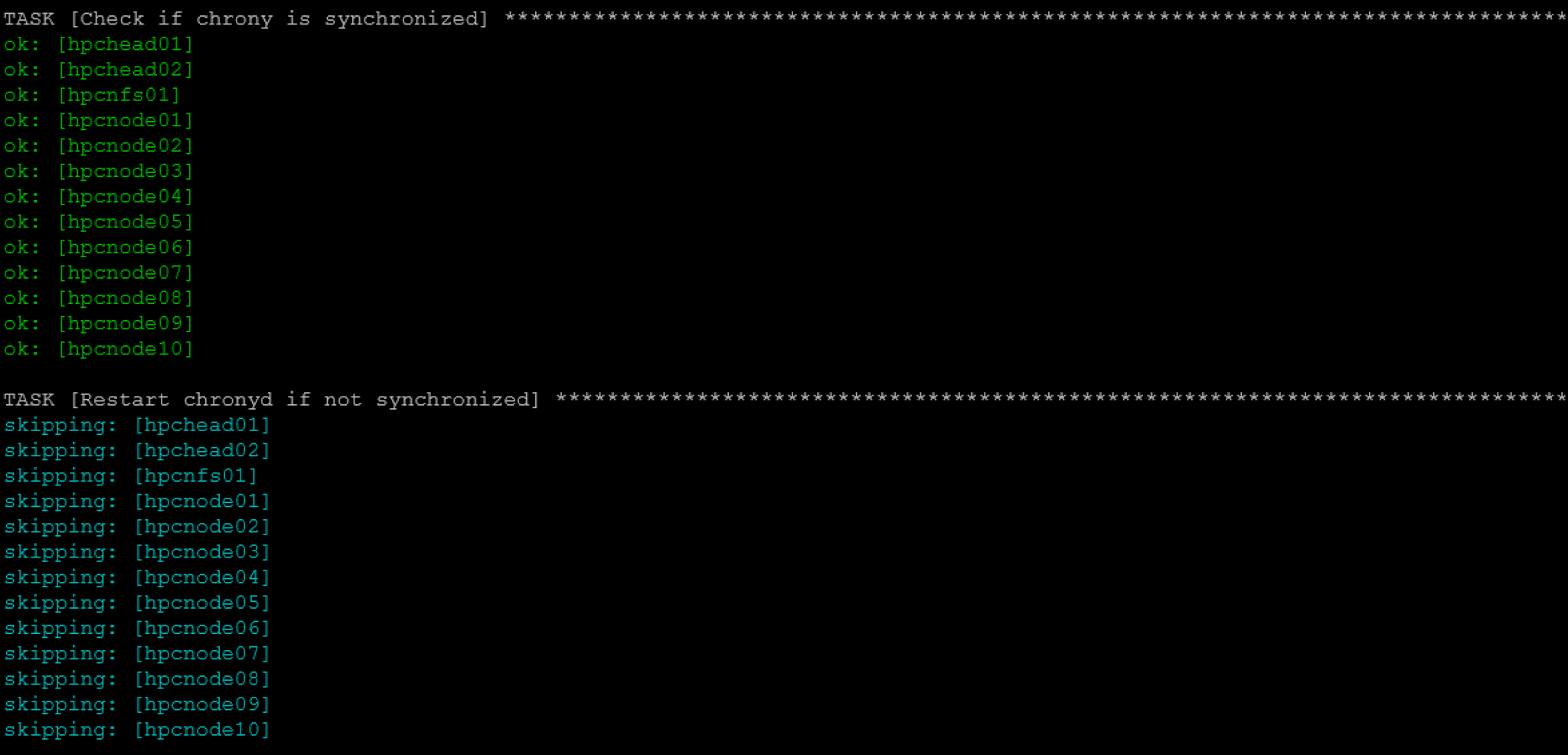

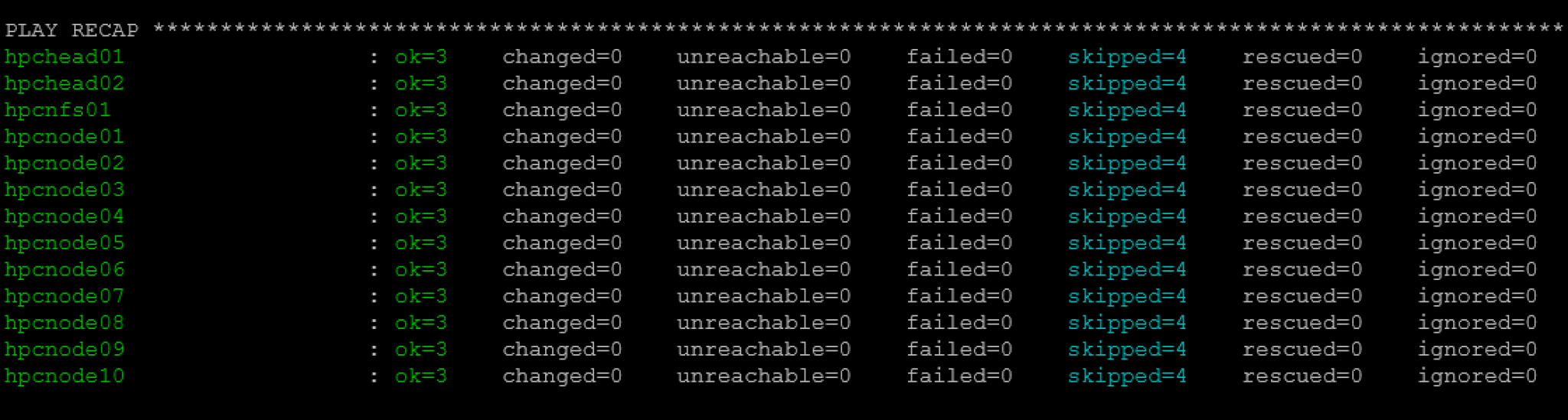

So, using Ansible, we’ll ensure the NTP configuration is consistent across all cloned VMs:

1- Creating a Playbook “config_chrony.yml” to ensure chronyd is synced:

---
- name: Deploy chrony configuration and validate (auto-fix)
  hosts: all
  become: yes
  tasks:
    - name: Deploy chrony.conf from template
      template:
        src: ../templates/chrony.conf.j2
        dest: /etc/chrony.conf
        owner: root
        group: root
        mode: '0644'
      notify: Restart chronyd

    # A source line starting with "^*" is the one chronyd is synced to
    - name: Check if chrony is synchronized
      shell: chronyc sources | grep '\^\*'
      register: chrony_sync
      failed_when: false # don't fail here
      changed_when: false

    - name: Restart chronyd if not synchronized
      service:
        name: chronyd
        state: restarted
      when: chrony_sync.rc != 0

    - name: Wait and re-check synchronization after fix
      shell: chronyc sources | grep '\^\*'
      register: chrony_sync_after
      when: chrony_sync.rc != 0
      failed_when: false
      changed_when: false

    - name: Configure chronyd to start at boot
      service:
        name: chronyd
        enabled: true

    - name: Final validation - fail if still not synced
      fail:
        msg: "Chrony is still NOT synchronized after auto-fix"
      when: (chrony_sync_after is defined) and ((chrony_sync_after.rc | default(0)) != 0)

    # Runs when the first check passed (re-check skipped, rc defaults to 0)
    # or when the re-check succeeded
    - name: Success message
      debug:
        msg: "Chrony synchronized successfully"
      when: (chrony_sync_after.rc | default(0)) == 0

  handlers:
    - name: Restart chronyd
      service:
        name: chronyd
        state: restarted

2- Creating the templates directory and the template file (the template is used to generate the /etc/chrony.conf file dynamically):

mkdir -p /root/ansible/create_vms/templates

vi /root/ansible/create_vms/templates/chrony.conf.j2

#+++++++++++++++++++++
# Managed by Ansible #
#+++++++++++++++++++++
{#
Template for chrony.conf
Variables:
  chrony_pool - NTP pool or server
  chrony_driftfile - path to driftfile
  chrony_makestep - makestep value
  chrony_keyfile - path to keyfile
  chrony_leapsectz - leap second timezone
  chrony_logdir - log directory
#}
pool {{ chrony_pool }} iburst
driftfile {{ chrony_driftfile }}
makestep {{ chrony_makestep }}
rtcsync
keyfile {{ chrony_keyfile }}
leapsectz {{ chrony_leapsectz }}
logdir {{ chrony_logdir }}

3- Creating the group_vars directory and the “all.yml” variables file:

mkdir -p /root/ansible/create_vms/group_vars

vi /root/ansible/create_vms/group_vars/all.yml

chrony_pool: "192.168.255.3"
chrony_driftfile: "/var/lib/chrony/drift"
chrony_makestep: "1.0 3"
chrony_keyfile: "/etc/chrony.keys"
chrony_leapsectz: "right/UTC"
chrony_logdir: "/var/log/chrony"

Note: You must adjust the “all.yml” file content to fit your needs. Basically, you need to replace the IP “192.168.255.3” with the IP of your NTP server!

4- Executing the Playbook:

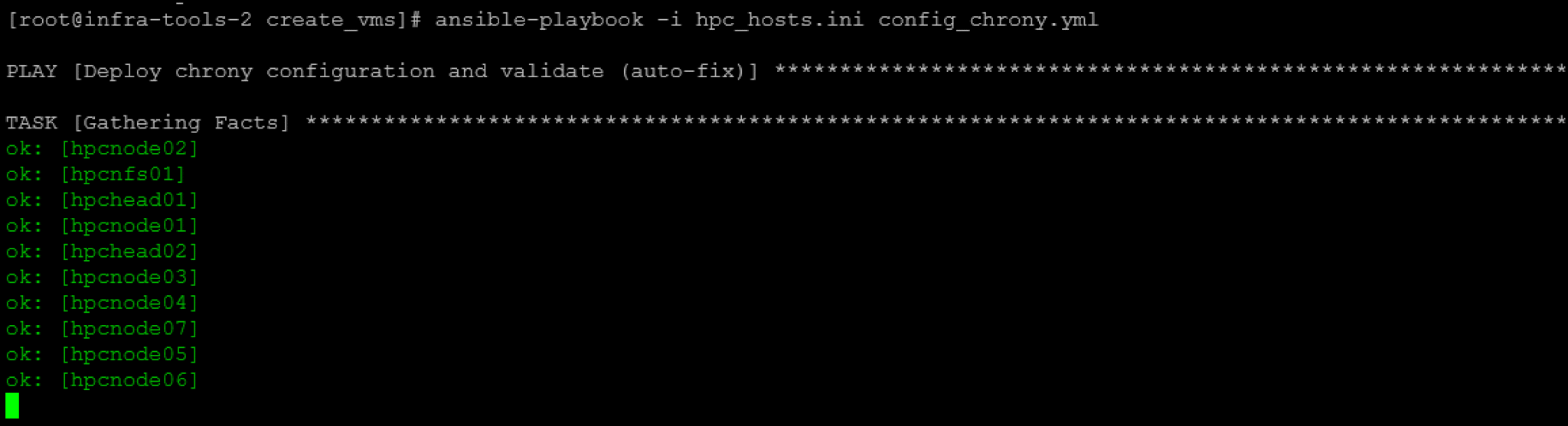

ansible-playbook -i hpc_hosts.ini config_chrony.yml
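The Playbook decides whether a host is synchronized by looking for a source line that starts with "^*" in the output of chronyc sources. The same check can be done by hand on any VM. A minimal sketch follows; the function name chrony_synced is made up for this example, and it anchors the pattern at the start of the line, which is slightly stricter than the grep used in the Playbook.

```shell
#!/usr/bin/env bash
# chrony_synced: reads `chronyc sources` output on stdin and succeeds
# when a source line starts with "^*", the marker for the source that
# chronyd is currently synchronized to.
chrony_synced() {
    grep -q '^\^\*'
}
```

On a cloned VM, it could be used as: chronyc sources | chrony_synced && echo "synced". A short while after a restart, chronyd may not have selected a source yet, so a failed check right after the Playbook runs is worth repeating before investigating further.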

That’s it for now 🙂